Edge and Corner Detection in Unorganized Point Clouds for Robotic

Pick and Place Applications

Mohit Vohra

1

, Ravi Prakash

1

and Laxmidhar Behera

1,2

1

Department of Electrical Engineering, IIT Kanpur, India

2

TCS Innovation Labs, Noida, India

Keywords:

Edge Extraction, Unorganized Point Cloud, Autonomous Grasping.

Abstract:

In this paper, we propose a novel edge and corner detection algorithm for an unorganized point cloud. Our edge

detection method classifies a query point as an edge point by evaluating the distribution of local neighboring

points around the query point. The proposed technique has been tested on generic items such as dragons,

bunnies, and coffee cups from the Stanford 3D scanning repository. The proposed technique can be directly

applied to real and unprocessed point cloud data of random clutter of objects. To demonstrate the proposed

technique’s efficacy, we compare it to the other solutions for 3D edge extractions in an unorganized point

cloud data. We observed that the proposed method could handle the raw and noisy data with little variations

in parameters compared to other methods. We also extend the algorithm to estimate the 6D pose of known

objects in the presence of dense clutter while handling multiple instances of the object. The overall approach

is tested for a warehouse application, where an actual UR5 robot manipulator is used for robotic pick and place

operations in an autonomous mode.

1 INTRODUCTION

Over the past decade, the construction industry has

struggled to improve its productivity, while the manu-

facturing industry has experienced a dramatic produc-

tivity increase (Changali et al., 2015) (Vohra et al.,

2019) (Pharswan et al., 2019). A possible reason

is the lack of advanced automation in construction

(Asadi and Han, 2018). However, recently various in-

dustries have shown their interest in construction au-

tomation due to the following benefits, i.e., the con-

struction work will be continuous, and as a result, the

construction period will decrease, which will provide

tremendous economic benefits. Additionally, con-

struction automation improves worker’s safety and

enhances the quality of work. The most crucial part of

the construction is to build a wall and to develop a sys-

tem for such a task; the system should be able to es-

timate the brick pose in the clutter of bricks, grasp it,

and place it in a given pattern. Recently, a New York-

based company, namely "Construction Robotics", has

developed a bricklaying robot called SAM100 (semi-

automated mason) (Parkes, 2019), which makes a

wall six times faster than a human. The SAM100 re-

quires a systematic stack of bricks at regular intervals,

making this system semi-autonomous, as the name

suggests.

Similarly, in the warehouse industry, the task of

unloading goods from trucks or containers is crucial.

With the development of technology, various solu-

tions have been proposed to incorporate automation

in unloading goods (Doliotis et al., 2016) (Stoyanov

et al., 2016). One of the main challenges in the au-

tomation of the above work is that the system has to

deal with a stack of objects, which can come in ran-

dom configurations, as shown in Fig. 1. For devel-

oping a system for such a task, the system must esti-

mate the pose of the cartons in a clutter, grasp it, and

arrange it in the appropriate stack for further process-

ing.

In this paper, we focus on estimating the pose of

the objects (carton or brick) in clutter, as shown in

Fig. 1. We assume that all the objects present in the

clutter have the same dimensions, which is very com-

mon in warehouse industries (clutter of cartons all

having the same dimensions) and construction sites

(clutter of bricks). Traditionally, Object pose is es-

timated by matching the 2D object features (Lowe,

2004) (Collet et al., 2011) or 3D object features (Drost

et al., 2010) (Hinterstoisser et al., 2016) between ob-

ject model points and view points. Recently, CNN

based solutions have shown excellent results for 6D

Vohra, M., Prakash, R. and Behera, L.

Edge and Corner Detection in Unorganized Point Clouds for Robotic Pick and Place Applications.

DOI: 10.5220/0010501202450253

In Proceedings of the 18th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2021), pages 245-253

ISBN: 978-989-758-522-7

Copyright

c

2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

245

Figure 1: Cartons Clutter (Image Courtesy: Internet).

pose estimation, which exempts us from using hand-

crafted features. For example, (Xiang et al., 2017)

(Tekin et al., 2018) can estimate the pose of the ob-

jects directly from raw images. Similarly, (Qi et al.,

2017)(Zhou and Tuzel, 2018) can directly process the

raw point cloud data and predict the pose of the ob-

jects. While all the above methods have shown excel-

lent results, they cannot be used directly for our work.

• Since the objects are textureless. Therefore the

number of features will be less, making the feature

matching methods less reliable. Furthermore, due

to the presence of multiple instances of the object,

it can be challenging to select features that corre-

spond to a single object.

• The performance of CNN-based algorithms relies

on extensive training on large datasets. However,

due to the large variety of objects in the warehouse

industry (various sizes and colors), it is challeng-

ing to train CNN for all types of objects.

Therefore, we aim to develop a method that re-

quires fewer features and can easily be deployed for

objects with different dimensions.

The main idea of our approach is that if we can

determine at least three corner points of the cartons,

then this information is sufficient to estimate its pose.

So the first step is to extract sharp features like edges

and boundaries in point cloud data. In the case of

edge detection in unstructured 3D point clouds, tradi-

tional methods reconstruct a surface to create a mesh

(Kazhdan and Hoppe, 2013) or a graph (Demarsin

et al., 2007) to analyze the neighborhood of each

point. However, reconstruction tends to wash away

sharp edges, and graph-based methods are computa-

tionally expensive. In (Bazazian et al., 2015), the au-

thor performs fast computation of edges by construct-

ing covariance matrices from local neighbors, but the

author has demonstrated for synthetic data. In (Ni

et al., 2016), the authors locate edge points by fit-

ting planes to local neighborhood points. Further a

discriminative learning based approach (Hackel et al.,

2016) is applied to unstructured point clouds. The au-

thors train a random forest-based binary classifier on

a set of features that learn to classify points into edge

vs. non-edge points. One drawback of their method

is poor performance on unseen data.

In this paper, we present a simple method to ex-

tract edges in real unstructured point cloud data. In

this approach, we assign a score to each point based

on its local neighborhood distribution. Based on the

score, we classify a point as an edge (or boundary)

point or a non-edge point. From the edge points, we

find the line equation, the length of the 3D edges, and

the corner points, which are intersections of two or

more edges. For each corner point, the correspond-

ing points in the local object frame are calculated to

estimate the object’s 6D pose. Our approach’s main

advantage is that it can be easily applied to other ob-

jects with known dimensions.

The remainder of this paper is organised as fol-

lows. Proposed 3D edge extraction is presented in

Section II. In Section III corner points are found us-

ing those edges. Pose estimation using edges and cor-

ners are presented in Section IV. In Section V, ex-

perimental results for robotic manipulation using a

6-DOF robot manipulator and their qualitative com-

parison with the state-of-the-art is done. This paper is

finally concluded in Section VI.

2 EDGE POINTS EXTRACTION

In this section, we will explain the method for extract-

ing the edge points. Input to the algorithm is raw point

cloud data P , user-defined radius r

s

, and threshold

value t

h

. The output of the algorithm is point cloud E

which contains the edge points. To decide if a given

query point p

i

∈ P is an edge point or not, we calcu-

late R (p

i

), which is defined as a set of all points in-

side a sphere, centered at point p

i

with radius r

s

. For

an unorganized point cloud, this is achieved through

a k-dimensional (K-d) tree. We call each point in set

R (p

i

) as a neighboring point of p

i

, and it can be rep-

resented as R (p

i

) =

n

1

, n

2

, . . . , n

k

. For each query

point p

i

and neighboring point n

j

we calculate the di-

rectional vector as

d(p

i

, n

j

) = n

j

− p

i

(1)

ˆ

d(p

i

, n

j

) =

d(p

i

, n

j

)

d(p

i

, n

j

)

(2)

Then we calculate the resultant directional vector

ˆ

R(p

i

) as sum of all directional vector and normalize

it

R(p

i

) =

k

∑

j=1

ˆ

d(p

i

, n

j

),

ˆ

R(p

i

) =

R(p

i

)

k

R(p

i

)

k

(3)

ICINCO 2021 - 18th International Conference on Informatics in Control, Automation and Robotics

246

We assign a score s(p

i

) to each query point p

i

as an average of the dot product between

ˆ

R(p

i

) and

ˆ

d(p

i

, n

j

) for all neighboring points.

s(p

i

) =

∑

k

j=1

ˆ

R(p

i

) ·

ˆ

d(p

i

, n

j

)

k

(4)

If s(p

i

) exceeds some threshold t

h

, p

i

is considered

an edge point, otherwise not. We have tested the algo-

rithm on standard data set from Stanford 3D Scanning

Repository

1

and (Lai et al., 2014), with point cloud

models of various objects such as bowls, cups, cereal

boxes, coffee mugs, soda cans, bunnies, and dragons.

We have also applied our algorithm to a clutter of ran-

dom objects to extract edges, shown in Fig. 2.

(a) Coffee mug (b) bunny

(c) dragon (d) Objects Clutter

Figure 2: Edge cloud.

3 ESTIMATING THE LENGTH OF

EDGES

Once we have found all the edge points, our next

step is to find the line equation of edges, length of

edges, and corner points. In general, the equation of

a line is represented by two entities, a point through

which line passes and a unit directional vector from

that point. However, in our case, we represent the line

with two extreme points. The advantage of the above

representation is that we can estimate the length of the

edge by calculating the distance between two extreme

points. The intersecting point between two edges can

be found by calculating the distance between the ex-

tremities of edges.

3.1 Reference Index and Line Equation

To find the line equation, we apply the RANSAC

method on the edge point cloud E . The output of

the RANSAC method is L and I , where L represents

the equation of a line in standard form i.e., a point

1

http://graphics.stanford.edu/data/3Dscanrep/

and a directional vector, and I represent the set of

inliers. To find two extremities of line L, we have

to make sure that two extremities should represent

the same edge because, in a cluttered environment, I

could have points that belong to different objects. So

for this task, we will find the reference point p

r

. We

define a point p

r

∈ I , which has the maximum num-

ber of neighbors in I , and r is the reference index of

p

r

in I . The maximum number of neighbors property

ensures that p

r

is not at the extreme ends, and from

p

r

we will find the two extreme points, which is ex-

plained in the next section. Following are the steps to

estimate the reference index r

• Use RANSAC on E for estimating the line equa-

tion L and set of inliers I .

• We define R (p

i

) as set of all inlier points inside a

sphere, centered at point p

i

, where p

i

∈ I

• we define r = argmax

i

size(R (p

i

))

3.2 Finding Extremities

In this section, we will explain the procedure to find

the extreme points e

1

and e

2

in a set of points I

for a reference point p

r

. To test if a query point

p

i

∈ I is an extreme point or not, we find a set of

inliers R (p

i

), with radius r

s

and represent the set as

R (p

i

) =

n

1

, n

2

, . . . , n

k

. For each query point p

i

,

we calculate the directional vector from a query point

to the reference point d

1

= p

r

− p

i

, and a local di-

rectional vector from the query point to the neighbor

points d

2

= n

j

− p

i

, where n

j

∈ R (p

i

). Now to de-

cide if the query point is an extreme point or not, we

compute the dot product between d

1

and d

2

for all

neighboring points. If any of the dot product value is

negative, then p

i

is not an extreme point; otherwise,

it is. We repeat the procedure for all p

i

and select the

extreme points which have the smallest distance from

p

r

. Implementation of the above procedure is given

in Algorithm 1.

3.3 Get All Edges

To find all the lines in the edges point cloud E, we re-

cursively apply the above algorithms, and the points

corresponding to the line will be removed from E to

obtain the new line equation. The following is a se-

quence of steps to extract all the lines.

• Let P be the raw Point cloud data, r

s

be the radius

and t

h

be the threshold value to extract the edges

point cloud E.

• Apply RANSAC on E to get reference index r and

set of inliers I .

Edge and Corner Detection in Unorganized Point Clouds for Robotic Pick and Place Applications

247

Algorithm 1: Get extreme points.

Require: r, I

Ensure: e

1

, e

2

extreme points

1: for i = 0 → size(I ) and i 6= r do

2: find R (p

i

)

3: d

1

= p

r

− p

i

4:

ˆ

d

1

=

d

1

k

d

1

k

5: for j = 0 → size(R (p

i

)) do

6: d

2

= n

j

− p

i

7:

ˆ

d

2

=

d

2

k

d

2

k

8: v =

ˆ

d

1

·

ˆ

d

2

9: if v < 0 then p

i

is not an extreme point

10: break

11: end if

12: end for

13: if v > 0 then p

i

can be an extreme point

14: p

i

→ points save point

15:

ˆ

d

1

→ directions save direction

16:

k

d

1

k

→ distances save distance

17: end if

18: end for

19: e

1

→ mindis(distances)

20: e

2

is first minima opposite to e

1

using saved

ˆ

d

1

• Extract two extreme points e

1

and e

2

.

• Store e

1

and e

2

in an array A and remove all the

points between e

1

and e

2

from E.

• repeat the steps 2 − 4.

4 POSE ESTIMATION

4.1 Club Edges of Same Cube

Algorithm 2: Get edges of same cube.

Require: A, a

Ensure: C all edges of a cube

1: l

1

= A(a)

2: while not traverse all edges do

3: l

2

∈ A − l

1

4: if l

1

⊥ l

2

and l

1

∩ l

2

6= φ then

5: l

1

, l

2

→ C save l

1

, l

2

in C

6: A 6≡ l

1

remove l

1

from A

7: l

1

= l

2

replace l

1

with l

2

8: end if

9: end while

Once we have all the edges in A, our next step is

to club the edges of the same cube. Let a be an edge

number, and the corresponding edge is l

1

= A(a). If

l

1

represents one edge of a cube, then we need to find

all other edges of the same cube. To find other edges,

we consider the property that l

2

must be orthogonal to

l

1

, i.e., l

1

⊥ l

2

, and intersect with each other at a point,

i.e., l

1

∩l

2

6= φ. If l

2

satisfies the above conditions, we

store l

1

and l

2

in an array and replace l

1

with l

2

. We re-

peat the above steps until we cover all the edges. The

process is described in Algorithm 2. Once we have all

the edges representing the same cube, we compute the

corner points, which are intersections of two or more

edges. These corner points are calculated in the cam-

era frame, and by estimating the corresponding points

in the local object frame, we can estimate the object’s

pose.

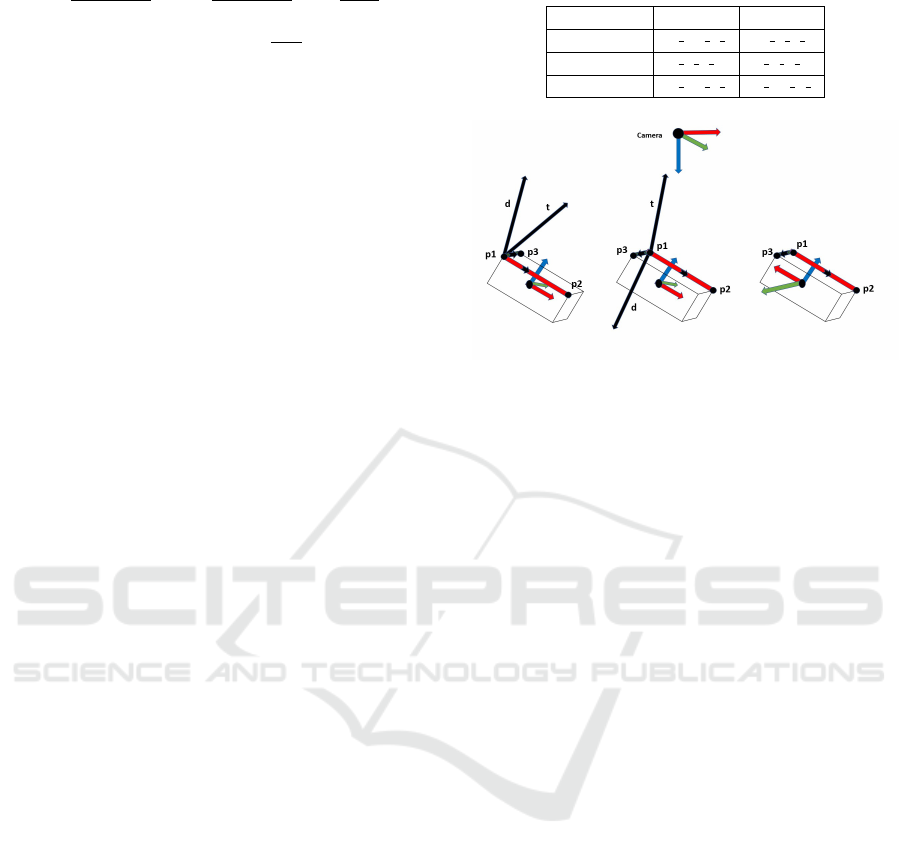

(a) Local Brick frame (b) Pose using b, h

(c) Pose using l, b (d) Pose using l, h

Figure 3: Local brick frame and assumptions.

4.2 Corresponding points and Pose

Estimation

4.2.1 Local Frame and Assumptions

Let l, b, h represent the length, breadth, and height of

the cube, and we define a local frame with the origin

at the centroid of the cube, and x-axis, y-axis, and z-

axis pointing along the length, breadth, and height of

the cube respectively, shown in Fig. 3.

For estimating the pose, we need a minimum

of two orthogonal edges. Although two orthogonal

edges can give two poses, we will assume that the

third axis corresponding to the remaining edge will

point to camera origin. So this assumption filter out

the one solution, and we will get a unique pose from

two edges shown in Fig. 3.

4.2.2 Corner Points and Directional Vector

Let two edges are l

1

, l

2

, and from these edges, we

will calculate three corner points, p

1

, p

2

, p

3

, where

p

1

is the intersecting point of two lines, p

2

is another

extreme of l

1

and p

3

is another extreme of l

2

. We also

define four unit directional vector d

1

, d

2

, t, and d as

ICINCO 2021 - 18th International Conference on Informatics in Control, Automation and Robotics

248

d

1

=

p

2

− p

1

k

p

2

− p

1

k

, d

2

=

p

3

− p

1

k

p

3

− p

1

k

, t =

−p

1

k

p

1

k

(5)

d = d

1

× d

2

, d =

d

k

d

k

(6)

Vector d

1

represents the unit directional vector

corresponding to edge l

1

, d

2

represents the unit direc-

tional vector corresponding to edge l

2

and t represents

the unit directional vector from p

1

to camera origin.

Vector d is a unit directional vector orthogonal to d

1

and d

2

.

4.2.3 Direction Assignment and Corresponding

Points

Once we have the corner points, our next step is

to find their corresponding points in the local object

frame. For estimating the corresponding points, it is

important to verify if the d

1

( or d

2

) is representing the

+x(y,z) or -x(y,z) wrt local object frame. Further, we

have to assign the direction to d

1

and d

2

in such a way

that third remaining axis of the brick should point to-

wards the camera frame.

We explain direction assignment with an example.

Let

k

l

1

k

= l and

k

l

2

k

= b. Since remaining edge is the

height edge so corresponding axis i.e. z-axis of local

frame must point towards the camera frame which is

shown in Fig. 4. From the two edges we compute the

corner points and directional vectors. For visualiza-

tion, we represent l

1

in red color because l

1

must have

a direction of either ˆx or − ˆx in local frame. Similarly

we represent l

2

in green color because l

2

must have a

direction of either ˆy or − ˆy in local frame. Directional

vector and corner points are represented in black color

(Fig. 4). Let us assume that d

1

has a direction of ˆx and

d

2

has a direction of ˆy in local frame. To verify our

assumption, we compute d which represents the di-

rectional vector along the third axis (z-axis) and val-

idate our assumptions by computing the dot product

between d and t.

As shown in Fig. 4, for the first brick our direction

assumptions are correct because dot product between

d and t is positive and corresponding points are given

in Table 1.

For second brick, our direction assumptions are

incorrect because dot product between d and t is neg-

ative, which means either d

1

has a direction of − ˆx or

d

2

has a direction of −ˆy. One can conclude from sec-

ond brick (Fig. 4), that d

1

with a direction of ˆx and d

2

with a direction of − ˆy is the valid solution and corre-

sponding points are given in Table1.

If we consider the other solution i.e. − ˆx for d

1

and ˆy for d

2

, then as shown in third brick, local cube

frame rotates by an angle 180 about z-axis, and since

the cube is symmetric, so both solutions will project

the same model.

Table 1: Correspondences.

Corner points Brick 1 Brick 2

p

1

−

l

2

, −

b

2

,

h

2

−

l

2

,

b

2

,

h

2

p

2

l

2

,

b

2

,

h

2

l

2

,

b

2

,

h

2

p

3

−

l

2

, −

b

2

,

h

2

−

l

2

, −

b

2

,

h

2

Figure 4: Direction assignment and corresponding points.

4.2.4 Pose Refinement

Using three correspondences, we compute the pose

of the cube. To further refine the pose, we need more

correspondences. From Algorithm 2 we get C which

represents all edges of same cube and intersection

of edges will give the corner points. From the ini-

tial pose calculated from three correspondences, we

will find the correspondence between the other corner

points in camera frame and in local frame by calcu-

lating the distance between corner points and the pre-

dicted corner points from the initial pose. Pair of cor-

ner point and predicted corner point with minimum

distance will form a correspondence pair. Thus more

correspondences will give more accurate pose.

5 EXPERIMENTAL RESULTS

AND DISCUSSION

5.1 Experimental Setup

Our robot platform setup is shown in Fig. 5. It

consists of a UR5 robot manipulator with its con-

troller box (internal computer) and a host PC (external

computer). The UR5 robot manipulator is a 6-DoF

robot arm designed to work safely alongside humans.

The low-level robot controller is a program running

on UR5’s internal computer, broadcasting robot arm

data, receiving and interpreting the commands, and

controlling the arm accordingly. There are several

options for communicating with the robot low-level

controller, for example, teach pendant or opening a

TCP socket (C++/Python) on a host computer. Our

vision hardware consists of an RGB-D Microsoft

Edge and Corner Detection in Unorganized Point Clouds for Robotic Pick and Place Applications

249

(a) Setup (b) Visual Processing (c) Grasp (d) Retrieve (e) Place

Figure 5: Experimental setup and execution steps during robotic manipulation.

Kinect sensor mounted on top of the workspace. Point

cloud processing is done in the PCL library, and ROS

drivers are used for communication between the sen-

sor and the manipulator. A suction gripper is mounted

at the end effector of the manipulator.

5.2 Motion Control Module

The perception module passes the 6D pose of the ob-

ject to the main motion control node running on the

host PC. The main controller then interacts with low

level Robot Controller to execute the motion. A pick

and place operation for an object consists of four ac-

tions: i) approach the object, ii) grasp, iii) retrieve

and iv) place the object. The overall trajectory has

five way-points: an initial-point (I), a mid-point (M),

a goal-point (G), a retrieval point (R), and a final des-

tination point (F). A final path is generated which

passes through all of the above points.

i) Approach: This part of the trajectory connects

I and M. The point I is obtained by forward kine-

matics on current joint state of the robot and M is an

intermediate point defined by M = G + d ˆz, where G

is the goal-point provided by the pose-estimation pro-

cess and ˆz is the unit vector in vertical up direction at

G. The value of d is selected subjectively after several

pick-place trial runs.

ii) Grasp: As the robot’s end effector reaches M,

the end-effector align according to the brick pose.

This section of the trajectory is executed between M

and G with directed straight line trajectory of the end

effector.

iii) Retrieve: Retrieval is the process of bringing

the grasped object out of the clutter. This is executed

from G to R. The retrieval path is traversed vertically

up straight line trajectory. R is given by R = G + h ˆz,

where G is the goal-point and ˆz is the unit vector in

vertical up direction at G. The value of h is selected

to ensure the clearance of the lifted object from the

clutter.

iii) Place: Placing is the process of dropping the

grasped object at the destination place. This is exe-

cuted from R to F. Fig. 5 shows the execution steps

during robotic manipulation.

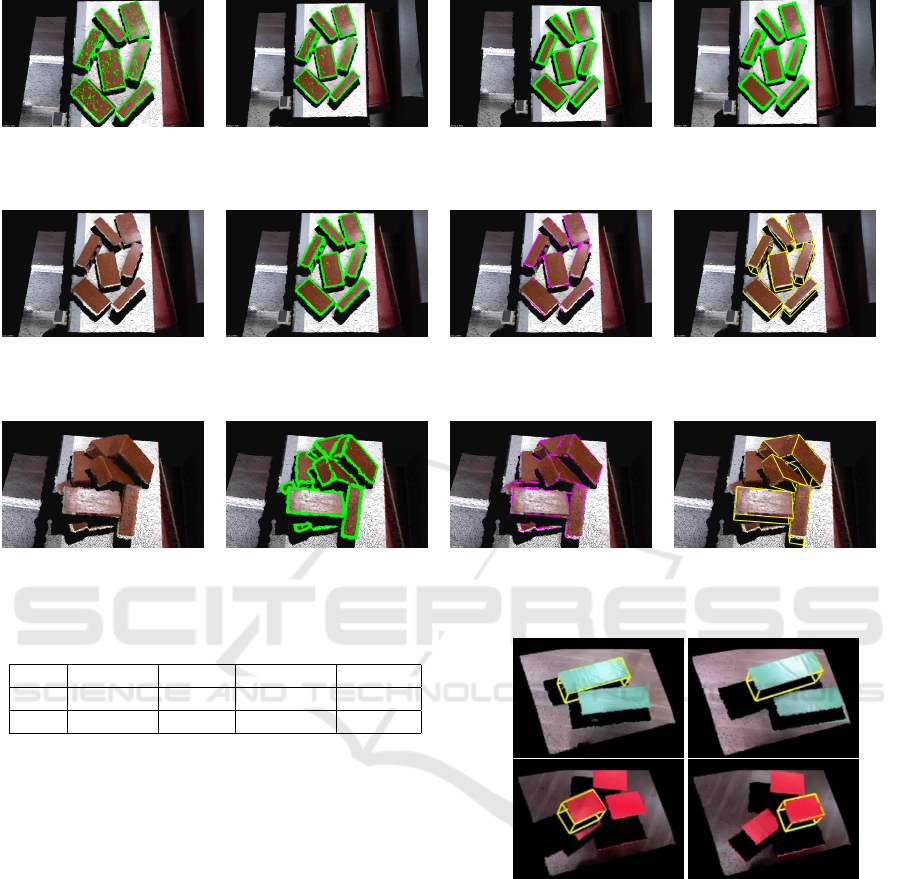

5.3 Experimental Validation

We explore two experimental settings: when bricks

are presented to the robot in isolation and when bricks

are presented in a dense cluttered scenario as shown

in Fig. 7 and Fig. 8 respectively.

5.3.1 Objects Present in the Isolation

In the first experiment we measure the dimension of

bricks and place the bricks separately in front of the

3D sensor.

Edge Points: For extracting edges, we perform sev-

eral experiments for selecting the optimum value of

r

s

and t

h

. We initiate with r

s

= 0.005m and increment

it with a step size of 0.001m. We record the compu-

tation time at each r

s

(Table 2). Based on quality of

edges (Fig. 6) and computation time we select the r

s

.

Based on several trials we found that for extracting

edge points, r

s

= 0.02m and t

h

= 0.35 are the optimal

values.

Table 2: Computation time (sec) at various r

s

.

r

s

= 0.010 r

s

= 0.015 r

s

= 0.020 r

s

= 0.025 r

s

= 0.030

0.66538 1.29415 2.13939 3.16722 4.40235

Lines and Corner Points: For finding the equation of

lines we apply RANSAC method with threshold value

of 0.01m and for finding the corner point, we compute

the distance between two extremities of edges and if

distance is < 0.01m, then corner point is the average

of two extreme points. With these parameters, we find

the edges and corner points which is shown in Fig. 7

and computation time for each step is shown in Table

3.

ICINCO 2021 - 18th International Conference on Informatics in Control, Automation and Robotics

250

(a) r

s

= 0.01 (b) r

s

= 0.015 (c) r

s

= 0.025 (d) r

s

= 0.030

Figure 6: Effect of r

s

at t

h

= 0.35.

(a) Raw point cloud (b) Edges Point (c) All Edges (d) Model Fitting

Figure 7: Isolated bricks experiment.

(a) Input (b) Edges points (c) All Edges (d) Model Fitting

Figure 8: Clutter bricks experiment.

Table 3: Computation time (sec) at each step.

Edges Point All edges Model Fitting Total Time

Exp 1 2.13939 2.0854 0.07573 4.36192

Exp 2 2.13056 5.26684 0.21726 7.61466

5.3.2 Clutter of Bricks

In the second experiment we place the brick in clut-

ter as shown in Fig. 8. For edge points and lines,

we use the same parameter values which were used in

experiment 1. Result of each step is shown in Fig. 8

and computation time for each step is shown in Table

3. With each successful grasp of the brick, computa-

tion time will decreases, because the number of points

which needs to be processed will decrease. Above ex-

periment is performed on a system with an i7 proces-

sor having a clock speed of 3.5GHz and 8GB RAM.

5.4 Performance on Different Objects

As mentioned earlier, our method can be easily ap-

plied for the other objects with known dimensions.

Hence we tested our algorithm on two different ob-

jects with different dimensions. Fig. 9 shows the

output of our method on two different objects (only

dimensions are known in advance). A single pose has

Figure 9: Pose predictions for different objects.

been visualized in Fig. 9 to avoid a mess in the im-

ages. From Fig. 9, we can claim that our method can

easily be deployed for other objects.

5.5 Performance Analysis

To demonstrate the efficacy of our proposed edge ex-

traction method from unorganized point cloud, we

compare the results from the method (Bazazian et al.,

2015) for edge extraction. Their method estimates the

sharp features by analysing the eigenvalues of the co-

variance matrix which is defined by each point's k-

Edge and Corner Detection in Unorganized Point Clouds for Robotic Pick and Place Applications

251

nearest neighbors. We apply their method

2

on raw

point cloud data with k vary from 4 to 30 as shown in

Fig. 10. The results of the method (Bazazian et al.,

2015) is unsatisfactory on the same data set where our

method perform very well. It is because of the inher-

ent noise in the sensor, points on a flat surface has

large variations. Thus all eigenvalues of the covari-

ance matrix will be large, hence predicts the sharp

edges even at the flat surface.

(a) k = 4 (b) k = 5

(c) k = 10 (d) k = 30

Figure 10: Edges from covariance matrix based method.

6 CONCLUSION

Novel edge and corner detection algorithm for un-

organized point clouds was proposed and tested on

generic objects like a coffee mug, dragon, bunny, and

clutter of random objects. The algorithm is used for

6D pose estimation of known objects in clutter for

robotic pick and place applications. The proposed

technique is tested on two warehouse scenarios, when

objects are placed distinctly and when objects are

placed in a dense clutter. Results of each scenario

is reported in the paper along with the computation

time at each step. To demonstrate the efficacy of the

edge extraction technique, we compared it with the

covariance matrix based solution for 3D edge extrac-

tions from unorganized point cloud in a real scenario

and report better performance. The overall approach

is tested in a warehouse application where a real UR5

robot manipulator is used for robotic pick and place

operations.

REFERENCES

Asadi, K. and Han, K. (2018). Real-time image-to-bim reg-

istration using perspective alignment for automated

construction monitoring. In Construction Research

Congress, volume 2018, pages 388–397.

2

https://github.com/denabazazian/

Bazazian, D., Casas, J. R., and Ruiz-Hidalgo, J. (2015).

Fast and robust edge extraction in unorganized point

clouds. In 2015 International Conference on Digi-

tal Image Computing: Techniques and Applications

(DICTA), pages 1–8. IEEE.

Changali, S., Mohammad, A., and van Nieuwland, M.

(2015). The construction productivity imperative.

How to build megaprojects better.„McKinsey Quar-

terly.

Collet, A., Martinez, M., and Srinivasa, S. S. (2011). The

moped framework: Object recognition and pose esti-

mation for manipulation. The International Journal of

Robotics Research, 30(10):1284–1306.

Demarsin, K., Vanderstraeten, D., Volodine, T., and Roose,

D. (2007). Detection of closed sharp edges in point

clouds using normal estimation and graph theory.

Computer-Aided Design, 39(4):276–283.

Doliotis, P., McMurrough, C. D., Criswell, A., Middleton,

M. B., and Rajan, S. T. (2016). A 3d perception-based

robotic manipulation system for automated truck un-

loading. In 2016 IEEE International Conference on

Automation Science and Engineering (CASE), pages

262–267. IEEE.

Drost, B., Ulrich, M., Navab, N., and Ilic, S. (2010). Model

globally, match locally: Efficient and robust 3d ob-

ject recognition. In 2010 IEEE computer society con-

ference on computer vision and pattern recognition,

pages 998–1005. Ieee.

Hackel, T., Wegner, J. D., and Schindler, K. (2016). Con-

tour detection in unstructured 3d point clouds. In Pro-

ceedings of the IEEE Conference on Computer Vision

and Pattern Recognition, pages 1610–1618.

Hinterstoisser, S., Lepetit, V., Rajkumar, N., and Konolige,

K. (2016). Going further with point pair features. In

European conference on computer vision, pages 834–

848. Springer.

Kazhdan, M. and Hoppe, H. (2013). Screened poisson sur-

face reconstruction. ACM Transactions on Graphics

(ToG), 32(3):29.

Lai, K., Bo, L., and Fox, D. (2014). Unsupervised fea-

ture learning for 3d scene labeling. In 2014 IEEE In-

ternational Conference on Robotics and Automation

(ICRA), pages 3050–3057. IEEE.

Lowe, D. G. (2004). Distinctive image features from scale-

invariant keypoints. International journal of computer

vision, 60(2):91–110.

Ni, H., Lin, X., Ning, X., and Zhang, J. (2016). Edge de-

tection and feature line tracing in 3d-point clouds by

analyzing geometric properties of neighborhoods. Re-

mote Sensing, 8(9):710.

Parkes, S. (2019). Automated brick laying system and

method of use thereof. US Patent App. 16/047,143.

Pharswan, S. V., Vohra, M., Kumar, A., and Behera, L.

(2019). Domain-independent unsupervised detec-

tion of grasp regions to grasp novel objects. In

2019 IEEE/RSJ International Conference on Intel-

ligent Robots and Systems (IROS), pages 640–645.

IEEE.

Qi, C. R., Su, H., Mo, K., and Guibas, L. J. (2017). Pointnet:

Deep learning on point sets for 3d classification and

ICINCO 2021 - 18th International Conference on Informatics in Control, Automation and Robotics

252

segmentation. In Proceedings of the IEEE Conference

on Computer Vision and Pattern Recognition, pages

652–660.

Stoyanov, T., Vaskevicius, N., Mueller, C. A., Fromm, T.,

Krug, R., Tincani, V., Mojtahedzadeh, R., Kunaschk,

S., Ernits, R. M., Canelhas, D. R., et al. (2016). No

more heavy lifting: Robotic solutions to the container

unloading problem. IEEE Robotics & Automation

Magazine, 23(4):94–106.

Tekin, B., Sinha, S. N., and Fua, P. (2018). Real-time seam-

less single shot 6d object pose prediction. In Proceed-

ings of the IEEE Conference on Computer Vision and

Pattern Recognition, pages 292–301.

Vohra, M., Prakash, R., and Behera, L. (2019). Real-time

grasp pose estimation for novel objects in densely

cluttered environment. In 2019 28th IEEE Interna-

tional Conference on Robot and Human Interactive

Communication (RO-MAN), pages 1–6. IEEE.

Xiang, Y., Schmidt, T., Narayanan, V., and Fox, D. (2017).

Posecnn: A convolutional neural network for 6d ob-

ject pose estimation in cluttered scenes. arXiv preprint

arXiv:1711.00199.

Zhou, Y. and Tuzel, O. (2018). Voxelnet: End-to-end learn-

ing for point cloud based 3d object detection. In Pro-

ceedings of the IEEE Conference on Computer Vision

and Pattern Recognition, pages 4490–4499.

Edge and Corner Detection in Unorganized Point Clouds for Robotic Pick and Place Applications

253