Over Two Years of Challenging Environmental Conditions for Localization: The IPLT Dataset

Youssef Bouaziz (1,2,a), Eric Royer (1), Guillaume Bresson (2) and Michel Dhome (1)

(1) Université Clermont Auvergne, CNRS, SIGMA Clermont, Institut Pascal, 63000 Clermont-Ferrand, France

(2) Institut VEDECOM, 23 bis allée des Marronniers, 78000 Versailles, France

Keywords: Visual-based Navigation, Computer Vision for Transportation, Long-term SLAM.

Abstract: This paper presents a new challenging dataset for autonomous driving applications: the Institut Pascal Long-Term (IPLT) Dataset, which was collected over two years, currently contains 127 sequences and is still growing. The dataset was captured in a parking lot where our experimental vehicle followed the same path, with slight lateral and angular deviations, while we made sure to include various environmental conditions caused by lighting, weather and seasonal changes.

1 INTRODUCTION

Autonomous driving applications are safety-critical and must be approached with great caution before deployment on public roads. Real-world data are therefore needed in the development, testing and validation phases. This paper presents a new dataset called IPLT (Institut Pascal Long-Term), which mainly addresses localization under challenging conditions (snow, rain, seasonal changes, ...).

Before describing the composition of our dataset in detail, it is useful to first review the structure of an autonomous robot. Figure 1 represents the operating mechanism of a general autonomous navigation platform as a See-Think-Act cycle, as explained in (Siegwart et al., 2011).

According to Figure 1, we can identify four main modules involved in this See-Think-Act mechanism:

• Perception of the environment and of the robot state through the various on-board sensors.
• Robot localization and mapping in the environment.
• Obstacle avoidance and trajectory planning.
• Processing and execution of mission orders.

In our case, we are interested only in the first two modules, which depend directly on the dataset presented in this paper. Our experimental vehicle acquires external environmental data (camera images, laser scans, GPS data, odometry data, ...) through its on-board sensors. This sensory information is then received by the localization and mapping (SLAM) module, which allows the vehicle to interpret its environment so that it can localize itself and update the map.

Figure 1: Autonomous driving platform represented in the See-Think-Act cycle (Siegwart et al., 2011). [Diagram blocks: Sensors (cameras, lidars, GPS), Localization and Mapping, Cognition (path planning), Motion control (path execution), Acting, Real world environment; links labelled sensory data, position + global map, path and actuator commands.]

(a) https://orcid.org/0000-0003-3257-6859

In our dataset, we repeatedly traverse the same parking lot, so we were able to record many changing elements such as weather and lighting changes, seasonal changes, changes in the state of the parking lot (different parked cars, empty parking lot, full parking lot, ...), moving cars and moving pedestrians. Figure 2 presents an overview of images showing some of the environmental conditions included in our dataset.

Our dataset is composed of 127 sequences in total, distributed as follows:

Bouaziz, Y., Royer, E., Bresson, G. and Dhome, M.
Over Two Years of Challenging Environmental Conditions for Localization: The IPLT Dataset.
DOI: 10.5220/0010518303830387
In Proceedings of the 18th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2021), pages 383-387
ISBN: 978-989-758-522-7
Copyright © 2021 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

[Figure 2 panels (front-camera images, labelled by sequence name): (a) 2018-10-19-10-54-31, (b) 2018-10-22-19-40-27, (c) 2018-10-26-07-31-09, (d) 2018-10-26-09-11-01, (e) 2018-12-11-17-33-30, (f) 2018-12-13-10-36-57, (g) 2019-01-23-10-33-15, (h) 2019-01-23-16-05-30, (i) 2019-02-04-10-58-40, (j) 2019-10-01-16-54-55, (k) 2019-10-22-15-01-25, (l) 2019-12-05-16-43-56, (m) 2020-01-15-11-13-20, (n) 2020-01-31-16-07-34, (o) 2020-02-05-18-37-10]

Figure 2: An overview of images recorded with the front camera for some sequences of our dataset. For each sequence, we indicate the acquisition date and symbolize the environmental condition with a small icon. Please refer to Table 1 for the meaning of these condition icons.

Table 1: Designation of condition icons.

• Day & sunny condition
• Dusk condition
• Night condition
• Cloudy weather
• Rainy weather
• Fog condition
• Snow condition

• 22 sequences in sunny conditions
• 43 sequences in cloudy weather
• 19 sequences in rainy weather
• 19 sequences at dusk
• 14 sequences at night
• 4 sequences in fog
• 5 sequences in snow
• 1 long sequence (2019-12-05-16-43-56.bag) recorded over one hour and 10 minutes, with multiple loops in the parking lot from 16:44 until 17:54, covering day, dusk and night conditions.
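As a quick consistency check, the per-condition counts above can be tallied in a few lines of Python. This is only an illustration: it assumes the categories are disjoint (as "distributed as follows" suggests), and the dictionary keys are labels chosen here, not identifiers used in the dataset. It also derives the duration of the long sequence, assuming its bag name encodes the start time as YYYY-MM-DD-HH-MM-SS (consistent with the 16:44 start stated above) and using the 17:54 end time from the text.

```python
from datetime import datetime

# Sequence counts per condition, as listed above (keys are illustrative labels).
SEQUENCES_PER_CONDITION = {
    "sunny": 22,
    "cloudy": 43,
    "rainy": 19,
    "dusk": 19,
    "night": 14,
    "fog": 4,
    "snow": 5,
    "long day/dusk/night": 1,
}

total_sequences = sum(SEQUENCES_PER_CONDITION.values())

# Assumption: the bag name encodes the start time as YYYY-MM-DD-HH-MM-SS.
long_start = datetime.strptime("2019-12-05-16-43-56", "%Y-%m-%d-%H-%M-%S")
long_end = long_start.replace(hour=17, minute=54, second=0)  # end time given in the text
long_duration_min = (long_end - long_start).total_seconds() / 60.0

if __name__ == "__main__":
    print(f"total sequences: {total_sequences}")
    print(f"long sequence: about {long_duration_min:.0f} min")
```

Running it prints a total of 127, matching the number of sequences given in the text, and a duration of about 70 minutes for the long sequence.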

We made our dataset publicly available online in the hope of facilitating evaluations for researchers focusing on long-term autonomous navigation in dynamic environments. The dataset can be downloaded from http://iplt.ip.uca.fr/datasets/. Read-only access to our FTP server is granted with the username/password ipltuser/iplt_ro.

The remainder of this paper is organized as follows. Section 2 reviews related datasets, Section 3 describes the IPLT dataset and the experimental shuttle used to record it, and Section 4 concludes the paper.

2 RELATED WORK

Intensive work on SLAM algorithms has produced a large number of related datasets, such as the Ford Campus Dataset (Pandey et al., 2011), the Málaga Urban Dataset (Blanco-Claraco et al., 2014) and the Waymo Open Dataset (Sun et al., 2020). Some of these datasets were recorded in static environments with very few environmental changes, while others do not revisit the same location across different sequences. KITTI (Geiger et al., 2013) is a widely used dataset in SLAM applications; unfortunately, it does not include many environmental conditions, since it was collected over one week (from 2011-09-26 to 2011-10-03). Later, a new dataset with a novel labeling scheme and data for 2D and 3D semantic segmentation was proposed in KITTI-360 (Xie et al., 2016). However, this dataset consists of only 11 individual sequences, with little overlap between their trajectories.

Autonomous driving applications aimed at long-term localization must be evaluated in real-life scenarios where the environment changes over time. The VPRiCE challenge (Suenderhauf, 2015) is a dataset that offers some challenging cases for localization. Unfortunately, it offers only a few sequences of places that were visited twice, at different times. Similarly, the CMU Seasons dataset (Bansal et al., 2014) was acquired in urban and suburban environments, totaling over 8.5 km of travel, and contains 7,159 reference images and 75,335 query images acquired in different seasons. Sattler et al. (2018) also presented a challenging dataset, called Aachen Day-Night, which includes 4,328 daytime images and 98 night-time queries. The NCLT (Carlevaris-Bianco et al., 2015), Oxford RobotCar (Maddern et al., 2017) and UTBM RobotCar (Yan et al., 2020) datasets are three widely used datasets for long-term tracking applications, as they include different environmental conditions. The UTBM RobotCar dataset includes only a few sequences (11 in total), while in the other two the traversed path varies between recording sessions.

In addition to environmental conditions, we are also interested in evaluating the effect of lateral and angular deviations between sequences on localization performance. However, the previously mentioned datasets do not provide sequences with such characteristics. This is the main reason that led us to record our own dataset and make it available to the community. Our dataset was used in our previous work (Bouaziz et al., 2021) to evaluate the impact of environmental changes and of lateral and angular deviations on localization performance.

3 IPLT DATASET

Our dataset currently contains 127 sequences collected over two years. In all sequences, the vehicle followed the same path, and in some of them we introduced slight lateral and angular deviations, as shown in Figure 3. All sequences were recorded in the same driving direction, and each one is about 200 m long.
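The paper does not specify how the lateral and angular deviations between sequences are measured. As one possible way to quantify them, the sketch below computes, for a pose of one sequence and a matching reference pose of another, the signed lateral offset (positive to the left of the reference heading) and the heading difference. It assumes planar motion with yaw about the z axis of the odometry frame and ROS-style (x, y, z, w) quaternions; the function names are hypothetical, and pairing each pose with its reference pose is left out.

```python
import math
from typing import Tuple


def yaw_from_quaternion(qx: float, qy: float, qz: float, qw: float) -> float:
    """Yaw (rotation about z, in radians) of a unit quaternion in (x, y, z, w) order."""
    return math.atan2(2.0 * (qw * qz + qx * qy), 1.0 - 2.0 * (qy * qy + qz * qz))


def wrap_angle(angle: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def lateral_and_angular_deviation(
    ref_xy: Tuple[float, float],
    ref_yaw: float,
    xy: Tuple[float, float],
    yaw: float,
) -> Tuple[float, float]:
    """Signed lateral offset (left positive) and heading difference (rad)
    of a pose with respect to a reference pose, assuming planar motion."""
    dx, dy = xy[0] - ref_xy[0], xy[1] - ref_xy[1]
    # Project the displacement onto the left-pointing normal of the reference heading.
    lateral = -math.sin(ref_yaw) * dx + math.cos(ref_yaw) * dy
    angular = wrap_angle(yaw - ref_yaw)
    return lateral, angular


if __name__ == "__main__":
    ref_yaw = yaw_from_quaternion(0.0, 0.0, 0.0, 1.0)  # heading along +x
    yaw = yaw_from_quaternion(0.0, 0.0, math.sin(0.05), math.cos(0.05))  # about 0.1 rad
    print(lateral_and_angular_deviation((0.0, 0.0), ref_yaw, (5.0, 0.5), yaw))
```

The example pose lies 0.5 m to the left of the reference and is rotated by about 0.1 rad; wrapping the heading difference keeps angles near ±π from appearing as large deviations.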

Figure 3: Example of sequences recorded in a parking lot.

As shown in Figure 3, all sequences in our dataset form loops in the parking lot, which makes the dataset well suited to loop closure applications. All sequences were recorded with our experimental vehicle, shown in Figure 4. It is an electric shuttle equipped with two cameras (front and rear), four LiDAR systems (two front and two rear) and a consumer-grade global positioning system (GPS). Each camera records gray-scale images at 10 Hz, and both cameras have a 100° field of view (FoV).

The cameras were slightly moved in April 2019, so there are two different calibration settings: one for sequences recorded before April 2019 and one for more recent sequences. All sequences are saved as rosbag files and can be read with the ROS middleware (Quigley et al., 2009). The rosbag files contain the following ROS topics:

• /cameras/front/image: front camera images.
• /cameras/back/image: rear camera images.
• /robot/odom: absolute poses computed by wheel odometry.
• /lidars/front_left/scan: front-left lidar data.
• /lidars/front_right/scan: front-right lidar data.
• /lidars/back_left/scan: back-left lidar data.
• /lidars/back_right/scan: back-right lidar data.
• /gps_planar: GPS data.
• /tf_static: extrinsic parameters of all sensors (cameras, lidars, GPS, ...).
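For users working with the rosbag files directly, the sketch below shows one way to pick the calibration period of a sequence from its file name and to iterate over one topic with the ROS 1 rosbag Python API (rosbag.Bag and read_messages). It assumes that bag names encode the start time as YYYY-MM-DD-HH-MM-SS, as in 2019-12-05-16-43-56.bag, and uses the two period labels of Tables 2 and 3. Sequences dated between 2019-03-08 and 2019-10-01 are not covered by those tables, so the function raises an error for them rather than guessing. The helper names are hypothetical and are not part of the dataset tools.

```python
from datetime import datetime
from pathlib import Path
from typing import Iterator, Optional, Tuple

FRONT_IMAGE_TOPIC = "/cameras/front/image"
BACK_IMAGE_TOPIC = "/cameras/back/image"
ODOM_TOPIC = "/robot/odom"

# Calibration periods as labelled in Tables 2 and 3; end dates are exclusive.
CALIBRATION_PERIODS: Tuple[Tuple[str, datetime, Optional[datetime]], ...] = (
    ("from_2018-10-19_to_2019-03-08", datetime(2018, 10, 19), datetime(2019, 3, 9)),
    ("from_2019-10-01", datetime(2019, 10, 1), None),
)


def sequence_start(bag_path: str) -> datetime:
    """Start time encoded in a bag name such as 2019-12-05-16-43-56.bag (assumed format)."""
    return datetime.strptime(Path(bag_path).stem, "%Y-%m-%d-%H-%M-%S")


def calibration_period(bag_path: str) -> str:
    """Label of the calibration period that applies to a sequence."""
    start = sequence_start(bag_path)
    for label, begin, end in CALIBRATION_PERIODS:
        if start >= begin and (end is None or start < end):
            return label
    raise ValueError(f"no calibration listed for a sequence starting {start:%Y-%m-%d}")


def read_topic(bag_path: str, topic: str) -> Iterator[Tuple[int, object]]:
    """Yield (record time in ns, message) pairs for one topic of a bag file.

    The record time is when the message was written to the bag; the message
    header stamp, if any, may differ slightly.
    """
    import rosbag  # ROS 1 only; imported lazily so the helpers above work without ROS

    with rosbag.Bag(bag_path) as bag:
        for _, msg, stamp in bag.read_messages(topics=[topic]):
            yield stamp.to_nsec(), msg
```

For example, calibration_period("2018-10-19-10-54-31.bag") returns "from_2018-10-19_to_2019-03-08", and read_topic(path, FRONT_IMAGE_TOPIC) streams the front images of a bag without loading the whole file.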

In Table 2, we present the intrinsic parameters of our two cameras, expressed in the unified camera model (Barreto, 2006). The unified camera model has five parameters, $[\gamma_x, \gamma_y, c_x, c_y, \xi]$, which are used to project a 3D point $P(X_s, Y_s, Z_s)$, expressed in the coordinate frame of the sphere, into a 2D point $p_c$ in the image plane, as explained in Equation (1) and Figure 5.

Figure 4: The EasyMile EZ10 electric shuttle used to record our dataset. [Diagram labels: LiDAR systems, GPS, front camera and rear camera (each shown with its x, y, z axes), IMU + odometry.]

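Table 2: Intrinsic parameters of the cameras ($\gamma_x$, $\gamma_y$, $c_x$, $c_y$ in pixels; $\xi$ dimensionless).

from_2018-10-19_to_2019-03-08

| Camera | $\gamma_x$ | $\gamma_y$ | $c_x$ | $c_y$ | $\xi$ |
|---|---|---|---|---|---|
| front | 766.3141 | 769.5469 | 324.2513 | 239.7592 | 1.4513 |
| back | 763.5804 | 766.0006 | 326.2222 | 250.7755 | 1.4523 |

from_2019-10-01

| Camera | $\gamma_x$ | $\gamma_y$ | $c_x$ | $c_y$ | $\xi$ |
|---|---|---|---|---|---|
| front | 770.0887 | 768.9841 | 330.3834 | 222.0791 | 1.4666 |
| back | 764.4637 | 763.1171 | 322.6882 | 247.8716 | 1.4565 |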

Figure 5: Unified camera model. A 3D point $P$ is projected onto the image plane of the camera as a distorted point $p_c$ (Lébraly, 2012).

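$$
p_c = K\, m_c, \qquad
K = \begin{bmatrix} f & 0 & u_0 \\ 0 & f & v_0 \\ 0 & 0 & 1 \end{bmatrix}
\quad \text{and} \quad
m_c = \begin{bmatrix} \dfrac{X_s}{\rho} \\[6pt] \dfrac{Y_s}{\rho} \\[6pt] \dfrac{Z_s}{\rho} + \xi \end{bmatrix}
\quad \text{with} \quad
\rho = \sqrt{X_s^2 + Y_s^2 + Z_s^2} \quad \text{and} \quad \xi \geq 0
\tag{1}
$$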
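Equation (1) can be turned into a small projection function. This is a sketch rather than the authors' implementation: it assumes that the focal term $f$ and the principal point $(u_0, v_0)$ in $K$ correspond to $(\gamma_x, \gamma_y)$ and $(c_x, c_y)$ in Table 2, and that $p_c$ is in homogeneous coordinates, so the first two components of $K m_c$ are divided by the third. It uses the front-camera parameters for sequences from 2019-10-01.

```python
import math
from typing import NamedTuple, Tuple


class UnifiedIntrinsics(NamedTuple):
    """Unified camera model parameters [gamma_x, gamma_y, c_x, c_y, xi] (Table 2)."""

    gamma_x: float
    gamma_y: float
    c_x: float
    c_y: float
    xi: float


# Front camera, sequences from 2019-10-01 (values from Table 2).
FRONT_FROM_2019_10_01 = UnifiedIntrinsics(770.0887, 768.9841, 330.3834, 222.0791, 1.4666)


def project_unified(point: Tuple[float, float, float], k: UnifiedIntrinsics) -> Tuple[float, float]:
    """Project a 3D point (X_s, Y_s, Z_s) to pixel coordinates following Equation (1)."""
    x, y, z = point
    rho = math.sqrt(x * x + y * y + z * z)
    if rho == 0.0:
        raise ValueError("the sphere centre cannot be projected")
    # m_c = (X_s/rho, Y_s/rho, Z_s/rho + xi): the point on the unit sphere,
    # seen from a projection centre shifted by xi along the Z axis.
    mx, my, mz = x / rho, y / rho, z / rho + k.xi
    if mz <= 0.0:
        raise ValueError("point cannot be projected for this xi")
    # p_c = K m_c, then divide by the third (homogeneous) coordinate.
    u = k.gamma_x * mx / mz + k.c_x
    v = k.gamma_y * my / mz + k.c_y
    return u, v


if __name__ == "__main__":
    # A point on the optical axis lands on the principal point (c_x, c_y).
    print(project_unified((0.0, 0.0, 1.0), FRONT_FROM_2019_10_01))
    print(project_unified((0.5, -0.2, 2.0), FRONT_FROM_2019_10_01))
```

Because every tabulated $\xi$ is greater than 1, the third component $Z_s/\rho + \xi$ stays positive for any direction, so the visibility check only matters for models with $\xi \leq 1$.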

Table 3 shows the extrinsic parameters of the cameras, which are already included in the rosbag files. The extrinsic parameters of the front camera are expressed in the coordinate system of the rear camera; that is, we give the translation and rotation of the front camera with respect to the axes of the rear camera (see Figure 4). Rotations are given as quaternions.

Table 3: Extrinsic parameters of the cameras (rotation as the quaternion $q_x, q_y, q_z, q_w$; translation $t_x, t_y, t_z$).

| Period | $q_x$ | $q_y$ | $q_z$ | $q_w$ | $t_x$ | $t_y$ | $t_z$ |
|---|---|---|---|---|---|---|---|
| from_2018-10-19_to_2019-03-08 | 0.0030 | -0.9998 | 0.01479 | 0.0123 | -0.0304 | -0.0698 | -3.4635 |
| from_2019-10-01 | 0.0002 | -0.9998 | 0.0200 | 0.0089 | 0.0600 | -0.0321 | -3.4637 |
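The following sketch converts a quaternion from Table 3 into a rotation matrix and maps points between the two camera frames. It assumes the ROS (x, y, z, w) quaternion order, translations in metres (the ROS convention for /tf_static), and that the quaternion and translation give the pose of the front camera in the rear-camera frame, so that a point expressed in the front-camera frame maps to $p_{rear} = R\, p_{front} + t$. The function and variable names are hypothetical.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]

# Front camera with respect to the rear camera (Table 3): ((qx, qy, qz, qw), (tx, ty, tz)).
EXTRINSICS: Dict[str, Tuple[Tuple[float, float, float, float], Vec3]] = {
    "from_2018-10-19_to_2019-03-08": ((0.0030, -0.9998, 0.01479, 0.0123), (-0.0304, -0.0698, -3.4635)),
    "from_2019-10-01": ((0.0002, -0.9998, 0.0200, 0.0089), (0.0600, -0.0321, -3.4637)),
}


def quaternion_to_matrix(qx: float, qy: float, qz: float, qw: float) -> Mat3:
    """Rotation matrix of a quaternion in (x, y, z, w) order.

    The quaternion is normalized first because the tabulated values are rounded.
    """
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    x, y, z, w = qx / n, qy / n, qz / n, qw / n
    return (
        (1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)),
        (2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)),
        (2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)),
    )


def front_to_rear(p_front: Vec3, rot: Mat3, t: Vec3) -> Vec3:
    """Map a point from the front-camera frame to the rear-camera frame: R p + t."""
    return tuple(sum(rot[i][j] * p_front[j] for j in range(3)) + t[i] for i in range(3))


def rear_to_front(p_rear: Vec3, rot: Mat3, t: Vec3) -> Vec3:
    """Inverse mapping: R^T (p - t)."""
    d = [p_rear[i] - t[i] for i in range(3)]
    return tuple(sum(rot[j][i] * d[j] for j in range(3)) for i in range(3))


if __name__ == "__main__":
    q, t = EXTRINSICS["from_2019-10-01"]
    rot = quaternion_to_matrix(*q)
    # Optical (z) axis of the front camera expressed in the rear-camera frame.
    print(tuple(rot[i][2] for i in range(3)))
```

For both periods the third component of that axis is about -0.999, i.e. the front camera looks roughly along the negative z axis of the rear camera, as expected for two cameras facing opposite directions.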

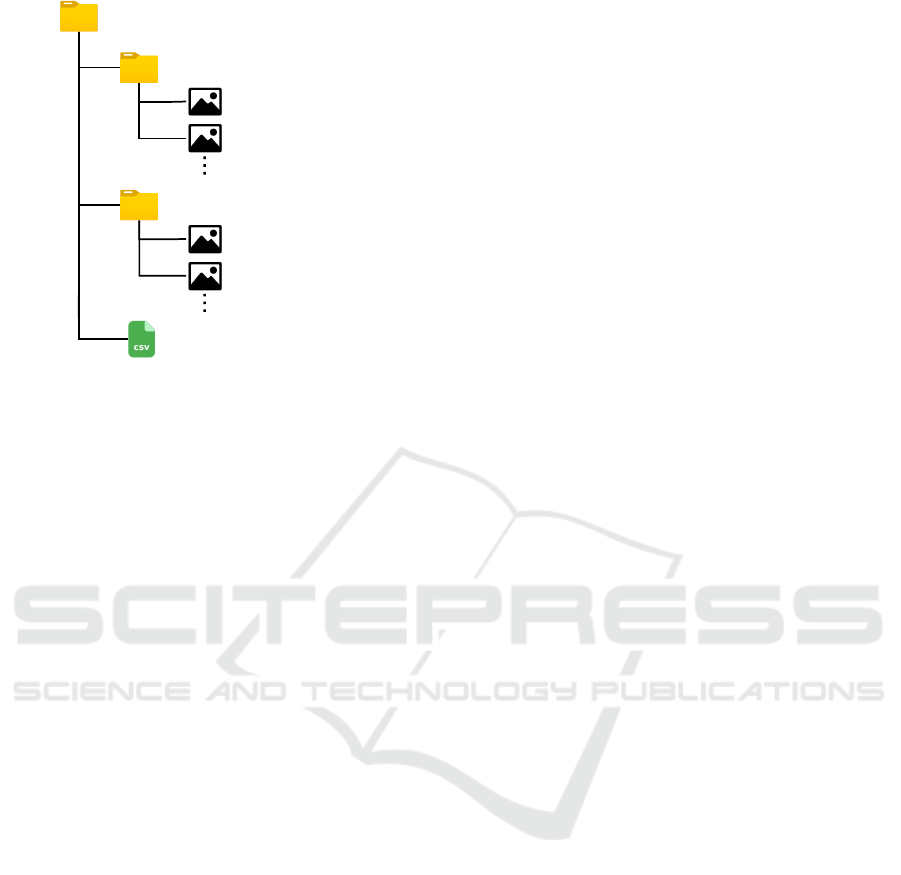

For non-ROS users, we provide a Python script (extract_rosbag.py) that extracts images and odometry data from the rosbag files. The script takes a list of one or more rosbag files as arguments and generates one folder per file, with the structure presented in Figure 6. Each folder contains a CSV (comma-separated values) file named odometry.csv and two sub-folders, camera_back/ and camera_front/. The CSV file contains the absolute poses of the vehicle computed from wheel odometry, with 8 entries per pose: the translation t_x, t_y and t_z, the quaternion rotation q_x, q_y, q_z and q_w, and the corresponding timestamp in nanoseconds. The folders camera_back/ and camera_front/ contain the images of the corresponding camera, each image being named after its acquisition timestamp in nanoseconds.
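A minimal reader for odometry.csv might look as follows. The column order follows the description above (t_x, t_y, t_z, q_x, q_y, q_z, q_w, timestamp in nanoseconds); whether the file starts with a header line is not stated, so the sketch skips a first row that is not numeric, and it assumes integer timestamps. The helper names are hypothetical; they compute the travelled distance and duration of a sequence, which can be compared with the approximately 200 m length of a sequence given above.

```python
import csv
import math
from typing import Iterable, List, NamedTuple


class Pose(NamedTuple):
    tx: float
    ty: float
    tz: float
    qx: float
    qy: float
    qz: float
    qw: float
    stamp_ns: int


def _is_number(text: str) -> bool:
    try:
        float(text)
        return True
    except ValueError:
        return False


def read_odometry(lines: Iterable[str]) -> List[Pose]:
    """Parse rows of t_x, t_y, t_z, q_x, q_y, q_z, q_w, timestamp (ns)."""
    poses: List[Pose] = []
    for index, row in enumerate(csv.reader(lines)):
        if not row:
            continue
        if index == 0 and not _is_number(row[0]):
            continue  # optional header line (assumption)
        *values, stamp = row[:8]
        poses.append(Pose(*(float(v) for v in values), int(stamp)))
    return poses


def path_length(poses: List[Pose]) -> float:
    """Sum of 3D distances between consecutive positions (metres, assuming ROS units)."""
    return sum(
        math.dist((a.tx, a.ty, a.tz), (b.tx, b.ty, b.tz))
        for a, b in zip(poses, poses[1:])
    )


def duration_s(poses: List[Pose]) -> float:
    """Time between the first and last pose, in seconds."""
    return (poses[-1].stamp_ns - poses[0].stamp_ns) / 1e9 if poses else 0.0


if __name__ == "__main__":
    with open("2020-02-05-18-37-10/odometry.csv", newline="") as f:
        poses = read_odometry(f)
    print(f"{len(poses)} poses, {path_length(poses):.1f} m, {duration_s(poses):.1f} s")
```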


[Figure 6 diagram: folder 2020-02-05-18-37-10/ containing camera_back/ and camera_front/ (each holding <timestamp>.png images) and odometry.csv.]

Figure 6: Structure of a folder generated by the Python script (extract_rosbag.py) when extracting the content of the rosbag file "2020-02-05-18-37-10.bag".
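Because images and odometry poses are both indexed by nanosecond timestamps, a natural next step is to attach to each image the odometry pose closest in time. The sketch below does this with a binary search over sorted timestamps. The function names and the 50 ms default tolerance are illustrative assumptions, not part of extract_rosbag.py.

```python
import bisect
import os
from typing import Dict, List, Sequence


def image_timestamps(folder: str) -> List[int]:
    """Sorted timestamps (ns) of the <timestamp>.png files in a camera folder."""
    return sorted(
        int(os.path.splitext(name)[0])
        for name in os.listdir(folder)
        if name.endswith(".png")
    )


def nearest_index(sorted_stamps: Sequence[int], query: int) -> int:
    """Index of the timestamp closest to query (ties go to the earlier one)."""
    if not sorted_stamps:
        raise ValueError("empty timestamp list")
    i = bisect.bisect_left(sorted_stamps, query)
    if i == 0:
        return 0
    if i == len(sorted_stamps):
        return i - 1
    before, after = sorted_stamps[i - 1], sorted_stamps[i]
    return i if after - query < query - before else i - 1


def match_images_to_poses(
    image_stamps: Sequence[int],
    pose_stamps: Sequence[int],
    max_gap_ns: int = 50_000_000,  # illustrative 50 ms tolerance (assumption)
) -> Dict[int, int]:
    """Map each image timestamp to the index of the nearest pose within max_gap_ns."""
    matches: Dict[int, int] = {}
    for stamp in image_stamps:
        j = nearest_index(pose_stamps, stamp)
        if abs(pose_stamps[j] - stamp) <= max_gap_ns:
            matches[stamp] = j
    return matches
```

Images whose nearest pose is farther away than the tolerance are simply left unmatched, which avoids silently pairing an image with a stale pose at the start or end of a recording.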

4 CONCLUSION

In this paper, we have presented a new dataset containing challenging environmental conditions for long-term localization. This dataset was recorded over two years and contains more than 100 sequences. We made it available to the community in the hope that it will be useful to other researchers working on long-term localization. It has been used in our previous work (Bouaziz et al., 2021) to evaluate the performance of different localization approaches in dynamic environments.

ACKNOWLEDGEMENTS

This work has been sponsored by the French government research program "Investissements d’Avenir" through the IMobS3 Laboratory of Excellence (ANR-10-LABX-16-01) and the RobotEx Equipment of Excellence (ANR-10-EQPX-44), by the European Union through the Regional Competitiveness and Employment program 2014-2020 (ERDF - AURA region) and by the AURA region.

REFERENCES

Bansal, A., Badino, H., and Huber, D. (2014). Understanding how camera configuration and environmental conditions affect appearance-based localization. In 2014 IEEE Intelligent Vehicles Symposium Proceedings, pages 800–807. IEEE.

Barreto, J. P. (2006). Unifying image plane liftings for central catadioptric and dioptric cameras. In Imaging Beyond the Pinhole Camera, pages 21–38. Springer.

Blanco-Claraco, J.-L., Moreno-Duenas, F.-A., and González-Jiménez, J. (2014). The Málaga urban dataset: High-rate stereo and lidar in a realistic urban scenario. The International Journal of Robotics Research, 33(2):207–214.

Bouaziz, Y., Royer, E., Bresson, G., and Dhome, M. (2021). Keyframes retrieval for robust long-term visual localization in changing conditions. To appear in 2021 IEEE 19th World Symposium on Applied Machine Intelligence and Informatics (SAMI). IEEE.

Carlevaris-Bianco, N., Ushani, A. K., and Eustice, R. M. (2015). University of Michigan North Campus long-term vision and lidar dataset. International Journal of Robotics Research, 35(9):1023–1035.

Geiger, A., Lenz, P., Stiller, C., and Urtasun, R. (2013). Vision meets robotics: The KITTI dataset. The International Journal of Robotics Research, 32(11):1231–1237.

Lébraly, P. (2012). Etalonnage de caméras à champs disjoints et reconstruction 3D: Application à un robot mobile. PhD thesis.

Maddern, W., Pascoe, G., Linegar, C., and Newman, P. (2017). 1 Year, 1000km: The Oxford RobotCar Dataset. The International Journal of Robotics Research (IJRR), 36(1):3–15.

Pandey, G., McBride, J. R., and Eustice, R. M. (2011). Ford campus vision and lidar data set. The International Journal of Robotics Research, 30(13):1543–1552.

Quigley, M., Conley, K., Gerkey, B., Faust, J., Foote, T., Leibs, J., Wheeler, R., and Ng, A. Y. (2009). ROS: an open-source Robot Operating System. In ICRA Workshop on Open Source Software, volume 3, page 5. Kobe, Japan.

Sattler, T., Maddern, W., Toft, C., Torii, A., Hammarstrand, L., Stenborg, E., Safari, D., Okutomi, M., Pollefeys, M., Sivic, J., et al. (2018). Benchmarking 6DOF outdoor visual localization in changing conditions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8601–8610.

Siegwart, R., Nourbakhsh, I. R., and Scaramuzza, D. (2011). Introduction to autonomous mobile robots. MIT Press.

Suenderhauf, N. (2015). The VPRiCE challenge 2015 – visual place recognition in changing environments.

Sun, P., Kretzschmar, H., Dotiwalla, X., Chouard, A., Patnaik, V., Tsui, P., Guo, J., Zhou, Y., Chai, Y., Caine, B., et al. (2020). Scalability in perception for autonomous driving: Waymo open dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2446–2454.

Xie, J., Kiefel, M., Sun, M.-T., and Geiger, A. (2016). Semantic instance annotation of street scenes by 3D to 2D label transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3688–3697.

Yan, Z., Sun, L., Krajnik, T., and Ruichek, Y. (2020). EU long-term dataset with multiple sensors for autonomous driving. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).
