CogToM-CST: An implementation of the Theory of Mind for the Cognitive Systems Toolkit

Fabio Grassiotto (1, a), Esther Luna Colombini (2, b), Alexandre Simões (3, c), Ricardo Gudwin (4, d) and Paula Dornhofer Paro Costa (4, e)

1 Hub de Inteligência Artificial e Arquiteturas Cognitivas (H.IAAC), Eldorado Research Institute, Campinas - SP, Brazil
2 Institute of Computing, University of Campinas (UNICAMP), Campinas - SP, Brazil
3 Institute of Science and Technology, São Paulo State University (Unesp), Sorocaba - SP, Brazil
4 School of Electrical and Computer Engineering, University of Campinas (UNICAMP), Campinas - SP, Brazil

Keywords:

Autism, Cognitive Architectures, Artificial Intelligence.

Abstract:

This article proposes CogToM-CST, an implementation of a Theory of Mind (ToM) model using the Cognitive Systems Toolkit (CST). Psychological research establishes that ToM deficits are usually associated with mind-blindness, the inability to attribute mental states to others, a typical trait of autism. This cognitive divergence prevents the proper interpretation of other individuals’ intentions and beliefs in a given scenario, typically resulting in social interaction problems. Inspired by the psychological Theory of Mind model proposed by Baron-Cohen, this paper presents a computational implementation that explores the usefulness of concepts common in robotics, such as Affordances, Positioning, and Intention Detection, to augment the effectiveness of the proposed architecture. We verify the results by evaluating both a canonical False-Belief task and a subset of tasks from the Facebook bAbI dataset.

1 INTRODUCTION

The objective of this work is to propose a novel cognitive architecture that implements a computational model of the Theory of Mind using the Cognitive Systems Toolkit (CST) and its reference architecture (Paraense et al., 2016). Our earlier work defined the basis for such an architecture (Grassiotto and Costa, 2021).

Our main motivation is Autism Spectrum Disorder (ASD), a biologically based neurodevelopmental disorder, and the psychological models proposed over the last 30 years to explain the reasons behind the social interaction issues typically experienced by autistic individuals (Klin, 2006). ASD is characterized by marked and sustained impairment in social interaction, deviance in communication, and restricted or stereotyped patterns of behaviors and interests (World Health Organization, 1993).

a https://orcid.org/0000-0003-1885-842X
b https://orcid.org/0000-0002-1534-5744
c https://orcid.org/0000-0003-0467-3133
d https://orcid.org/0000-0002-9666-3954
e https://orcid.org/0000-0002-1457-6305

Research in technologies for ASD has focused on diagnosis, monitoring, assessment, and intervention tools, interactive or virtual environments, mobile and wearable applications, educational devices, games, and therapeutic resources (Boucenna et al., 2014; Picard, 2009; Kientz et al., 2019; Jaliaawala and Khan, 2020). Among the current efforts to help people on the autism spectrum, there is a lack of computational assistive systems that help them with their impairments in social interaction.

In our understanding, such systems should be designed to analyze environmental and visual social cues that are not readily interpreted by individuals on the spectrum, providing expert advice on the best alternative for interaction and improving social integration outcomes.

We believe cognitive architectures can help bridge this gap by creating computational modules, inspired by the human mind, that are involved in the process of social interaction. Such a cognitive architecture should be able to create assumptions (which we will call beliefs) about the environment and situations in order to provide expert advice.

The British psychologist Simon Baron-Cohen proposed, in his doctoral thesis, the mind-blindness theory of autism, later published as a book (Baron-Cohen, 1997). His work proposed the existence of a mindreading system and established that the cognitive delays associated with autism are related to deficits in developing such a system. This mindreading system is directly related to the concept of Theory of Mind (ToM), the innate ability to attribute mental states to oneself and others and to understand that others have beliefs and desires distinct from one’s own (Premack and Woodruff, 1978).

Grassiotto, F., Colombini, E., Simões, A., Gudwin, R. and Costa, P.
CogToM-CST: An implementation of the Theory of Mind for the Cognitive Systems Toolkit.
DOI: 10.5220/0010836300003116
In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 3, pages 462-469
ISBN: 978-989-758-547-0; ISSN: 2184-433X
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Research has shown that individuals with ASD show deficits in ToM (Kimhi, 2014; Baraka et al., 2019; Baron-Cohen, 2001). These deficits can be demonstrated in several test tasks, in particular false-belief tasks, i.e., tasks designed to evaluate children’s capacity to understand other people’s mental states (Baron-Cohen, 1990).

This work contributes to the literature by proposing CogToM-CST, a novel cognitive architecture designed as an assistive tool for individuals on the autism spectrum, which implements a computational ToM mechanism.

2 RELATED WORK

2.1 The Cognitive Systems Toolkit (CST)

The Cognitive Systems Toolkit is a general toolkit for the construction of cognitive architectures (Paraense et al., 2016). Inspired by Baars’s Global Workspace Theory (GWT) of consciousness and by the Clarion and LIDA cognitive architectures, among others, CST uses many of the concepts introduced there (Sun, 2006; Baars and Franklin, 2009).

GWT establishes that human cognition is achieved through a series of small, special-purpose processors of an unconscious nature (Baars and Franklin, 2007). Processing “Coalitions” (i.e., alliances of processors) compete for access to a limited-capacity global workspace.
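To make the idea of coalitions competing for a limited-capacity workspace more concrete, the following is a minimal Python sketch under simplifying assumptions; it is not taken from CST or LIDA. The `Coalition` class, the numeric `activation` values, and the `compete` function are hypothetical, and the workspace simply admits the `capacity` most activated coalitions.

```python
from dataclasses import dataclass


@dataclass
class Coalition:
    """A hypothetical alliance of unconscious processors (illustrative only)."""
    name: str
    activation: float  # assumed strength with which the coalition competes


def compete(coalitions: list[Coalition], capacity: int = 1) -> list[Coalition]:
    """Admit the `capacity` most activated coalitions into the global workspace."""
    ranked = sorted(coalitions, key=lambda c: c.activation, reverse=True)
    return ranked[:capacity]


if __name__ == "__main__":
    candidates = [
        Coalition("eye-direction", 0.4),
        Coalition("movement", 0.9),
        Coalition("sound", 0.2),
    ]
    winners = compete(candidates, capacity=1)
    print([c.name for c in winners])  # ['movement']
```

Real GWT-inspired systems use richer competition and broadcast mechanisms; the sort-and-truncate rule here only captures the "limited capacity" aspect.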

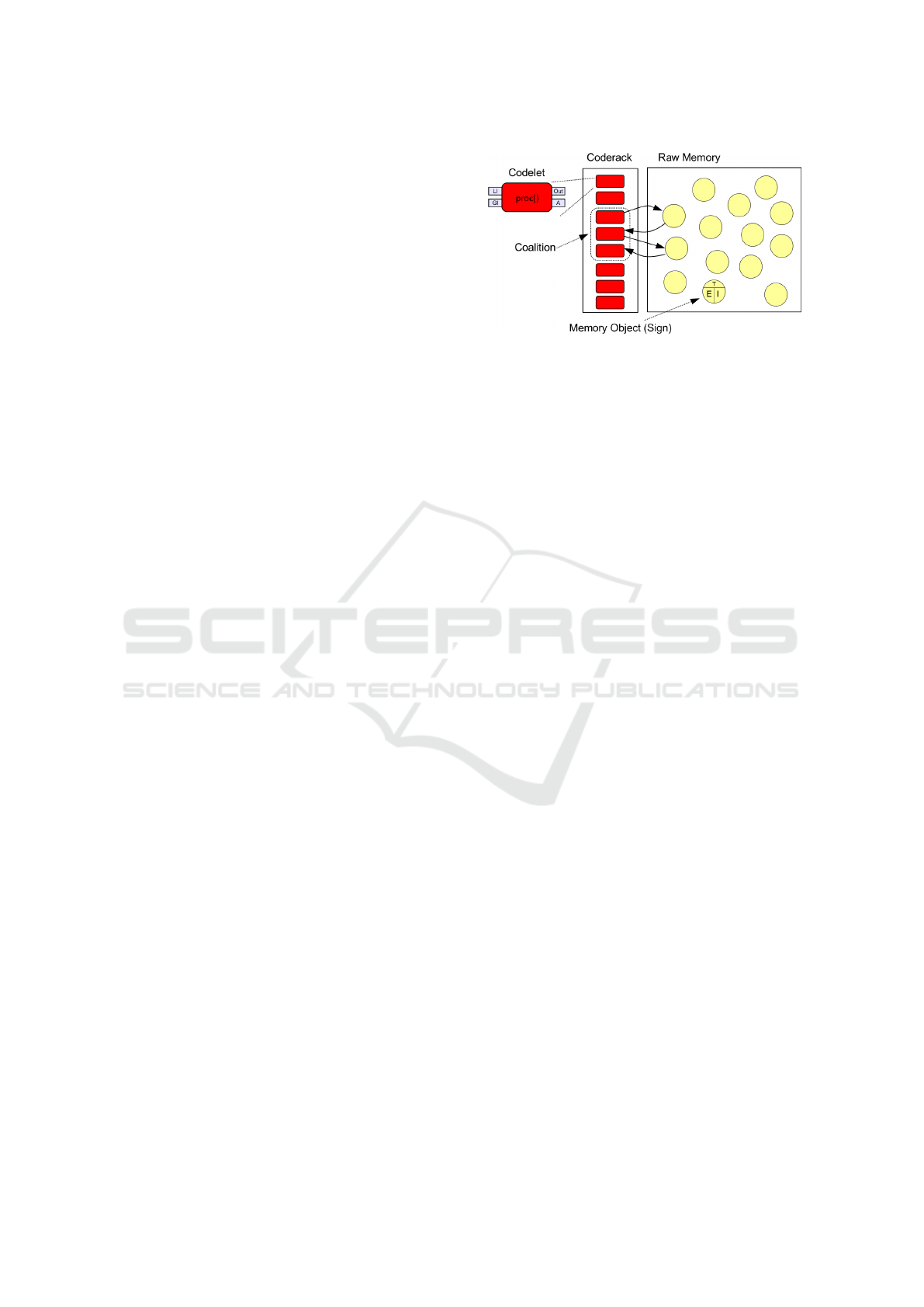

The core concepts of the CST Core architecture are Codelets and Memory Objects, as can be seen in Figure 1.

Codelets are defined as micro-agents: small pieces of non-blocking code with a specialized function, designed to be executed continuously and cyclically to implement cognitive functions in the agent’s mind. Codelets are stored in a container known as the Coderack.

Memory Objects are generic information holders for the storage of any auxiliary or episodic information required by the cognitive architecture. Memory Objects can also be organized in Memory Containers for grouping purposes.

Figure 1: The CST Core Architecture. From https://cst.fee.unicamp.br/.

In the CST Core, there is a strong coupling between Codelets and Memory Objects. Memory Objects hold any information required for a Codelet to run and receive the data output by the Codelet. Similarly to Codelets, all Memory Objects and Memory Containers are stored in a container known as the Raw Memory.
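The coupling between Codelets and Memory Objects can be sketched as follows. This is a simplified Python illustration, not the CST API: the `MemoryObject`, `RawMemory`, `Codelet`, and `Coderack` classes below are hypothetical stand-ins, and `run_cycle` executes each codelet once, sequentially, whereas CST runs codelets continuously and cyclically.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class MemoryObject:
    """Generic information holder (hypothetical stand-in for a CST Memory Object)."""
    name: str
    info: Any = None


@dataclass
class RawMemory:
    """Container holding every Memory Object in the mind."""
    objects: dict[str, MemoryObject] = field(default_factory=dict)

    def create(self, name: str, info: Any = None) -> MemoryObject:
        mo = MemoryObject(name, info)
        self.objects[name] = mo
        return mo


@dataclass
class Codelet:
    """Small, non-blocking procedure: reads its input MOs, writes its output MOs."""
    inputs: list[MemoryObject]
    outputs: list[MemoryObject]
    proc: Callable[[list[Any]], list[Any]]

    def step(self) -> None:
        results = self.proc([mo.info for mo in self.inputs])
        for mo, value in zip(self.outputs, results):
            mo.info = value


@dataclass
class Coderack:
    """Container holding every Codelet; run_cycle executes each one once."""
    codelets: list[Codelet] = field(default_factory=list)

    def run_cycle(self) -> None:
        for codelet in self.codelets:
            codelet.step()


if __name__ == "__main__":
    raw = RawMemory()
    seen = raw.create("seen", ["Sally", "Ball"])
    count = raw.create("count")
    rack = Coderack([Codelet([seen], [count], lambda xs: [len(xs[0])])])
    rack.run_cycle()
    print(count.info)  # 2
```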

2.2 Autism and False-belief Tasks

CogToM is inspired by the long-term goal of designing an expert system to assist people on the autism spectrum with social interaction. To that end, it is essential to understand one of the key deficits psychologists observe in autistic individuals: their performance in False-Belief Tasks.

False-Belief tasks are a type of task used in the study of ToM to test children. The objective of the test is to check whether the child understands that another person does not possess the same knowledge as herself.

Baron-Cohen, Leslie, and Frith proposed the Sally-Anne test as a mechanism to infer the ability of autistic and non-autistic children to attribute mental states to other people, regardless of the IQ level of the children being tested (Baron-Cohen et al., 1985).

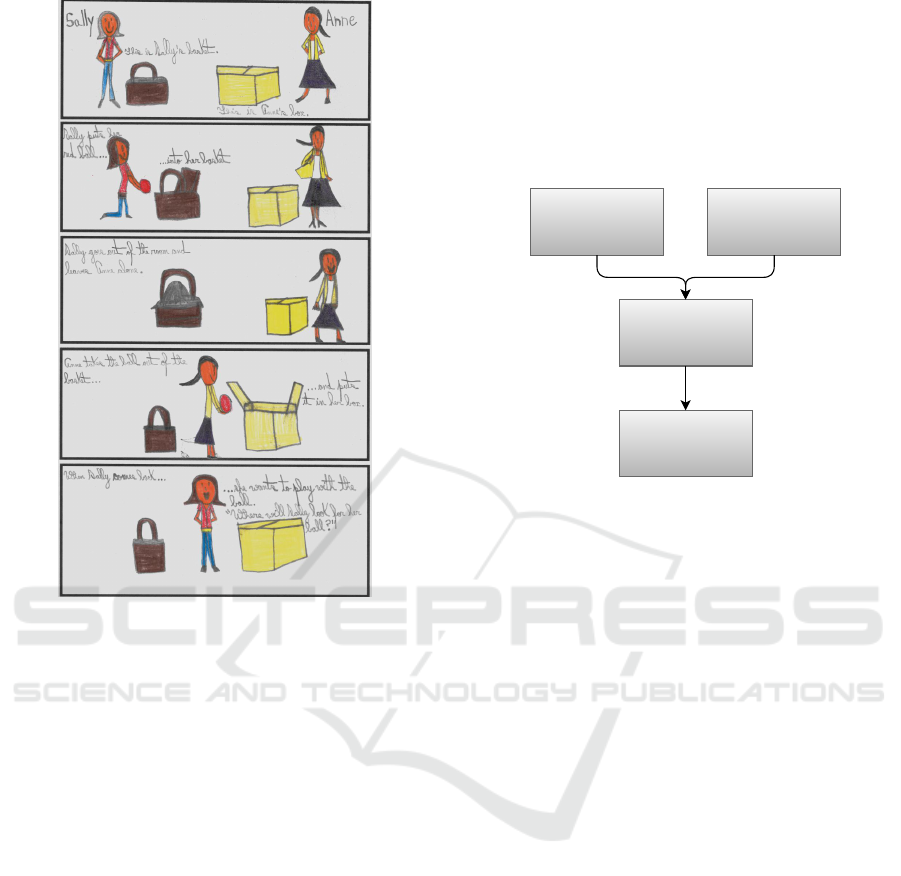

In the test, a sequence of images (Figure 2) is presented to the children. At the start of the sequence, in the top rectangle, two girls (Sally and Anne) are in a room with a basket (Sally’s) and a box (Anne’s). Sally takes a ball and hides it in her basket (second rectangle), then leaves the room (third rectangle). After that, Anne takes the ball from Sally’s basket and puts it in her box (fourth rectangle). Sally then returns to the room (fifth rectangle). The child is then asked, “Where will Sally look for her ball?”. Most autistic children answer that Sally would look for the ball in the box, whereas control subjects correctly answer that Sally would look for the ball in the basket.


Figure 2: The Sally-Anne test for false-belief. Adapted from (Baron-Cohen et al., 1985), drawing by Alice Grassiotto.

The results presented in that article supported the hypothesis that autistic children generally fail to employ ToM because they are unable to represent mental states. As a consequence, autistic subjects cannot attribute beliefs to others, which puts them at a disadvantage in predicting other people’s behavior. This lack of predictive ability is thought to cause deficits in the social skills of people on the autism spectrum, since it hinders their ability to face the challenges of social interaction.

Examples of abilities linked to the capacity to understand each other’s mental states include, among others, the ability to empathize and the skills of coordination and cooperation (Schaafsma et al., 2015; Sally and Hill, 2006). ToM allows us to generate expectations about the behavior of others and, based on these expectations, to guide our decision-making process.
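The reasoning that the Sally-Anne test probes can be sketched in a few lines. This Python sketch assumes a hypothetical, simplified event model (the `run_sally_anne` function and its `present` set are illustrative): an agent’s belief about the ball’s location is updated only when that agent witnesses the move, while the true location is always updated.

```python
def run_sally_anne() -> tuple[dict[str, str], str]:
    """Replay the Sally-Anne story, updating beliefs only for agents who are present."""
    present = {"Sally", "Anne"}
    beliefs: dict[str, str] = {}  # agent -> believed location of the ball
    true_location = "floor"

    def move_ball(to: str) -> None:
        nonlocal true_location
        true_location = to
        for agent in present:  # only witnesses update their belief
            beliefs[agent] = to

    move_ball("basket")       # Sally hides the ball in her basket
    present.discard("Sally")  # Sally leaves the room
    move_ball("box")          # Anne moves the ball to her box
    present.add("Sally")      # Sally returns
    return beliefs, true_location


beliefs, truth = run_sally_anne()
print(beliefs["Sally"], beliefs["Anne"], truth)  # basket box box
```

A child who passes the test answers with Sally’s belief (`basket`); answering with the true location (`box`) corresponds to the failure described above.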

2.3 Baron-Cohen Mindreading

Since it is generally accepted that the failure to employ a theory of mind causes deficits in the social skills of people on the autism spectrum, we defined our approach as the implementation of a computational model equivalent to the inner workings of ToM in humans.

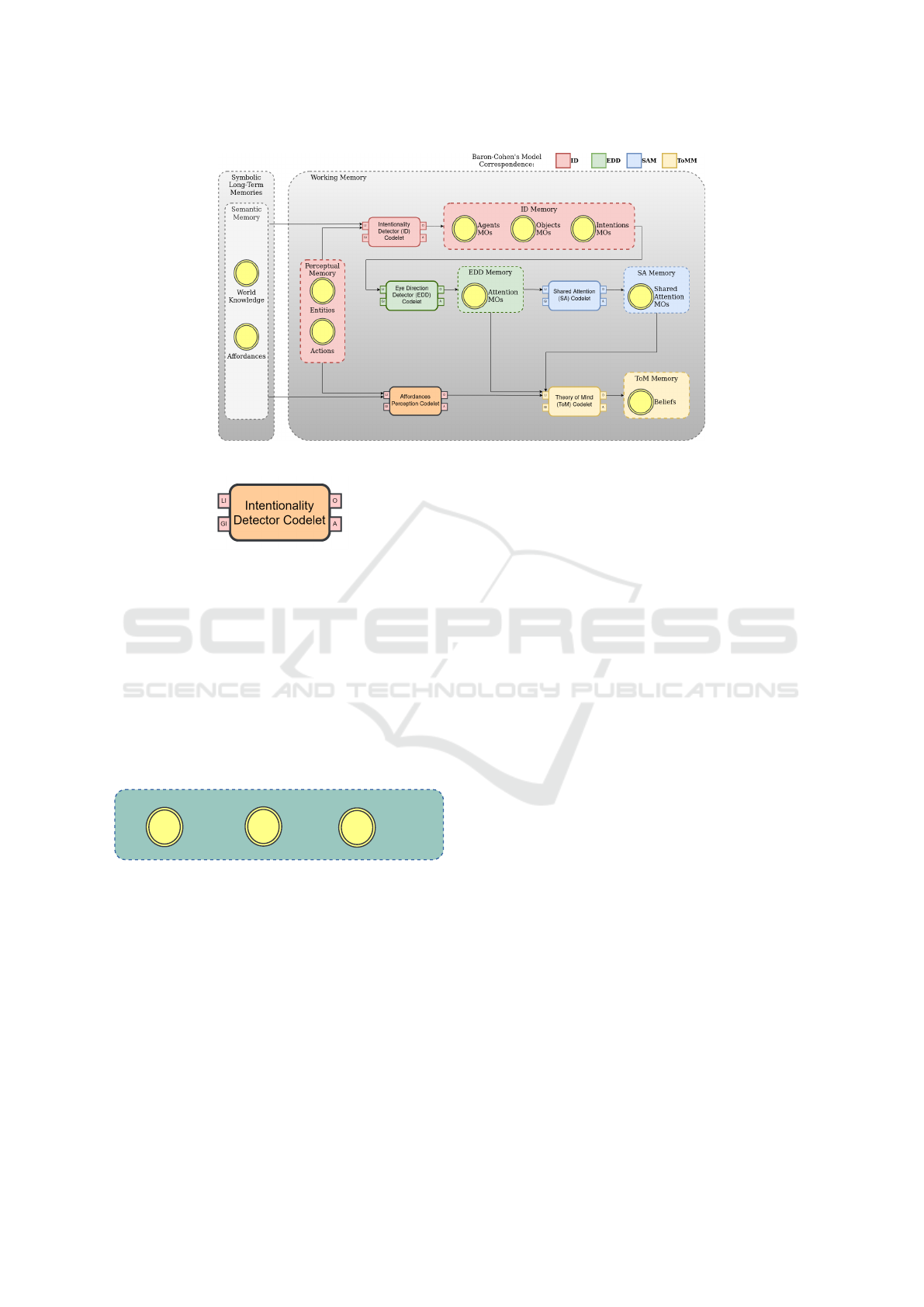

Thus, our proposal for the CogToM cognitive architecture follows a biologically-inspired model based on the mindreading model proposed by Baron-Cohen (Baron-Cohen, 1997), as can be seen in Figure 3.

Figure 3: The ToM Model extracted from (Baron-Cohen, 1997), with the Intentionality Detector (ID), Eye Direction Detector (EDD), Shared Attention Mechanism (SAM), and Theory of Mind Mechanism (ToMM).

This model seeks to understand the mindreading process by proposing a set of four separate components:

• Intentionality Detector (ID) is a perceptual device that can interpret movements and distinguish agents from objects, assigning goals and desires to them.

• Eye Direction Detector (EDD) is a visual system able to detect the presence of eyes or eye-like stimuli in others, to compute whether the eyes are directed towards the self or towards something else, and to infer that, if the eyes are directed towards something, the agent to whom the eyes belong is seeing that something.

• Shared Attention Mechanism (SAM) builds internal representations that specify relationships between an agent, the self, and a third object. By constructing such representations, SAM can verify that an agent and the self are paying attention to the same object.

• Theory of Mind Mechanism (ToMM) completes the agent’s development of mindreading by representing the agent’s mental states, including, among others, the states of pretending, thinking, knowing, believing, imagining, guessing, and deceiving.

The components of the mindreading model are not isolated, as interactions among them are required to build the internal representations of EDD, SAM, and ToMM.
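As an illustration of how EDD output can feed SAM, the following minimal Python sketch assumes that perception already provides a hypothetical `gaze` mapping from each viewer (including `"Self"`) to the entity its eyes are directed at. `eye_direction` turns this into (agent, target) attention pairs, following EDD’s inference that an agent sees what its eyes point to, and `shared_attention` keeps the pairs where another agent and the self attend to the same object. Both function names are illustrative.

```python
def eye_direction(gaze: dict[str, str]) -> set[tuple[str, str]]:
    """EDD sketch: an agent whose eyes point at something is assumed to see it."""
    return {(agent, target) for agent, target in gaze.items()}


def shared_attention(
    attention: set[tuple[str, str]], self_name: str = "Self"
) -> set[tuple[str, str]]:
    """SAM sketch: (agent, object) pairs where the agent and the self look at the same object."""
    self_targets = {target for agent, target in attention if agent == self_name}
    return {
        (agent, target)
        for agent, target in attention
        if agent != self_name and target in self_targets
    }


gaze = {"Self": "Ball", "Sally": "Ball", "Anne": "Box"}
print(shared_attention(eye_direction(gaze)))  # {('Sally', 'Ball')}
```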

ICAART 2022 - 14th International Conference on Agents and Artificial Intelligence


3 AFFORDANCES

The psychologist J.J. Gibson introduced the concept of affordances in his 1979 work, where he defines the affordances of the environment as what it offers the animal, what it provides or furnishes, either for good or ill (Gibson, 2014).

Since then, this concept has been extended by researchers in the AI field, as described by McClelland (2017). Researchers have found applications for affordances in robotics as a way to encode the relationships between actions, objects, and effects (Montesano et al., 2008; Şahin et al., 2007). Classic examples are that a ball affords catching, or that a box affords hiding something inside.

In CogToM, the concept of affordances is used in the environmental analysis to assign properties to objects and to the environment in which they are currently situated.
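Since CogToM stores affordances as properties of entities, a dictionary lookup is a natural representation. The Python sketch below is illustrative only: the `AFFORDANCES` table and the `affordances_of` and `can` helpers are hypothetical. The labels (`Exists`, `Contains`, `Pickup`, `Hidden In`) are borrowed from the belief listings in Section 6, but their assignment to entities here is an assumption.

```python
# Hypothetical affordance table: entity -> properties it offers (illustrative values).
AFFORDANCES: dict[str, list[str]] = {
    "Ball": ["Pickup", "Hidden In"],
    "Basket": ["Contains"],
    "Box": ["Contains"],
    "Sally": ["Exists"],
    "Anne": ["Exists"],
}


def affordances_of(entity: str) -> list[str]:
    """Return the affordances of an entity, or an empty list if it is unknown."""
    return AFFORDANCES.get(entity, [])


def can(entity: str, affordance: str) -> bool:
    """True if the entity offers the given affordance."""
    return affordance in affordances_of(entity)


print(affordances_of("Box"), can("Ball", "Pickup"), can("Box", "Pickup"))
# ['Contains'] True False
```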

4 INTENTION DETECTION

In the field of robotics, Intention Understanding is seen as a requirement for human-machine interaction (Yu et al., 2015). By understanding environmental cues, a robot can deduce a possible human intention by considering the relationship between objects and actions. Some models for this have been proposed using affordance-based intention recognition. One example of Intention Understanding is detecting whether Sally, in the Sally-Anne test described earlier, intends to put her ball inside her basket.

The CogToM architecture proposes using external systems capable of understanding human intention to augment the environmental analysis it requires.
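A toy version of affordance-based intention recognition, applied to the Sally example above, might look like the following Python sketch. It is neither the method of Yu et al. (2015) nor CogToM’s external system; the `infer_intention` function, its inputs (what the agent holds and where it is heading), the `AFFORDANCES` table, and the two rules are hypothetical simplifications.

```python
from typing import Optional

# Hypothetical affordances used by the rules below.
AFFORDANCES: dict[str, set[str]] = {
    "Ball": {"Pickup"},
    "Basket": {"Contains"},
    "Box": {"Contains"},
}


def infer_intention(agent: str, holding: Optional[str], moving_towards: str) -> Optional[str]:
    """Guess an intention from what the agent holds and which entity it approaches."""
    target_affords = AFFORDANCES.get(moving_towards, set())
    if holding is not None and "Contains" in target_affords:
        return f"{agent} intends to put {holding} in {moving_towards}"
    if holding is None and "Pickup" in target_affords:
        return f"{agent} intends to pick up {moving_towards}"
    return None  # no rule matched


print(infer_intention("Sally", "Ball", "Basket"))  # Sally intends to put Ball in Basket
print(infer_intention("Sally", None, "Ball"))      # Sally intends to pick up Ball
print(infer_intention("Anne", None, "Box"))        # None
```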

5 PROPOSED ARCHITECTURE

5.1 CogToM-CST

We designed this cognitive architecture, using the CST toolkit, as an agent with decision-making functionality that acts as an AI Observer. The objective of this Observer is to pass a false-belief task by implementing the mindreading model and integrating it with the processing of affordances and intentions. It relies on inputs from an external system, in the form of a visual camera system capable of identifying agents and objects, eye direction, human intention, and positioning. Affordances are seen as properties of the entities (objects and agents) in the system.

The outputs of the system (Beliefs) are textual representations of the mental state of an agent as perceived by the Observer.

5.2 CST Components

Using the CST Toolkit, the architecture is modeled by defining Codelets and Memory Objects, as shown in Figure 4. The figure uses a color scheme to illustrate the correspondence between the computational architecture and the mind model: red blocks correspond to Baron-Cohen’s ID module shown in Figure 3, green blocks to the EDD module, blue blocks to SAM, and pale yellow blocks to ToMM.

5.2.1 Codelets

Codelets are the processes executed within each simulation step and are modeled after Baron-Cohen’s psychological model, where each mind module corresponds to a Codelet. Codelets have local (LI) and global (GI) inputs, and provide an activation (A) and a set of outputs (O) to Memory Objects (MOs), as can be seen in Figure 5.

• The Intentionality Detector Codelet identifies which entities in a scene are agents or objects, based on movement and action detection, creating memories for the Agents, their Intentions, and the Objects.

This Codelet is activated by the visual identification of movement in a scene and retrieves information from semantic memory for object identification.

• The Eye Direction Detector Codelet identifies eye direction using the agents and objects created by the Intentionality Detector Codelet, and creates and attaches that information to Attention Memory Objects.

This Codelet is activated by the ID Codelet.

• The Shared Attention Mechanism Codelet detects shared attention from the objects created by the EDD Codelet, and then creates and attaches this information to Shared Attention Memory Objects.

This Codelet is activated by the EDD Codelet.

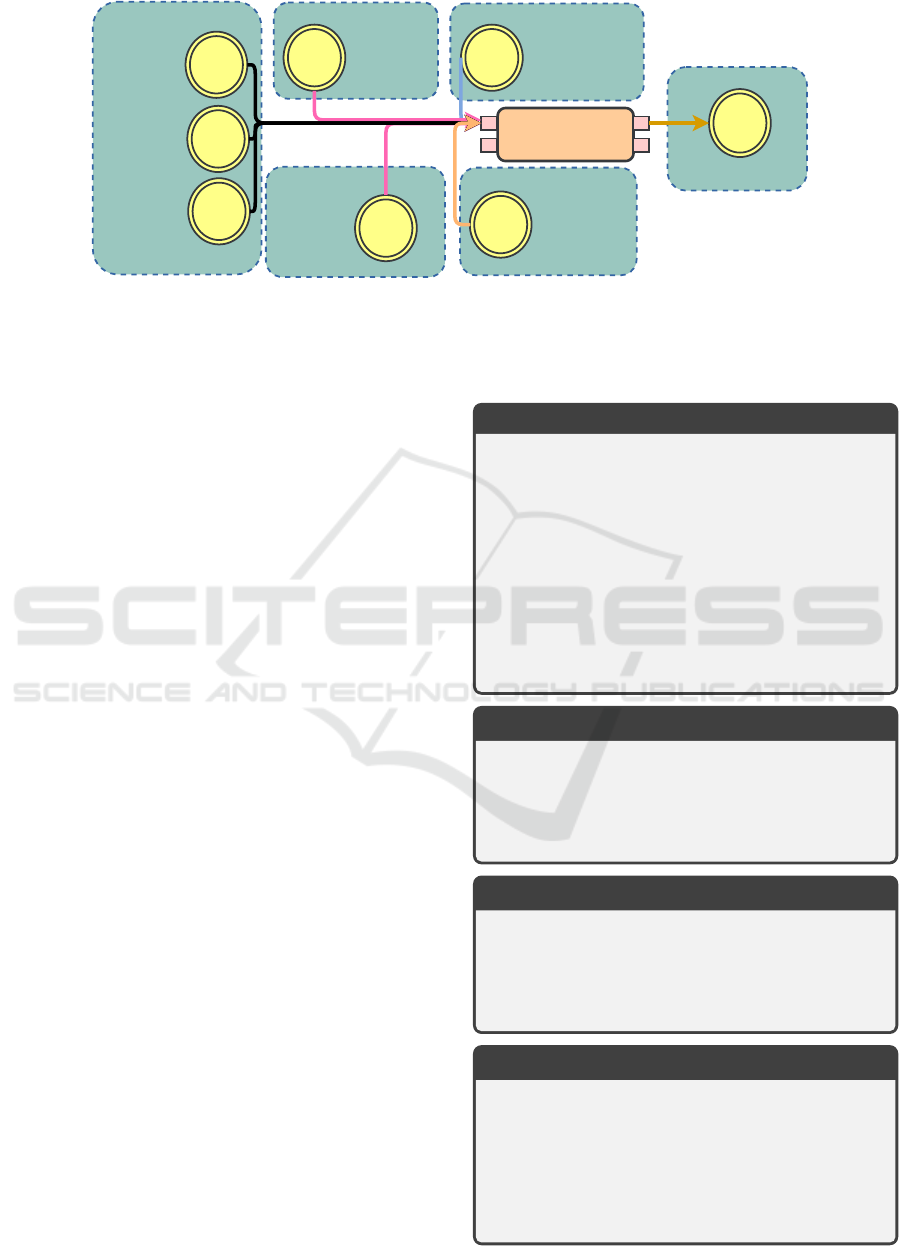

• The Theory of Mind Codelet works as an integrator of all the information in working memory and creates Belief Memory Objects.

This Codelet is activated by all other Codelets.

The Affordances Perception Codelet and the Positioning Perception Codelet do not exist in the mind model. Their purpose is to create affordance and position properties for the objects in the scene, based on the camera input, the Agents, the Objects, and the Intentions associated with them.

Figure 4: CogToM-CST Proposed Architecture.

Figure 5: Example Codelet.
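The Codelet structure of Figure 5 (local inputs, global inputs, activation, outputs) can be sketched as a small Python class. The `MindCodelet` base class, its `threshold` parameter, and the `IntentionalityDetector` subclass are hypothetical, and the ID logic is reduced to a single assumed rule: entities observed moving are agents, and all other entities are objects.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class MindCodelet:
    """Hypothetical codelet with local inputs (LI), global inputs (GI),
    an activation level (A), and outputs (O), mirroring Figure 5."""
    name: str
    local_inputs: dict[str, Any] = field(default_factory=dict)
    global_inputs: dict[str, Any] = field(default_factory=dict)
    activation: float = 0.0
    outputs: dict[str, Any] = field(default_factory=dict)
    threshold: float = 0.5  # assumed firing threshold

    def proc(self) -> None:
        """Module-specific processing; overridden by concrete codelets."""
        raise NotImplementedError

    def step(self) -> bool:
        """Run proc only when the activation reaches the threshold."""
        if self.activation < self.threshold:
            return False
        self.proc()
        return True


class IntentionalityDetector(MindCodelet):
    """ID sketch: entities that moved are agents, the rest are objects."""

    def proc(self) -> None:
        entities = self.local_inputs["entities"]
        moved = self.global_inputs["moved"]
        self.outputs["agents"] = [e for e in entities if e in moved]
        self.outputs["objects"] = [e for e in entities if e not in moved]


idc = IntentionalityDetector(
    "ID",
    local_inputs={"entities": ["Sally", "Anne", "Ball", "Box"]},
    global_inputs={"moved": {"Sally", "Anne"}},
    activation=1.0,
)
idc.step()
print(idc.outputs)  # {'agents': ['Sally', 'Anne'], 'objects': ['Ball', 'Box']}
```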

5.2.2 Memory Objects

Memory in this system is modeled after the concepts of semantic and episodic memory. Memory Objects (and Memory Containers) are the generic information holders in memory, modeled after the storage of data required by each module of the mind model, as can be seen in Figure 6.

Figure 6: Example Memory Containers (ID Memory, holding the Intentions, Agents, and Objects MOs).

Semantic memory stores world knowledge (not in the scope of this implementation) and Affordances. All the other memories of the mind model are represented in episodic memory.

Affordances Memory Objects are Memory Objects that retain the interaction properties of agents and objects as a dictionary lookup.

Positioning Memory Objects are Memory Objects created from the camera input to inform the current location of agents and objects in a scene.

Activation Memory Objects are special-purpose Memory Objects used in this architecture to synchronize the execution of Codelets. The CST Toolkit was designed for multithreading, whereas the architecture we are modeling requires sequential execution of the Codelet processes to produce the Beliefs we are looking for.
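One way to picture Activation Memory Objects is as signals that let otherwise independent threads run in a fixed order. In the Python sketch below, each hypothetical activation Memory Object is modeled as a `threading.Event`; the codelet names and the strict ID → EDD → SAM → ToM chain are simplifying assumptions (in the architecture, the ToM Codelet is activated by all other Codelets), and appending to `log` stands in for each codelet’s real work.

```python
import threading

ORDER = ["ID", "EDD", "SAM", "ToM"]


def run_pipeline() -> list[str]:
    """Run one thread per codelet; activation events force ID -> EDD -> SAM -> ToM."""
    log: list[str] = []
    lock = threading.Lock()
    # One hypothetical "activation memory object" per codelet, modeled as an Event.
    activation = {name: threading.Event() for name in ORDER}

    def codelet(index: int) -> None:
        name = ORDER[index]
        activation[name].wait()  # block until the upstream codelet fires
        with lock:
            log.append(name)  # stand-in for the codelet's processing
        if index + 1 < len(ORDER):
            activation[ORDER[index + 1]].set()  # activate the next codelet

    # Start threads in reverse order: the events, not the start order, set the sequence.
    threads = [threading.Thread(target=codelet, args=(i,)) for i in reversed(range(len(ORDER)))]
    for t in threads:
        t.start()
    activation["ID"].set()  # new camera input: activate the first codelet
    for t in threads:
        t.join()
    return log


print(run_pipeline())  # ['ID', 'EDD', 'SAM', 'ToM']
```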

Memory Objects in ID memory are the agents, their intentions, and the objects in the environment. Each of these entities is modeled as a Memory Container (MC) in the architecture, producing an Agents MC, an Objects MC, and an Intentions MC.

Memory Objects in EDD memory store the attention of each agent to objects or other agents in the environment, modeled as Attention MCs.

Memory Objects in SAM memory store which objects or agents have the shared attention of two or more agents, modeled as Shared Attention MCs.

Memory Objects in ToM Memory are Beliefs, the main purpose of this cognitive architecture, modeled as Belief MCs.

5.2.3 Belief Construction

Beliefs in ToM Memory are modeled as text descriptions of the mental states the Observer will provide. The system considers two sets of beliefs: beliefs for each of the agents in the scene, and self-beliefs, which are associated with the knowledge the Observer has about the environment.

<AGENT, BELIEVES|KNOWS, OBJECT, AFFORDANCE, TARGET OBJECT>

Where:

• AGENT is the main agent to which the mental state applies, for example, Sally. In the case of a self-belief, the agent is the Observer itself.

• BELIEVES|KNOWS is the mental state assigned to the agent. Various mental states could be considered, including pretending, thinking, knowing, believing, imagining, guessing, and deceiving. In this system, the mental state BELIEVES is used for agents’ beliefs, whereas KNOWS is used for self-beliefs about the environment.

• OBJECT is the object of the belief, for example, Ball.

• AFFORDANCE is the main property, or affordance, of the object. For example, a Box may Contain something.

• TARGET OBJECT is the target object of the affordance, when applicable. For example, a Box may contain a Ball.

Figure 7: ToM Codelet View. The Theory of Mind Codelet (inputs LI and GI, activation A, outputs O) is connected to ID Memory (Agents, Objects, and Intentions MOs), EDD Memory (Attention MOs), SAM Memory (Shared Attention MOs), Semantic Memory (Affordances MOs), Positioning Memory (Positioning MOs), and ToM Memory (Beliefs).
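The belief tuple above maps naturally onto a small record type. The following Python sketch is an assumption, not the CogToM-CST source: the `Belief` dataclass and its field names are hypothetical, and `__str__` is chosen so that the printed form matches the listings in Section 6, including `None` for a missing target object.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Belief:
    """<AGENT, BELIEVES|KNOWS, OBJECT, AFFORDANCE, TARGET OBJECT> as a record."""
    agent: str
    state: str  # "BELIEVES" for agents, "KNOWS" for the Observer's self-beliefs
    obj: str
    affordance: str
    target: Optional[str] = None

    def __post_init__(self) -> None:
        if self.state not in ("BELIEVES", "KNOWS"):
            raise ValueError(f"unsupported mental state: {self.state}")

    def __str__(self) -> str:
        # A missing target is printed as "None", as in the Results listings.
        return f"{self.agent} {self.state} {self.obj} {self.affordance} {self.target}"


print(Belief("Sally", "BELIEVES", "Ball", "Hidden In", "Basket"))
print(Belief("Anne", "BELIEVES", "Box", "Contains"))
print(Belief("Observer", "KNOWS", "Sally", "IS AT", "Room"))
```

This prints `Sally BELIEVES Ball Hidden In Basket`, `Anne BELIEVES Box Contains None`, and `Observer KNOWS Sally IS AT Room`.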

As shown in Figure 7, Beliefs are built from the ToM model proposed in the literature, which provides the set of agents, objects, intentions, and attentions in the scene through the ID, EDD, and SAM modules and their Memory Containers. From this initial output, the architecture integrates the affordances from semantic memory. Each combination of one agent and one object defines a Belief object. Based on this set, composed of agent, object, affordance, and intention Memory Objects, a textual representation of that Belief is created in ToM Memory as a new Memory Object.

Source code for the implementation of the CogToM cognitive architecture is available at (Grassiotto and Costa, 2020).
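The construction step described above (one belief per combination of an agent and another entity, plus Observer self-beliefs) can be sketched in Python as follows. The `build_beliefs` function and its inputs are hypothetical: `attended` stands in for the attention information from EDD and SAM, `affordance` and `target` for semantic memory, and `position` for the Positioning MOs.

```python
from itertools import product
from typing import Optional


def build_beliefs(
    agents: list[str],
    objects: list[str],
    attended: set[tuple[str, str]],
    affordance: dict[str, str],
    target: dict[str, Optional[str]],
    position: dict[str, str],
) -> list[str]:
    """Combine each attended (agent, entity) pair into a belief, then add Observer self-beliefs."""
    entities = agents + objects
    beliefs = []
    for agent, entity in product(agents, entities):
        if agent != entity and (agent, entity) in attended:
            beliefs.append(f"{agent} BELIEVES {entity} {affordance[entity]} {target.get(entity)}")
    for entity in entities:
        beliefs.append(f"Observer KNOWS {entity} IS AT {position[entity]}")
    return beliefs


for belief in build_beliefs(
    agents=["Mary", "John"],
    objects=[],
    attended={("Mary", "John"), ("John", "Mary")},
    affordance={"Mary": "Exists", "John": "Exists"},
    target={},
    position={"Mary": "Office", "John": "Hallway"},
):
    print(belief)
```

With the bAbI Task 1 story, and assuming Mary and John are aware of each other, this prints the same four beliefs listed for that task in Section 6.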

6 RESULTS

Two sets of validation tests were considered: the canonical false-belief task as described in the literature, and a subset of tasks from the bAbI dataset from Facebook Research (Weston et al., 2015). The bAbI dataset is a set of 20 simple toy tasks for evaluating question answering and reading comprehension.

Canonical False-Belief Test

Sally and Anne are in the room. Basket, box and ball are on the floor.

Sally reaches for the ball.

Sally puts the ball in the basket.

Sally exits the room.

Anne reaches for the basket.

Anne gets the ball from the basket.

Anne puts the ball in the box.

Anne exits the room, and Sally enters.

Sally searches for the ball in the room.

Task 1: Single Supporting Fact

Mary went to the bathroom.

John moved to the hallway.

Mary travelled to the office.

Where is Mary? A:office

Task 2: Two Supporting Facts

John is in the playground.

John picked up the football.

Bob went to the kitchen.

Where is the football? A:playground

Task 3: Three Supporting Facts

John picked up the apple.

John went to the office.

John went to the kitchen.

John dropped the apple.

Where was the apple before the kitchen? A:office


Beliefs for the canonical false-belief test are provided below.

Sally BELIEVES Anne Exists None

Sally BELIEVES Basket Contains None

Sally BELIEVES Box Contains None

Sally BELIEVES Ball Hidden In Basket

Anne BELIEVES Sally Exists None

Anne BELIEVES Basket Contains None

Anne BELIEVES Box Contains None

Anne BELIEVES Ball OnHand Of Anne

Observer KNOWS Sally IS AT Room

Observer KNOWS Anne IS AT Outside

Observer KNOWS Basket IS AT Room

Observer KNOWS Box IS AT Room

Observer KNOWS Ball IS AT Room

Since Sally was not present in the room while Anne took the ball from the basket and hid it, she still believes the ball is in the basket. Therefore, the system we designed can pass the false-belief task.

Facebook bAbI Task 1 consists of a question to identify the location of an agent, given a single supporting fact (Mary travelled to the office):

Mary BELIEVES John Exists None

John BELIEVES Mary Exists None

Observer KNOWS Mary IS AT Office

Observer KNOWS John IS AT Hallway

By introducing the concept of Observer beliefs for the location of the agent, the beliefs could be produced correctly.

Facebook bAbI Task 2 is quite similar to the first one and produces similar results:

John BELIEVES Bob Exists None

John BELIEVES Football Pickup None

Bob BELIEVES John Exists None

Bob BELIEVES Football Pickup None

Observer KNOWS John IS AT Playground

Observer KNOWS Bob IS AT Kitchen

Observer KNOWS Football IS AT Playground
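Answering the Task 1 and Task 2 questions from these listings only requires the Observer’s KNOWS beliefs. The Python sketch below assumes the beliefs are available as the plain strings shown above; the `where_is` helper is hypothetical and returns the location stated in the last matching belief.

```python
from typing import Optional


def where_is(entity: str, beliefs: list[str]) -> Optional[str]:
    """Answer 'Where is X?' from the last 'Observer KNOWS X IS AT ...' belief."""
    prefix = f"Observer KNOWS {entity} IS AT "
    answer = None
    for belief in beliefs:
        if belief.startswith(prefix):
            answer = belief[len(prefix):]
    return answer


task1 = [
    "Mary BELIEVES John Exists None",
    "John BELIEVES Mary Exists None",
    "Observer KNOWS Mary IS AT Office",
    "Observer KNOWS John IS AT Hallway",
]
task2 = [
    "John BELIEVES Bob Exists None",
    "John BELIEVES Football Pickup None",
    "Bob BELIEVES John Exists None",
    "Bob BELIEVES Football Pickup None",
    "Observer KNOWS John IS AT Playground",
    "Observer KNOWS Bob IS AT Kitchen",
    "Observer KNOWS Football IS AT Playground",
]
print(where_is("Mary", task1))      # Office
print(where_is("Football", task2))  # Playground
```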

Facebook bAbI Task 3 requires a temporal record of the beliefs created at each step of the simulation. The system can identify temporal succession through the internal steps in which beliefs are created:

Simulation running mind step: 2

John BELIEVES Apple Pickup None

Observer KNOWS John IS AT Office

Observer KNOWS Apple IS AT Office

Simulation running mind step: 3

John BELIEVES Apple Pickup None

Observer KNOWS John IS AT Kitchen

Observer KNOWS Apple IS AT Kitchen

Simulation running mind step: 4

John BELIEVES Apple Dropped None

Observer KNOWS John IS AT Kitchen

Observer KNOWS Apple IS AT Kitchen

Therefore, by comparing beliefs between simulation steps, it is possible to answer the question posed by this test.
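The comparison across simulation steps can be sketched in Python as follows, assuming the beliefs of each step are kept in a hypothetical `steps` dictionary keyed by step number (filled here with the step 2 to 4 listings above). The `location_before` helper is illustrative: it scans the Observer’s beliefs about an entity in step order and returns the last different location recorded before the entity first appears at the given place.

```python
from typing import Optional


def location_before(entity: str, place: str, steps: dict[int, list[str]]) -> Optional[str]:
    """Return where `entity` was, per Observer beliefs, just before it first reached `place`."""
    prefix = f"Observer KNOWS {entity} IS AT "
    previous = None
    for step in sorted(steps):
        current = next((b[len(prefix):] for b in steps[step] if b.startswith(prefix)), None)
        if current == place and previous is not None and previous != place:
            return previous
        if current is not None:
            previous = current
    return None


steps = {
    2: ["John BELIEVES Apple Pickup None",
        "Observer KNOWS John IS AT Office", "Observer KNOWS Apple IS AT Office"],
    3: ["John BELIEVES Apple Pickup None",
        "Observer KNOWS John IS AT Kitchen", "Observer KNOWS Apple IS AT Kitchen"],
    4: ["John BELIEVES Apple Dropped None",
        "Observer KNOWS John IS AT Kitchen", "Observer KNOWS Apple IS AT Kitchen"],
}
print(location_before("Apple", "Kitchen", steps))  # Office
```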

7 CONCLUSION

The Cognitive Systems Toolkit provides an opportunity to organize cognitive architectures following well-defined structures. In the process of reimplementing the proposed architecture, it became clear that the new organization offers gains for modeling cognitive systems and states.

CogToM was originally designed as a platform to validate whether a computational system can pass false-belief tasks by implementing a psychological model of the human mind, and we identified the need to integrate further information about the world in the form of affordances and human intentions. Reusing other architectures built on the CST toolkit will be of value to our research.

Even though this system has been designed with a focus on autism spectrum disorder, we believe the results obtained could be applied to the fields of robotic social interaction, social agents, and others.

We were able to reproduce the earlier results with this cognitive architecture after moving its internal concepts to the new toolkit. The system remained generic enough to allow testing with simple tasks such as those in the Facebook bAbI dataset.

A future plan for this architecture is to create generic components for a Theory of Mind module, so that the implementation can be reused in other systems based on the CST toolkit.

ACKNOWLEDGEMENTS

This project is part of the Hub for Artificial Intelligence and Cognitive Architectures (H.IAAC - Hub de Inteligência Artificial e Arquiteturas Cognitivas). We acknowledge the support of PPI-Softex/MCTI by grant 01245.013778/2020-21 through the Brazilian Federal Government.


REFERENCES

Baars, B. J. and Franklin, S. (2007). An architectural model of conscious and unconscious brain functions: Global workspace theory and IDA. Neural Networks, 20(9):955–961.

Baars, B. J. and Franklin, S. (2009). Consciousness is computational: The LIDA model of global workspace theory. International Journal of Machine Consciousness, 1(01):23–32.

Baraka, M., El-Dessouky, H. M., Abd El-Wahed, E. E., Amer, S. S. A., et al. (2019). Theory of mind: its development and its relation to communication disorders: a systematic review. Menoufia Medical Journal, 32(1):25.

Baron-Cohen, S. (1990). Autism: A specific cognitive disorder of “Mind-Blindness”. International Review of Psychiatry, 2(1):81–90.

Baron-Cohen, S. (1997). Mindblindness: An essay on autism and theory of mind. MIT Press.

Baron-Cohen, S. (2001). Theory of mind in normal development and autism. Prisme, 34(1):74–183.

Baron-Cohen, S., Leslie, A. M., Frith, U., et al. (1985). Does the autistic child have a “theory of mind”? Cognition, 21(1):37–46.

Boucenna, S., Narzisi, A., Tilmont, E., Muratori, F., Pioggia, G., Cohen, D., and Chetouani, M. (2014). Interactive technologies for autistic children: A review. Cognitive Computation, 6(4):722–740.

Gibson, J. J. (2014). The ecological approach to visual perception: classic edition. Psychology Press.

Grassiotto, F. and Costa, P. D. P. (2020). CogToM-CST source code. https://github.com/AI-Unicamp/CogTom-cst/. Last checked on Aug 27, 2021.

Grassiotto, F. and Costa, P. D. P. (2021). CogToM: A cognitive architecture implementation of the theory of mind. In ICAART (2), pages 546–553.

Jaliaawala, M. S. and Khan, R. A. (2020). Can autism be catered with artificial intelligence-assisted intervention technology? A comprehensive survey. Artificial Intelligence Review, 53(2):1039–1069.

Kientz, J. A., Hayes, G. R., Goodwin, M. S., Gelsomini, M., and Abowd, G. D. (2019). Interactive technologies and autism. Synthesis Lectures on Assistive, Rehabilitative, and Health-Preserving Technologies, 9(1):i–229.

Kimhi, Y. (2014). Theory of mind abilities and deficits in autism spectrum disorders. Topics in Language Disorders, 34(4):329–343.

Klin, A. (2006). Autism and Asperger syndrome: an overview. Brazilian Journal of Psychiatry, 28:s3–s11.

McClelland, T. (2017). AI and affordances for mental action. environment, 1:127.

Montesano, L., Lopes, M., Bernardino, A., and Santos-Victor, J. (2008). Learning object affordances: from sensory–motor coordination to imitation. IEEE Transactions on Robotics, 24(1):15–26.

Paraense, A. L., Raizer, K., de Paula, S. M., Rohmer, E., and Gudwin, R. R. (2016). The cognitive systems toolkit and the CST reference cognitive architecture. Biologically Inspired Cognitive Architectures, 17:32–48.

Picard, R. W. (2009). Future affective technology for autism and emotion communication. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1535):3575–3584.

Premack, D. and Woodruff, G. (1978). Does the chimpanzee have a theory of mind? Behavioral and Brain Sciences, 1(4):515–526.

Şahin, E., Çakmak, M., Doğar, M. R., Uğur, E., and Üçoluk, G. (2007). To afford or not to afford: A new formalization of affordances toward affordance-based robot control. Adaptive Behavior, 15(4):447–472.

Sally, D. and Hill, E. (2006). The development of interpersonal strategy: Autism, theory-of-mind, cooperation and fairness. Journal of Economic Psychology, 27(1):73–97.

Schaafsma, S. M., Pfaff, D. W., Spunt, R. P., and Adolphs, R. (2015). Deconstructing and reconstructing theory of mind. Trends in Cognitive Sciences, 19(2):65–72.

Sun, R. (2006). The CLARION cognitive architecture: Extending cognitive modeling to social simulation. Cognition and Multi-Agent Interaction, pages 79–99.

Weston, J., Bordes, A., Chopra, S., Rush, A. M., van Merriënboer, B., Joulin, A., and Mikolov, T. (2015). Towards AI-complete question answering: A set of prerequisite toy tasks. arXiv preprint arXiv:1502.05698.

World Health Organization (1993). The ICD-10 classification of mental and behavioural disorders: diagnostic criteria for research, volume 2. World Health Organization.

Yu, Z., Kim, S., Mallipeddi, R., and Lee, M. (2015). Human intention understanding based on object affordance and action classification. In 2015 International Joint Conference on Neural Networks (IJCNN), pages 1–6. IEEE.
