Construction of a Support Tool for User Reading of Privacy Policies and Assessment of its User Impact

Sachiko Kanamori (1), Hirotsune Sato (2, 1), Naoya Tabata (3, 1) and Ryo Nojima (1)

(1) National Institute of Information and Communications Technology, Koganei, Tokyo, Japan
(2) Shinshu University, Matsumoto, Nagano, Japan
(3) Aichi Gakuin University, Nisshin, Aichi, Japan

Keywords:

Privacy Policy, Machine Learning, Natural Language Processing, Domain Adaptation.

Abstract:

Today’s service providers must notify users of their privacy policies and obtain user consent in advance, and frameworks that impose these requirements have become mandatory. However, although prior consent was originally designed to protect user privacy, obtaining it has become a mere formality. This problem stems from the gap between service providers’ privacy policies, which prioritize the observance of laws and guidelines, and users’ expectations of these policies; in particular, users wish to understand easily how their data will be handled. To reduce this gap, we provide a tool that supports users in reading privacy policies written in Japanese. We assess the effectiveness of the tool through experiments and follow-up questionnaires.

1 INTRODUCTION

It has long been considered mandatory for service providers to have frameworks for presenting privacy policies and obtaining prior consent, and posting a privacy policy is now a requirement. To ensure that users have at least looked at a policy, some service providers require users to scroll to the end of the privacy policy before the consent button can be clicked. Other service providers present a link to their privacy policy whenever a user navigates to their web page. As a result, users are frequently asked to consent to the acquisition of their personal data.

Presenting privacy policies and acquiring consent are intended to protect users, but they do not guarantee genuine consent (McDonald and Cranor, 2008). User consent is now considered largely perfunctory: many users simply agree to a policy and use the service without reading it (Cate, 2010), and many would rather start using a service immediately than read its privacy policy (Kanamori et al., 2017).

Whereas service providers prioritize compliance with laws and guidelines, users are more interested in how their data will be handled (Reidenberg et al., 2014), (Rao et al., 2016). The privacy policies that service providers write resemble legal documents, which general users do not easily understand (Proctor et al., 2008); users prefer information written in simple sentences.

For these reasons, consent has become a mere formality, and ensuring that users actually read and comprehend privacy policies will require reading assistance tools. According to the White Paper 2020 (MIC, 2020), issued by the Ministry of Internal Affairs and Communications of Japan in December 2020, 89.8% of Japanese people reported using the Internet, and approximately 110 million Japanese people see privacy policies on a daily basis. Hence, reading assistance tools for Japanese privacy policies would be highly useful. In the present paper, we introduce our privacy policy user understanding support tool, which helps Japanese users understand privacy policies and be aware of what they are consenting to.

1.1 Our Contribution

We designed the privacy policy user understanding support tool for Japanese users who do not usually read privacy policies. To our knowledge, no such tool exists for Japanese as yet. Unlike English, Japanese text does not separate words with spaces, so morphological analysis by machine learning (segmenting sentences into words and assigning parts of speech) is required before the data can be analyzed. Moreover, because privacy policies contain many legal terms, users can have difficulty interpreting them. We therefore designed our tool to extract the unique expressions (named entities) that describe information handling and to present them to users separately.
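To illustrate why segmentation has to come first, the toy sketch below splits an unspaced Japanese sentence by greedy longest-match lookup against a tiny, hypothetical dictionary (`DICTIONARY` and `longest_match_segment` are ours, for illustration only). It is not the method the tool uses: MeCab, described in Section 3.2, instead scores a lattice of dictionary candidates with a trained statistical model, which copes far better with ambiguous strings.

```python
def longest_match_segment(text: str, dictionary: set, max_len: int = 8) -> list:
    """Greedy left-to-right longest-match segmentation (toy illustration only)."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest dictionary candidate first; fall back to one character.
        for length in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + length]
            if candidate in dictionary or length == 1:
                tokens.append(candidate)
                i += length
                break
    return tokens


# Hypothetical mini-dictionary; real analyzers ship dictionaries with far more entries.
DICTIONARY = {"個人情報", "個人", "情報", "を", "収集", "し", "ます"}

# "個人情報を収集します" = "(We) collect personal information."
print(longest_match_segment("個人情報を収集します", DICTIONARY))
# → ['個人情報', 'を', '収集', 'し', 'ます']
```

Greedy matching always commits to the longest string it finds, so it cannot recover when a shorter split would give a better overall segmentation; that weakness is one reason lattice-based statistical analyzers are preferred in practice.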


Kanamori, S., Sato, H., Tabata, N. and Nojima, R.
Construction of a Support Tool for User Reading of Privacy Policies and Assessment of its User Impact.
DOI: 10.5220/0010847500003120
In Proceedings of the 8th International Conference on Information Systems Security and Privacy (ICISSP 2022), pages 412-419
ISBN: 978-989-758-553-1; ISSN: 2184-4356
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1.2 Paper Organization

The remainder of the paper is organized as follows. Section 2 introduces related work, and Section 3 describes the privacy policy user understanding support tool we constructed. Sections 4 and 5 describe how we created the machine learning training data for privacy policies and how we conducted the survey evaluation of the tool, respectively. Section 6 presents the analysis results, which we discuss in Section 7. Section 8 presents the conclusions and future work.

2 RELATED WORK

To prevent consent acquisition from degrading into a mechanical process, researchers have made many proposals (Kelley et al., 2009), (Cranor et al., 2006). These proposals fall into two groups: methods for service providers to present their privacy policies in plain language that users can easily understand (Category 1), and tools that help users understand the policies (Category 2). The tool we constructed is a Category 2 tool. Researchers have proposed Category 2 tools for privacy policies written in English, but to our knowledge, no such tool has been developed for Japanese. We discuss tools for Japanese privacy policies in Section 2.2.1.

2.1 Proposals for Service Providers

Individual countries have begun to mandate privacy policies and prior user consent by law. Examples include the US Consumer Privacy Bill of Rights, the General Data Protection Regulation in Europe (EU, n.d.), and the revised Act on the Protection of Personal Information in Japan (PPC, n.d.). In Japan, the Personal Information Protection Commission supervises the handling of personal information. Under the revised Act, a business operator collecting personal information must either publicly announce the purpose of use, the management, and any provision of that information to third parties, or notify the person whose information is being collected in advance.

However, although the legal framework has improved, the acquisition of consent has lost its substance (Cate, 2010). Many proposals for helping service providers write easily understandable privacy policies have been published (Kelley et al., 2009), (Cranor et al., 2006).

Some companies have revised their privacy poli-

cies for easier user interpretation. These revisions

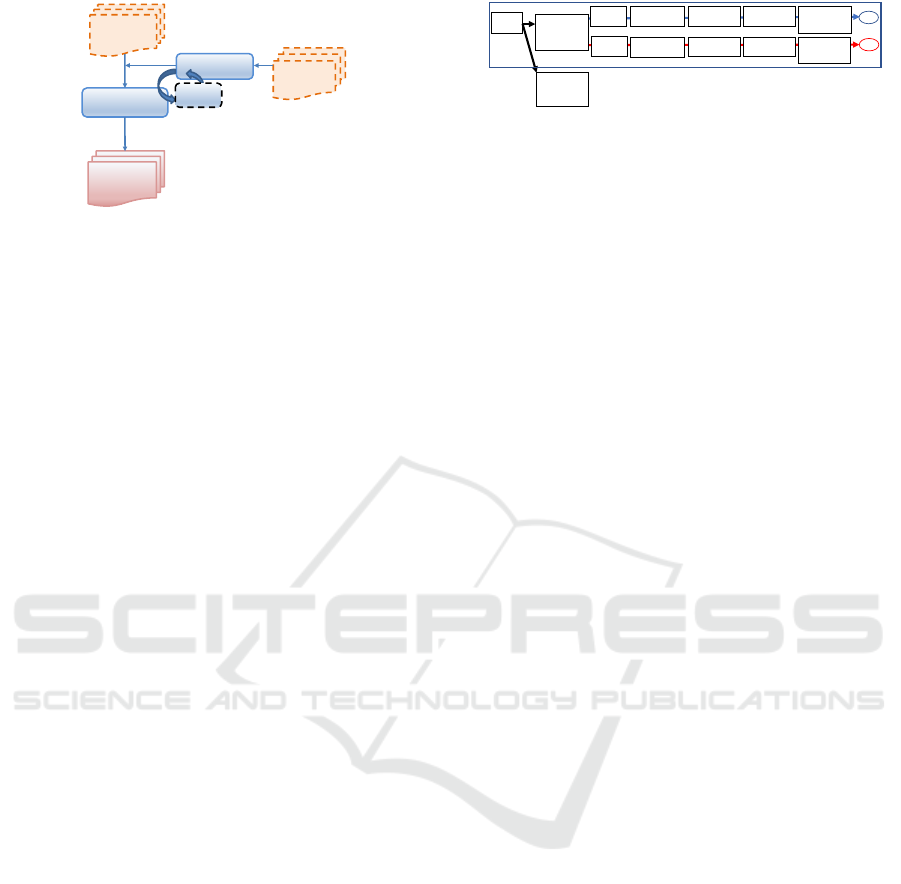

Automatic

analysis

Information

to be collected

Browsing history

IP address

Server

Browser

User

Summarize of privacy policy

Privacy

policy

Figure 1: Overview of the Privacy Policy User Understand-

ing Support Tool.

are sometimes accompanied by videos and illustra-

tions. Nevertheless, because not all privacy policies

are revised in this way, assistance tools are needed for

users reading privacy policies.

2.2 Tools for the User

Several tools (Harkous et al., 2018), (Harkous et al., 2016) can assist users in reading privacy policies written in English. The authors of (Harkous et al., 2018) proposed “Polisis”, a deep-learning framework for automatically analyzing privacy policies, and the authors of (Harkous et al., 2016) introduced “PriBot”, an interactive privacy bot that conveys the content of privacy policies and handles consent acquisition through a chatbot that converses with the user. However, both tools interpret privacy policies written in English and rely on the privacy policy corpus of (Wilson et al., 2016) for machine learning. Therefore, they are unavailable to Japanese users.

2.2.1 Tools for Reading Japanese Privacy Policies

Our proposed method extracts information from privacy policies written in natural Japanese. Among related studies on extraction methods, (Hasegawa et al., 2004) introduced a technique that extracts information about predetermined events or matters from large amounts of text, such as newspaper articles, and stores the extracted information in a database. Information extraction from general text relies on basic technologies such as syntactic analysis. Although syntactic analysis is well researched, highly developed, and accurate, it loses accuracy when sentences contain many domain-specific words (such as technical terms). To address this kind of domain mismatch, the authors of (Axelrod et al., 2011) used a small amount of in-domain text to select relevant sentences from a large general-domain corpus (domain adaptation). In the present study, we treat privacy policies as a target domain for adaptation, focusing on the information they state will be collected, and constructed the user support tool from our own training data.
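For readers unfamiliar with the data-selection idea cited above, the following is a simplified sketch of the cross-entropy difference criterion that Axelrod et al. (2011) build on: each general-domain sentence s is scored by H_in(s) − H_gen(s), and the lowest-scoring sentences are treated as pseudo in-domain data. We swap the n-gram language models of that work for add-one-smoothed unigram models, assume whitespace-tokenized input (Japanese text would first be segmented, for example with MeCab), and use hypothetical toy corpora; this is not a description of how the present tool's training data were built.

```python
import math
from collections import Counter


class UnigramLM:
    """Add-one-smoothed unigram language model over whitespace tokens."""

    def __init__(self, sentences, vocab):
        self.counts = Counter(tok for s in sentences for tok in s.split())
        self.total = sum(self.counts.values())
        self.vocab_size = len(vocab)

    def cross_entropy(self, sentence):
        """Average negative log2-probability per token of a non-empty sentence."""
        tokens = sentence.split()
        log_prob = sum(
            math.log2((self.counts[t] + 1) / (self.total + self.vocab_size))
            for t in tokens
        )
        return -log_prob / len(tokens)


def select_in_domain(in_domain, general, k):
    """Return the k general sentences with the lowest H_in(s) - H_gen(s)."""
    general = [s for s in general if s.split()]  # skip empty sentences
    vocab = {tok for s in in_domain + general for tok in s.split()}
    lm_in = UnigramLM(in_domain, vocab)
    lm_gen = UnigramLM(general, vocab)
    ranked = sorted(general, key=lambda s: lm_in.cross_entropy(s) - lm_gen.cross_entropy(s))
    return ranked[:k]


# Hypothetical toy corpora.
in_domain = ["we collect your ip address", "we share cookie data with third parties"]
general = ["the weather is nice today", "we collect cookie data", "the team won the match"]
print(select_in_domain(in_domain, general, 1))  # → ['we collect cookie data']
```

Subtracting the general-domain cross-entropy keeps sentences that are merely short or full of common words from ranking highly; only sentences that the in-domain model finds relatively more probable rise to the top.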


3 PRIVACY POLICY USER UNDERSTANDING SUPPORT TOOL

3.1 Overview

Figure 1 shows an overview of our privacy policy user understanding support tool. The privacy policy displayed in the browser is analyzed automatically, and the result is presented to the user: the information collected from the user, the information used by the service provider, and the information provided to third parties are summarized and displayed.

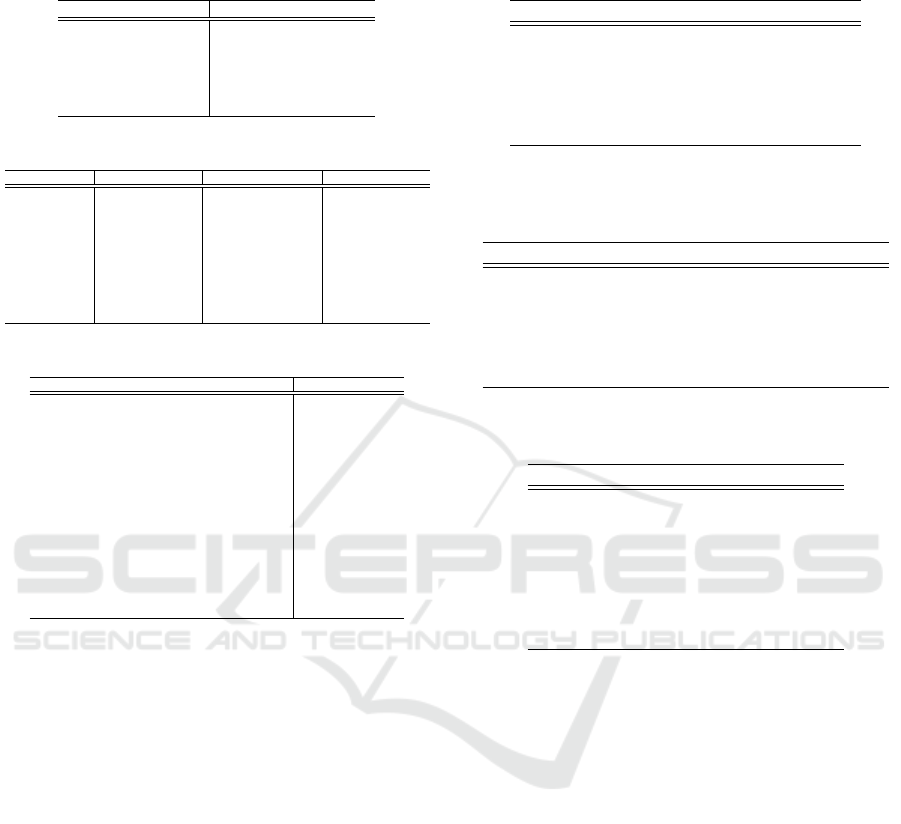

Figure 2¹ shows how the collected information is summarized and displayed. For the selected privacy policy, the summary display screen presents the unique expressions grouped by type of information handling and by tag.
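The grouping behind the summary screen can be pictured as a two-level mapping from handling category to tag to expressions. This is a minimal sketch under our own assumptions: the `Extraction` record, the category and tag strings (taken from Table 1 and Section 4.2.2), and the sample expressions are hypothetical, and the paper does not describe the tool's internal data structures.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Extraction:
    expression: str  # unique expression extracted from the policy text
    handling: str    # "Collect", "Use", or "Third Party"
    tag: str         # e.g. "Identification Information"


def summarize(extractions):
    """Group expressions by handling category, then by tag, dropping duplicates."""
    summary = defaultdict(lambda: defaultdict(list))
    for e in extractions:
        bucket = summary[e.handling][e.tag]
        if e.expression not in bucket:
            bucket.append(e.expression)
    # Convert to plain dicts for display or serialization.
    return {handling: dict(tags) for handling, tags in summary.items()}


items = [
    Extraction("IP address", "Collect", "Identification Information"),
    Extraction("browsing history", "Collect", "Identification Information"),
    Extraction("IP address", "Collect", "Identification Information"),
    Extraction("search keywords", "Use", "Personal Information"),
]
for handling, tags in summarize(items).items():
    print(handling, tags)
```

Keeping first-seen order within each bucket means the summary lists expressions in the order they appear in the policy, which may help users locate them in the original text.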

3.2 Construction Method

First, the user selects a privacy policy, and the tool reads the policy as HTML. The tool then preprocesses the data to prepare it for Japanese natural language processing, for example by removing HTML tags and unnecessary characters and by normalizing the text. This processing requires morphological analysis, which breaks a sentence down into its smallest meaningful units. For our study, we used MeCab: Yet Another Part-of-Speech and Morphological Analyzer (Liang, 2019), an open-source program. To extract the unique expressions from the privacy policy sentences, we used a long short-term memory network with a conditional random field layer (LSTM-CRF) (LEE, 2017).

4 CREATING MACHINE LEARNING TRAINING DATA FOR PRIVACY POLICY

To accurately extract the unique expressions from privacy policies, it is important to build precise training data. Here we describe how we created the machine learning training data for the privacy policy user understanding support tool.

¹ The image is a screen capture of the tool showing the summary of the privacy policy the user selected.

Figure 2: The Privacy Policy Summary Display Screen.

4.1 Selecting Privacy Policies for Tagging

On June 8, 2017, we accessed the Alexa Top 500 sites list (Alexa Internet, 2017) and selected 94 privacy policies from the top 200 sites in Japan. We rejected the remaining 106 sites because their privacy policies did not satisfy our selection criteria.²

Determining the Text to Be Tagged: Many sentences in privacy policies, such as those giving contact addresses, are irrelevant to what information is handled. To obtain the appropriate information, we tagged words and phrases that indicated information handling. The 14 selected words and phrases are listed in Table 1. We selected them through the following process:

• Across all selected privacy policies, 1,859 words co-occurred with “personal information” (which appeared 2,504 times).

• Among these 1,859 words, 14 were related to the handling of user information (collection, use, and provision to third parties); we selected these and divided them into three categories (Table 1).
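The two selection steps above can be sketched as follows, under stated assumptions: the policies are already segmented into tokens, co-occurrence means appearing in the same sentence (the paper does not define the window), and the English tokens are hypothetical stand-ins for Japanese text. In the paper, the 14 handling phrases were chosen by hand from the co-occurring words; once chosen, the Table 1 mapping can be applied as a simple lookup, which `handling_categories` illustrates.

```python
from collections import Counter

# English glosses of the 14 phrases in Table 1 (the tool itself works on Japanese).
HANDLING_CATEGORY = {
    "utilize": "Use", "provide": "Collect", "get": "Collect", "collect": "Collect",
    "use": "Use", "consign": "Third Party", "share": "Third Party",
    "register": "Collect", "process": "Use", "hold": "Use", "save": "Use",
    "keep": "Use", "maintain": "Use", "transfer": "Third Party",
}


def cooccurrence_counts(sentences, target):
    """For each token, count the sentences in which it appears together with `target`."""
    counts = Counter()
    for tokens in sentences:
        if target in tokens:
            counts.update(set(tokens) - {target})  # once per sentence
    return counts


def handling_categories(tokens):
    """Handling categories (Collect / Use / Third Party) signalled by one sentence."""
    return {HANDLING_CATEGORY[t] for t in tokens if t in HANDLING_CATEGORY}


sentences = [
    ["we", "collect", "personal information"],
    ["we", "utilize", "personal information", "for", "services"],
    ["contact", "us", "by", "mail"],
]
counts = cooccurrence_counts(sentences, "personal information")
print(counts["we"], counts["utilize"], counts["contact"])  # → 2 1 0
candidates = [w for w in counts if w in HANDLING_CATEGORY]
print(sorted(candidates))                                  # → ['collect', 'utilize']
print(handling_categories(["we", "share", "cookies"]))     # → {'Third Party'}
```

A sentence can match verbs from more than one category (for example "collect" and "use"); how such sentences are resolved is not specified in the paper, so the sketch simply returns every matching category.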

4.2 Selecting Tag Types

Tag types must be defined before the training data for sequence labeling can be generated. Many tag types are possible, as the candidates below show, and because different people interpret tag types differently, they must be chosen carefully.
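Sequence-labeling models such as LSTM-CRF are usually trained on one label per token rather than on tagged spans. A common encoding, and our assumption here because the paper does not name one, is BIO: "B-" marks the first token of a tagged expression, "I-" the tokens that follow it, and "O" everything else. The tag abbreviation IDENT and the example tokens are hypothetical.

```python
def spans_to_bio(tokens, spans):
    """Convert tagged spans (start, end_exclusive, tag) over tokens into BIO labels."""
    labels = ["O"] * len(tokens)
    for start, end, tag in spans:
        if not (0 <= start < end <= len(tokens)):
            raise ValueError(f"invalid span {(start, end, tag)}")
        if any(label != "O" for label in labels[start:end]):
            raise ValueError(f"overlapping span {(start, end, tag)}")
        labels[start] = f"B-{tag}"
        for i in range(start + 1, end):
            labels[i] = f"I-{tag}"
    return labels


tokens = ["we", "collect", "your", "IP", "address", "and", "cookies"]
spans = [(3, 5, "IDENT"), (6, 7, "IDENT")]  # "IP address", "cookies"
print(list(zip(tokens, spans_to_bio(tokens, spans))))
```

Under this encoding, the tagging-range variations described in Section 4.3.1 translate directly into different label sequences for the same sentence, which is why consistent span boundaries matter for training.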

4.2.1 Candidate Tag Types

We collected candidate tag types by examining and comparing the tags used in related projects.

IREX³ Project: IREX (Sekine and Isahara, 2000) is a general-purpose scheme for extracting and tagging expressions.

² The excluded sites included privacy policies in English (29 sites), Chinese (11 sites), and Spanish (21 sites), as well as policies that contained inappropriate content (32 sites) or could not be downloaded (12 sites).

³ IREX stands for Information Retrieval and Extraction Exercise.


Table 1: Selection of words and phrases for handling information.

No.  Phrase     Co-occurrence frequency   Handling category
1    Utilize    707                       Use
2    Provide    476                       Collect
3    Get        323                       Collect
4    Collect    170                       Collect
5    Use        140                       Use
6    Consign    113                       Third Party
7    Share      76                        Third Party
8    Register   57                        Collect
9    Process    53                        Use
10   Hold       43                        Use
11   Save       43                        Use
12   Keep       37                        Use
13   Maintain   36                        Use
14   Transfer   28                        Third Party

Scale of Privacy Information: (Sato and Tabata, 2013) defined a scale of privacy information that divides it into four categories: autobiographical information, attribute information, identification information, and password information.

Smartphone Privacy Initiative: The Smartphone Privacy Initiative committee (SPI, 2012) classified the information that smartphone users provide into the following categories: user identification information, third-party information, action history of communication services, and user status.

4.2.2 Selected Tag Type

By comparing the categories in (Sato and Tabata, 2013) and (SPI, 2012) with how frequently they were mentioned in the 94 privacy policies, we selected the following tag types:

Identification Information: Information that can identify the specific individual who uses the service.
Examples: contractor information (name, address, etc.), payment information (credit card number, bank account number), personal identification codes (ID, user name, e-mail address, password), mechanically allocated identification codes (cookie, IP address, terminal identification information, application ID), and information recorded by the service provider (usage history, communication history).

Personal Information: Various types of information related to individual users.
Examples: special care-required personal information (such as race, ethnic information, and health status), individual life (e.g., profile), lists of friends and related parties (contact list, address book, friend list), history of online activities (search keywords, posted comments, customer reviews, current location), and photos and videos (posted photos, posted videos, profile photos).

Abstract Information: Abstract words and phrases that refer collectively to multiple pieces of information; generic terms for related items.
Examples: personal information, statistical information, and personal data.

4.3 Tagging Work

Tagging was performed by six annotators and two tagging rule-makers. All eight taggers were familiar with the tagging rules, and they surveyed the privacy policies of many companies before selecting the policies to tag. The eight taggers also repeatedly reviewed the policies and the tagging rules, as shown in Figure 3. The longest and shortest privacy policies were 13,481 and 1,413 characters long, respectively. On average, manual tagging took 30 minutes per 4,995 characters. Each of the eight workers tagged six privacy policies.

4.3.1 Tag Differences Caused by Differences among the Workers

Comparing the training data tagged by different workers, we found slight variations in both the length of the tagging range and the types of tags assigned.

Variation in Tagging Range: For instance, the same privacy policy statement was tagged with four different ranges: “the nearest wireless network and base station for the mobile terminal,” “the nearest wireless network for the mobile terminal,” “wireless network and base station,” and “wireless network.”

Variations in Tag Types: The privacy policies were labeled with the tags described in Section 4.2.2 (e.g., Identification Information), and the selected tags varied among the workers. For example, location information that one worker tagged as “identification information” another might have tagged as “personal information.” Because an individual can be identified from a combination of location data, it was sometimes difficult for the taggers to decide whether location information should be tagged as “identification information” or “personal information.” To resolve such discrepancies, we conducted additional reviews among the workers.
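The paper resolved disagreements through additional reviews and does not report an agreement statistic. A common way to quantify tag-type agreement between two annotators, offered here only as an illustration, is Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each annotator's label frequencies. The labels below are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability that both pick the same label independently.
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both annotators used one identical label for every item
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical tag types two workers assigned to the same four location mentions.
worker_1 = ["identification", "identification", "personal", "personal"]
worker_2 = ["identification", "personal", "personal", "personal"]
print(cohen_kappa(worker_1, worker_2))  # → 0.5
```

Here the workers agree on three of four items (p_o = 0.75), but half of that agreement would be expected by chance (p_e = 0.5), so kappa is 0.5 rather than 0.75.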

5 SURVEY IMPLEMENTATION METHOD

We verified the effectiveness of the constructed tool in a user evaluation study in which we divided the subjects into two groups. One group was asked simply to read the privacy policy, and the other was given the tool we constructed. We measured the tool’s effectiveness by comparing the two groups.

Figure 3: Tagging work procedure. (Flowchart boxes: Data set of Privacy Policy, Related Work, Select Tag Type, Tagging Work, Review, Tagged Privacy Policy, Machine Learning Training Data.)

Although the tool allows users to select any privacy policy, we created a sample policy for an Internet shopping site so that all subjects worked under the same conditions. Subjects who did not use the tool were asked to read the full text of the sample policy and then answer the questions that followed it. Subjects who used the tool were asked to display the same unclear sample policy, examine the summary the tool produced, and then answer the questions that followed.

5.1 Survey Method, Period, and Procedure

We administered the survey online to a panel of subjects registered with research companies. The survey covered women and men aged 15 to 69 who owned personal computers. Of the 2,106 respondents whose surveys we collected, 516 said they did not wish to review privacy policies and 60 said they had never shopped online. The former were identified by the question “In this scenario, you are being asked to confirm the privacy policy on this shopping site. Do you want to confirm the privacy policy on this shopping site?” and the latter by the question “Have you ever shopped on the Internet?”; we excluded respondents who answered no to either question. After these exclusions, 1,530 surveys remained for analysis (average age 44.0 years; 765 women (50.0%) and 765 men (50.0%)). For all indicators, we divided the respondents into two groups: those who did (n = 762) and those who did not (n = 768) use the privacy policy user understanding support tool. The group that did not use the tool was shown a privacy policy and asked about their subjective and objective understanding of the policy and about their understanding of the service’s risks. The group that could use the tool was shown the unclear privacy policy, requested the tool’s summary on screen, and received a summary of the policy; they were then asked the same questions about subjective and objective understanding and about the service’s risks.

Figure 4: Survey procedures and evaluation targets. (Flowchart: after the scenario is displayed, respondents who choose “Don’t confirm the privacy policy on this shopping site” proceed to the end. Respondents who choose “Confirm the privacy policy on this shopping site” follow one of two branches: without the tool (display privacy policy) or with the tool (display privacy policy, then display summary of privacy policy). Both branches then measure subjective understanding, objective understanding, and good feeling, trust, no risk, and intention to use; the evaluation targets are shown in blue boxes.)

Period: The web survey was administered from January 4 to 8, 2021.

Procedure: The survey procedure is shown in Figure 4. The tool is intended for users who believe it is important to read privacy policies before accepting them but find them too long or difficult to understand.

Ethics: The survey was approved by the Personal Data Handling Research and Development Council of the National Institute of Information and Communications Technology, Japan.

The research questions were as follows.

RQ1: Does the tool improve the user’s understanding of privacy policies?

RQ2: Does the tool change the user’s understanding of service risks?

5.2 Measuring Subjective Understanding

To measure the participants’ subjective understanding, we evaluated three indices: readability, understandability, and plainness. Table 2 presents the question and response options for readability; the same 6-point Likert scale was used for the understandability and plainness evaluations.

5.3 Measuring Objective Understanding

To measure the degree of objective understanding, we asked three types of question about the information handled under the policy: information to be collected, information to be used, and information to be provided to third parties. For collected information, we asked: “Please select ‘Yes’ for any information that you believe will be collected by the service provider under the privacy policy of this shopping site. Please select ‘No’ for any information that you believe will not be collected.” For information to be used, the question changed to “Please select ‘Yes’ for any information that you believe will be used by the service provider under the privacy policy of this shopping site.” The question about information to be provided to third parties was phrased in the same way. Table 3 lists the answer items. For each information type, 5 of the 10 choices were information that the policy stated would be collected, used, or provided to third parties; these first five items in each list were the correct answers, to which participants who understood the policy should have responded yes. The choices were displayed to each respondent in random order. Each correct answer scored one point, and we defined the degree of objective understanding as the number of points out of 10.

Table 2: Question and answer options for subjective understanding: readability.
Question: What did you think about the privacy policy of this shopping site? Please choose the one that you think is the closest.
Answer options: 1. Very difficult to read; 2. Difficult to read; 3. Somewhat difficult to read; 4. Fairly easy to read; 5. Quite easy to read; 6. Very easy to read.

Table 3: Answer item list for each information type (the first five items in each list are the correct answers).
Collected: date/time of use, cookie information, IP address, location information, purchased goods, friend list, face photo, medical history, interests, voice.
Used: name, date of birth, e-mail address, purchased goods, credit card information, friend list, face photo, medical history, interests, voice.
Provided to third parties: cookie information, device identifier, location information, IP address, customer ID, friend list, face photo, medical history, interests, voice.

Table 4: Question and answer options for service risk.
Q1: When you read the privacy policy on this shopping site, were you satisfied with the service? Options: 1. not favorable; 2. rather unfavorable; 3. rather favorable; 4. very favorable.
Q2: When you read the privacy policy on this shopping site, did you trust the service? Options: 1. not trusted; 2. rather did not trust; 3. trusted; 4. fully trusted.
Q3: When you read the privacy policy on this shopping site, were you concerned that personal information could be leaked from this service? Options: 1. dangerous; 2. rather dangerous; 3. rather safe; 4. safe.
Q4: After reading the privacy policy of this shopping site, would you consider using the service? Options: 1. not at all; 2. a little; 3. mostly; 4. certainly.
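The scoring rule above can be made concrete as follows. This is a minimal sketch: each of the ten items counts as correct when the yes/no response matches its key (yes for the five items the policy lists, no for the five distractors), giving 0 to 10 points per information type. Item names follow Table 3; the function name and the response dictionaries are hypothetical.

```python
COLLECT_ITEMS = [
    # First five: listed in the sample policy (correct answer "yes").
    "date/time of use", "cookie information", "IP address",
    "location information", "purchased goods",
    # Last five: distractors (correct answer "no").
    "friend list", "face photo", "medical history", "interests", "voice",
]


def objective_score(items, responses):
    """One point per item whose yes/no response (True/False) matches the key; 0..10."""
    score = 0
    for index, item in enumerate(items):
        expected_yes = index < 5
        if responses[item] == expected_yes:
            score += 1
    return score


all_yes = {item: True for item in COLLECT_ITEMS}
print(objective_score(COLLECT_ITEMS, all_yes))  # → 5
```

The example also shows that a respondent who answers yes to every item, a pattern discussed in Section 7, is capped at 5 of 10 points.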

5.4 Measuring Understanding of Service Risk

In addition to assessing understanding of the content of the privacy policies, we evaluated understanding of service risks, because this understanding can be crucial when deciding whether to use a service. We assumed that a better understanding of a privacy policy would alter users’ positive feelings, trust, and perceived risk toward the service, as well as their intention to use it. Properly understanding the risks described in a privacy policy is especially important when using a service.

Based on the answers to the questions in Table 4, we measured participants’ positive feelings toward the presented service, their trust in it, their sense that using it carries no risk, and their intention to use it as quantitative variables. Each item was scored from 1 to 4 points.

Table 5: Subjective understanding (readability, understandability, plainness) without/with the tool, mean (SD).

                     without tool   with tool
readability          2.99 (1.12)    3.18 (1.23)
understandability    3.17 (1.03)    3.29 (1.14)
plainness            3.11 (1.03)    3.25 (1.11)

Table 6: Objective understanding (collected information, used information, information provided to third parties) without/with the tool, mean (SD).

                                               without tool   with tool
information to be collected                    7.88 (1.77)    8.05 (1.82)
information to be used                         8.13 (1.89)    8.16 (1.89)
information to be provided to third parties    7.16 (1.94)    7.38 (1.98)

Table 7: Good feeling, trust, no risk, intention to use without/with the tool, mean (SD).

                 without tool   with tool
good feeling     2.19 (0.71)    2.01 (0.71)
trust            2.23 (0.71)    2.06 (0.71)
no risk          2.09 (0.66)    1.88 (0.67)
will use         2.14 (0.65)    1.94 (0.69)

6 ANALYSIS RESULTS

6.1 Subjective Understanding Results

As noted earlier, we measured participants’ subjective understanding of the privacy policies based on their ratings of readability, understandability, and plainness. Table 5 gives the group means and standard deviations, which we compared using unpaired (independent-samples) t tests. Readability, understandability, and plainness scores were significantly higher in the group that used the tool than in the group without the tool: t(1375) = 2.56 (p < .01), t(1375) = 1.95 (p < .05), and t(1375) = 1.98 (p < .05), respectively. These results indicate that the tool improved subjective understanding.
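The comparisons in this section use unpaired t tests. For reference, a minimal sketch of Student's pooled-variance two-sample t statistic computed from group summary statistics follows; its degrees of freedom are n1 + n2 − 2. The paper does not state which variant (pooled or Welch) or which software was used, so this illustrates the test itself and is not meant to reproduce the reported statistics.

```python
import math


def unpaired_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    # Positive t means the second group has the higher mean.
    return (mean2 - mean1) / standard_error, df
```

Passing the without-tool group first and the with-tool group second makes a positive t correspond to higher scores with the tool, matching the direction of the comparisons reported here.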

6.2 Objective Understanding Results

We measured participants’ objective understanding by scoring their answers about the information to be collected, the information to be used, and the information to be provided to third parties. Table 6 shows the results, which we again compared using unpaired t tests. Understanding of the information to be collected and the information to be used did not differ significantly between the groups, t(1375) = 1.53 (ns) and t(1375) = 0.10 (ns), respectively, but the group that used the tool showed a significantly better understanding of what information would be provided to third parties than the group without the tool, t(1375) = 1.99 (p < .05).

6.3 Service Risk Results

Using unpaired t tests, we compared the groups’ positive feelings about the service, their trust in the service, their sense that there would be no risk in using the service, and their intention to use the service. The results are given in Table 7. The group that used the tool gave significantly lower ratings than the group without the tool for positive feelings, trust, absence of risk (that is, they perceived more risk), and intention to use the service: t(1375) = 4.98 (p < .01), t(1375) = 4.79 (p < .01), t(1375) = 5.78 (p < .01), and t(1375) = 5.58 (p < .01), respectively.

7 DISCUSSION

Based on the results of our web survey of the privacy policy user understanding support tool, we answered the following research questions:

RQ1: Does the Tool Improve a User’s Understanding of a Privacy Policy?

According to the subjective understanding results, the tool improved the perceived readability, understandability, and plainness of the privacy policy. Therefore, the tool can improve the experience of reading privacy policies.

However, apart from information provided to third parties, we could not identify any clear improvement in objective understanding. We see two possible explanations. First, when summarizing and displaying the policy, the tool arranges a large number of unique expressions in tabular form, which is not easy for viewers to remember in full. Second, some participants answered yes to every question about what information would be collected; the results might therefore reflect some users’ concern that all information is collected, whether or not it is related to the service.

RQ2: Does the Tool Change the User’s Understanding of Service Risks?

The tool reduced participants’ positive feelings toward the service they read about, their trust in the service, their sense that using the service carried no risk, and their intention to use it. We infer that the tool heightened users’ recognition of the risks to their personal data, which suggests that it effectively raised their risk awareness.

8 CONCLUSION AND FUTURE WORK

To prevent consent to companies’ privacy policies from becoming a mere formality that carries no weight or understanding for users, we constructed a privacy policy user understanding support tool and verified its effectiveness. The tool enhanced users’ subjective understanding of the privacy policies of the services they read about and their awareness of the related risks. Service providers’ privacy policies need to be easily understood by users, and providers should support this understanding. We expect that our tool will help users better understand the content of the privacy policies they encounter and make decisions based on their understanding of how service providers intend to use their personal data.

One limitation of this study is that we constructed our tool specifically for privacy policies written in Japanese and conducted the survey with panel members registered with a Japanese survey company. The results could therefore be biased toward the Japanese context, and it remains for future researchers to test whether our findings hold in other languages and cultural contexts. However, we believe that researchers who follow our procedure can construct appropriate privacy policy support tools in other languages as well. A second limitation is that the tool is displayed on a personal computer screen, which restricted the survey to participants who own personal computers. Future researchers should study the tool’s effectiveness with privacy policies displayed on a smartphone.

Artificial intelligence and other technological advances will likely promote data usage in the future. Therefore, service providers should continue to implement appropriate, legally compliant measures to protect users’ privacy. However, it is also important to increase users’ own privacy awareness. We believe that, in addition to general education about privacy and data collection, our privacy policy user understanding support tool can help achieve greater consumer privacy awareness.

In this validation study, the privacy policy user understanding support tool enhanced participants’ understanding of the risks associated with using the services they read about, which is one aspect of understanding privacy policies. Therefore, in future work, we must examine what types of user understanding would render a privacy policy a consensual agreement between users and the service provider. For this purpose, we should identify the factors related to users’ understanding of and trust in a service by investigating the relationship between how service providers actually handle users’ privacy information and how users think their data will be used. To improve user understanding further, we must also explore additional functions and implementations of the privacy policy user understanding support tool.

ACKNOWLEDGEMENTS

We would like to express our deepest gratitude to Mr. Yasushi Kasai and Mr. Takaomi Hayashi of the Institute of Future Engineering for their cooperation in preparing this paper. This work was supported by JST, CREST Grant Number JPMJCR21M1, Japan.

REFERENCES

Alexa Internet, Inc. (2017). Alexa top sites in Japan. https://www.alexa.com/topsites/countries/JP.

Axelrod, A., He, X., and Gao, J. (2011). Domain adaptation via pseudo in-domain data selection. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing, pages 355–362, Edinburgh, Scotland, UK. Association for Computational Linguistics.

Cate, F. H. (2010). The limits of notice and choice. IEEE Security & Privacy, 8(2):59–62.

Cranor, L. F., Guduru, P., and Arjula, M. (2006). User interfaces for privacy agents. ACM Transactions on Computer-Human Interaction, 13(2):135–178.

EU (n.d.). Regulation (EU) 2016/679 of the European Parliament and of the Council. https://eur-lex.europa.eu/eli/reg/2016/679/oj. (Accessed on 06/08/2021).

Harkous, H., Fawaz, K., Shin, K. G., and Aberer, K. (2016). PriBots: Conversational privacy with chatbots. In Twelfth Symposium on Usable Privacy and Security (SOUPS 2016), Denver, CO. USENIX Association.

Harkous, H., Fawaz, K., Lebret, R., Schaub, F., Shin, K. G., and Aberer, K. (2018). Polisis: Automated analysis and presentation of privacy policies using deep learning. In Proceedings of the 27th USENIX Security Symposium, pages 531–548.

Hasegawa, T., Sekine, S., and Grishman, R. (2004). Discovering relations among named entities from large corpora. In Proceedings of the 42nd Annual Meeting on Association for Computational Linguistics, ACL ’04, pages 415–es, USA. Association for Computational Linguistics.

Kanamori, S., Nojima, R., Iwai, A., Kawaguchi, K., Sato, H., Suwa, H., and Tabata, N. (2017). A study for reasons why users do not read privacy policies. In Proceedings of Computer Security Symposium 2017, pages 874–881. (In Japanese).

Kelley, P. G., Bresee, J., Cranor, L. F., and Reeder, R. W. (2009). A “nutrition label” for privacy. In Proceedings of the 5th Symposium on Usable Privacy and Security (SOUPS 2009), pages 1–12, New York, NY, USA. Association for Computing Machinery.

Lee, C. (2017). LSTM-CRF models for named entity recognition. IEICE Transactions on Information and Systems, E100.D(4):882–887.

Liang, X. (2019). MeCab usage and add user dictionary to MeCab. https://towardsdatascience.com/mecab-usage-and-user-dictionary-to-mecab-9ee58966fc6. (Accessed on 21/11/2021).

McDonald, A. M. and Cranor, L. F. (2008). The cost of reading privacy policies. I/S: A Journal of Law and Policy for the Information Society, 4:543–568.

MIC (2020). Information and communications in Japan (white paper 2020). (Accessed on 06/08/2021).

PPC (n.d.). Personal Information Protection Commission of Japan. https://www.ppc.go.jp/en/index.html. (Accessed on 06/08/2021).

Proctor, R. W., Ali, M. A., and Vu, K. P. L. (2008). Examining usability of web privacy policies. International Journal of Human–Computer Interaction, 24(3):307–328.

Rao, A., Schaub, F., Sadeh, N., Acquisti, A., and Kang, R. (2016). Expecting the unexpected: Understanding mismatched privacy expectations online. In Twelfth Symposium on Usable Privacy and Security (SOUPS 2016), pages 77–96, Denver, CO. USENIX Association.

Reidenberg, J. R., Russell, N. C., Callen, A., Qasir, S., and Norton, T. (2014). Privacy harms and the effectiveness of the notice and choice framework. 2014 TPRC Conference Paper.

Sato, H. and Tabata, N. (2013). Development of the multidimensional privacy scale for internet users (MPS-I). Japanese Journal of Personality, 21(3):312–315. (In Japanese).

Sekine, S. and Isahara, H. (2000). IREX: IR & IE evaluation project in Japanese. In Proceedings of the Second International Conference on Language Resources and Evaluation (LREC’00), Athens, Greece. European Language Resources Association (ELRA).

SPI (2012). Smartphone privacy initiative. https://www.soumu.go.jp/menu_news/s-news/01kiban08_02000087.html.

Wilson, S., Schaub, F., Dara, A. A., Liu, F., Cherivirala, S., Leon, P. G., Andersen, M. S., Zimmeck, S., Sathyendra, K. M., Russell, N. C., Norton, T. B., Hovy, E., Reidenberg, J., and Sadeh, N. (2016). The creation and analysis of a website privacy policy corpus. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1330–1340, Berlin, Germany. Association for Computational Linguistics.
