Model Analysis of Human Group Behavior Strategy using Cooperative Agents

Norifumi Watanabe¹ and Kota Itoda²

¹ Graduate School of Data Science, Research Center for Liberal Education, Musashino University, 3-3-3 Ariake, Koto-ku, Tokyo, Japan

² Asia AI Institute, Musashino University, 3-3-3 Ariake, Koto-ku, Tokyo, Japan

Keywords:

Pattern Task, Cooperative Group Behavior, Intention Estimation of Others, Agent Model, Simulation.

Abstract:

Flexible and cooperative human group behavior is realized by changing our intentions and behaviors based on dynamic estimation of other participants’ intentions and on adjustment of our own and others’ intentions. We analyze human small-group behavior using a cooperative pattern task in a 2D grid world to clarify the individual action selection process, including inference of others’ intentions and adjustment of intentions among participants. In previous research, we constructed behavior strategy models based on human behavioral experiments, implemented the models in cooperative agents, and confirmed in agent simulations that the goal is achieved in almost the same number of steps as by humans. In this research, we analyze the combinations of human behavior strategies that realize group behavior by comparing agent behavior with subjects’ behavior.

1 INTRODUCTION

Understanding flexible cooperative behavior in groups is important for constructing intelligent systems and social agents that cooperate with humans. In the cooperative behavior that we usually see in groups, it is considered that a top-down decision-making process is based on the shared intention of the group, while a bottom-up decision-making process is based on dynamically estimating each other’s intentions from behavior and adjusting them according to the situation. In goal-type ball games such as handball, soccer, or basketball, players interact with each other in dynamic situations, estimate each other’s intentions from nonverbal communication such as eye contact or body language, and change their behavior to deceive or deal with opponents. In everyday life as well, we understand others’ intentions through their behavior and decide whether to cooperate with them. Multi-person interaction requires participants not only to estimate one other person’s intention, but also to select whom to focus on and to estimate the shared intention of the group.

The purpose of this study is to clarify the behavioral decision-making process in intelligent interaction in such a group. We conduct behavioral experiments using a cooperative task, the pattern task, that focuses on the selection of others and the coordination of intentions between others and the self in group behavior, and analyze the results using an agent model.

2 RESEARCH BACKGROUND

We engage in complex interactions with others, such as competition and cooperation, in various situations in society, and several studies have clarified and modeled these processes. The BDI model of beliefs (B), desires (D), and intentions (I), based on Bratman’s “Theory of Intentions” (Bratman, 1987)(Rao and Georgeff, 1991)(G.Weiss, 2013), and the Bayesian Theory of Mind by Baker, Tenenbaum, and colleagues (C. L. Baker, 2009)(C. L. Baker, 2014)(W. Yoshida, 2008) are examples of research that models interactions with others. In addition, Yokoyama et al. have studied meta-strategies in interpersonal interaction (T. Omori and Ishikawa, 2010).

In the BDI model (Bratman, 1987)(Rao and Georgeff, 1991)(G.Weiss, 2013), we set our own goals based on our beliefs about the surrounding environment and choose the means to achieve those goals. We then formulate intentions to carry out our goals and act in accordance with those intentions. When others intervene, we set new goals based on our beliefs and the intentions of others, choose other means to achieve those goals, and form new intentions to carry them out.

Watanabe, N. and Itoda, K.

Model Analysis of Human Group Behavior Strategy using Cooperative Agents.

DOI: 10.5220/0010848800003116

In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 1, pages 299-305

ISBN: 978-989-758-547-0; ISSN: 2184-433X

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Thus, our intentions are determined in part by the environment, based on our own beliefs. In addition, we estimate the intentions of others based on our own internal models and make decisions by balancing them against our own intentions. The ability of a person to estimate the intentions of others is important in theory of mind, and the model proposed by Baker, Tenenbaum, and others as the Bayesian Theory of Mind states that human estimation of intentions behaves similarly to a probabilistic model based on Markov decision processes. Furthermore, in a group with multiple others, we are considered to have a “shared concept” with which we share our intentions and form a common behavior. Analyzing the structure of such multi-person interaction is also important for human-robot interaction (R. Sato, 2014). For example, in soccer, which is an excellent example of cooperative behavior, even when each player acquires different environmental information, the players can set a common goal, instantly choose the means to achieve it, and formulate the intention to realize it. We therefore believe that it is important to model the choices of others involved in such shared concepts, and the behaviors generated from them, in order to understand interactions with others.

Next, in interaction with other people, it is necessary to observe how other people make inferences about oneself, and thereby to infer what kind of influence one can have on others. The self-observation principle (T. Makino, 2003) estimates the behavior of others by adopting a model in which one looks at oneself objectively, as others would. With this principle, people can match with or withdraw from others in the group and construct strategies while mutually predicting each other’s minds. Furthermore, estimating each other’s internal states also helps cooperative actions such as mutually coordinating behaviors and working jointly. In order to adjust one’s behavior, it is important to select one of the multiple sub-goals and to estimate what the others intend. The problem here is the recursion of intention estimation between self and others. Omori et al. have proposed a model of meta-strategies, such as active and passive strategies, in interpersonal interaction in order to construct social robots (T. Omori and Ishikawa, 2010). In addition to one-to-one estimation of mutual intentions, in group behavior there is a strategy of estimating the intentions shared by multiple persons at the same time based on mutual estimation. In other words, there is a process of coordinating intentions by dynamically considering one’s own role in the group and selecting, from multiple others, those to be involved in cooperative behavior. We examine the effect of the difference between the two groups. To this end, we use a pattern task as a cooperative task that abstracts group behavior, analyze the subjects’ behavior in it, and simulate agents based on the results.

3 PATTERN TASK

3.1 Outline of Our Task

We propose the pattern task for analyzing human cooperative behavior. In this task, four subjects participate and cooperate in a grid world without verbal communication, and aim to realize the goal pattern of locations in as few steps as they can. Each subject behaves as an agent in the grid world and can take five actions (stop, go-left, go-right, go-up, go-down), as shown in Figure 1. Since a goal pattern is defined by the relative distances of three non-overlapping points in the grid, each of the four agents has to consider whom to cooperate with in order to achieve the pattern in the minimum number of steps. That is, although the goal is achieved by the four agents as a whole positioning themselves to form the goal pattern, each subject must estimate others’ intentions at each step to prevent misunderstandings on the way to the goal, and must tell others whether or not to participate in forming the pattern, through behavior alone.

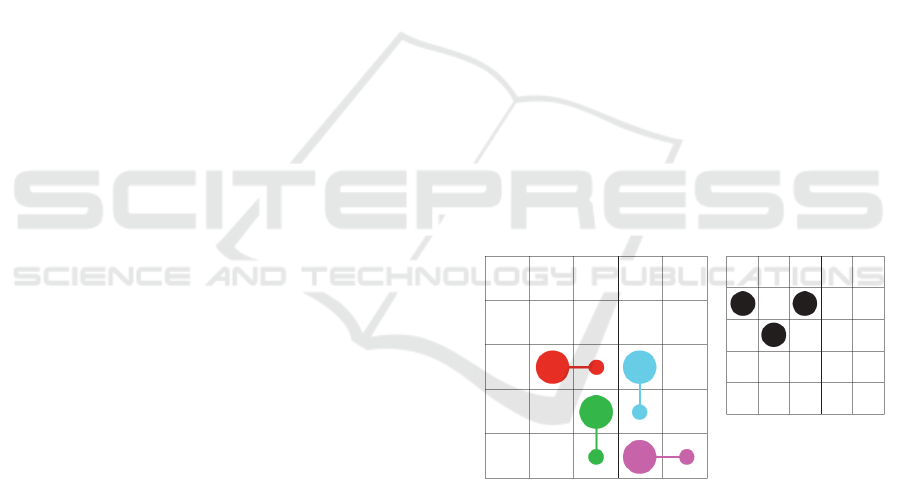

Figure 1: Pattern task. (Left) The grid world. The large round sprites represent the agents at their current locations, and the small ones represent their locations in the previous step. (Right) The goal pattern. Since the end condition of each trial is defined by the relative positions of three points, the trial ends in this situation.

Phase1: Select the three coordinates where the goal pattern will finally be realized, either by the subject or by the other subjects.

Phase2: Select the other subjects to focus on in order to realize the goal pattern.

Phase3: Select one of the five actions (stop, go-left, go-right, go-up, go-down).


Phase4: Select the three coordinates that the subjects focused on in Phase2 are considered to have selected in Phase1.

Phase5: Select the subjects who are considered to have been selected in Phase2 by those subjects.

These five phases are repeated at each step of a trial until the subjects achieve the goal pattern or reach the maximum number of steps. Then the initial locations of the agents are changed, and after trials with several different initial locations, a different goal pattern is applied and the trials are repeated.

Several rules are set in this task:

• Subjects are not allowed to talk about their locations or actions in any way that would enable the other subjects to identify their agent.

• The goal pattern can be realized by three out of the four agents; it is not necessary for all four agents to be located at goal locations.

• Since task achievement is judged by the relative locations of the goal pattern, a parallel shift of the coordinates is accepted, but a rotation or reflection of the pattern is not.

• The agents selected in Phase2 or Phase5 do not include the agent who is making the selection. If more than three agents could be selected (for example, when several agents are at the same distance from the goal pattern), the agent selects only the three agents most likely to achieve the pattern.

• The goal coordinates selected in Phase1 and Phase4 are those of the pattern that is most likely to be achieved.

• Agents are allowed to move to a location occupied by another agent, and they can move to the four neighboring cells of the grid world (left, right, up, down). The field is not a torus grid world and its edges are not connected (i.e., an agent cannot go right at the right edge, and likewise at the other edges).
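The movement and goal rules above can be sketched in code. This is a minimal illustration, not the experiment software: the function names, the coordinate convention (x to the right, y downward), and the grid size passed as `width` and `height` are assumptions, and a move off the edge is assumed to leave the agent where it is.

```python
from itertools import combinations

# Hypothetical action table; the paper only names the five actions.
ACTIONS = {
    "stop": (0, 0),
    "go-left": (-1, 0),
    "go-right": (1, 0),
    "go-up": (0, -1),
    "go-down": (0, 1),
}


def step(pos, action, width, height):
    """Move one cell; a move off the edge keeps the agent in place (no torus)."""
    dx, dy = ACTIONS[action]
    x, y = pos[0] + dx, pos[1] + dy
    if 0 <= x < width and 0 <= y < height:
        return (x, y)
    return pos


def normalize(points):
    """Shift points so the minimum x and y are zero (removes parallel shifts only)."""
    min_x = min(x for x, _ in points)
    min_y = min(y for _, y in points)
    return frozenset((x - min_x, y - min_y) for x, y in points)


def goal_achieved(agent_positions, pattern):
    """True if any three agents form the pattern up to a parallel shift.

    Rotations and reflections are not matched, because normalize() only
    translates the points.
    """
    target = normalize(pattern)
    for trio in combinations(agent_positions, 3):
        # Agents may share a cell, so require three distinct cells.
        if len(set(trio)) == 3 and normalize(trio) == target:
            return True
    return False
```

For example, with the L-shaped pattern `[(0, 0), (1, 0), (0, 1)]`, agents at `(2, 2)`, `(3, 2)`, and `(2, 3)` satisfy `goal_achieved` regardless of where the fourth agent stands, while the rotated arrangement `(2, 2)`, `(3, 2)`, `(3, 3)` does not.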

3.2 Human Behavioral Analysis

A total of 77 trials of the coordination task were conducted with 20 subjects using one to three different goal patterns. Each subject assumed a novel pattern at the beginning of the task, and we examined the relationship between the pattern assumed by the subject and the patterns assumed by others. After that, some subjects changed their own goal patterns when the intentions of all the subjects became consistent, and finally all the subjects continued to estimate their own patterns in a consistent manner. Specifically, the following three relationships were found between the patterns selected by a subject and the patterns selected by others.

(a) Select the same pattern as Phase 1 in the previous

step.

(b) Select the same pattern as the one selected in Phase 1 by another agent.

(c) Select a new pattern different from every pattern

selected in the previous step.
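The three relationships (a) to (c) can be expressed as a small classification rule. The sketch below is only illustrative: the function name and data layout are hypothetical, and it assumes that when a pattern matches both the subject's own previous choice and another agent's, case (a) takes precedence, which the text does not specify.

```python
def classify_selection(agent, current, previous):
    """Classify an agent's Phase 1 pattern against the previous step.

    agent:    id of the agent being classified.
    current:  pattern chosen now by `agent` (a frozenset of grid points).
    previous: dict mapping every agent id to its Phase 1 pattern
              in the previous step.
    Returns "a", "b", or "c" as in the list above.
    """
    if current == previous[agent]:
        return "a"  # same as own previous pattern
    others = (p for other, p in previous.items() if other != agent)
    if any(current == p for p in others):
        return "b"  # adopts a pattern another agent selected
    return "c"      # a new pattern nobody selected in the previous step
```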

Based on these three choices, we analyzed the pattern selection process of the subjects. In the early part of the task, subjects selected a new pattern, i.e., choice (c). After that, one of the subjects showed a change in strategy, matching a pattern selected by other subjects. Such changes in strategy can be divided into cases where the subjects reach a common agreement in the first half of a trial and cases where they agree in the second half. These results suggest that, in this pattern task, which encourages everyone to cooperate, subjects present their intentions to others in a form that is as easy to understand as possible, and select the goal pattern that shortens the total number of steps in each situation. In order to see the tendency of pattern selection within subjects, we checked the self-priority of the goal pattern selected by subjects at each step. In Phase 1, 86/113 (76%) of the subjects’ choices were included in the shortest goal set, and 86/132 (65%) of the subjects’ choices were included in the case where the Phase 1 choice was not included in the shortest goal set. Based on these results, we construct an agent model based on the behavioral strategies of the group.

4 AGENT MODEL

Based on the results of the previous section, we construct an agent model and conduct simulations. In this simulation, we clarify the action decision process, including the estimation of the goal patterns intended by others, in Phases 1 to 5. After the initial step, we compare the set of goal patterns that can be reached in the shortest time at each step with the set of goal patterns estimated from the actions of others, and implement the process of narrowing down the goal by majority vote.
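To make the notion of "the set of goal patterns that can be reached in the shortest time" concrete, the following sketch enumerates every parallel shift of a pattern inside the grid and keeps those with the smallest cost. It rests on assumptions that are not stated in the paper: the cost of a goal is taken to be the largest Manhattan distance any of the three assigned agents must travel (agents move simultaneously and may share cells), and all names are hypothetical.

```python
from itertools import permutations


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def translations(pattern, width, height):
    """All parallel shifts of the pattern that fit inside the grid."""
    min_x = min(x for x, _ in pattern)
    min_y = min(y for _, y in pattern)
    base = [(x - min_x, y - min_y) for x, y in pattern]
    span_x = max(x for x, _ in base)
    span_y = max(y for _, y in base)
    return [
        tuple((x + dx, y + dy) for x, y in base)
        for dx in range(width - span_x)
        for dy in range(height - span_y)
    ]


def steps_needed(agents, goal):
    """Fewest simultaneous steps for some three agents to occupy the goal points."""
    return min(
        max(manhattan(agents[i], point) for i, point in zip(trio, goal))
        for trio in permutations(range(len(agents)), 3)
    )


def shortest_goal_set(agents, pattern, width, height):
    """Return (minimum steps, set of goals reachable in that many steps)."""
    costs = {g: steps_needed(agents, g) for g in translations(pattern, width, height)}
    best = min(costs.values())
    return best, {g for g, c in costs.items() if c == best}
```

When several shifted goals tie for the minimum, the returned set has more than one element, which corresponds to the ambiguity that the agents then have to resolve.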

In the initial step, each agent’s action is determined from a tree structure consisting of the shortest goal pattern (Phase 1), the set of others of interest (Phase 2), which determines the three agents that make up the pattern, and the action of the agent corresponding to each goal point (Phase 3). Next, the goal patterns of the others and the others of interest, corresponding to Phase 4 and Phase 5, are inferred based on the tree structure from the numbers of the other agents and their actions. Action decisions after the initial step are determined by the goal patterns estimated with this method and by the agent’s own goal patterns, which are determined in the same way as in the initial step. In addition, the agent’s own goal pattern in the previous step is used (see (K. Itoda, 2017) for details of each algorithm). The agent action selection algorithm is presented in Algorithm 1.

Algorithm 1: Agent Action Selection.

From the experiment, it was found that the subjects’ behavioral decision-making process includes majority-based decisions, which are taken in order to reach each other’s goals in the fewest steps at each step. In addition, in Phases 4 and 5, subjects’ attention is focused on the intentions of others. The agent intention estimation algorithm is presented in Algorithm 2.

Algorithm 2: Intention Estimation of Other agents.
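As a rough illustration of the idea, and not a reproduction of Algorithm 2, the sketch below infers which candidate goals are consistent with each other agent's last move and then narrows the candidates by majority vote. The consistency rule used here (the agent moved closer to the nearest point of a goal, or is already standing on one) is an assumption, as are all function names.

```python
from collections import Counter


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def consistent_goals(prev_pos, cur_pos, goals):
    """Goals whose nearest point the agent approached, or on which it stands."""
    result = set()
    for goal in goals:
        before = min(manhattan(prev_pos, p) for p in goal)
        after = min(manhattan(cur_pos, p) for p in goal)
        if after < before or after == 0:
            result.add(goal)
    return result


def majority_goals(others_moves, goals):
    """Narrow the goals by majority vote over the other agents' moves.

    others_moves: list of (previous position, current position) pairs.
    goals:        iterable of goals, each a tuple of three grid points.
    """
    votes = Counter()
    for prev_pos, cur_pos in others_moves:
        votes.update(consistent_goals(prev_pos, cur_pos, goals))
    if not votes:
        # No move supports any goal: keep every candidate.
        return set(goals)
    top = max(votes.values())
    return {g for g, v in votes.items() if v == top}
```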

In addition, when selecting a pattern from the set of goal patterns that can be reached in the shortest possible time, subjects are thought either to preferentially select a pattern that includes themselves, or to randomly select a pattern regardless of whether it includes themselves, and then to estimate and adjust to the goal patterns of others. Therefore, we use the following five strategies to determine an agent’s behavior in the simulation.

Strategy A: Self-priority Selection x Intention Estimation of Other Agents

The agent estimates goal patterns from the previous steps of the other agents and matches them with the goals that can be reached by the current shortest path. It then extracts the set of patterns that include itself from among them, randomly selects a goal from that set, and moves toward it.

Strategy B: Self-priority Selection x No Intention Estimation of Other Agents

The agent estimates the goals that can be reached by the current shortest path, extracts the set of patterns that include itself, and randomly selects a goal from that set.

Strategy C: Random Selection x Intention Estimation of Other Agents

The agent estimates goal patterns from the previous steps of the other agents and matches them with the goals that can be reached by the current shortest path. It then randomly selects a goal from among them and moves toward it.

Strategy D: Random Selection x No Intention Estimation of Other Agents

The agent estimates the goals that can be reached by the current shortest path, randomly selects one of them, and moves toward it.

Strategy E: Random Behavior Agents

At each step, the agent randomly selects and executes an action from the action set, regardless of the current state.
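The difference between the five strategies comes down to two switches, self-priority and intention estimation, plus the purely random strategy E. The following sketch shows one way to express this; it is not the authors' implementation. A goal is represented here as a hypothetical pair `(points, members)`, where `members` lists the agent ids that would form the pattern, and the fallbacks used when a filter leaves no candidate are assumptions.

```python
import random

ACTIONS = ["stop", "go-left", "go-right", "go-up", "go-down"]


def choose_goal(strategy, agent, shortest_goals, estimated_goals, rng=random):
    """Pick a goal for strategies A to D.

    shortest_goals:  goals reachable by the current shortest path.
    estimated_goals: goals inferred from the other agents' previous steps.
    """
    if strategy not in ("A", "B", "C", "D"):
        raise ValueError("choose_goal handles strategies A to D only")
    candidates = set(shortest_goals)
    if strategy in ("A", "C"):  # intention estimation of other agents
        matched = candidates & set(estimated_goals)
        if matched:  # assumption: ignore the estimate if nothing matches
            candidates = matched
    if strategy in ("A", "B"):  # self-priority selection
        own = {g for g in candidates if agent in g[1]}
        if own:  # assumption: fall back if no pattern includes the agent
            candidates = own
    # Sort first so that a seeded rng gives reproducible choices.
    return rng.choice(sorted(candidates))


def random_action(rng=random):
    """Strategy E: pick any action regardless of the current state."""
    return rng.choice(ACTIONS)
```

Passing a seeded `random.Random` instance as `rng` makes simulation runs repeatable.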

5 ANALYSIS OF SUBJECT STRATEGIES

In order to clarify the subjects’ behavioral strategies by comparing each combination of strategies with the subjects’ behavior, we implement strategies A to E from the previous section in each of the four agents. In this experiment, we first simulate from random initial states to verify how quickly each strategy combination accomplishes the task. Then, to compare the results with the subjects’ behavior, we simulate from the initial states of the subjects’ behavioral experiments and compare the final positions.

5.1 Combination of Strategies and Number of Steps to Reach the Goal

First, we check how easy it is to accomplish the task

for each combination of strategies. We randomly pre-

pared 100 initial positions and 100 initial goal pat-

terns, and simulated them. The average number of

steps required to reach the goal for each combination

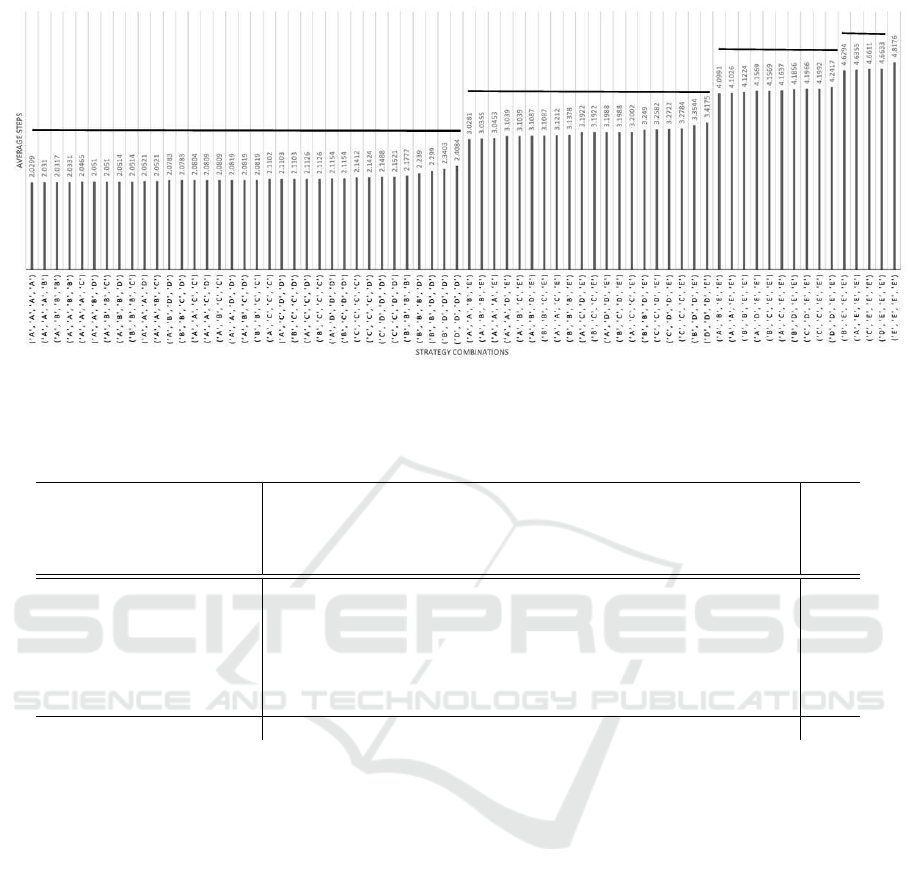

of strategies is shown in Figure 2.

Figure 2 shows that the average number of steps required to reach the goal increases stepwise with the number of agents among the four that use strategy E, which selects actions randomly. This suggests that randomly acting agents have a large impact as noise in the group. On the other hand, there was not much difference in the average number of steps among combinations with the same number of strategy E agents in the group.
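The grouping of strategy combinations by their number of strategy E agents can be reproduced with a short enumeration. The sketch assumes that a combination is an unordered assignment of the five strategies to four agents (so that, for example, (A, A, A, B) and (B, A, A, A) are the same combination); under that assumption there are 70 combinations. The function names are hypothetical.

```python
from collections import defaultdict
from itertools import combinations_with_replacement

STRATEGIES = "ABCDE"


def strategy_combinations(group_size=4):
    """Unordered strategy combinations for a group, e.g. ('A', 'A', 'A', 'B')."""
    return list(combinations_with_replacement(STRATEGIES, group_size))


def group_by_random_agents(combos):
    """Map the number of strategy E agents to the combinations containing it."""
    groups = defaultdict(list)
    for combo in combos:
        groups[combo.count("E")].append(combo)
    return dict(groups)
```

Averaging the simulated step counts within each of these five groups (zero to four strategy E agents) is one way to check the stepwise increase described above.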

In particular, (A, A, A, A) and (A, A, A, B), which have many agents with strategy A, the self-priority strategy that estimates the intentions of others, are able to accomplish the task in fewer steps. In addition, combinations that contain not only many strategy A agents but also many strategy B agents, which are self-priority but do not estimate others’ intentions, come out on top. Strategy A can reduce the number of steps to reach a goal because it can limit the goals of the group by considering both self-priority and the intentions of others. Strategy B, which does not estimate the intentions of others, also achieves the task at an early stage: even if there are many agents that only pursue their own goals without considering others, it is possible to achieve the task quickly. However, a combination such as (B, B, B, B) is fifth from the bottom among the combinations that do not include strategy E. This suggests that, among self-priority agents, a coordinator such as strategy A, or strategy C, which randomly selects among the shortest goals but estimates intentions, may make it possible to accomplish the task quickly.

5.2 Comparison of Each Strategy Combination and Subject Behavior

Next, 100 agent simulations were conducted for each of the initial conditions used in the subject behavioral experiments. We analyzed the subjects’ behavioral strategies by comparing their final arrival positions with those of the agents. Ideally, the selection of the goal pattern in Phase 1 and the selection of others of interest in Phase 2 should be compared over the action sequence at each step. However, the agent’s selection is constrained by conditions such as self-priority and also involves randomness. For this reason, in this simulation we compared the final positions of the subjects and agents at task completion in order to compare their tendencies as a whole group. We used the initial states of 15 of the 77 trials of subject data, namely those in which the subjects finally reached the goal and there were no erroneous inputs in any phase of any step.
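Counting matches between the subjects' final positions and the agents' final positions over repeated runs can be sketched as follows. The matching criterion is an assumption: a run counts as a match only if every agent ends on the same cell as the corresponding subject, with positions listed in agent order. The function name is hypothetical.

```python
def count_matches(subject_final, simulated_finals):
    """Number of simulation runs whose final positions equal the subjects'.

    subject_final:    final (x, y) of each subject, in agent order.
    simulated_finals: one list of final (x, y) positions per simulation run.
    """
    target = tuple(subject_final)
    return sum(1 for final in simulated_finals if tuple(final) == target)
```

With 100 runs per initial condition, the returned count lies between 0 and 100 for each strategy combination.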

In a preliminary experiment, we found that when there is little ambiguity in the initial state and the final goal is uniquely determined, agents that do not use the random strategy E reach the same locations as the subjects’ final destinations. In this paper, we examine how ambiguity is resolved when the ambiguity of the goal is high. We focus on trials in which the number of shortest goals that can be reached from the initial state is two or more and the distance from the initial position to the initial goal is two or more steps.

Table 1 shows the ten strategy combinations with the largest numbers of final arrival position matches. Leaving aside strategy E, which selects actions randomly, the combinations that include many strategy A agents, which are self-priority and estimate the intentions of others, have a large number of matches between subjects and agents. This means that when there is a high degree of ambiguity in the goals of the subjects’ behavior, they choose self-priority goals so that their own goals are easily perceived by others. It is also possible that subjects behave like strategy A, executing the task while estimating the goals of others. In addition, since the task can be accomplished by three of the four agents, there is more agreement on final positions when there are three agents with strategy A.

Figure 2: Combinations of strategies and the average number of steps to reach the goal pattern. As the number of randomly acting agents (strategy E) increases, the number of steps increases stepwise. Randomly acting agents have a large impact as noise in the group.

Table 1: Comparison of each strategy combination and the subjects’ final position of arrival in the case of high ambiguity.

| initial distance | initial shortest goal number | A | A | A | A | A | A | A | A | B | B | total |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | | A | A | A | A | B | B | B | B | B | B | |
| | | A | A | A | A | B | B | C | D | B | C | |
| | | A | A | A | A | B | B | B | B | B | B | |
| 2 | 5 | 10 | 7 | 8 | 10 | 11 | 8 | 22 | 21 | 17 | 18 | 132 |
| 2 | 3 | 51 | 49 | 58 | 60 | 49 | 51 | 51 | 59 | 57 | 46 | 531 |
| 2 | 2 | 44 | 23 | 41 | 29 | 37 | 43 | 35 | 45 | 42 | 44 | 383 |
| 2 | 2 | 79 | 74 | 75 | 75 | 82 | 78 | 72 | 68 | 75 | 68 | 746 |
| 2 | 2 | 45 | 55 | 41 | 45 | 47 | 40 | 46 | 43 | 46 | 44 | 452 |
| 2 | 5 | 53 | 59 | 46 | 58 | 46 | 50 | 42 | 47 | 45 | 50 | 496 |
| total | | 282 | 267 | 269 | 277 | 272 | 270 | 268 | 283 | 282 | 270 | |

On the other hand, when the number of initial shortest goals is large, such as three or five, the number of matches is larger for strategy combinations such as (A, B, C, C), (A, B, D, D), and (B, B, C, D) than for combinations that include many strategy A agents. This means that, rather than all subjects choosing a goal in a self-priority manner and acting while estimating the intentions of others, some subjects choose a goal randomly among the shortest goals, or decide on a goal without estimating the intentions of others. At first glance this may seem to be a bad choice, but the subjects still reach the same place. If the ambiguity is too large, there is a high probability that the subjects’ goals will be divided when they make self-priority choices. In addition, ignoring the intentions of others may reduce the number of possible goals in each situation. Therefore, it is thought that subjects accomplish the task under high ambiguity by making these adjustments within the group.
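The row and column totals reported in Table 1 can be checked directly from the per-trial match counts. The snippet below copies the counts from the table, keeping the ten strategy combinations in table order, and recomputes both sets of totals; the variable and function names are hypothetical.

```python
# (initial distance, initial shortest goal number, matches per combination, reported total)
TABLE_1 = [
    (2, 5, [10, 7, 8, 10, 11, 8, 22, 21, 17, 18], 132),
    (2, 3, [51, 49, 58, 60, 49, 51, 51, 59, 57, 46], 531),
    (2, 2, [44, 23, 41, 29, 37, 43, 35, 45, 42, 44], 383),
    (2, 2, [79, 74, 75, 75, 82, 78, 72, 68, 75, 68], 746),
    (2, 2, [45, 55, 41, 45, 47, 40, 46, 43, 46, 44], 452),
    (2, 5, [53, 59, 46, 58, 46, 50, 42, 47, 45, 50], 496),
]
REPORTED_COLUMN_TOTALS = [282, 267, 269, 277, 272, 270, 268, 283, 282, 270]


def row_totals(table):
    """Total matches per trial, summed over the ten combinations."""
    return [sum(matches) for _, _, matches, _ in table]


def column_totals(table):
    """Total matches per combination, summed over the six trials."""
    return [sum(column) for column in zip(*(matches for _, _, matches, _ in table))]
```

Both recomputed totals agree with the values printed in the table.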

6 CONCLUSIONS

In this study, we conducted agent simulations based on behavioral experiments with a cooperative task, the pattern task, in order to clarify the decision-making process in cooperative group behavior. By simulating a group of agents with each strategy combination, we verified the characteristics of each strategy from the average number of steps needed to accomplish the task. We also simulated each strategy combination using the initial conditions of the subjects’ behavioral experiments, and compared the final positions of subjects and agents at task completion when the ambiguity of goals is high. We found that when ambiguity is very high, the strategy combination that needs the fewest steps in the agent-only simulation is not always the one closest to the subjects.

In order to analyze the differences between conditions in more detail, we will control the ambiguity in each condition and increase the number of trials in each case. In addition to ambiguity in goals, there may be ambiguity in subjects, such as which subjects are in the same goal, and subjects may take actions that explicitly reduce ambiguity, in addition to using their estimation strategies. By incorporating these factors into the model, we can perform more detailed simulations.

REFERENCES

Bratman, M. (1987). Intention, plans, and practical reason. Harvard University Press.

C. L. Baker, J. B. T. (2014). Modeling human plan recognition using Bayesian theory of mind. In G. Sukthankar, C. Geib, H. Bui, D. Pynadath, R. P. Goldman, editors, Plan, Activity, and Intention Recognition: Theory and Practice.

C. L. Baker, R. Saxe, J. B. T. (2009). Action understanding as inverse planning. In Cognition, pages 329–349.

G.Weiss (2013). Multiagent Systems. MIT Press, 2nd edition.

K. Itoda, N. Watanabe, Y. T. (2017). Analyzing human decision making process with intention estimation using cooperative pattern task. pages 249–258. Lecture Notes in Computer Science, vol 10414.

R. Sato, Y. T. (2014). Coordinating turn-taking and talking in multi-party conversations by controlling robot’s eye-gaze. The 23rd IEEE International Symposium on Robot and Human Interactive Communication.

Rao, A. S. and Georgeff, M. P. (1991). Modeling rational agents within a BDI-architecture. pages 473–484. KR 91.

T. Makino, K. A. (2003). Self-observation principle for estimating the other’s internal state: A new computational theory of communication. Mathematical Engineering Technical Reports METR.

T. Omori, A. Yokoyama, Y. N. and Ishikawa, S. (2010). Computational modeling of action decision process including other’s mind - a theory toward social ability. In Keynote Talk, IEEE International Conference on Intelligent Human Computer Interaction (IHCI).

W. Yoshida, R. J. Dolan, K. J. F. (2008). Game theory of mind. PLoS Comput Biol 4(12): e1000254. doi:10.1371/journal.pcbi.1000254.
