Climbing the Ladder: How Agents Reach Counterfactual Thinking

Caterina Moruzzi

a

Department of Philosophy, Universität Konstanz, 78457, Konstanz, Germany

Keywords:

Counterfactuality, Agency, Decision-making, Causal Reasoning, Robustness.

Abstract:

We increasingly rely on automated decision-making systems to search for information and make everyday

choices. While concerns regarding bias and fairness in machine learning algorithms have high resonance, less

addressed is the equally important question of to what extent we are handing our own role of agents over to

artificial information-retrieval systems. This paper aims at drawing attention to this issue by considering what

agency in decision-making processes amounts to. The main argument that will be proposed is that a system

needs to be capable of reasoning in counterfactual terms in order for it to be attributed agency. To reach this

step, automated systems necessarily need to develop a stable and modular model of their environment.

1 INTRODUCTION

Research on agency and causal efficacy has a long his-

tory in the philosophical literature, and recently there

has been a resurgence of interest in this topic (List and

Pettit, 2011; Müller, 2008; Nyholm, 2018; Ried et al.,

2019; Sarkia, 2021). As with many other ‘suitcase

words’, there is no general agreement on the defini-

tion of the notion of agency. While the generally ac-

cepted definition of agent in artificial intelligence (AI)

research is “anything that can be viewed as perceiving

its environment through sensors and acting upon that

environment through actuators” (Russell and Norvig,

2011), others are unhappy with the broadness of this

definition and look for a narrower interpretation of the

term, for example as an entity that can learn and be

trained (Müller and Briegel, 2018).

In order to be able to act upon the environment and

interact with it, it seems that an agent needs to be able

to reason in causal terms, namely to understand which

of the variables present in its surroundings is responsible

for an observed change (Tomasello, 2014). Still,

it may be argued that causal reasoning is not enough

if the agent wants to reach a level of abstraction that

allows it to generalise to unknown scenarios. To reach

this aim, what the agent needs to develop is the capac-

ity of reasoning in counterfactual terms, to project it-

self into unknown situations it has never experienced

before. Through counterfactual reasoning, the agent

can strengthen its predictive abilities and understand

not only what happens but also ‘why’ something happens.

a https://orcid.org/0000-0002-9728-3873

The different levels of competence possessed

by a system, from correlation between data to coun-

terfactual thinking, are described by Judea Pearl and

Dana Mackenzie through the metaphor of the Ladder

of Causation (Pearl and Mackenzie, 2018). They ar-

gue that data without an understanding of the causal

links occurring between them are not enough: to

be capable of generalisation and abstraction to out-of-distribution

scenarios, agents need to frame a model

of the world they live in. In what follows, an agent

will be understood as positioning itself on the top rung

of the Ladder of Causation, that of counterfactuality.

In this paper, the relevance of organising informa-

tion through frames in order to reach the third rung on

this Ladder will be examined, describing the process

of building robustness into a human decision-making

system and considering how recent machine learning

(ML) models try to implement this capacity in auto-

mated systems (Bertsimas and Thiele, 2006; Hansen

and Sargent, 2011). In conclusion, it will be claimed

that models that organise information through sparse

and modular frames show promising results in devel-

oping better generalisation abilities.

2 AGENCY IN

DECISION-MAKING

Automated decision-making systems increasingly

support humans in looking for information. AI in-

formation access systems, such as recommendation

systems, guide us in searching for the information

Moruzzi, C.

Climbing the Ladder: How Agents Reach Counterfactual Thinking.

DOI: 10.5220/0010857900003116

In Proceedings of the 14th International Conference on Agents and Artificial Intelligence (ICAART 2022) - Volume 3, pages 555-560

ISBN: 978-989-758-547-0; ISSN: 2184-433X

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

that we absorb every day, whether it be looking for

a product on an e-commerce platform, watching our

favourite series, or reading the news. They filter the

information that we access and are responsible for

sorting and shaping it, acting as gateways and con-

ditioning public choices and opinions (Nielsen, 2016;

Shoemaker et al., 2009).

Most of the time, we are unaware of these invisible

partners that assist us in our quest for information

and we have the impression of being totally in charge

of the choices we make online. Other times, espe-

cially in systems with human-like interfaces, such

as voice assistants, we interact with them as social

agents, attributing responsibilities to them and, sometimes,

placing trust in their decisions (Doyle et al.,

2019; Langer et al., 2021; Pitardi and Marriott, 2021).

Given the pervasiveness of the power exerted by

these information-framing systems, it is urgent to

consider users’ practices of attribution of agency to

them and the impact that their assertion of agency

over our access to information has on the trust that

we place in the systems themselves (Shin, 2020). The

first step in this direction is to understand which essential

features a system needs to possess in order to be

deemed an agent.

With this aim, in the next sections human

decision-making processes are examined in the light

of Pearl and Mackenzie’s Ladder of Causation, in or-

der to extract the key ingredients that allow humans to

reach counterfactual reasoning abilities and, thus, be

deemed proper agents.

2.1 Ladder of Causation

To understand how a system can reach the counter-

factual rung, it is first necessary to describe what

the metaphor of the Ladder of Causation amounts to

(Pearl and Mackenzie, 2018).

Rung 1 of the Ladder is ‘Correlation’. A sys-

tem operating on the first rung is a mere observer of

what happens in the world. The question linked to

this stage is: “What is the probability that y happens,

given x?” or, in symbols, P(y|x).

Rung 2 is ‘Intervention’. In order to climb to this

level, the system needs to deliberately interact with

the environment and alter it. The question is: “What is

the probability that y happens if I do x?”, P(y|do(x)).

Rung 3 is ‘Counterfactuality’. Agents that reach

this step are able to imagine counterfactual scenar-

ios and to adapt their actions accordingly. The ques-

tion the agent asks is: “What is the probability that

y’ would occur had x’ occurred, given that I actually

observed x and y?”, P(y'_{x'} | x, y).
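The three queries can be made concrete on a toy structural causal model. The following is a minimal sketch and not part of Pearl and Mackenzie's presentation: the background variables u, n_x and n_y, their probabilities, and the mechanisms f_x and f_y are all illustrative assumptions. Here x stands for ‘Playing basketball’ and y for ‘Losing weight’, and each rung is computed exactly by enumerating the possible background worlds.

```python
from fractions import Fraction
from itertools import product

# Assumed probabilities that each background variable equals 1 (illustrative only).
P_ONE = {"u": Fraction(1, 2), "n_x": Fraction(1, 5), "n_y": Fraction(1, 2)}


def worlds():
    """Yield every background world (u, n_x, n_y) together with its probability."""
    for u, n_x, n_y in product((0, 1), repeat=3):
        p = Fraction(1)
        for name, value in (("u", u), ("n_x", n_x), ("n_y", n_y)):
            p *= P_ONE[name] if value else 1 - P_ONE[name]
        yield (u, n_x, n_y), p


def f_x(u, n_x):
    """Assumed mechanism for x: it follows the hidden factor u unless noise flips it."""
    return u ^ n_x


def f_y(x, u, n_y):
    """Assumed mechanism for y: it listens to x in some worlds and to u in the others."""
    return u if n_y else x


def solve(world, do_x=None):
    """Compute (x, y) in a world, optionally overriding x by an intervention."""
    u, n_x, n_y = world
    x = f_x(u, n_x) if do_x is None else do_x
    return x, f_y(x, u, n_y)


def rung1(x_obs):
    """Rung 1: P(y=1 | x), obtained by conditioning on an observation."""
    num = den = Fraction(0)
    for w, p in worlds():
        x, y = solve(w)
        if x == x_obs:
            den += p
            num += p * y
    return num / den


def rung2(x_do):
    """Rung 2: P(y=1 | do(x)), obtained by forcing x in every world."""
    return sum(p * solve(w, do_x=x_do)[1] for w, p in worlds())


def rung3(x_obs, y_obs, x_alt, y_alt):
    """Rung 3: P(y'_{x'} | x, y), keeping only worlds consistent with the evidence."""
    num = den = Fraction(0)
    for w, p in worlds():
        if solve(w) == (x_obs, y_obs):
            den += p
            if solve(w, do_x=x_alt)[1] == y_alt:
                num += p
    return num / den


print(rung1(1))           # 9/10
print(rung2(1))           # 3/4
print(rung3(1, 1, 0, 0))  # 5/9
```

Under these assumed mechanisms the three answers differ, which illustrates why a system that only estimates P(y|x) cannot recover the other two quantities without a model of how the variables are generated.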

While humans are good at forming causal frames

of the available information, according to Pearl and

Mackenzie current state-of-the-art ML models do not

progress beyond the first rung of the Ladder: that of

observing the environment and finding statistical correlations

between available data. Progress in AI can

come only through the development of systems that

are able to reason counterfactually and abstract to un-

known data. State-of-the-art automated systems can

produce counterfactuals, but without the capacity to

select the relevant ones among them (de Véricourt

et al., 2021). Indeed, while ML systems surpass human

capacities in processing large amounts of data, it

is still a challenge for these systems to frame and filter

relevant information and to extrapolate to unknown

scenarios, a necessary ability to climb to the highest

rung on the Ladder.

Analysing the decision-making process that human

agents go through to climb the Ladder helps us

to understand which features automated

systems need to develop in order to reach agentive

capacities. In addition, this description can help address

further questions, such as: Can the counterfactuality

rung be reached through the acquisition of

causal reasoning skills, developed in Rung 2 of the

Ladder of Causation, or are other, qualitatively different

features needed for an agent to perform counterfactual

thinking? Supposing that an artificial system

has computational power orders of magnitude larger

than what any system can at present have and, as a

consequence, it can test every possible scenario, could

it reach Rung 3 of the Ladder of Causation, or are

some priors necessary?

For the sake of the present analysis, the mechanisms

that allow a system to proceed from Rung 1 to Rung 2 on the

Ladder will not be considered, while priority will be

given to addressing the question of to what extent the

framing of information helps agents to make the fi-

nal step toward rung 3, thus reaching counterfactual

reasoning skills.

3 CLIMBING THE LADDER

Suppose that an agent, Bob, wants to lose weight. In

order to decide what choices he needs to make in or-

der to achieve this aim, Bob goes through a decision-

making process. The steps that he will (presumably)

take follow the three rungs of the Ladder of Causation

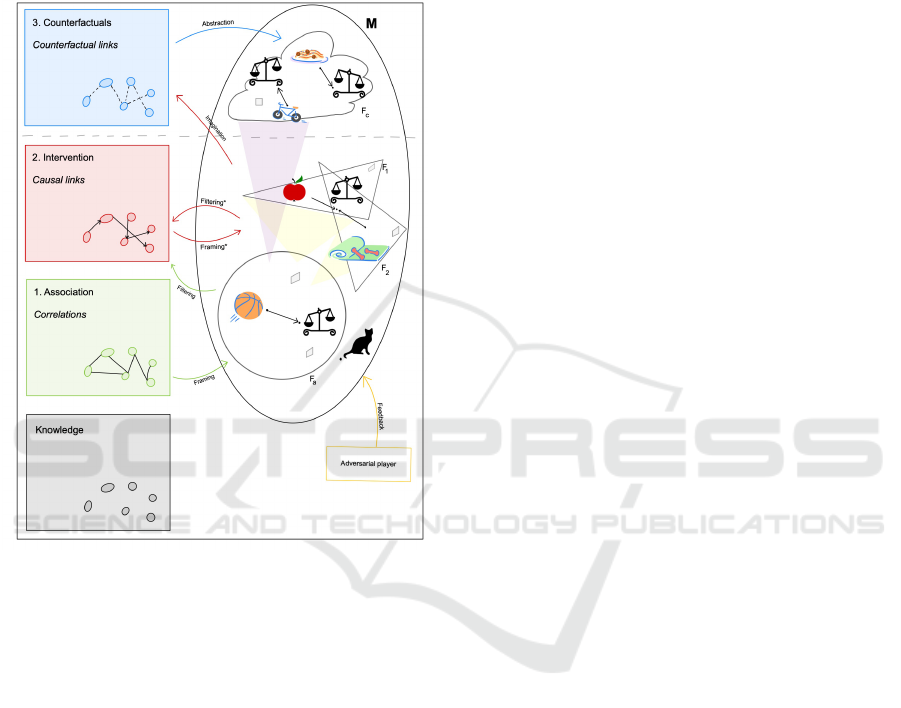

(see Figure 1).

A system operating on the first rung of the Ladder,

‘Association’ (introduced as ‘Correlation’ in Section 2.1), observes what happens in the available

data, or ‘Knowledge’. At the beginning of the

decision-making process the agent just has data and


the way it starts to frame it is essential to the final

output of the process. To achieve the aim of losing

weight, Bob may start by observing with which probability

the variable ‘Playing basketball’ is associated

with the variable ‘Losing weight’. The initial, approximate

frame that he creates helps Bob understand and

organise the data (F_a).

Figure 1: Decision-making process.

In order to climb to the ‘Intervention’ rung, the

agent needs to deliberately interact with the environ-

ment and alter it. In our example, Bob may join a bas-

ketball team to confirm the connection between the

variables ‘Playing basketball’ and ‘Losing weight’,

observed in the previous step. His question is: “What

is the probability that by playing basketball I will lose

weight?” If the intervention supports the observed

data, the agent can draw causal links between the vari-

ables ‘Playing basketball’ and ‘Losing weight’. To

make his frame more robust, Bob chooses among the

alterations to the environment the ones that produce

the desired outcome. He will use his frame to filter

out variables that are not related to the outcome ‘Los-

ing weight’, for example (stroking a) ‘Cat’. Once Bob

trusts the effectiveness of his frame, he starts inter-

preting data according to it. In turn, the frame influ-

ences Bob in drawing causal links between elements

in future experiences (Filtering*).
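The filtering step can be illustrated with a few lines of code. This is a simplified sketch under stated assumptions: the records, the variable names and the 0.2 threshold are invented for illustration, and the score used (a difference in outcome frequencies) is only an observational stand-in for the interventions that Bob actually performs.

```python
# Hypothetical observations (1 = yes, 0 = no); the values are invented.
RECORDS = [
    {"basketball": 1, "cat": 1, "lost_weight": 1},
    {"basketball": 1, "cat": 0, "lost_weight": 1},
    {"basketball": 1, "cat": 1, "lost_weight": 1},
    {"basketball": 1, "cat": 0, "lost_weight": 0},
    {"basketball": 0, "cat": 1, "lost_weight": 0},
    {"basketball": 0, "cat": 0, "lost_weight": 0},
    {"basketball": 0, "cat": 1, "lost_weight": 0},
    {"basketball": 0, "cat": 0, "lost_weight": 1},
]


def outcome_rate(records, variable, value, outcome="lost_weight"):
    """Share of records with variable == value in which the outcome occurred."""
    matching = [r[outcome] for r in records if r[variable] == value]
    return sum(matching) / len(matching)


def relevance(records, variable):
    """Absolute difference in outcome rate between variable = 1 and variable = 0."""
    return abs(outcome_rate(records, variable, 1) - outcome_rate(records, variable, 0))


def build_frame(records, candidates, threshold=0.2):
    """Keep only the candidate variables whose relevance reaches the threshold."""
    return [v for v in candidates if relevance(records, v) >= threshold]


frame = build_frame(RECORDS, ["basketball", "cat"])
print(frame)  # ['basketball']
```

With these invented records, ‘cat’ is dropped because the outcome rate is the same with and without it, while ‘basketball’ is retained in the frame.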

The process of optimising the frame by filter-

ing out irrelevant information is crucial to make the

frames more robust and more easily adaptable to other

scenarios. Another element that contributes to the

robustness of the frame is the interplay between the

agent and an Adversarial Player (Hansen and Sargent,

2011), which can be understood as a max-min decision

rule. The decision maker maximises her expected utility while

assuming that the Adversarial Player chooses a probability

to minimise it (Goodfellow et al.,

2014). Suppose Bob cannot play because the bas-

ketball court is not available. The Adversarial Player

may make Bob try different things to achieve the aim

of losing weight, for example drinking lactose-free

milk, taking vitamin pills, practicing yoga, and so on.

Through the interplay with the Adversarial Player and

the feedback received, Bob may find out that adopting

a vegetarian diet works and add it to his frame (F_1).

Or he may discover that exercising indoors also works

for losing weight and add it to a further frame (F_2).
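The max-min decision rule mentioned above can be sketched as follows. This is an illustration under stated assumptions rather than Hansen and Sargent's formulation: the utilities, the set of probabilities available to the Adversarial Player, and all function names are hypothetical.

```python
# Hypothetical utility of each action when the basketball court is open or closed.
UTILITY = {
    "play basketball": {"open": 8.0, "closed": 0.0},
    "exercise indoors": {"open": 5.0, "closed": 5.0},
    "vegetarian diet": {"open": 4.0, "closed": 4.0},
}

# Assumed probabilities of "court closed" that the Adversarial Player may choose from.
ADVERSARY_OPTIONS = [0.1, 0.5, 0.9]


def expected_utility(action, p_closed):
    """Expected utility of an action for a given probability that the court is closed."""
    u = UTILITY[action]
    return (1 - p_closed) * u["open"] + p_closed * u["closed"]


def worst_case(action):
    """The adversary picks the probability that minimises the expected utility."""
    return min(expected_utility(action, p) for p in ADVERSARY_OPTIONS)


def max_min_choice():
    """The decision maker picks the action with the best worst-case expected utility."""
    return max(UTILITY, key=worst_case)


print(max_min_choice())  # exercise indoors
```

Playing basketball has the highest payoff when the court is open, but its worst case is the lowest of the three, so a max-min decision maker prefers an action whose payoff does not depend on the adversary's choice.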

Frames are cognitive shortcuts used by agents to

move through the uncertainty of their surroundings

by identifying the variables that are responsible for

change (Kahneman, 2011). Using frames to interact

with the environment helps the agent react to chang-

ing conditions. By capturing the most important as-

pects of the world and filtering out the others, frames

help agents to learn from single experiences and come

up with general rules that can be applied to other sit-

uations, thus progressing toward the counterfactual

rung of the Ladder of Causation.

3.1 Reaching Counterfactuality

Counterfactual thinking amounts to searching for an

explanation to what happened by asking what should

have happened in the past that would have changed

the output (Pearl and Mackenzie, 2018). Reasoning

in counterfactual terms is considered a requisite for

a system to be attributed responsibility and it is a re-

quirement that agents need to satisfy in order to pro-

vide satisfactory, interpretable explanations (Lipton,

1990; Miller, 2019; Molnar, 2020; Wachter et al.,

2017).

Counterfactual reasoning occupies the third and

highest rung on the Ladder of Causation. Human

agents are able to imagine counterfactual scenarios

(‘Imagination’) and to plan how their frames can be

projected onto those (‘Abstraction’, see Figure 1).

The challenge in adapting the frames the agent built in

lower rungs of the Ladder to a counterfactual scenario

comes from the fact that the agent cannot adjust its ac-

tions on the basis of feedback. In our example, Bob

cannot go back in time and undo the action of playing

basketball to know whether he would have lost weight

if he did not play. He can only imagine it, drawing


inferences on how strong the links between the vari-

ables ‘Playing basketball’ and ‘Losing weight’ are by

considering his previous Knowledge.

In order to be able to adapt the frame to a coun-

terfactual scenario, Bob needs to identify fundamen-

tal causal links in available frames and understand the

relation that they have with variables that he has not

yet experienced. For example, Bob may ask “What

is the probability that I would lose weight if I cycled?”.

He could, then, draw a link between playing basket-

ball and cycling, identify that the two activities have

something in common and, through abstraction, draw

a causal link between ‘Cycling’ and ‘Losing weight’.

Through counterfactual thinking Bob can also reflect

on the original cause of his weight gain, for exam-

ple by asking “What is the probability that I would

not have gained weight, had I not eaten so much during

my holiday in Italy?”. If the probability is high,

then he can identify ‘Italian food’ as the cause of his

weight gain.

In order to identify the variables responsible for

change, the agent can start by building a causal di-

agram. Figure 2 represents the cause-effect relation

between the variables in our example through a dia-

gram. This kind of causal diagram has been theorised

by Pearl as a way of mapping the data available to the

(alleged) agent, in order to identify cause-effect links

and make better predictions (Pearl and Mackenzie,

2018). The nodes in the diagram stand for the variables

and the arrows for presumed causal relations.¹

An arrow connects the variable ‘Playing basketball’

to the variable ‘Losing weight’, as the agent has con-

cluded that playing basketball caused the weight loss.

Building a causal diagram where the agent can identify

the variables that are responsible for change is

key to answering counterfactual questions of the

kind “What would have happened, had I acted differently?”,

thus relieving the agent of

the burdensome need to experience all the possible

scenarios.
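A causal diagram of this kind can be represented as a directed graph. The sketch below is an illustrative reading of the running example and not a reproduction of Figure 2: the set of arrows and the function names are assumptions.

```python
# Hypothetical arrows "cause -> effects" for the running example.
CAUSAL_EDGES = {
    "Italian food": ["Gaining weight"],
    "Playing basketball": ["Losing weight"],
    "Cycling": ["Losing weight"],
    "Exercising indoors": ["Losing weight"],
    "Stroking a cat": [],
}


def effects_of(variable, edges=CAUSAL_EDGES):
    """All variables reachable from `variable` by following arrows."""
    seen, stack = set(), [variable]
    while stack:
        for child in edges.get(stack.pop(), []):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def causes_of(variable, edges=CAUSAL_EDGES):
    """All variables from which `variable` can be reached."""
    return {v for v in edges if variable in effects_of(v, edges)}


print(sorted(causes_of("Losing weight")))
# ['Cycling', 'Exercising indoors', 'Playing basketball']
```

Once the arrows are written down, the agent can read off which variables are candidates for explaining a change without having to try each of them in practice.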

Thinking about the past and about what would

have changed if it acted differently allows the agent

to understand which modules of the frame are responsible

for change. In so doing, the agent can form a

hyper-model (M) within which all the frames, real and

counterfactual, can be included. Through a higher

level of abstraction, Bob could identify what con-

nects all the activities responsible for losing weight

¹ Causation is defined by Pearl as follows: “a variable X is a cause of Y if Y ‘listens’ to X and determines its value in response to what it hears.” (Pearl and Mackenzie, 2018). The connection between the variables ‘Gaining weight’ and ‘Playing basketball’, ‘Cycling’, and ‘Exercising indoors’ is represented here through a dotted line as it is not a proper causal relation.

Figure 2: Causal diagram.

and make his model even more robust and capable of

adapting to different contexts. The relations that link

together variables within this hyper-model remain in-

variant and, thanks to its robustness, M can be used to

understand and deal with new data and produce new

estimates.
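One simple way to picture the invariant part of the hyper-model M is as the set of features shared by every frame that worked. The following is a deliberately crude sketch: the feature sets attached to each activity, and the idea of reducing M to a set intersection, are illustrative assumptions and not a claim about how human abstraction is implemented.

```python
# Hypothetical frames: each activity that led to weight loss, with assumed features.
FRAMES = {
    "Playing basketball": {"calorie deficit", "team sport", "needs a court"},
    "Exercising indoors": {"calorie deficit", "indoors"},
    "Vegetarian diet": {"calorie deficit", "dietary change"},
}


def invariant_features(frames):
    """Features present in every frame: the relations that remain stable across them."""
    return set.intersection(*frames.values())


def predicts_weight_loss(features, frames=FRAMES):
    """A new scenario is predicted to work if it contains all invariant features."""
    return invariant_features(frames) <= set(features)


print(invariant_features(FRAMES))                                # {'calorie deficit'}
print(predicts_weight_loss({"calorie deficit", "outdoors"}))     # True, e.g. cycling
print(predicts_weight_loss({"relaxing"}))                        # False, e.g. stroking a cat
```

The point of the sketch is that a scenario never experienced before, such as cycling, can be evaluated by checking it against what stays invariant across known frames.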

3.2 Sparse and Compositional Models

The description given above of how humans build robustness into

their decision-making systems supports

the idea that, in order to achieve abstraction, agents

need to build frames which select and organise relevant

information and to include these frames in a

hyper-model which they can use to adapt their actions

to both known and unknown scenarios. The explo-

ration of this abstraction mechanism of human cogni-

tion (arguably valid also for some animals) can help

practitioners understand how to program artificial sys-

tems that reach the same level of generalisation and

agency.

Indeed, recent ML systems try to approximate

the aim of dealing with out-of-distribution data and

adapting to unknown scenarios by developing two

features which are essential to the construction of

a comprehensive hyper-model: compositionality and

sparseness. In order to effectively deal with new situ-

ations in the world, agents conveniently build models

made of smaller parts that can be recombined. This

compositional ability is useful to explain new data ob-

served in scenarios previously unknown (Kahneman,

2011), as it allows agents to identify causal connec-

tions that are valid throughout different frames. The

adaptability of the model is enhanced by the ability

of the agent to identify the variables that are responsible

for change, providing explanations of what it observes

and adapting to changes without the need to

experience all possible scenarios.

A good model must be stable and robust to

changes. The creation of a sparse and modular model

has been explored as an option for building robustness

in automated systems, for example through the representation

of variables in sparse factor graphs (Bengio,

2017; Ke et al., 2019). Another example is

Recurrent Independent Mechanisms, a meta-learning

approach which decomposes knowledge in the training

set into modules that can be re-used across tasks

(Goyal et al., 2019; Madan et al., 2021). The selec-

tion of which modules to use for different tasks is

performed by an attention mechanism, while Rein-

forcement Learning mechanisms are responsible for

the process of adaptation to new parameters.
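The idea of an attention mechanism that activates only a few modules can be sketched in plain Python. This is a strong simplification and not the Recurrent Independent Mechanisms architecture of Goyal et al.: the module names, key vectors and the top-k rule over softmax scores are illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_modules(input_vec, module_keys, k):
    """Score each module by the dot product of its key with the input,
    then keep the k modules with the highest attention weight."""
    names = list(module_keys)
    scores = [sum(a * b for a, b in zip(input_vec, module_keys[n])) for n in names]
    weights = softmax(scores)
    ranked = sorted(zip(names, weights), key=lambda nw: nw[1], reverse=True)
    return dict(ranked[:k])


# Hypothetical modules, each with a key vector describing what it responds to.
MODULE_KEYS = {
    "motion": [1.0, 0.0, 0.0],
    "diet": [0.0, 1.0, 0.0],
    "pets": [0.0, 0.0, 1.0],
}

active = select_modules([2.0, 1.0, -1.0], MODULE_KEYS, k=2)
print(list(active))  # ['motion', 'diet']
```

Only the selected modules would be updated for the current input, which is one way of keeping the model sparse: the remaining modules are left untouched and stay available for other tasks.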

A modular capacity is better achieved in systems

that combine the data-processing capabilities of ML

models with the capacity of abstraction and logical

reasoning of symbolic AI methods. According to sup-

porters of this new paradigm, referred to as the Third

Wave or hybrid AI, statistical models are not enough

to achieve generalisation; we also need to teach systems

to handle logical and symbolic reasoning. This

hybrid approach of symbolic and sub-symbolic methods

makes it possible to retain the advantages of both strategies,

get rid of their respective weaknesses and, at the same

time, program models that fare much better in generalisation

and abstraction (Anthony et al., 2017; Bengio

et al., 2019; Bonnefon and Rahwan, 2020; Booch

et al., 2020; Garcez and Lamb, 2020; Hill et al., 2020;

Ke et al., 2019; Mao et al., 2019; Moruzzi, 2020). The

benefit of these hybrid models consists in their capac-

ity of combining the computational power of Deep

Learning with symbolic and logical reasoning to not

only be able to process large amounts of data but also

identify which elements within those data stay stable.

4 CONCLUSIONS

The ongoing research presented in this paper con-

tributes to an exhaustive and accurate analysis of

the notion of agency, a useful tool for the investiga-

tion of how to build reliable and flexible decision-

making systems. The study of how the progression

toward generalisation to unknown scenarios happens

and why it is necessary to develop agency helps create

a deeper theoretical understanding of the characteristics

of a robust decision-making process, contributing

to addressing a fundamental issue within AI:

whether and how systems achieve causal agency.

The analysis of the parallel between decision-making

in humans and machines presented here

not only contributes to debates on human

and artificial agency but can also provide relevant in-

sights to research in neuromorphic engineering (Indi-

veri and Sandamirskaya, 2019). Indeed, one of the

challenges in the development of embodied devices

that interact with the environment is the design of so-

lutions through which to generate context-dependent

behaviour, adaptable to changing and unknown con-

ditions.

This paper has identified the ability to sort and

organise information through frames as a crucial

requisite for agents to build a robust model of their en-

vironment, a model which allows them to adapt and

modify their choices according to the context. The

analysis of the development of agency in decision-

making systems is a preliminary, essential step to

study whether the emulation of biological processes

is a viable path for achieving power-efficient solu-

tions with the aim to build robust and flexible artificial

agents.

REFERENCES

Anthony, T., Tian, Z., and Barber, D. (2017). Thinking fast

and slow with deep learning and tree search. arXiv

preprint, arXiv:1705.08439.

Bengio, Y. (2017). The consciousness prior. arXiv preprint,

arXiv:1709.08568.

Bengio, Y., Deleu, T., Rahaman, N., Ke, R., Lachapelle, S.,

Bilaniuk, O., Goyal, A., and Pal, C. (2019). A meta-

transfer objective for learning to disentangle causal

mechanisms. arXiv preprint, arXiv:1901.10912.

Bertsimas, D. and Thiele, A. (2006). Robust and data-

driven optimization: Modern decision making under

uncertainty. INFORMS TutORials in Operations Re-

search, pages 95–122.

Bonnefon, J.-F. and Rahwan, I. (2020). Machine think-

ing, fast and slow. Trends in Cognitive Sciences,

24(12):1019–1027.

Booch, G., Fabiano, F., Horesh, L., Kate, K., Lenchner, J.,

Linck, N., Loreggia, A., Murugesan, K., Mattei, N.,

Rossi, F., et al. (2020). Thinking fast and slow in AI.

arXiv preprint, arXiv:2010.06002.

de Véricourt, F., Cukier, K., and Mayer-Schönberger, V.

(2021). Framers: Human Advantage in an Age of

Technology and Turmoil. Penguin Books Ltd, New

York.

Doyle, P. R., Edwards, J., Dumbleton, O., Clark, L., and

Cowan, B. R. (2019). Mapping perceptions of hu-

manness in speech-based intelligent personal assistant

interaction. arXiv preprint, arXiv:1907.11585.

Garcez, A. d. and Lamb, L. C. (2020). Neurosymbolic AI:

The 3rd wave. arXiv preprint, arXiv:2012.05876.

Goodfellow, I. J., Shlens, J., and Szegedy, C. (2014). Ex-

plaining and harnessing adversarial examples. arXiv

preprint, arXiv:1412.6572.

Goyal, A., Lamb, A., Hoffmann, J., Sodhani, S., Levine,

S., Bengio, Y., and Schölkopf, B. (2019). Recurrent

independent mechanisms. arXiv preprint,

arXiv:1909.10893.

Hansen, L. P. and Sargent, T. J. (2011). Robustness. Prince-

ton University Press, Princeton, NJ.


Hill, F., Tieleman, O., von Glehn, T., Wong, N., Merzic, H.,

and Clark, S. (2020). Grounded language learning fast

and slow. arXiv preprint, arXiv:2009.01719.

Indiveri, G. and Sandamirskaya, Y. (2019). The importance

of space and time for signal processing in neuromor-

phic agents: the challenge of developing low-power,

autonomous agents that interact with the environment.

IEEE Signal Processing Magazine, 36(6):16–28.

Kahneman, D. (2011). Thinking, Fast and Slow. Farrar,

Straus and Giroux, New York.

Ke, N. R., Bilaniuk, O., Goyal, A., Bauer, S., Larochelle,

H., Schölkopf, B., Mozer, M. C., Pal, C., and

Bengio, Y. (2019). Learning neural causal mod-

els from unknown interventions. arXiv preprint,

arXiv:1910.01075.

Langer, M., Hunsicker, T., Feldkamp, T., König, C. J., and

Grgić-Hlača, N. (2021). “Look! It’s a computer program!

It’s an algorithm! It’s AI!”: Does terminology

affect human perceptions and evaluations of intelligent

systems? arXiv preprint, arXiv:2108.11486.

Lipton, P. (1990). Contrastive explanation. Royal Institute

of Philosophy Supplements, 27:247–266.

List, C. and Pettit, P. (2011). Group Agency: The Possibil-

ity, Design, and Status of Corporate Agents. Oxford

University Press, Oxford.

Madan, K., Ke, N. R., Goyal, A., Schölkopf, B., and

Bengio, Y. (2021). Fast and slow learning of re-

current independent mechanisms. arXiv preprint,

arXiv:2105.08710.

Mao, J., Gan, C., Kohli, P., Tenenbaum, J. B., and Wu, J.

(2019). The neuro-symbolic concept learner: Inter-

preting scenes, words, and sentences from natural su-

pervision. arXiv preprint, arXiv:1904.12584.

Miller, T. (2019). Explanation in artificial intelligence: In-

sights from the social sciences. Artificial Intelligence,

267:1–38.

Müller, T. and Briegel, H. J. (2018). A stochastic process

model for free agency under indeterminism. dialec-

tica, 72(2):219–252.

Molnar, C. (2020). Interpretable Machine Learning.

Lulu.com.

Moruzzi, C. (2020). Artificial creativity and general intelli-

gence. Journal of Science and Technology of the Arts,

12(3):84–99.

Müller, T. (2008). Living up to one’s commitments:

Agency, strategies and trust. Journal of Applied Logic,

6(2):251–266.

Nielsen, R. K. (2016). News media, search engines and so-

cial networking sites as varieties of online gatekeep-

ers. In Peters, C. and Broersma, M., editors, Rethink-

ing Journalism Again, pages 93–108. Routledge, New

York.

Nyholm, S. (2018). Attributing agency to automated sys-

tems: Reflections on human–robot collaborations and

responsibility-loci. Science and engineering ethics,

24(4):1201–1219.

Pearl, J. and Mackenzie, D. (2018). The Book of Why: The

New Science of Cause and Effect. Hachette UK.

Pitardi, V. and Marriott, H. R. (2021). Alexa, she’s not human

but... Unveiling the drivers of consumers’ trust

in voice-based artificial intelligence. Psychology &

Marketing, 38(4):626–642.

Ried, K., Müller, T., and Briegel, H. J. (2019). Modelling

collective motion based on the principle of agency:

General framework and the case of marching locusts.

PloS one, 14(2):e0212044.

Russell, S. J. and Norvig, P. (2011). Artificial Intelligence:

A Modern Approach. Third Edition. Prentice Hall, Up-

per Saddle River, NJ.

Sarkia, M. (2021). Modeling intentional agency: a neo-

gricean framework. Synthese, pages 1–28.

Shin, D. (2020). User perceptions of algorithmic decisions

in the personalized AI system: Perceptual evaluation

of fairness, accountability, transparency, and explain-

ability. Journal of Broadcasting & Electronic Media,

64(4):541–565.

Shoemaker, P. J., Vos, T. P., and Reese, S. D. (2009). Jour-

nalists as gatekeepers. In Wahl-Jorgensen, K. and

Hanitzsch, T., editors, The Handbook of Journalism

Studies, pages 73–87. Routledge, New York.

Tomasello, M. (2014). A Natural History of Human Think-

ing. Harvard University Press, Cambridge, MA.

Wachter, S., Mittelstadt, B., and Russell, C. (2017). Coun-

terfactual explanations without opening the black box:

Automated decisions and the GDPR. Harvard Journal

of Law & Technology, 31:842–887.
