On Ethical Considerations Concerning Autonomous Vehicles

Konstantina Marra (a), Ilias Panagiotopoulos (b) and George Dimitrakopoulos (c)

Department of Informatics and Telematics, Harokopio University, Athens, Greece

Keywords: Autonomous Vehicles, Ethical Dilemmas, Trolley Problem, Social Aspects, Thought Experiments.

Abstract:

Autonomous vehicles are studied in terms of technology and economics, but there is also a social component to be discussed. While technical challenges are being resolved and great progress is being made in their design, social and ethical issues with legal and philosophical aspects arise, and these must be addressed. Following this trend, the present study explores people’s views concerning ethical dilemmas related to the behaviour of autonomous vehicles in road accidents. In addition, liability issues in cases of such accidents are examined. On this basis, a questionnaire-based survey is conducted, aiming to investigate the views of future owners of autonomous vehicles on liability and on the decisions that such vehicles should make in the event of an unavoidable road accident. This is achieved through a series of thought experiments, which reveal how potential consumers solve different versions of the Trolley Problem in two cases: with and without the option of equal treatment. The present analysis treats the risk of accidents as inevitable and seeks to prevent public reactions that could stall the adoption of autonomous vehicles, by revealing people’s perceptions of morality, which in the future could contribute to creating more ethical and trustworthy autonomous vehicles.

(a) https://orcid.org/0000-0001-6858-2914

(b) https://orcid.org/0000-0003-4366-6470

(c) https://orcid.org/0000-0002-7424-8557

1 INTRODUCTION

Autonomous vehicles will become a reality on the highways in the near future, and it is expected that by 2040 the highways will have lanes specially designed for them (Yang and Coughlin, 2014). This prospect is generally considered positive, since autonomous vehicles are expected to improve our quality of life and to be able to prevent road accidents. Schoettle and Sivak (2015), Gless et al. (2016), Lohmann (2016) and Luzuriaga et al. (2019) have pointed out the many advantages of using autonomous vehicles.

However, this does not mean that such vehicles never make mistakes or that they are completely safe. Accidents can be prevented, provided that errors and imperfections in the vehicle software are kept to a minimum (Luzuriaga et al., 2019). Otherwise, an autonomous vehicle may not respond appropriately to an unforeseen critical situation, which could result in vehicle damage, human injury or even loss of life (Gless et al., 2016).

Technical failures are not the only risk. Autonomous vehicles are also vulnerable to hacking. A person with malicious intent could, for example, gain illegal access to the vehicle and disrupt the operation of its sensors in order to cause an accident (Lohmann, 2016; Holstein et al., 2018). Therefore, the scientists and engineers involved in the design of these vehicles must overcome significant technological challenges, particularly those related to the safe interaction of vehicles with their environment (drivers of conventional vehicles, pedestrians, cyclists and other autonomous vehicles).

Although autonomous vehicles are mostly discussed from a technological and economic point of view, there is also a social side to be considered. While significant progress is being made in their design and technical issues are gradually being resolved, social and ethical issues with legal and philosophical implications arise that need to be addressed (Holstein and Dodig-Crnkovic, 2018).

Experts have voiced concerns about the behaviour of autonomous vehicles in cases where accidents are unavoidable. One example of a moral issue that raises significant ethical dilemmas is whether the aim of the vehicle should be to protect its passengers at all costs or to act in a way that minimizes the total number of losses (Luzuriaga et al., 2019). Beyond that, liability issues will certainly emerge in the event of an accident. The attribution of responsibility becomes considerably more complex, since autonomous vehicles can operate completely without a driver (Gless et al., 2016).

Marra, K., Panagiotopoulos, I. and Dimitrakopoulos, G.

On Ethical Considerations Concerning Autonomous Vehicles.

DOI: 10.5220/0010979800003191

In Proceedings of the 8th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2022), pages 180-187

ISBN: 978-989-758-573-9; ISSN: 2184-495X

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In light of the above, the purpose of the present study is (a) to investigate people’s views on which behaviours of autonomous vehicles in critical situations and road accidents should be deemed acceptable, and (b) to highlight possible universal preferences of future buyers of autonomous vehicles, which could help solve the problem of choosing the ethical rules according to which autonomous vehicles could be programmed in the future. This is achieved through a series of thought experiments, in which participants are asked to make decisions on behalf of the autonomous vehicle during a road accident.

The paper is structured as follows: Section 2 contains an overview of past studies and presents the ethical problems of driverless vehicles addressed by the present study. The methods used to conduct the present research are explained in Section 3. Results are presented in Section 4, and conclusions and final remarks in Section 5.

2 ETHICS AND DRIVERLESS VEHICLES

There are a number of ethical issues related to driverless vehicles, such as issues concerning safety, software security, privacy, trust, transparency, reliability and accountability (Holstein et al., 2018). This section first explains the term “Driverless Dilemma”. It then gives an overview of recent studies on how people perceive crashes involving driverless vehicles, how they respond to the driverless dilemma, and which approaches have been proposed for the morality problem.

2.1 Definition of the “Driverless Dilemma”

There are three main methods of programming autonomous vehicles (Luzuriaga et al., 2019): (1) using pre-existing ethical rules based on philosophy, an approach that is problematic, as there is no unanimity among moral philosophers as to what is moral; (2) using rules that the general public considers appropriate and acceptable, in order to avoid social outcry in the event of a road accident; and (3) using rules that result from observing the behaviour of car drivers.

A good way to start investigating people’s opinions concerning the behaviour of autonomous vehicles during an accident is to extend the thought experiment of the so-called “Trolley Problem” in philosophy to smart vehicles, which will sooner or later have to deal with similar situations. According to Holstein and Dodig-Crnkovic (2018), the resulting “Driverless Dilemma” can be formulated as follows: “A self-driving vehicle drives on a street at a high speed. In front of the vehicle, a group of people suddenly blocks the street. The vehicle is too fast to stop before it reaches the group. If the vehicle does not react immediately, the whole group will be killed. The car could however evade the group by entering the pedestrian way and consequently kill a previously not involved pedestrian”.
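To make the structure of the dilemma concrete, the following Python sketch encodes it as two options and contrasts two hypothetical decision rules: a utilitarian rule that minimizes expected deaths and an inaction rule that never leaves the current course. The names `Option`, `utilitarian_rule` and `inaction_rule`, as well as the group size of five, are illustrative assumptions that do not appear in the cited formulation. The sketch only shows how such rules can be stated precisely and is not a proposal for how vehicles should actually be programmed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One manoeuvre available to the vehicle and its assumed outcome."""
    name: str
    deaths: int            # people expected to be killed (hypothetical figure)
    requires_swerve: bool  # True if the vehicle must actively leave its course


def utilitarian_rule(options: list[Option]) -> Option:
    """Choose the option with the fewest expected deaths (first one wins ties)."""
    return min(options, key=lambda option: option.deaths)


def inaction_rule(options: list[Option]) -> Option:
    """Never intervene: keep the current course whatever the outcome."""
    return next(option for option in options if not option.requires_swerve)


# The dilemma quoted above, with an assumed group size of five people.
dilemma = [
    Option("stay on course", deaths=5, requires_swerve=False),
    Option("enter the pedestrian way", deaths=1, requires_swerve=True),
]

for rule in (utilitarian_rule, inaction_rule):
    print(f"{rule.__name__}: {rule(dilemma).name}")
```

Under these assumptions the utilitarian rule sends the vehicle into the pedestrian way, while the inaction rule keeps it on course. The two rules disagree precisely where the dilemma has moral weight.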

In these thought experiments, participants are asked to make a decision on behalf of the autonomous vehicle as to who should be saved in the accident. In this way, universal views may emerge, which could help solve the problem of choosing the ethical rules according to which autonomous vehicles could be programmed in the future.

However, objections have been raised regarding the effectiveness of such dilemmas. One objection is that, in real-life conditions, vehicles seldom have to choose between only two alternatives; the possible actions are numerous and more complicated. Moreover, these dilemmas are not considered realistic: it is argued that an autonomous vehicle cannot have enough control to choose whom to rescue while at the same time lacking enough control to avoid the accident completely. Dilemmas are also regarded as simplistic, as they ignore factors such as legal aspects and liability issues (De Freitas et al., 2020).

In addition, the questions posed by the dilemmas allow only a limited number of possible solutions, all of which are morally questionable, and it can be perceived as wrong to force people to make such decisions through these thought experiments.

The driverless dilemma is often considered a misguided approach that focuses attention on the wrong side of the issue of autonomous vehicle accidents: research should focus not on who will lose their lives, but on avoiding the accident altogether. Indeed, perhaps the most serious objection to these dilemmas is that they assume that one party will survive and the other will be killed, based on criteria that ignore the fact that all people are equal. In the simplest case, groups of people of different sizes are compared, but many scenarios suggest making decisions based on the age, gender or social class of the people involved. Moreover, if decision-making in autonomous vehicles required personal data to be taken into account, an additional problem of privacy and personal data protection would arise, as the vehicle would require access to all personal data (Holstein and Dodig-Crnkovic, 2018).

Even if the driverless dilemma could be solved, another factor that renders it ineffective is that there is not yet an established infrastructure that allows autonomous vehicles to function properly. In a smart city, an autonomous vehicle will be able to obtain detailed information about its environment and choose the solution with the best result, maximizing the benefit and/or minimizing the damage. Until all cities become smart cities, however, autonomous vehicles will have to interact with human drivers in traffic. In the current mixed environment of vehicles (smart and not) and locations (with and without smart infrastructure), the decision-making of an autonomous vehicle cannot be well-founded, because insufficient data are available. Therefore, the inequality problem would involve even more aspects than it would if smart cities were already established (Holstein and Dodig-Crnkovic, 2018).

In any case, these thought experiments are not really intended to examine every aspect of a road accident. Instead, they focus only on ethical aspects, in order to investigate which extreme behaviours of a vehicle would be accepted by the general public. This goal is best achieved if the dilemmas are formulated simply, even if that makes them less realistic. It should be borne in mind that non-experts in artificial intelligence or ethical philosophy are the majority and are the future buyers of autonomous vehicles. It is therefore important to find a means of communication between scientists and the general public, which makes the simplicity of these thought experiments a positive element. In addition, the dilemmas draw the public’s attention to the ethics of autonomous vehicles, which is desirable, since progress in a field can only take place if there is corresponding interest (De Freitas et al., 2020).

2.2 Responses to the “Driverless Dilemma”

The ethics of autonomous vehicles has attracted the attention of many researchers, who seek to define how such vehicles should be designed. On a theoretical level, this subject has been approached by, among others, Shariff et al. (2017) and Bissell et al. (2018).

The study by Liu et al. (2019) shows that, although the consequences of crashes involving an autonomous vehicle and a conventional vehicle were identical, the crash involving the autonomous vehicle was perceived as more severe. This held regardless of whether the crash was caused by the autonomous vehicle or by others and whether it resulted in an injury or a fatality. The research by De Freitas and Cikara (2020) revealed more negative reactions towards the manufacturer of an autonomous vehicle when the vehicle caused damage deliberately.

According to the study by Gao et al. (2020), most participants wanted to minimize the total number of people injured in a road accident. It was also concluded that most drivers consider not only their own safety but also that of pedestrians, as they chose to hit an obstacle rather than pedestrians. Choosing a course with obstacles in order to protect a pedestrian could also be considered a way to minimize the overall damage. Bonnefon et al. (2016) also noted that participants strongly agreed that it would be more moral for autonomous vehicles to sacrifice their own passengers when this sacrifice would minimize the number of casualties on the road. However, the same participants preferred to ride in autonomous vehicles that would protect them at all costs. According to Liu and Liu (2021), participants perceived more benefits from selfish autonomous vehicles, which protect the passenger rather than the pedestrian. They also showed a higher intention to use these vehicles and a greater willingness to pay extra for them.

The results of the research by Tripat (2020) showed that, due to the shift in accountability, autonomous vehicles seem to have also shifted people’s moral principles towards self-interest. In the case of an autonomous vehicle, the human driver’s control over the vehicle’s actions is limited, so the responsibility for any harmful consequences can be attributed to the vehicle. As a result, passengers can ensure their own protection while exempting themselves from the moral cost of harming a pedestrian. Therefore, most people would be expected to be willing to choose to harm the pedestrian when an autonomous vehicle is involved.

The research by Bigman and Gray (2020) showed that people would prefer to save a woman over a man, a younger person over an older one, a person in good physical health over a person in poor physical health, a person of higher social status over a person of lower social status, and a law-abiding person over a delinquent one. The participants would also choose to save the largest possible number of people, and would rather save the pedestrians than the passengers of the vehicle. When a third option was added that allowed the two parties involved in the dilemma to be treated equally, the vast majority chose this option, revealing that the general public wants autonomous vehicles to treat people equally.

The paper by Li et al. (2019) presents three principles that could serve as solutions to the ethical problems of autonomous vehicles: the principle of consciousness transformation, the principle of responsibility distribution, and the principle of law making. The principle of consciousness transformation involves educating people and giving them the correct amount and type of information. The principle of responsibility distribution concerns both ethical and legal aspects: small disputes over responsibility can be resolved through ethics, but complex and difficult disputes must be resolved through legal means, which is why the law-making principle is needed.

3 METHODOLOGY

Although researchers have studied the moral dilemmas of autonomous vehicles both theoretically and practically, ethical issues have not yet been resolved, since no definite conclusion has been reached as to which actions of a vehicle would constitute moral behaviour. The present analysis does not aim to recommend particular principles on which the programming of autonomous vehicles should be based. Instead, it investigates the public’s opinions about autonomous vehicles with different crash behaviours, and how these opinions could be taken into account before any crash algorithms are designed.

On this basis, the main research questions of the present analysis are the following:

- Who is considered responsible in the event of an accident involving an autonomous vehicle?
- How do people react to different scenarios of the driverless dilemma?

To answer these questions, a quantitative survey was carried out using a questionnaire of 24 closed-ended questions. The survey was conducted between July and October 2021. Questionnaires were distributed electronically via mailing lists and social media and were completed by individuals residing in Greece. The sample was random, so that participants would not share common characteristics and could represent the general population of the country. Responses were recorded from 266 participants.

The questionnaire includes the following categories:

- Demographic attributes (5 questions)
- Autonomous vehicle liability issues (2 questions)
- Thought experiments (17 questions), comprising “Driverless dilemma - Possible solutions” (5 questions), “Thought experiment with forced choice” (6 questions) and “Thought experiment with equal treatment option” (6 questions)

More precisely, to discover the participants’ views on liability issues, participants were asked whom they would hold responsible for an accident: the owner of the vehicle, the vehicle itself or the manufacturer of the vehicle. In addition, they were asked whether they would be willing to take collective action against an autonomous vehicle manufacturer in the event of a road accident caused by an autonomous vehicle.

With respect to the driverless dilemma, participants were asked four questions. The first was whether they would actively change the vehicle’s course or prefer to remain inactive. The second was whether they would prefer a larger rather than a smaller group of people to be saved. The third was which group they would save if one group could be saved only by violating the Highway Code, while the other could be saved without violating it. The fourth was whether they would prefer the vehicle to save its passengers or the pedestrians. The thought experiments also include scenarios of autonomous vehicle accidents in which participants have to decide whom to save between two options: a younger or an older person, a male or a female, a person in good or bad physical condition, a law-abiding person or one demonstrating delinquent behaviour, a person of higher or lower social status, and a person or an animal. In these choices, equal treatment is allowed at first, but the participants are then presented with the same questions under forced inequality.


Furthermore, the introduction of the questionnaire included a definition of the term “autonomous vehicle” as well as detailed descriptions of each case of the thought experiments. These were introduced to address participants’ unfamiliarity with the subject of the present analysis, assuming that they had no previous knowledge of autonomous vehicles.

4 RESULTS AND DISCUSSION

This section presents the results of the research. Table 1 shows the demographic data of the respondents. In terms of gender, the sample is almost evenly split. The majority of the participants are 29 years of age or younger and live in a big city or the capital. Most of the participants are secondary education graduates and single.

Concerning liability issues, more participants consider the owner of the vehicle responsible (50.7%) than the manufacturer (44.4%). Very few consider the vehicle itself responsible (4.9%), probably because they realize that the vehicle makes the decisions it has been programmed to make. Results also show that 60% of the participants would be willing to take collective action against an autonomous vehicle manufacturer in the event of an autonomous vehicle causing a road accident.

Regarding the autonomous vehicle dilemma, when participants were faced with the choice to maintain the vehicle’s course or change it, action and a change of course were preferred (80.5%). Saving the larger number of lives was also preferred (91.4%), as was observing the Highway Code when one group can be protected without violating it (75.6%).

The number of participants who would prefer to save the passenger in an accident is exactly the same as the number who would prefer to save the pedestrian. Not all participants who preferred saving the pedestrian were consistent with this choice; however, most of them would indeed be willing to be passengers in an autonomous vehicle that sacrifices its passengers (54.1%). If most people who would protect the pedestrian rather than the passenger were reluctant to ride in vehicles programmed to sacrifice the passenger, that would reveal a possible obstacle to the adoption of autonomous vehicles programmed with such ethical rules.

Furthermore, when faced with forced inequality, 92.9% of the participants chose to save the younger person, while 7.1% chose to save the older one. With equality allowed, 37.2% chose to save the younger person, 1.1% chose to save the older one and 61.7% chose equal treatment (Figure 1a).

Table 1: Demographic attributes of respondents.

| Attribute | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 133 | 50.0 |
| | Female | 131 | 49.2 |
| | Other | 2 | 0.8 |
| Age | ≤ 20 | 88 | 33.1 |
| | 21-29 | 91 | 34.2 |
| | 30-39 | 33 | 12.4 |
| | 40-49 | 37 | 13.9 |
| | 50-59 | 15 | 5.6 |
| | ≥ 60 | 2 | 0.8 |
| Place of residence | Big city / capital (> 100000 inhabitants) | 160 | 60.2 |
| | Suburb | 48 | 18.0 |
| | Small town (< 100000 inhabitants) | 24 | 9.0 |
| | Province (< 30000 inhabitants) | 34 | 12.8 |
| Marital status | Single | 205 | 77.0 |
| | Married | 54 | 20.3 |
| | Divorced / Separated | 6 | 2.3 |
| | Widow/er | 1 | 0.4 |
| Education | Primary school graduate | 6 | 2.3 |
| | Secondary school graduate | 146 | 54.9 |
| | Trade / technical / vocational training graduate | 7 | 2.6 |
| | Bachelor’s degree | 71 | 26.7 |
| | Master’s degree | 34 | 12.8 |
| | Doctoral diploma | 2 | 0.7 |
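The percentages in Table 1 follow from the frequencies and the sample size of 266 reported in Section 3. The short Python sketch below recomputes them as a consistency check. The dictionary `TABLE_1` and the function `percentages` are our own illustrative names, and the category labels are shortened.

```python
SAMPLE_SIZE = 266  # number of respondents reported in Section 3

# Frequencies copied from Table 1 (labels shortened).
TABLE_1 = {
    "Gender": {"Male": 133, "Female": 131, "Other": 2},
    "Age": {"<= 20": 88, "21-29": 91, "30-39": 33, "40-49": 37, "50-59": 15, ">= 60": 2},
    "Place of residence": {"Big city / capital": 160, "Suburb": 48,
                           "Small town": 24, "Province": 34},
    "Marital status": {"Single": 205, "Married": 54,
                       "Divorced / Separated": 6, "Widow/er": 1},
    "Education": {"Primary school": 6, "Secondary school": 146,
                  "Trade / technical / vocational": 7, "Bachelor's": 71,
                  "Master's": 34, "Doctoral": 2},
}


def percentages(counts: dict[str, int], n: int = SAMPLE_SIZE) -> dict[str, float]:
    """Share of the sample in each category, rounded to one decimal place."""
    if sum(counts.values()) != n:
        raise ValueError("frequencies do not add up to the sample size")
    return {category: round(100 * count / n, 1) for category, count in counts.items()}


for attribute, counts in TABLE_1.items():
    print(attribute, percentages(counts))
```

Every attribute's frequencies sum to 266. The recomputed shares match the printed ones except in two rows: Single comes out at 77.1 rather than 77.0, and Doctoral diploma at 0.8 rather than 0.7. These differences of 0.1 percentage point look like a rounding adjustment that makes each block of Table 1 sum to exactly 100%.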

In the case of forced inequality, 89.5% of the respondents preferred to save the female pedestrian, whereas 10.5% preferred to save the male pedestrian. With equality allowed, 5.3% would save the female, 0.3% would save the male, and 94.4% would choose equal treatment (Figure 1b).


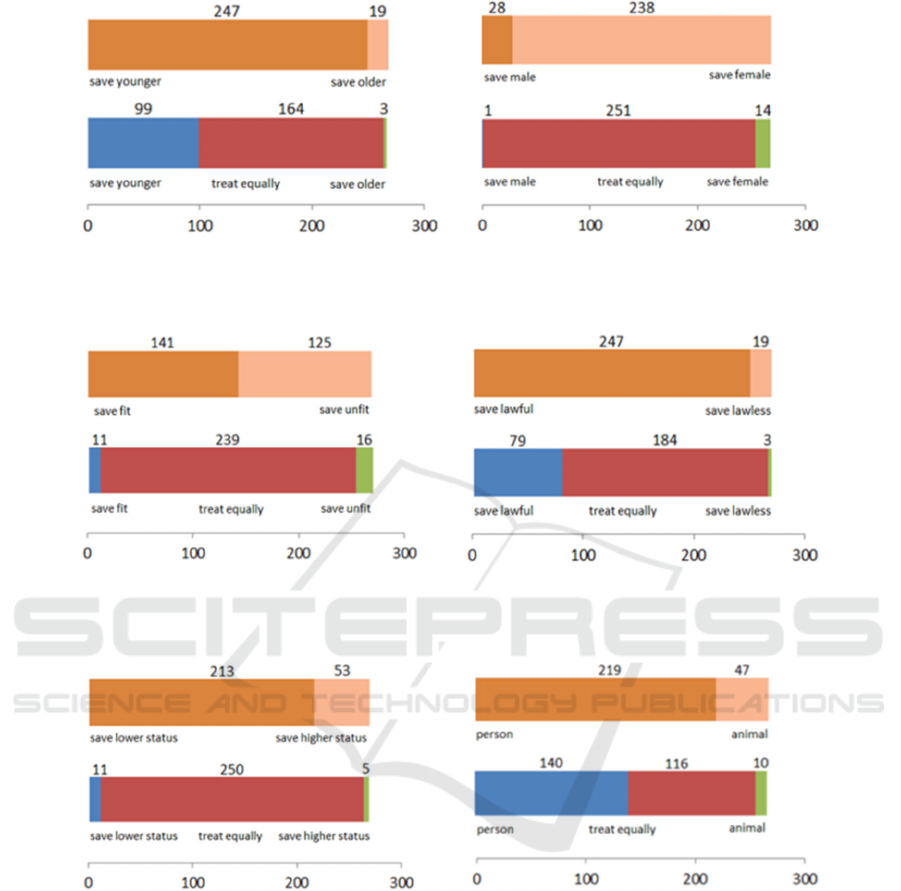

(a) (b)

Figure 1: Number of responses regarding (a) the choice between a younger and an older pedestrian, (b) the choice between

a male and a female pedestrian.

(a) (b)

Figure 2: Number of responses regarding (a) the choice between a pedestrian in good physical condition and a pedestrian in

a bad physical condition, (b) the choice between a law-abiding pedestrian and a pedestrian with delinquent behaviour.

(a) (b)

Figure 3: Number of responses regarding (a) the choice between a pedestrian of lower social status and a pedestrian of

higher social status, (b) the choice between a person and an animal.

Moreover, when no equality is allowed, 53% of the respondents find it preferable to protect a person in good physical condition, and 47% would choose in favour of a person in bad physical condition. If equality is allowed, 4.1% would save the fit person, 6% would save the unfit person and 89.9% would treat them equally (Figure 2a).

Faced with forced inequality, 92.9% of the participants chose to save the lawful person, whereas 7.1% chose to save the lawless one. With equality allowed, 29.7% would save the lawful person, 1.1% would save the lawless one, and 69.2% would prefer equal treatment (Figure 2b).

In addition, in the case of forced inequality, 80.1% of the participants chose to save the pedestrian of lower social status, while 19.9% chose to save the pedestrian of higher social status. With equal treatment allowed, 4.1% chose to save the pedestrian of lower social status, 1.9% chose to save the pedestrian of higher social status and 94% chose equality (Figure 3a).


Furthermore, when faced with forced inequality, 82.3% of the participants chose to save the human, while 17.7% chose to save the animal. With equality allowed, 52.6% chose to save the human, 3.8% chose to save the animal and 43.6% chose equal treatment (Figure 3b).

Overall, people show a preference for equal treatment of individuals. The only dilemma in which equal treatment was not chosen by the majority was the human vs. animal dilemma, in which saving the person over the animal was preferred. When there is no equal treatment option, the research reveals a strong preference for saving younger people over older people, women over men, law-abiding people over offenders, people of lower social status over people of higher social status, and humans over animals. There also appears to be a weaker preference for saving people in better physical condition over those in worse physical condition.
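The summary above can be checked directly against the reported percentages. The following Python sketch copies the figures from this section into two dictionaries and derives two things for each dilemma: the most frequent answer, and the margin in percentage points between the two leading answers. The dictionary and function names are hypothetical choices made for illustration, and the sketch uses no data beyond the percentages given in the text.

```python
# Percentages reported in Section 4 with the equal-treatment option offered.
EQUAL_ALLOWED = {
    "younger vs older": {"younger": 37.2, "older": 1.1, "equal": 61.7},
    "female vs male": {"female": 5.3, "male": 0.3, "equal": 94.4},
    "fit vs unfit": {"fit": 4.1, "unfit": 6.0, "equal": 89.9},
    "lawful vs lawless": {"lawful": 29.7, "lawless": 1.1, "equal": 69.2},
    "lower vs higher status": {"lower": 4.1, "higher": 1.9, "equal": 94.0},
    "human vs animal": {"human": 52.6, "animal": 3.8, "equal": 43.6},
}

# Percentages reported in Section 4 under forced inequality.
FORCED = {
    "younger vs older": {"younger": 92.9, "older": 7.1},
    "female vs male": {"female": 89.5, "male": 10.5},
    "fit vs unfit": {"fit": 53.0, "unfit": 47.0},
    "lawful vs lawless": {"lawful": 92.9, "lawless": 7.1},
    "lower vs higher status": {"lower": 80.1, "higher": 19.9},
    "human vs animal": {"human": 82.3, "animal": 17.7},
}


def modal_choice(shares: dict[str, float]) -> str:
    """Answer chosen by the largest share of respondents."""
    return max(shares, key=shares.get)


def margin(shares: dict[str, float]) -> float:
    """Gap in percentage points between the two most frequent answers."""
    first, second = sorted(shares.values(), reverse=True)[:2]
    return round(first - second, 1)


# Dilemmas in which equal treatment was NOT the most frequent answer.
print([d for d, s in EQUAL_ALLOWED.items() if modal_choice(s) != "equal"])

# Preferred option and its margin under forced inequality.
for dilemma, shares in FORCED.items():
    print(dilemma, modal_choice(shares), margin(shares))
```

Only the human vs. animal dilemma has an answer other than equal treatment as its most frequent choice. Under forced inequality, the fitness dilemma has by far the smallest margin (6 percentage points), which matches the "weaker preference" described above.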

A chi-squared test indicates a statistically significant association between respondents’ gender and the gender they choose to save when there is no equal treatment option (p = 0.004997 < 0.05). There is also a significant association between respondents’ age and the age they choose to save when there is no equal treatment option (p = 0.004226 < 0.05).
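The paper does not report the underlying contingency tables. The following Python sketch therefore only illustrates how a Pearson chi-squared test of independence works in the simplest 2×2 case, which has one degree of freedom. An example of such a table would be respondent gender (male, female) crossed with the gender of the pedestrian saved under forced inequality. The function names and the counts in `toy` are hypothetical and are not the study's data. The sketch omits Yates' continuity correction, and the text does not state whether the authors applied one. Larger tables, such as the age comparison, need a general chi-squared distribution; a library routine such as `scipy.stats.chi2_contingency` would be the usual choice there.

```python
import math


def chi2_sf_1dof(x: float) -> float:
    """Upper-tail probability P(X > x) of a chi-squared variable with 1 dof.

    With 1 degree of freedom X = Z**2 for a standard normal Z, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(x / 2))


def chi_squared_2x2(table: list[list[float]]) -> tuple[float, float]:
    """Pearson chi-squared test of independence for a 2x2 table (no Yates)."""
    if len(table) != 2 or any(len(row) != 2 for row in table):
        raise ValueError("expected a 2x2 table")
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    n = sum(row_totals)
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            # Count expected in cell (i, j) if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (table[i][j] - expected) ** 2 / expected
    return statistic, chi2_sf_1dof(statistic)


# Hypothetical counts, NOT the study's data:
# rows = respondent gender (male, female), columns = pedestrian saved (female, male).
toy = [[30, 10], [15, 25]]
stat, p = chi_squared_2x2(toy)
print(f"chi2 = {stat:.3f}, p = {p:.5f}")
```

A p-value below 0.05, as reported for both comparisons above, means that the observed counts would be unlikely if the two answers were independent. It does not by itself say how strong the association is.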

At this point, it is of interest to relate the present research to previous studies. The present study combines the questions raised by Gao et al. (2020) with the dilemma cases presented in Bigman and Gray (2020) and adds questions of liability to them. Compared to the research by Bigman and Gray (2020), the reactions of our respondents were similar concerning gender, age, fitness and lawfulness. However, our participants seem to regard saving people of lower social status as more favourable. Our participants also prefer to take action during a critical situation on the road, actively choosing whether to change course or stay on the same course instead of abstaining from the decision. The participants in the research by Bigman and Gray (2020), by contrast, would choose to remain inactive. Our participants’ responses also confirm what the study by Gao et al. (2020) has shown, namely that people generally choose to save the greater number of people and are concerned about the safety of pedestrians, often choosing to protect the pedestrian instead of the passenger.

5 CONCLUSIONS

Autonomous vehicles have the potential to offer great advantages from a social, economic and environmental point of view, as long as the ethical issues related to them are resolved. On this basis, the purpose of the present study was to investigate people’s views on which behaviours of autonomous vehicles in critical situations and road accidents should be deemed acceptable.

According to the results of the present analysis, participants hold primarily the owner, and secondarily the manufacturer, responsible for faults in the behaviour of the vehicle. This implies that people regard the owner as accountable for mistakes that result in a crash, while holding the manufacturer responsible for minimizing the unreliability of the software. In addition, most respondents thought that autonomous vehicles should make utilitarian decisions and behave in a way that ensures the greater good. Specifically, saving the greater number of people and sacrificing the passenger in favour of the pedestrian seem to be perceived as more acceptable actions. A particularly important finding concerns the strong preference for equal treatment: the results suggest that people consider unbiased behaviour on the part of the vehicle more justifiable.

Regarding the limitations of our research, only a few answers were recorded from participants over 50 years of age. Although this means that the participants may not be representative of the general population, they can at least be considered representative of the first buyers of autonomous vehicles. However, it would be appropriate to repeat the research with a larger sample to examine whether the findings remain consistent. Furthermore, the majority of respondents are not familiar with the subject of the study, since autonomous vehicles are not expected to become a reality by 2025. Future research should therefore take into account participants’ lack of information and experience. Finally, the present work has addressed only a small range of the ethical issues that are expected to arise with the introduction of autonomous vehicles.

Future research should be extended to other contexts, such as the development of technology acceptance models related to the ethics of autonomous vehicles, by revealing people’s perceptions of morality. Further questions that future research could explore include the role of the authorities in holding the owner and manufacturer of autonomous vehicles liable for the vehicles’ behaviour, and the correlation between participants’ dilemma responses and their willingness to own an autonomous vehicle.

REFERENCES

Bigman Y.E., Gray K. (2020), Life and death decisions of autonomous vehicles, Nature, 579, E1–E2.

Bissell D., Birtchnell T., Elliott A., Hsu E.L. (2018), Autonomous automobilities: The social impacts of driverless vehicles, Current Sociology, 68(1), 116–134, doi:10.1177/0011392118816743.

Bonnefon J.F., Shariff A., Rahwan I. (2016), The Social Dilemma of Autonomous Vehicles, Science, 352, doi:10.1126/science.aaf2654.

De Freitas J., Cikara M. (2020), Deliberately prejudiced self-driving vehicles elicit the most outrage, Cognition, 208, 104555, doi:10.1016/j.cognition.2020.104555.

De Freitas J., Censi A., Di Lillo L., Anthony S.E., Frazzoli E. (2020), From Driverless Dilemmas to More Practical Ethics Tests for Autonomous Vehicles, PsyArXiv preprint, doi:10.31234/osf.io/ypbve.

Gao Z., Sun Y., Hu H., Zhang T., Gao F. (2020), Investigation of the instinctive reaction of human drivers in social dilemma based on the use of a driving simulator and a questionnaire survey, Traffic Injury Prevention, 21(4), 254–258, doi:10.1080/15389588.2020.1739274.

Gless S., Silverman E., Weigend T. (2016), If robots cause harm, who is to blame? Self-driving cars and criminal liability, New Criminal Law Review, 19(3), 412–436.

Holstein T., Dodig-Crnkovic G., Pelliccione P. (2018), Ethical and Social Aspects of Self-Driving Cars, arXiv:1802.04103.

Li G., Li Y., Gao Z., Chen F. (2019), Ethical Thinking of Driverless Cars, In Proceedings of the 2019 International Conference on Modern Educational Technology (ICMET 2019), Association for Computing Machinery, New York, NY, USA, 91–95.

Liu P., Du Y., Xu Z. (2019), Machines versus humans: People’s biased responses to traffic accidents involving self-driving vehicles, Accident Analysis & Prevention, 125, 232–240, ISSN 0001-4575.

Liu P., Liu J. (2021), Selfish or Utilitarian Automated Vehicles? Deontological Evaluation and Public Acceptance, International Journal of Human–Computer Interaction, 37, 1231–1242, doi:10.1080/10447318.2021.1876357.

Lohmann M.F. (2016), Liability Issues Concerning Self-Driving Vehicles, European Journal of Risk Regulation, Cambridge University Press, 7(2), 335–340, doi:10.1017/S1867299X00005754.

Luzuriaga M., Heras A., Kunze O. (2019), Hurting Others vs Hurting Myself, a Dilemma for our Autonomous Vehicle.

Schoettle B., Sivak M. (2015), A preliminary analysis of real-world crashes involving self-driving vehicles, University of Michigan Transportation Research Institute, UMTRI-2015-34.

Shariff A., Bonnefon J.F., Rahwan I. (2017), Psychological roadblocks to the adoption of self-driving vehicles, Nature Human Behaviour, 1, doi:10.1038/s41562-017-0202-6.

Tripat G. (2020), Blame It on the Self-Driving Car: How Autonomous Vehicles Can Alter Consumer Morality, Journal of Consumer Research, 47(2), 272–291.

Yang J., Coughlin J.F. (2014), In-vehicle technology for self-driving cars: advantages and challenges for aging drivers, International Journal of Automotive Technology, 15(2), 333–340, doi:10.1007/s12239-014-0034-6.
