Let’s Play! or Don’t? The Impact of UX and Usability on the Adoption of a Game-based Student Response System

Myrian Rodriguesᵃ, Barbara Neryᵇ, Miguel Castroᶜ, Victor Klismanᵈ, José Carlos Duarteᵉ, Bruno Gadelhaᶠ and Tayana Conteᵍ

Institute of Computing, Federal University of Amazonas (UFAM), Manaus-AM, Brazil

Keywords:

Game-based Learning, Game-based Student Response, Kahoot!, Remote Learning, Teachers’ Perspective,

Usability, User Experience.

Abstract:

Remote teaching emerged as an alternative to face-to-face classes during the COVID-19 pandemic. In this

scenario, teachers adopt formative assessments through different approaches. One of these approaches is

Game-based Student Response Systems (GSRS). Kahoot! is a prominent GSRS widely adopted in the educa-

tional context. Previous studies investigated the effects and use of Kahoot! by students. Still, none of them

reports the teachers’ perception of its Usability and User Experience (UX), attributes that influence the tool’s

adoption. This paper presents the usability and UX evaluation of Kahoot! from the point of view of teachers

and students. To comparatively visualize the difference in the experience of the two profiles of platform users,

we included five students and five teachers in the study. The evaluation results showed that teachers were more

dissatisfied, although the positive and negative emotions were similar for the two profiles. We then conducted

interviews with the teachers to understand the motives behind their dissatisfaction. The interviews helped us

determine which aspects related to usability and UX teachers perceived as critical during the use of Kahoot!.

1 INTRODUCTION

Due to the COVID-19 pandemic (WHO, 2020), social isolation measures were adopted to contain the spread of the virus, directly affecting the continuity of in-person classes. Teaching activities became predominantly remote in most countries to minimize the effects of isolation during the school period (Misirli and Ergulec, 2021). As a result, even lecturers and institutions that had previously resisted new technologies had to adapt their practices and methods. Consequently, classes needed to become more dynamic to promote student engagement (Dhawan, 2020).

However, according to Wang and Tahir (2020), most teachers agree that maintaining students’ concentration, motivation, and active participation during a class is challenging.

ᵃ https://orcid.org/0000-0002-7152-7331
ᵇ https://orcid.org/0000-0002-2512-6625
ᶜ https://orcid.org/0000-0003-2289-4271
ᵈ https://orcid.org/0000-0001-6041-8124
ᵉ https://orcid.org/0000-0001-5732-9729
ᶠ https://orcid.org/0000-0001-7007-5209
ᵍ https://orcid.org/0000-0001-6436-3773

In this context, formative assessments emerge as an alternative to foster student engagement. They affect students’ motivation and promote active involvement in their learning (Marchisio et al., 2020). Formative assessment comprises activities that support students during knowledge acquisition, providing evidence-based support that helps them assess the quality of their progress (Marchisio et al., 2020). One way to implement it in the current scenario is through Game-Based Student Response Systems (GSRSs) (Wang and Tahir, 2020). GSRSs incorporate gamification elements into Student Response Systems (SRSs), which are used to present multiple-choice questions and enable students to solve them together (Owen and Licorish, 2020; Wang, 2015).

An example of a GSRS that has been growing in

recent years is Kahoot!, a platform designed to meet

the needs of students and education professionals who

want to create, practice, and share learning games in

an attractive and engaging setting (Wang and Tahir,

2020; Kahoot!, 2021). Furthermore, Kahoot! has also

been frequently used to review knowledge and carry

out formative assessments (Wang and Tahir, 2020).

Rodrigues, M., Nery, B., Castro, M., Klisman, V., Duarte, J., Gadelha, B. and Conte, T.
Let’s Play! or Don’t? The Impact of UX and Usability on the Adoption of a Game-based Student Response System.
DOI: 10.5220/0011065800003182
In Proceedings of the 14th International Conference on Computer Supported Education (CSEDU 2022) - Volume 1, pages 273-280
ISBN: 978-989-758-562-3; ISSN: 2184-5026
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Since the launch of Kahoot!, researchers have published many studies of its effects in the classroom. Wang and Tahir (2020) analyzed 93 studies that address the effect of using Kahoot! for learning and surveyed the perceptions of students and teachers about using the tool. However, they do not report any issues or perceptions regarding the platform’s ease of use, usability, or user experience (UX), especially from the teachers’ perspective. Usability and UX influence the acceptance or rejection of a system, as they are essential aspects of tool adoption by users. Therefore, they deserve in-depth investigation (Hassenzahl, 2018; Van der Heijden, 2004).

This paper investigates teachers’ perceptions regarding the usability and UX of Kahoot!. We chose Kahoot! as the object of study because it is one of the most used GSRSs today and is suitable for carrying out formative assessments in remote environments (Wang and Tahir, 2020; Wang, 2015). We chose the teachers’ point of view because teachers are essential to creating and maintaining an up-to-date environment for constructing knowledge, which in online settings depends on the support of technological tools (Heitink et al., 2016). The choice of technology and its integration into the classroom largely depend on initial teacher adoption.

We conducted the evaluation using Usability Tests with the Cooperative Evaluation technique (Dix et al., 2004) and UX Evaluations with AttrakDiff (Hassenzahl et al., 2003), PrEmo (Du, 2019), and semi-structured interviews (Longhurst, 2003). Five teachers and five students participated in the evaluations to verify whether Kahoot! would present satisfactory results for the two user profiles on both the mobile and desktop versions. We then interviewed the teachers who participated in the study to gather their perceptions of usability and UX and to identify whether these influenced the adoption and use of Kahoot! in online classes.

Through the investigation results, this paper presents information related to the usability and UX of Kahoot!, which can encourage improvements in GSRS tools, especially for the teacher profile. We hope to contribute to developing technologies that bring good usability and UX to students and teachers.

2 BACKGROUND AND RELATED WORKS

Game-based learning enables new interactions be-

tween teachers and students, transforming lessons

into more dynamic, engaging, and collaborative ac-

tivities (Wang and Tahir, 2020; Nicholson, 2015).

Several studies demonstrate that most game-based

learning tools such as GSRSs have a positive effect

compared to traditional learning methods (Wang and

Tahir, 2020; Wang and Lieberoth, 2016).

2.1 Game-based Student Response System

Game-based learning environments utilize game principles and apply them in the learning context to make users more engaged and invested in their learning (Cárdenas-Moncada et al., 2020; Pho and Dinscore, 2015). A new generation of SRSs, the GSRSs, has become popular in the last decade. This new generation adds game-based learning elements such as ranking, sound effects, rating, and nicknames (Cárdenas-Moncada et al., 2020; Wang et al., 2019). GSRSs are interactive learning tools that allow students to answer questions in real time, obtain class performance statistics, and participate in formative assessments (Owen and Licorish, 2020; Cárdenas-Moncada et al., 2020).

Empirical studies show that GSRSs positively impact student learning, motivation, and focus (Cárdenas-Moncada et al., 2020; Owen and Licorish, 2020). For teachers, these are essential characteristics of engagement in a remote context (Capone and Lepore, 2021). An example of a GSRS that has become popular in recent years is Kahoot! (Wang and Tahir, 2020). In Kahoot!, the classroom becomes a game show, with the teacher playing the presenter role and the students becoming competitors (Cárdenas-Moncada et al., 2020; Wang, 2015). Kahoot! focuses primarily on engagement through gamification: students are motivated to collaborate and compete, in teams or individually, through interactive quizzes, aiming to obtain a higher ranking (Wang and Tahir, 2020; Wang et al., 2019).

Kahoot! is considered the most popular and cur-

rently the most used GSRS (Wang and Tahir, 2020).

Its popularity and use in classrooms have motivated

several studies evaluating its effects on students and

the teaching process.

2.2 Studies Evaluating Kahoot! and Similar Tools

Wang and Tahir (2020) performed a literature re-

view of 93 studies focusing on the following research

topics: the effect on learning using Kahoot!, how

Kahoot! affects the classroom dynamic, how Ka-

hoot! affects student anxiety, the students’ percep-

tions about how Kahoot! affects their learning and

how Kahoot! affects teachers’ perceptions.


However, while the authors report the teachers’ perceptions, the review focuses on a single teacher profile: those most engaged with technology.

Wang and Tahir’s literature review also highlighted a notable increase in publications related to Kahoot!. Among the selected papers, about 88% investigate how students perceive the use of Kahoot! in learning, 39% focus on acquired learning, 35% focus on the impact on classroom dynamics, and only 11% focus on how Kahoot! affects teachers’ perceptions, highlighting a possible gap to be studied and discussed.

Studies such as those by Göksün and Gürsoy (2019), Licorish and George (2018), and Wang (2015) also discuss user perception and increased engagement when using various GSRSs in the classroom. However, they do not directly address perceptions or evaluations regarding the usability and UX of these tools. Moreover, although the review by Wang and Tahir (2020) briefly presents the teachers’ perspective on Kahoot!, it only emphasizes pedagogical or technical challenges related to its implementation and does not report perceptions of the ease of use, usability, or experience of using the platform.

2.3 Usability and UX in Learning Tools

According to the ISO (ISO, 2019), Usability is “the

extent to which a system, product or service can be

used by users to achieve certain goals with effective-

ness, efficiency, and satisfaction in a certain context of

use”. As stated by Nielsen and Loranger (2006), the

concept of usability is related to five criteria: learn-

ing, memorization, error prevention, efficiency, and

satisfaction.

ISO 9241-210:2019 defines User Experience (UX) as “user perceptions and responses resulting from the use or anticipation of the use of a product, system or service”. In addition, it states that user experience is related to three main criteria: usefulness, ease of use, and pleasure (ISO, 2019). The user experience encompasses all aspects of the interaction with a product. It comprises users’ perceptions and responses related to pragmatic aspects, linked to objectives and to effective and efficient means of manipulating the environment, and to hedonic aspects, linked to the individual’s self and psychological well-being, providing stimulation, identification, or evoking memories (Hassenzahl, 2018).

The increasing number of software products on the market has made quality improvement a differentiator for their success and expansion (Beauregard et al., 2007). Among the efforts made to improve the quality of these products are Usability Tests (Lewis, 2006) and UX evaluations (Vermeeren et al., 2010).

In a usability-testing session, evaluators observe one or more participants performing specific tasks with the product in a test environment (Lewis, 2006). Among the existing techniques for carrying out usability tests, the Cooperative Evaluation technique (Dix et al., 2004) encourages the user to criticize the system under evaluation and allows evaluators to clarify confusing points during the test (Følstad and Hornbæk, 2010; Dix et al., 2004). UX evaluations (Vermeeren et al., 2010) are assessments used to obtain evidence about the use of a specific technology by collecting UX data (Rivero and Conte, 2017).

We used three techniques to collect UX data:

Product Emotion Measure (PrEmo) (Du, 2019),

AttrakDiff (Hassenzahl et al., 2003), and Semi-

structured Interviews (Longhurst, 2003). PrEmo is

a non-verbal self-report method that measures four-

teen emotions that are often triggered by the prod-

uct experience. Of these emotions, seven are pleasant

(admiration, joy, desire, pride, hope, fascination, and

satisfaction), and seven are unpleasant (sadness, fear,

dissatisfaction, shame, monotony, disgust, and con-

tempt) (Desmet, 2018; Laurans and Desmet, 2017;

Du, 2019). AttrakDiff, on the other hand, assesses

user feelings using a questionnaire with twenty-eight

items, in addition to studying the hedonic and prag-

matic dimensions of UX with semantic differentials.

The results are quantitative and comparative data,

which assess the perception of the experiences, not

the actual experiences (Hassenzahl et al., 2003).
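As a rough illustration of how questionnaire answers of this kind can be turned into dimension scores, the sketch below averages seven items per dimension. It rests on assumptions that are not stated in this paper: that each item is coded from -3 to +3, that the 28 items split evenly across PQ, HQ-I, HQ-S, and ATT, and that an overall HQ value is the mean of HQ-I and HQ-S. The function name `score_attrakdiff` and the example answers are hypothetical.

```python
from statistics import mean

# Assumption: each of the 28 items is answered on a 7-point semantic
# differential, coded from -3 (negative pole) to +3 (positive pole),
# and grouped into four dimensions of seven items each.
DIMENSIONS = ("PQ", "HQ-I", "HQ-S", "ATT")
ITEMS_PER_DIMENSION = 7


def score_attrakdiff(responses: dict[str, list[int]]) -> dict[str, float]:
    """Return the mean score per dimension for one participant."""
    scores = {}
    for dim in DIMENSIONS:
        items = responses[dim]
        if len(items) != ITEMS_PER_DIMENSION:
            raise ValueError(f"{dim} needs {ITEMS_PER_DIMENSION} items")
        if any(not -3 <= value <= 3 for value in items):
            raise ValueError(f"{dim} has a value outside -3..3")
        scores[dim] = mean(items)
    # Assumption: overall hedonic quality is the mean of its two sub-dimensions.
    scores["HQ"] = mean([scores["HQ-I"], scores["HQ-S"]])
    return scores


# Hypothetical answers from a single participant (not data from the study).
example = {
    "PQ": [1, 0, 2, 1, 0, 1, 2],
    "HQ-I": [2, 1, 1, 2, 0, 1, 0],
    "HQ-S": [0, 1, 0, 1, 1, 0, 1],
    "ATT": [2, 2, 1, 1, 2, 1, 2],
}
print(score_attrakdiff(example))
```

Group-level values such as those compared later for teachers and students would then be averages of these per-participant scores.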

Moreover, to collect more detailed information re-

garding the perceptions of use, we conducted Semi-

structured interviews, a verbal approach where the re-

searcher and subjects exchange information through

questions and answers while maintaining the flexibil-

ity to investigate essential points by including more

questions (Longhurst, 2003).

3 METHODOLOGY

Given the influence of Usability and UX on the ac-

ceptance and adoption of a system (Hassenzahl, 2018;

Van der Heijden, 2004), we carried out a study to eval-

uate such attributes in the Kahoot! tool. Our work

contributes to the gap identified in the literature re-

garding the Usability and UX Evaluation of GSRSs.

To evaluate the tool from a more general point of view, we included teachers and students in the initial phase of the study. We used Kahoot! in its two available versions, mobile and desktop.


The evaluation took place virtually through Google Meet, where we invited participants to perform the objectives and tasks proposed to them in Kahoot!. This stage constituted the Usability Test (Lewis, 2006), and the technique used was the Cooperative Evaluation (Dix et al., 2004). At the end of each objective and task, participants answered an online form on Google Forms to report their emotions by choosing images that represented them. This activity was part of the UX Evaluation (Vermeeren et al., 2010), using the PrEmo technique (Du, 2019). After completing all the objectives proposed for the evaluations, the participants were invited to characterize their experience of using the platform in an online questionnaire¹ using the AttrakDiff technique (Hassenzahl et al., 2003). The last stage of the UX Evaluation consisted of an interview with the teachers who participated in the study.

3.1 Participants

The Usability Test and UX Evaluation included ten

participants aged between 18 and 60 years: five teach-

ers from different backgrounds and five university stu-

dents. One of the criteria for selecting the participat-

ing teachers was that they had never used Kahoot! in

their classroom. However, the previous use of the tool

with the student profile was not an exclusion factor.

For the participating students, we required that they

had no previous experience with the platform. Fur-

thermore, we considered only the teachers for the in-

terview stage because we wanted to understand what

they disliked about the platform.

3.2 Usability Test

To carry out the Usability Test, we established a

roadmap with the general objectives of the partici-

pants, which are available in the complementary ma-

terials (Rodrigues et al., 2022). For teachers, we set

the following objectives:

• Objective 1: Explore the access and registration on the Kahoot! website as a teacher.
• Objective 2: Explore creating kahoots.
• Objective 3: Explore Kahoot!’s gameplay.
• Objective 4: Explore the settings.
• Objective 5: Explore Kahoot!’s groups.

For the students, we defined the following objectives:

• Objective 1: Explore the access and registration on the Kahoot! website as a student.
• Objective 2: Explore Kahoot!’s gameplay.
• Objective 3: Explore Kahoot!’s gameplay.
• Objective 4: Explore the “Discover” section.

¹ http://www.attrakdiff.de

We adopted four metrics during the Usability Test: “Time taken to complete a task,” measured in seconds, “Number of errors made,” “Number of times the participant expressed confusion,” and “Number of times the participant asked for help from the moderator.” We established these metrics according to their impact on the user experience. For example, a participant failing to complete a task is severe: it indicates that using the application is highly frustrating and prevents the application from effectively achieving its purpose.
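The four metrics above could be recorded per participant and per objective and then summed for comparison. The following is only a minimal sketch of one possible record layout; the class `TaskObservation`, the function `totals_per_objective`, and all values in `sample` are hypothetical and do not come from the study.

```python
from dataclasses import dataclass


@dataclass
class TaskObservation:
    """One participant's attempt at one objective (hypothetical record layout)."""
    participant: str
    objective: int
    seconds_to_complete: float
    errors: int
    confusion_count: int
    help_requests: int


def totals_per_objective(
    observations: list[TaskObservation],
) -> dict[int, dict[str, float]]:
    """Sum the four metrics for each objective across participants."""
    totals: dict[int, dict[str, float]] = {}
    for obs in observations:
        row = totals.setdefault(
            obs.objective,
            {"seconds": 0.0, "errors": 0, "confusion": 0, "help": 0},
        )
        row["seconds"] += obs.seconds_to_complete
        row["errors"] += obs.errors
        row["confusion"] += obs.confusion_count
        row["help"] += obs.help_requests
    return totals


# Made-up values for illustration only; they are not results from the study.
sample = [
    TaskObservation("T1", 2, 310.0, 2, 3, 1),
    TaskObservation("T2", 2, 275.0, 1, 2, 0),
    TaskObservation("T1", 3, 420.0, 4, 5, 2),
]
print(totals_per_objective(sample))
```

Keeping one record per participant and objective makes it straightforward to see which objective accumulates the most errors or expressions of confusion.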

3.3 UX Evaluation and Interviews

We performed the UX Evaluation with the PrEmo technique during the Usability Test, right after the participant completed each objective and its proposed tasks. We aimed to obtain an immediate perception of the activities. The AttrakDiff questionnaire was applied after the participants completed all the proposed objectives for the Usability Test. After analyzing the results of both techniques, we conducted interviews with the teachers to investigate their perceptions regarding the usability and user experience of Kahoot! and the impact these perceptions would have on teachers’ adoption of the platform in online classes.

The interviews had twenty guiding questions, available in full in (Rodrigues et al., 2022). Given that some teachers had previous experience as students, the interview questions were mainly about their experience with the platform before and after the first use. We also aimed to gather their impressions of Kahoot!’s interface and whether or not they would adopt it in their online classes.

4 RESULTS AND DISCUSSION

This section presents the results obtained in the Us-

ability Tests and UX Evaluations performed with

teachers and students. We also present teachers’ per-

ceptions on the use of Kahoot! as a result of the semi-

structured interviews.

4.1 Usability Test: Cooperative Evaluation

After analyzing the results obtained through the Cooperative Evaluation (Rodrigues et al., 2022), we observed that the participants had difficulties achieving some objectives and their respective tasks. Teachers had greater challenges with Objective 2 (Explore creating kahoots) and Objective 3 (Explore Kahoot!’s gameplay). Some participants reported a lack of consistency in the platform: although Kahoot! was configured to their local language, it presented some pages and terms in English, demonstrating a lack of standardization.

Objective 3 had higher numbers of errors and of expressions of confusion, which shows that teachers found it more challenging than the other proposed activities. Once again, we can observe that Kahoot! does not offer straightforward navigation.

4.2 UX Evaluation

In the UX Evaluation, we observed the experiences

perceived in pragmatic and hedonic terms that Ka-

hoot! brought to the participants. Below, we present

in more detail the results of PrEmo and AttrakDiff.

4.2.1 PrEmo

This method measured the UX through the emotions reported by the participants when performing the objectives and tasks defined for the Usability Test. The results are available in (Rodrigues et al., 2022). During the UX Evaluations, teachers reported emotions such as satisfaction, dissatisfaction, and monotony. The first is classified as a positive emotion and the other two as negative or neutral emotions. The number of negative emotions was close to the number of positive ones: 95 were negative and 91 were positive. This result suggests that teachers felt neutral about the experience of using the tool.

In the students’ results, the most reported emotions were satisfaction and joy, which are classified as positive. Therefore, we conclude that the students’ experience was good when performing the proposed objectives and tasks, as positive emotions were reported 77 times, against 41 reports of emotions classified as negative or neutral.
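To make the two profiles easier to compare, the reported counts can be expressed as shares of all reported emotions. The short sketch below does only this arithmetic on the counts given in the text; the helper name `positive_share` is hypothetical.

```python
def positive_share(positive: int, negative_or_neutral: int) -> float:
    """Fraction of all reported emotions that were positive."""
    total = positive + negative_or_neutral
    if total == 0:
        raise ValueError("no emotions reported")
    return positive / total


# Counts as reported in the text above.
teachers = positive_share(positive=91, negative_or_neutral=95)
students = positive_share(positive=77, negative_or_neutral=41)

print(f"teachers: {teachers:.1%}")  # teachers: 48.9%
print(f"students: {students:.1%}")  # students: 65.3%
```

Under this reading, slightly under half of the teachers' reported emotions were positive, against roughly two thirds for students.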

4.2.2 AttrakDiff

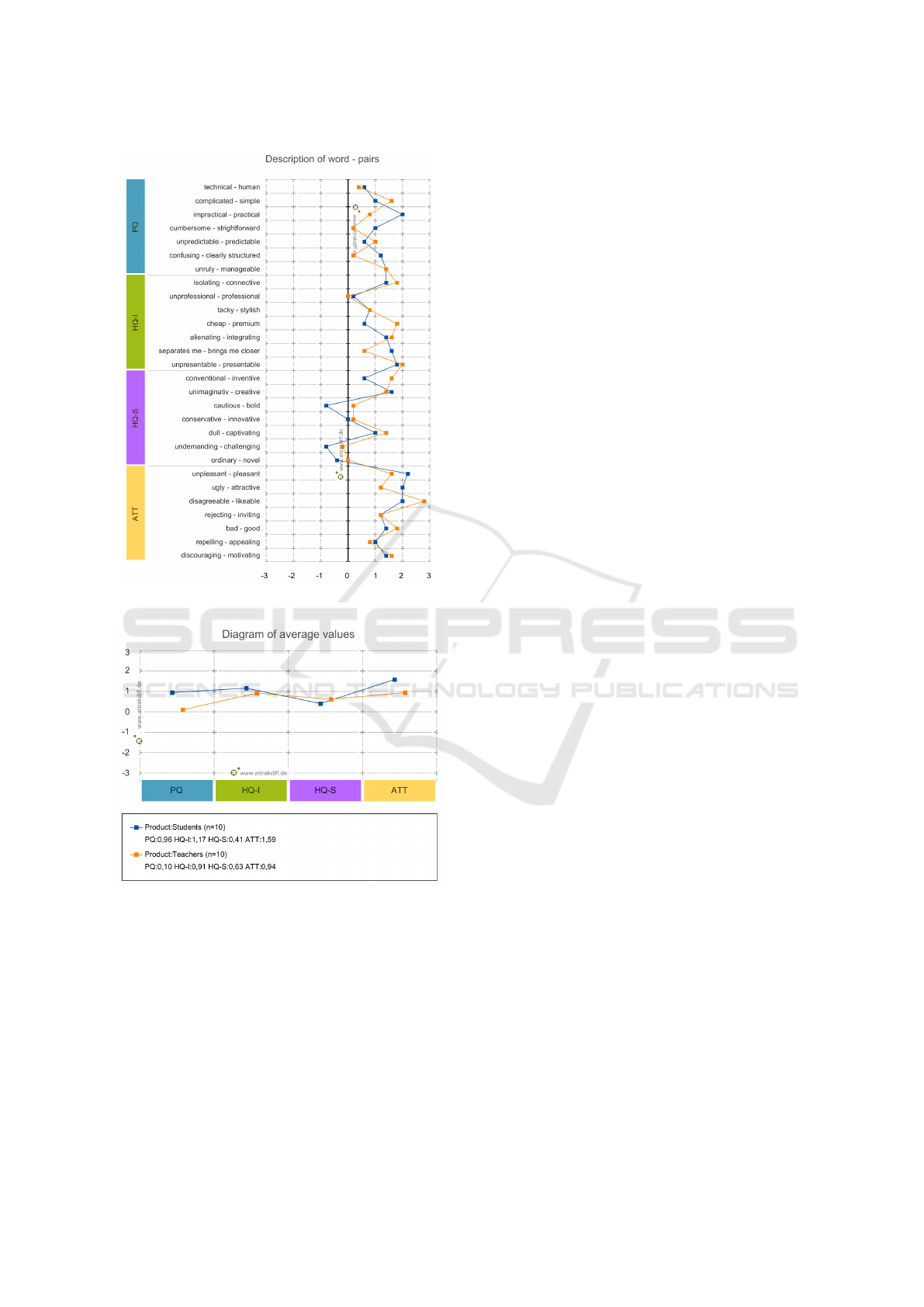

In Figure 1, we can observe that the teachers’ experi-

ence was not as positive as that of the students. Some

words chosen among the pairs were on the horizontal

axis with values close to 0 and some close to -1. This

result indicates that teachers rated Kahoot! as tech-

nical, confusing, and ordinary. Therefore, teachers

consider that these attributes of Kahoot! can be im-

proved.

We can observe that the teachers’ experience, in general, was less satisfactory. Teachers gave more neutral results with little variance, indicating an opportunity to improve Kahoot!. Students, on the other hand, showed a positive result, as their mean values are all positive and some are higher than 1. The average values of the PQ and HQ dimensions are available in (Rodrigues et al., 2022).

PQ-related results describe Kahoot!’s usability

and how users achieve the objectives when using it.

Figure 2 shows that teachers believed that Kahoot!

would help them achieve their goals on the platform.

However, they also had reservations, as their indica-

tive values of pragmatic qualities are close to zero. On

the other hand, students expressed higher values re-

garding pragmatic qualities, demonstrating that they

were more confident that the platform would help

them achieve the proposed objectives. The results of

the HQ-I dimension, which explores users’ identifi-

cation with Kahoot!, indicated that the platform gen-

erated similar identification in students and teachers.

Regarding the HQ-S, which indicates how much Kahoot! meets the users’ needs in generating interest and stimulation, we can see that the tool also offered similar stimulation and motivation to teachers and students. As for the ATT, which describes the general quality of Kahoot! perceived by users, we can infer that teachers perceived the platform as less attractive than the students did, as their values were closer to zero.

Figure 2 shows that students’ experience was gen-

erally positive and without significant variations in

terms of perceptions, as their values for PQ, HQ-I,

and ATT remained moderate, with attention only to

the HQ-S dimension. As its value was closer to zero,

we infer that the platform was moderately stimulat-

ing.

According to Figure 2, the average pragmatic quality from the teachers’ point of view (PQ=0.10) was lower than from the students’ (PQ=0.96). This indicates that teachers rated Kahoot! as less efficient and effective than students did. Moreover, the average hedonic quality was slightly lower for teachers (HQ=0.77) than for students (HQ=0.79), implying that teachers found the platform slightly less stimulating.
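The size of each difference can be read directly from the reported means. The snippet below is a simple worked subtraction using only the four values quoted above; the -3 to 3 scale mentioned in the comment is an assumption about the questionnaire coding, not something stated in this paper.

```python
# Mean AttrakDiff values reported in the text (scale assumed to run from -3 to 3).
reported = {
    "PQ": {"teachers": 0.10, "students": 0.96},
    "HQ": {"teachers": 0.77, "students": 0.79},
}

# Students' mean minus teachers' mean, rounded to the precision of the source.
gaps = {
    dim: round(values["students"] - values["teachers"], 2)
    for dim, values in reported.items()
}
print(gaps)  # {'PQ': 0.86, 'HQ': 0.02}
```

The gap in pragmatic quality is far larger than the gap in hedonic quality, which is consistent with the observation that the two profiles differ mainly in perceived usability rather than in stimulation.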

4.2.3 Interviews

According to the data collected during the interviews, teachers pointed out the students’ good relationship with the GSRS: some students had already shown interest in using Kahoot! in the classroom, which motivated the teachers to adopt the platform. In addition, teachers who had already used Kahoot! as students had positive memories of its use, which made them want to adopt the tool in their classrooms. “Kahoot! brought me a vision of when I was a student, and I wanted my students to feel the stimulation I felt as a student, all in a dynamic, cool, and fun way through a competition...”, reported one of the teachers.

Figure 1: Teachers’ and students’ word pairs.

Figure 2: Diagram of average values of AttrakDiff dimensions.

However, this previous positive experience with the features developed for students created high expectations in the teachers. When teachers use the resources specific to their profile and their expectations are not met, they feel frustrated. We can observe this in the words of one of the teachers with previous experience as a student: “This thing of creating an expectation is terrible, because when I used it for the first time it was as a student, and I thought it was cool, pretty and easy to use. So I thought I would like to use it in my classrooms and show it to my students. I thought it would be easy for me as a teacher to use, and it wasn’t.”

Teachers who had never tried Kahoot! but were receptive to using new technologies to streamline classes and carry out formative assessments reported dissatisfaction with the platform’s lack of standards. They reported issues related to the language and the lack of intuitiveness in the interface when trying to achieve the proposed objectives, such as the creation of new kahoots. We captured screenshots of some of the interface issues that teachers reported (Rodrigues et al., 2022).

Among the interface problems teachers reported, we can cite:

• An interface that is not visually pleasing, with solid colors that are tiring for the eyes;
• Functions that are difficult to access and not arranged simply, as well as symbols that are confusing and difficult to understand;
• Difficulty in using some features, such as adding media to the created kahoot.

4.3 Discussion

After analyzing the results of the Usability and UX Evaluation with the PrEmo and AttrakDiff techniques, we found that the teachers’ results tended to be slightly negative but primarily neutral. However, they reported being dissatisfied with Kahoot!’s usability and UX. This divergence motivated us to interview the teachers to understand their dissatisfaction, despite the neutral results.

Some teachers had their first contact with Kahoot! as students, before the study. However, when they tried the resources developed for teachers, these did not live up to their expectations. Possible factors contributing to this frustration are the difficulty of use in essential tasks and the increased handling complexity during the execution of the tasks, as shown in the results of the Cooperative Evaluation and AttrakDiff. We can also attribute teachers’ dissatisfaction to the loss of the playful, pleasant, and easy-to-handle aspect that the tool’s interface offers for the student profile, as evidenced in the interviews.

Teachers who first used Kahoot! with the teacher profile tended to have more negative opinions about the platform. These teachers highlighted that, for better acceptance of the platform in their pedagogical practices, it would need improvements in usability and UX for the teacher profile.

Although Kahoot! did not meet teachers’ expec-

tations, some of them wanted to adopt the tool. The

main factor was the desire to provide their students

with the same positive experience they had previously

as students on the platform, even if they had to over-

come obstacles and challenges. Consequently, the

fact that some teachers were already motivated to use

Kahoot! in their classes may have influenced the neu-

trality of the evaluation results. However, even a pre-

vious motivation was not enough to change the per-

ceptions after using Kahoot! as a teacher.

Regarding problems perceived in the platform interface, we observed that elements such as the color scheme need reassessing, as the participating teachers did not receive them well. Teachers reported problems regarding the interface’s intuitiveness, such as features that are not easily accessible and symbols that are confusing and difficult to understand. We believe that corrections and GSRS projects that consider Nielsen’s heuristics (Nielsen and Molich, 1990) could meet many of these improvement requests, aiming at the development of easy-to-navigate interfaces that provide better interaction and experience for target users.

Our results complement those obtained in other studies, such as Wang and Tahir (2020), as they bring the teacher’s perspective on using the tool. This angle has been little addressed and investigated, despite the importance of the teacher’s role in accepting and using technologies in classrooms. Therefore, this work complements existing studies, highlighting harmful elements and opportunities for improvement regarding the usability and UX of a GSRS, such as Kahoot!, for the teacher profile.

5 CONCLUSION

Considering that the adoption of technological tools

in pedagogical practices is the teacher’s responsibil-

ity, these tools need to offer good usability and UX for

them. Even though teachers and students use different

functionalities on the platform, we expected that the

experiences would be satisfactory for all users.

Thus, this paper presented the usability and UX evaluation of Kahoot! and analyzed whether these factors impacted teachers’ adoption of the tool. As a result, we identified that the platform promoted a better user experience for students and presented more usability and UX problems for teachers. To better understand the teachers’ results, we conducted interviews to collect their perceptions of using the platform and whether these would affect its adoption in their classrooms or its use for conducting formative assessments. The results reinforced that teachers were already motivated to adopt the platform, primarily because of its success among students. However, after using it with the teacher profile, their perception became negative, leading them to make future use of the tool, in online classes or for formative assessments, conditional on improvements in this regard.

Such information is essential for software devel-

opers, as it helps them identify and avoid problems

similar to those reported by teachers regarding Ka-

hoot!’s usability and UX.

As future work, we suggest carrying out more ro-

bust studies with more GSRSs that raise problems dif-

ferent from those mentioned in this research and in-

vestigate how to make the UX and usability of GSRSs

positive for teachers and students.

ACKNOWLEDGMENTS

This research, carried out within the scope of the Samsung-UFAM Project for Education and Research (SUPER), according to Article 48 of Decree no 6.008/2006 (SUFRAMA), was funded by Samsung Electronics of Amazonia Ltda., under the terms of Federal Law no 8.387/1991, through agreement 001/2020, signed with Federal University of Amazonas and FAEPI, Brazil. This research was also supported by the Brazilian funding agency FAPEAM through process number 062.00150/2020, the Coordination for the Improvement of Higher Education Personnel-Brazil (CAPES) financial code 001, the São Paulo Research Foundation (FAPESP) under Grant 2020/05191-2, and CNPq process 314174/2020-6. We also thank all participants of the study presented in this paper.

REFERENCES

Beauregard, R., Younkin, A., Corriveau, P., Doherty, R.,

and Salskov, E. (2007). Assessing the quality of user

experience. Intel Technology Journal, 11(1).

Capone, R. and Lepore, M. (2021). From distance learning

to integrated digital learning: A fuzzy cognitive analy-

sis focused on engagement, motivation, and participa-

tion during covid-19 pandemic. Technology, Knowl-

edge and Learning, pages 1–31.

Cárdenas-Moncada, C., Véliz-Campos, M., and Véliz, L.

(2020). Game-based student response systems: The

impact of kahoot in a chilean vocational higher ed-

ucation efl classroom. Computer-Assisted Language

Learning Electronic Journal (CALL-EJ), 21(1):64–

78.

Desmet, P. (2018). Measuring emotion: Development and

application of an instrument to measure emotional re-

sponses to products. In Blythe, M. and Monk, A.,

editors, Funology 2, pages 391–404. Springer, Cham.

Dhawan, S. (2020). Online learning: A panacea in the time

of covid-19 crisis. Journal of Educational Technology

Systems, 49(1):5–22.

Dix, A., Finlay, J., Abowd, G. D., and Beale, R. (2004).

Evaluation Techniques, pages 318–363. Prentice Hall,

Harlow, third edition.

Du, Y. (2019). PrEmo toolkit: Measuring emotions for

design research. Master’s thesis, Delft University of

Technology, Delft, the Netherlands.

Følstad, A. and Hornbæk, K. (2010). Work-domain knowl-

edge in usability evaluation: Experiences with coop-

erative usability testing. Journal of systems and soft-

ware, 83(11):2019–2030.

Göksün, D. O. and Gürsoy, G. (2019). Comparing success

and engagement in gamified learning experiences via

kahoot and quizizz. Computers & Education, 135:15–

29.

Hassenzahl, M. (2018). The thing and I: Understanding

the relationship between user and product. In

Human–Computer Interaction Series, pages 301–313.

Springer International Publishing.

Hassenzahl, M., Burmester, M., and Koller, F. (2003). At-

trakdiff: A questionnaire to measure perceived hedo-

nic and pragmatic quality. In Mensch & Computer,

volume 57, pages 187–196.

Heitink, M., Voogt, J., Verplanken, L., van Braak, J., and

Fisser, P. (2016). Teachers’ professional reasoning

about their pedagogical use of technology. Comput-

ers & education, 101:70–83.

ISO (2019). Iso 9241-210: 2019 ergonomics of human-

system interaction—part 210: Human-centred design

for interactive systems. https://www.iso.org/standard/

77520.html.

Kahoot! (2021). About Kahoot! — Company History &

Key Facts. https://kahoot.com/company/.

Laurans, G. and Desmet, P. M. A. (2017). Developing 14

animated characters for non-verbal self-report of cat-

egorical emotions. Journal of Design Research, 15(3-

4):214–233.

Lewis, J. R. (2006). Usability testing. In Handbook of

Human Factors and Ergonomics, volume 12, pages

1275–1316. John Wiley, New York.

Licorish, S. A., Owen, H. E., Daniel, B., and George, J. L.

(2018). Students’ perception of kahoot!’s influence

on teaching and learning. Research and Practice in

Technology Enhanced Learning, 13(1).

Longhurst, R. (2003). Semi-structured interviews and focus

groups. Key methods in geography, 3(2):143–156.

Marchisio, M., Fissore, C., and Barana, A. (2020). From

standardized assessment to automatic formative as-

sessment for adaptive teaching. In 12th International

Conference on Computer Supported Education, vol-

ume 1, pages 285–296. SCITEPRESS.

Misirli, O. and Ergulec, F. (2021). Emergency remote teach-

ing during the covid-19 pandemic: Parents experi-

ences and perspectives. Education and Information

Technologies, pages 1–20.

Nicholson, S. (2015). A RECIPE for meaningful gamifica-

tion. In Gamification in education and business, pages

1–20. Springer.

Nielsen, J. and Loranger, H. (2006). Prioritizing Web Us-

ability. New Riders Publishing, USA.

Nielsen, J. and Molich, R. (1990). Heuristic evaluation of

user interfaces. In Proceedings of the SIGCHI confer-

ence on Human factors in computing systems, pages

249–256.

Owen, H. E. and Licorish, S. A. (2020). Game-based stu-

dent response system: The effectiveness of kahoot! on

junior and senior information science students’ learn-

ing. Journal of Information Technology Education:

Research, 19:511–553.

Pho, A. and Dinscore, A. (2015). Game-based learning.

Tips and trends.

Rivero, L. and Conte, T. (2017). A systematic mapping

study on research contributions on ux evaluation tech-

nologies. In Proceedings of the XVI Brazilian Sympo-

sium on Human Factors in Computing Systems, pages

1–10.

Rodrigues, M., Nery, B., Castro, M., Klisman, V., Duarte,

J. C., Gadelha, B., and Conte, T. (2022). Let’s play!

or don’t? the impact of ux and usability on the adop-

tion of a game-based student response system: Sup-

plementary materials. https://bit.ly/3vOPSRi.

Van der Heijden, H. (2004). User acceptance of hedonic

information systems. MIS quarterly, pages 695–704.

Vermeeren, A. P., Law, E. L.-C., Roto, V., Obrist,

M., Hoonhout, J., and Väänänen-Vainio-Mattila, K.

(2010). User experience evaluation methods: current

state and development needs. In Proceedings of the

6th Nordic conference on human-computer interac-

tion: Extending boundaries, pages 521–530.

Wang, A. I. (2015). The wear out effect of a game-based

student response system. Computers & Education,

82:217–227.

Wang, A. I. and Lieberoth, A. (2016). The effect of points

and audio on concentration, engagement, enjoyment,

learning, motivation, and classroom dynamics using

Kahoot! In 10th European Conference on Games

Based Learning (ECGBL 2016), pages 738–746. Aca-

demic Conferences International Limited.

Wang, A. I. and Tahir, R. (2020). The effect of using Ka-

hoot! for learning – a literature review. Computers &

Education, 149.

Wang, W., Ran, S., Huang, L., and Swigart, V. (2019). Stu-

dent perceptions of classic and game-based online stu-

dent response systems. Nurse educator, 44(4):E6–E9.

WHO (2020). Coronavirus disease — (COVID-

19). https://www.who.int/news-room/q-a-detail/

q-a-coronaviruses.

CSEDU 2022 - 14th International Conference on Computer Supported Education
