Applying and Extending FEMMP to Select an Adequate MBSE Methodology

Christophe Ponsard and Valery Ramon

CETIC Research Centre, Charleroi, Belgium

Keywords:

System Engineering, Modelling, MBSE, Evaluation Framework, Methodology, Tooling.

Abstract:

Model-Based Systems Engineering (MBSE) relies on the central concept of a model expressed in a well-defined language like SysML. However, efficiently and effectively driving the system design process through its lifecycle requires an adequate methodology. The Framework for the Evaluation of MBSE Methodologies for Practitioners (FEMMP) was designed to ease the selection among several MBSE methodologies. This paper reports on the use of FEMMP to support such a selection process with a focus on recent MBSE methodologies: Arcadia, ASAP and Grid. In addition to providing new and updated evaluations, it also identifies some overlooked criteria and suggests a few improvements. A consolidated comparison with older methodologies is also proposed and discussed.

1 INTRODUCTION

Model-based systems engineering (MBSE) is defined as “the formalized application of modeling to support system requirements, design, analysis, verification and validation activities beginning in the conceptual design phase and continuing throughout development and later life cycle phases” (INCOSE, 2007). For the industry, adopting MBSE is an important paradigm shift because the system model, rather than documents, becomes the central medium of exchange between engineers (Madni and Purohit, 2019). Moving to MBSE does not only mean adopting a system modelling language and a tool supporting it. It also requires a methodology to build and refine the model in various scenarios such as designing a new system or modernizing an existing system.

In particular, SysML is just a language, neither an architecture framework nor a methodology. It needs to be complemented with guidelines about how to start the model, how to structure it using different views, which artefacts to produce and in which order. Enterprise Architecture frameworks provide guidance to drive transformation at enterprise/system level, but they tend to focus on the global perspective with many views intertwining different aspects. They lack a simplified perspective addressing only subsets of each view (Morkevicius et al., 2016).

The number of MBSE methodologies has grown considerably over the past decade, with more than 20 methodologies identified across various initiatives (OMG, 2018)(Weilkiens and Mao, 2022). This raises the question of selecting an adequate methodology to suit a specific industrial context. In response to this need, the Framework for the Evaluation of MBSE Methodologies for Practitioners (FEMMP) provides a set of criteria evaluated using a standard case study, which enables the objective comparison of methodologies (Weilkiens et al., 2016)(Di Maio et al., 2021). FEMMP is increasingly used to benchmark specific methodologies such as RePoSyD (Beyerlein, 2020) and Vitech STRATA (Aboushama, 2020), or in a comparative way like Arcadia vs OOSEM (Alai, 2019).

This paper is motivated by a concrete selection process among recent MBSE methodologies, namely Arcadia (Roques, 2016), ASAP (Ferrogalini and Bastard, 2012), and Grid (Morkevicius et al., 2017), in connection with a local industrial ecosystem. In order to drive the selection process, an internal evaluation grid was defined before discovering the availability of FEMMP. Our work was then aligned with FEMMP, which allowed us to use comparison information published by others over the past years. It also highlighted some criteria missing from our initial assessment. Conversely, we identified some areas where FEMMP can be improved and discuss them.

This paper is structured as follows. Section 2 presents the assessment methodology based on FEMMP with some extensions. Section 3 introduces the three methods of interest. Based on this, Section 4 provides a consolidated evaluation summary of those methods, combined with evaluations published by others. Those results are then discussed in Section 5. Finally, Section 6 concludes and identifies some future work.

Ponsard, C. and Ramon, V.

Applying and Extending FEMMP to Select an Adequate MBSE Methodology.

DOI: 10.5220/0011312200003266

In Proceedings of the 17th International Conference on Software Technologies (ICSOFT 2022), pages 508-515

ISBN: 978-989-758-588-3; ISSN: 2184-2833

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 ASSESSMENT METHODOLOGY

This section details our assessment methodology. First, we systematically surveyed the available methodologies. This enabled us to build a short list of the methods of interest, which are then investigated using the FEMMP-based assessment. The precise grid used is presented in this section, including some enhancements resulting from an independent questionnaire design process.

2.1 Global Overview and Shortlisting

The starting point was to identify MBSE methodologies from cataloguing websites such as (OMG, 2018) and (Weilkiens and Mao, 2022): 13 candidates listed in Table 1 were gathered. In order to quickly identify a short list and to focus the detailed evaluation on a few interesting candidates, the following criteria were considered and related indicators for their evaluation were collected as part of Table 1.

• Is the method still actively used? (indicators: creation date, initiator and activity)

• Is the method SysML-based?

• Is the method well supported by tools? (indicator: list of tools)

• Is the method independent of a specific tool (vendor lock)?

The expressed need in our case was to focus on methodologies actively supported by large companies, preferably based on SysML and tool neutral. The process resulted in the preselection of Arcadia (although not purely SysML), ASAP and Grid.
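The shortlisting criteria above can be sketched as a simple filter over a few rows of Table 1. This is only an illustration: the `Candidate` class, the scoring function and the decision to treat activity as a hard requirement and the two other indicators as preferences are assumptions, not the procedure actually followed. In particular, the actual preselection also weighed the backing by large companies, which is why Arcadia was retained despite not matching the SysML and tool-neutrality preferences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    active: str        # "Yes", "No" or "?" as reported in Table 1
    sysml: str         # "Yes", "No", "No (close)" or "Possibly"
    tool_neutral: str  # "Yes" or "No"


# A subset of the rows of Table 1 (values transcribed from the table).
CANDIDATES = [
    Candidate("Arcadia", "Yes", "No (close)", "No"),
    Candidate("ASAP", "Yes", "Yes", "Yes"),
    Candidate("GRID", "Yes", "Yes", "Yes"),
    Candidate("Harmony", "No", "Yes", "Yes"),
    Candidate("OPM", "?", "No", "No"),
    Candidate("STRATA", "Yes", "No", "No"),
]


def preference_score(candidate: Candidate) -> int:
    """Count how many of the two preferences (SysML, tool neutral) are met."""
    return int(candidate.sysml == "Yes") + int(candidate.tool_neutral == "Yes")


def prefilter(candidates):
    """Keep active methodologies only, ordered by decreasing preference score."""
    active = [c for c in candidates if c.active == "Yes"]
    return sorted(active, key=preference_score, reverse=True)


ranked = prefilter(CANDIDATES)
for candidate in ranked:
    print(candidate.name, preference_score(candidate))
```

With these rows, ASAP and GRID come first with both preferences met, followed by Arcadia and STRATA. Such a mechanical pass only narrows the field; the final choice remains a contextual judgement.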

2.2 Detailed Assessment with Extended FEMMP

The Framework for the Evaluation of MBSE Methodologies for Practitioners (FEMMP) aims at gathering all the available MBSE methodologies in a common repository and at enabling an objective comparison to help practitioners in their selection process (Weilkiens et al., 2016).

The assessment methodology targets practitioners and can be performed by a practitioner through the following steps and results:

1. Methodology overview producing a short illustrated summary with references.

2. Highlights detailing unique/interesting features.

3. Case Study: short feedback on an application attempt on a standard case (steam engine).

4. Evaluation: evaluation grid driven by questions and using a set of multidimensional criteria, with each dimension reflecting crucial parameters for the evaluation.

5. Discussion summary of the process and results.

This process is quite natural and was actually followed prior to discovering the availability of FEMMP. We also produced methodology descriptions and highlights, investigated a trial application and developed our own evaluation grid. However, in order to enable comparison and check for possible gaps in our assessment, we decided to align with the FEMMP grid, which has the following structure:

• six aspects: General (G), Information (I), Language (L), Model (M), Process (P), Tool (T)

• five categories: Adopting (A), Basics (B), Practicality (P), Support (S), User Experience (U)

• three evaluation metrics: Yes/No, list selection and qualitative scale. The latter relies on the following grades: (A) Fully Compliant: the item is exhaustively covered and addressed, (B) Acceptable Performance: minor constraints or limitations apply, but are well documented, (C) Limited Applicability: major constraints or limitations apply with notable impacts, (G) Generalisation: compliance claimed, but not supported by evidence, (X) Not Addressed.
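The grid structure above lends itself to a small data model. The sketch below, in which all names (`ASPECTS`, `CATEGORIES`, `SCALE`, `parse_criterion_id`) are illustrative assumptions rather than part of FEMMP, decodes an identifier such as G-P-02 into its aspect, category and sequence number. Category codes that are not in the list above are kept but flagged as unknown instead of being rejected.

```python
from typing import NamedTuple

# Codes taken from the grid structure described in the text.
ASPECTS = {
    "G": "General", "I": "Information", "L": "Language",
    "M": "Model", "P": "Process", "T": "Tool",
}
CATEGORIES = {
    "A": "Adopting", "B": "Basics", "P": "Practicality",
    "S": "Support", "U": "User Experience",
}
SCALE = {
    "A": "Fully Compliant",
    "B": "Acceptable Performance",
    "C": "Limited Applicability",
    "G": "Generalisation",
    "X": "Not Addressed",
}


class CriterionId(NamedTuple):
    aspect: str
    category: str
    sequence: int


def parse_criterion_id(identifier: str) -> CriterionId:
    """Split an identifier of the form <aspect>-<category>-<sequence>."""
    aspect_code, category_code, sequence = identifier.split("-")
    if aspect_code not in ASPECTS:
        raise ValueError(f"unknown aspect code: {aspect_code}")
    # Unknown category codes are tolerated and reported as such.
    category = CATEGORIES.get(category_code, f"Unknown ({category_code})")
    return CriterionId(ASPECTS[aspect_code], category, int(sequence))


print(parse_criterion_id("G-P-02"))
print(SCALE["B"])
```

Keeping the codes in plain dictionaries makes it easy to add further categories without touching the parsing logic.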

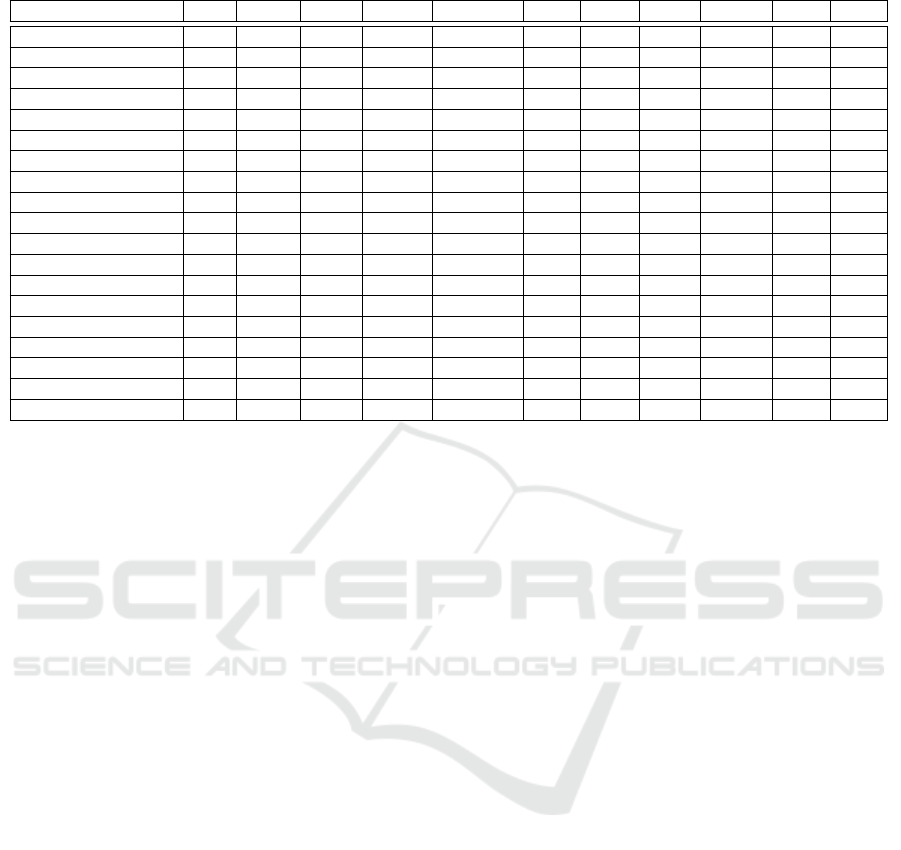

Table 2 shows the global list of questions of the latest version of FEMMP, extended with our own questions based on a comparative analysis with our framework. The column ID-F represents the internal FEMMP identifiers (combining the code of the aspect, the category and a sequence number) while column ID-I represents our own categories from A to E: (A) General, (B) Model, (C) Maturity, (D) Variants, (E) Process. The analysis of Table 2 reveals some interesting points:

• the tool dimension is quite present in FEMMP and mostly absent in ours beyond the existence of tool support. We decided on purpose to use a separate and more detailed grid for the tool assessment. This avoids the confusion between method and tool assessment which can arise with FEMMP. Note also that tool support is considered in the previous shortlisting process.

• across other aspects, our evaluation is missing a few questions like tailoring, complexity, capture of process information, redundancy, standards and frameworks. This confirms FEMMP was designed and validated more carefully than our ad-hoc approach.

• for language related questions, we have a similar coverage but with a finer granularity in ours, with separate questions about diagrams and semantics when the language is not SysML.

• FEMMP is missing some method and process questions related to the capture of non-functional requirements, the distinction between problem and solution domain, traceability and support for object-oriented development.

• FEMMP seems to overlook some general questions about maturity and industrial adoption (C2-C3). The reason behind this is that FEMMP seems to assume those criteria to be fulfilled as a prerequisite. The benefit in our case lies more in the additional evidence which can be used to refine some comparisons.

• similar coverage is achieved for documentation, training and support activities.

In the detailed evaluation presented in this paper,

we will use FEMMP with our additional questions.

This enables the comparison with published FEMMP

evaluations while covering our additional needs. It

could also be proposed as part of a future evolution

of the method as discussed later. To integrate them

in a distinctive way, we used an additional extension

category (X), e.g. C2 about maturity will be identified

as G-X-01.
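As an illustration of this numbering scheme, the sketch below assigns extension identifiers by counting the additional questions per aspect. The function name and the input format are assumptions made for the example; the aspect chosen for each question follows the consolidated comparison in Table 3, and only the extension questions appearing in that table are listed.

```python
from collections import defaultdict


def extension_ids(questions):
    """Map internal IDs to FEMMP-style IDs in the extension category X.

    `questions` is a list of (aspect_code, internal_id) pairs given in
    grid order; sequence numbers are assigned per aspect.
    """
    counters = defaultdict(int)
    mapping = {}
    for aspect_code, internal_id in questions:
        counters[aspect_code] += 1
        mapping[internal_id] = f"{aspect_code}-X-{counters[aspect_code]:02d}"
    return mapping


# Additional questions with the aspect they are attached to.
EXTRA_QUESTIONS = [
    ("G", "C2"),     # Maturity
    ("G", "C3"),     # Industry adoption
    ("M", "B7"),     # NFR
    ("M", "B2"),     # Pb vs Solution
    ("M", "B4,B5"),  # Traceability
]

ids = extension_ids(EXTRA_QUESTIONS)
for internal_id, femmp_id in ids.items():
    print(internal_id, "->", femmp_id)
```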

3 OVERVIEW OF MBSE METHODOLOGIES

Due to space constraints, this section only gives a summary of the three MBSE methodologies we shortlisted and evaluated: ARCADIA, ASAP and Grid. We use the presentation canvas proposed by FEMMP. Other methodologies are described in a similar way in other references like (Weilkiens et al., 2016), (Alai, 2019), (Javid, 2020), (Di Maio et al., 2021).

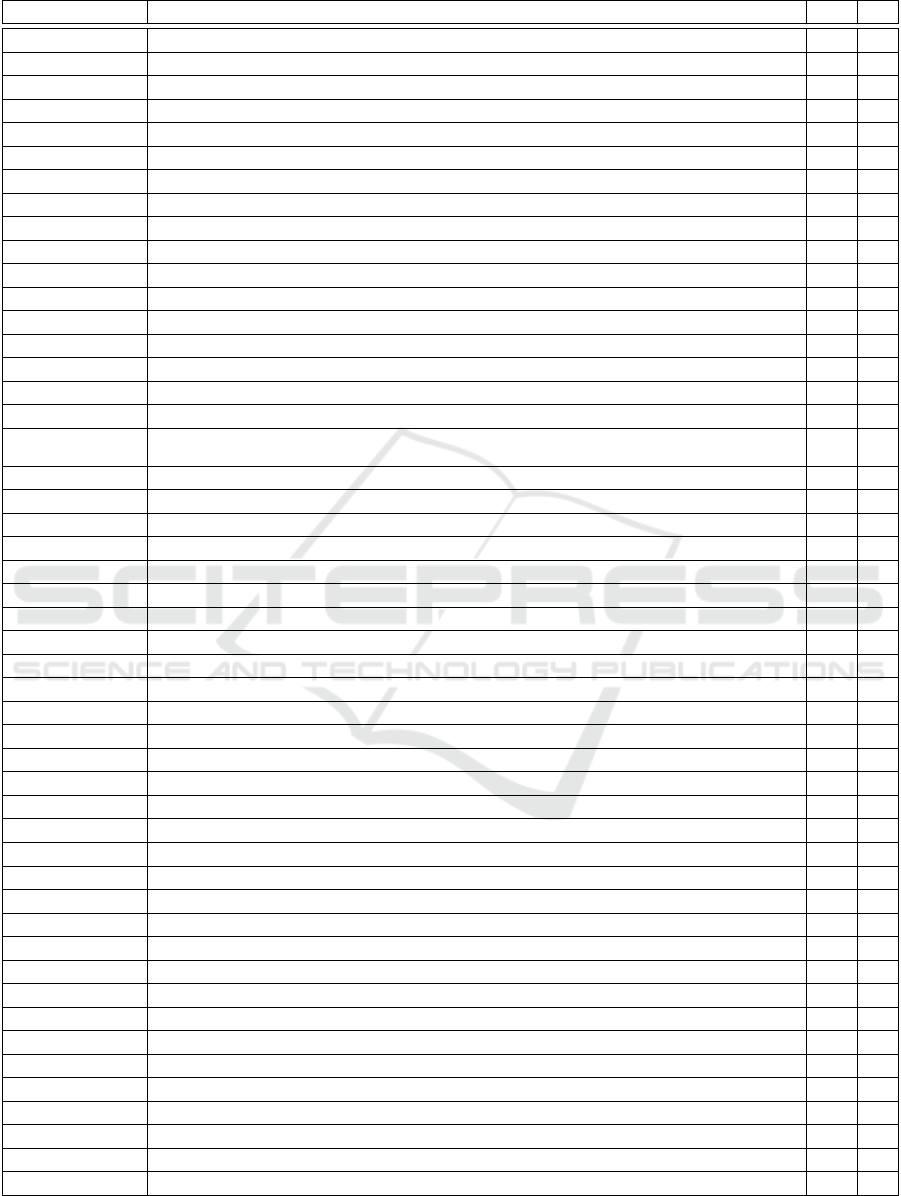

3.1 ARCADIA

ARCADIA (ARChitecture Analysis and Design Integrated Approach) is an MBSE methodology for complex technical systems, developed by Thales.

Methodology. ARCADIA provides a method but also a language, which is neither a SysML profile nor a domain-specific modelling language. It is a meta-model strongly inspired by SysML and providing very similar diagrams, but simplified for some behavioural aspects and enriched for the architecture framework (Bonnet et al., 2016). Figure 1 depicts the five engineering levels of ARCADIA, which first cover the problem domain with operational analysis (user perspective) and system needs (both functional and non-functional) and then the solution domain with the logical architecture, physical architecture and end-product breakdown structure/integration contracts (not shown on the Figure).

Highlights. ARCADIA is implemented by the

CAPELLA tool which is Open Source with a strong

community although some commercial components

are definitely required for an industrial context, e.g.

for collaborative work and toolchain integration. The

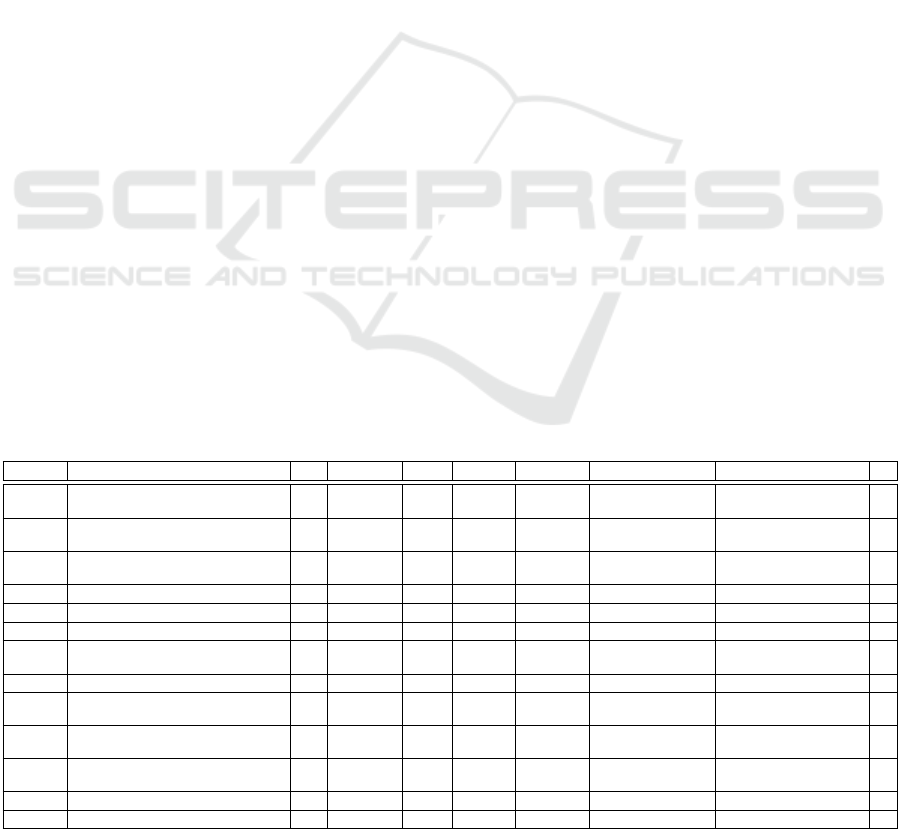

Table 1: Global overview of MBSE methodologies.

| Acronym | Meaning | Since | Initiator | Active? | SysML? | Tool neutral | Known tool(s) | Reference | Sel. |
|---|---|---|---|---|---|---|---|---|---|
| Arcadia | ARChitecture Analysis and Design Integrated Approach | 2015 | Thalès | Yes | No (close) | No | Capella | (Voirin et al., 2015) | X |
| ASAP | Advanced System Architect Program | 2012 | Alstom | Yes | Yes | Yes | Artisan (PTC) | (Ferrogalini and Bastard, 2012) | X |
| FAS | Functional Architecture for Systems Method | 2010 | Lamm, Weilkiens | ? | Yes | Yes | Artisan (PTC) | (Lamm and Weilkiens, 2010) | |
| GRID | – | 2016 | Morkevicius | Yes | Yes | Yes | MagicDraw | (Morkevicius et al., 2017) | X |
| Harmony | – | 2006 | IBM | No | Yes | Yes | IBM/Telelogic | (Hoffmann, 2006) | |
| JPL SA | State Analysis | 2006 | NASA | No | No | No | State Database | (Ingham et al., 2006) | |
| OOSEM | Object-Oriented Systems Engineering Method | 2000 | INCOSE | ? | Yes | Yes | – | (Lykins et al., 2000) | |
| OPM | Object-Process Methodology | 2002 | Dori | ? | No | No | OPCAT | (Dori et al., 2003) | |
| PPOOA | Process Pipelines in OO Architectures | 2002 | Fernandez | No | No | Yes | Visio add-on | (Fernández-Sánchez and Mason, 2002) | |
| PBSE | Pattern-Based Systems Engineering | 2010 | Schindel | ? | Possibly | Yes | Sparx EA, Rhapsody | (Schindel and Peterson, 2013) | |
| RePoSyD | Req. Eng. Project Management and System Design | 2020 | Hoppe | Yes | No | Yes | RePoSyD | (Hoppe, 2020) | |
| SYSMOD | SYStem MODelling | 2008 | Weilkiens | Yes | Yes | Yes | MagicDraw (plugin) | (Weilkiens, 2020) | |
| STRATA | – | 2004 | Vitech | Yes | No | No | Core, Genesys | (Vitech, 2016) | |


Table 2: Detailed MBSE assessment grid.

| Criterion | Description | ID-F | ID-I |
|---|---|---|---|
| Learning Curve | How steep does the learning curve feel? | G-A-01 | C4 |
| Suitability for Beginners | How easy to understand is the methodology for (MB)SE novices? | G-A-02 | C4 |
| Training Effort for SEs | How much training does it take for experienced SEs to implement the methodology correctly? | G-A-03 | C4 |
| Industry Domains | Which industry domains does the methodology support particularly well / has it been developed for? | G-B-01 | C1 |
| Creativity Support | How does the methodology foster a creative environment for the systems engineering team? | G-B-02 | E2 |
| Support of Standards | Does the methodology also support other standards or norms? If so, how well? | G-B-03 | |
| Scope | For what engineering purpose is the methodology suited (innovation, improved products, refactoring, etc.)? | G-P-01 | |
| Tailoring | How easy is it to tailor the methodology (add/delete/change processes/process steps, or toggle tool features on/off)? | G-P-02 | |
| Complexity | How often is the methodology interrupted by external processes and/or non-integrated tools, e.g. 'paper-review'? | G-P-03 | |
| Simulation | How well does the methodology provide for an integrated simulation of the various model abstractions? | G-P-04 | |
| Documentation | How well is the methodology supported (books, manuals, case studies, on-line help, community, user feedback etc.)? | G-S-01 | C4 |
| Training | How well is training supported (availability, consultants, coaches, e-training, background knowledge required)? | G-S-02 | C4 |
| Maturity | Is the method mature, maintained and supported by an active community? | | C2 |
| Industry adoption | Is the method already successfully adopted by major companies? Are there published experience reports? | | C3 |
| Process Info Capture | How well does the tool capture the information generated throughout the process? | I-B-01 | |
| MBSE-SE Exchange | How well does the tool facilitate the exchange of the information between the MBSE and the non-MBSE domains? | I-B-02 | |
| Philosophy | Are Model Elements clearly distinguished from Diagram Elements (separation of content from representation)? | L-B-01 | |
| Language | What Modelling Language is used (If NOT SysML: How well does it define the real-world semantics, are elements strictly typed, is their meaning unambiguous, do they have a defined purpose etc.)? | L-P-01 | A4, A5, B3 |
| Integration | How well can the model be integrated with specialty engineering models? (Does it support coengineering?) | L-P-02 | B6 |
| Modelling Features | What modelling features and approaches are included from the reference framework ISO 42010 (Architecture)? | M-B-01 | |
| Modelling ISO 15288 | How well does the MBSE methodology support modelling of the ISO 15288 processes? | M-B-02 | |
| Abstraction | How well does the model support keeping it abstract through multiple levels? | M-B-03 | B1 |
| Meta-Model | Does the methodology provide a (semantic) meta-model, ontology or similar? | M-B-04 | |
| Scalability | How well does the model scale to large projects, 'grow' with time without becoming cumbersome? | M-P-01 | |
| Variants | How well does the methodology support variant management? | M-P-02 | D1 |
| Indep. View Generation | How well does the methodology support the generation of views that can be read in another MBSE language? | M-P-03 | |
| Redundancy | How well does the methodology prevent duplication (of work, model elements, artefacts, communications, etc.)? | M-P-04 | |
| NFR | Does the method allow the capture of non-functional requirements? | | B7 |
| Pb vs Solution | Does the method clearly separate the problem and solution domains? | | B2 |
| Traceability | Does the method provide strong traceability support between requirements, functions, (sub)systems/components? | | B4, B5 |
| ISO Standard | What process steps of ISO 15288 are covered? | P-B-01 | |
| Framework | What views from the reference framework are used (MODAF, DODAF...)? | P-B-02 | |
| Consistency | Is the process self-contained (are in-/outputs to all steps connected)? | P-P-01 | B5 |
| OO Support | Does the method provide support for OO development? | | B8 |
| Precision | How precisely is the process implemented in terms of semantics and sequence, do workarounds impact quality? | T-B-01 | E1 |
| Information Security | How secure is the data/information exchange between parties? | T-B-02 | |
| Perspectives | To what level is the creation of experts' perspectives automated? | T-E-01 | |
| Reporting | How quickly are standard/custom reports and design documentation created? | T-E-02 | |
| Admin | How well does the tool help to minimise work that isn't creating any value? | T-E-03 | |
| Checking | Does the tool support consistency checking of the model? (e.g. automated detection of wrong content/formats) | T-E-04 | |
| Navigation | How easy is it to find a specific model element (following links, using queries, a traceability model)? | T-E-05 | |
| Connectivity | How easily can the information be exchanged with other tools (standard (open) API, import/export, wizard, etc.)? | T-P-01 | |
| Reusability | Does the tool allow the reuse of any type of Modelling Element across projects? | T-P-02 | |
| Support | How well is the tool supported (vendor response times, 24/7 helpline etc.)? | T-S-01 | C4 |
| Intuition | How intuitive is the tool to work with (e.g. compliance with UX conventions)? | T-U-01 | |
| View | How easy is it to configure the UX dynamically (define a matrix with sorting/filtering, extend with annotations)? | T-U-02 | |
| UI | How readable is the UI (screen layout, zoom, can fonts and sizes be changed, is information well presented)? | T-U-03 | |
| Multi-User Env. | How well does the tool support the distribution of the work among different parties? | T-U-04 | |
| Known tools | Can you identify tools providing good support to the methodology? | | A6 |


Figure 1: ARCADIA methodology.

tooling enforces consistency, supports the methodology, ensures traceability and has a globally good user experience.

Case Study. The steam engine case study has been modelled in Capella. It is highlighted in (Di Maio et al., 2021) and explained in more detail in (Javid, 2020). Figure 2 depicts an Operational Architecture Blank (OAB) diagram capturing the allocation of Operational Activities to Operational Entities. The tool provides good methodological guidance for Arcadia through a process wizard.

Figure 2: ARCADIA OAB Diagram for the case study.

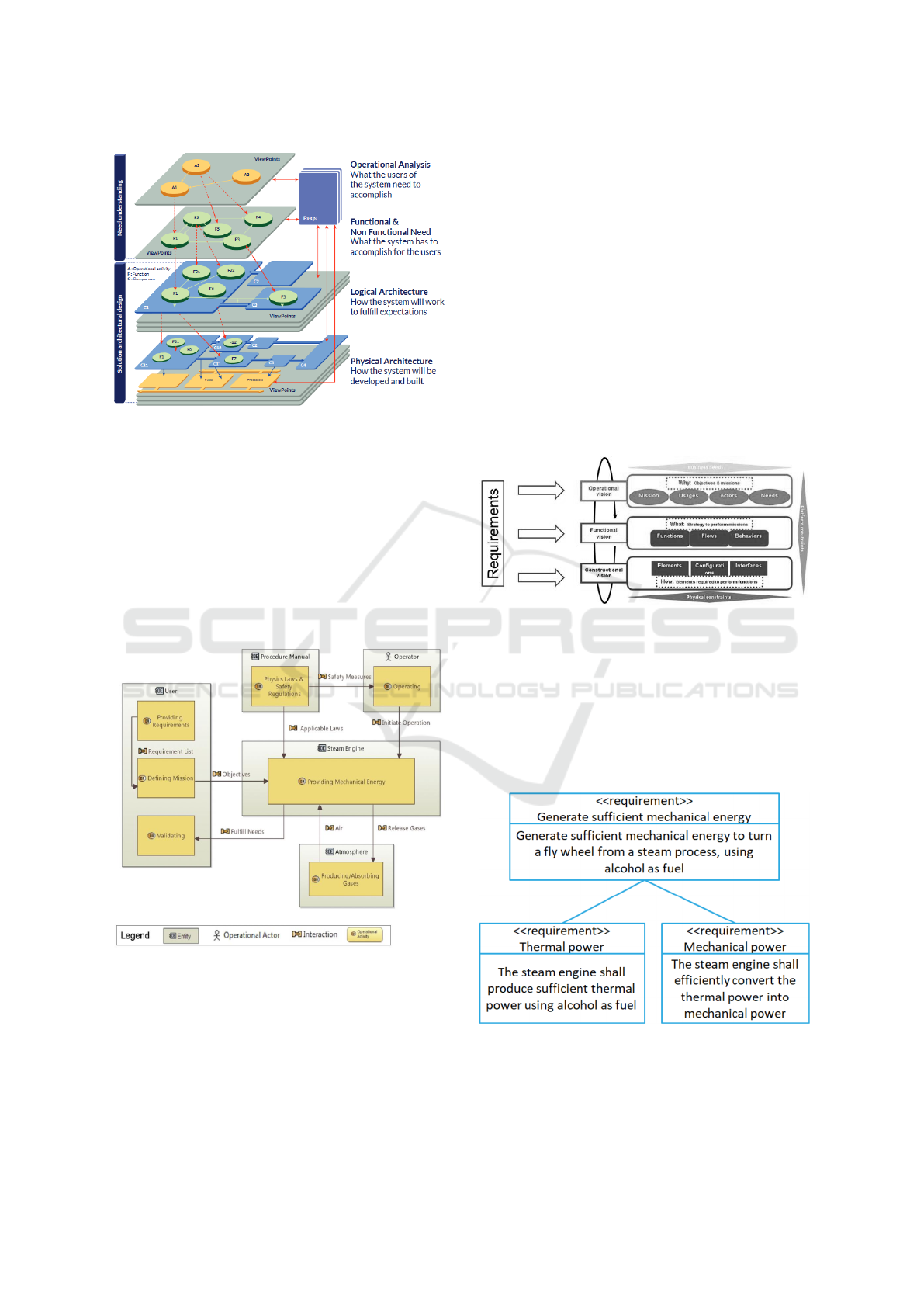

3.2 ASAP

ASAP (Advanced System Architecture Program) is an efficient top-down MBSE approach developed by Alstom for its internal use.

Methodology. ASAP is organised in 3 main layers depicted in Figure 3. First, the operational level takes the point of view of external users to identify their needs and the external interfaces (WHY). Second, the functional level focuses on WHAT the system does to fulfill its mission and on the identification of functional flows. Third, the constructional level structures the system into interconnected subsystems and defines their expected behaviour to achieve the system functionalities (HOW). The expression of each layer relies on specific SysML diagrams; only a subset of SysML is used.

Highlights. The method can be extended to deal with software engineering and also relies on UML in this case. It does not require specific tooling, only a SysML compliant tool able to cope with industrial requirements (scalability, efficiency, collaboration). A training program is available covering different profiles like managers, readers, designers and architects.

Figure 3: ASAP methodology.

Case Study. We modelled the steam engine using a SysML tool (PTC Windchill Integrity Modeller). The tool does not enforce the methodology but is quite strict about SysML syntax. Figure 4 depicts a small requirements diagram. In complement, we also studied a simple ASAP case study, a bathroom scale, which provided more insight on the use of the proposed modelling levels.

Figure 4: ASAP requirements diagram for the case study.


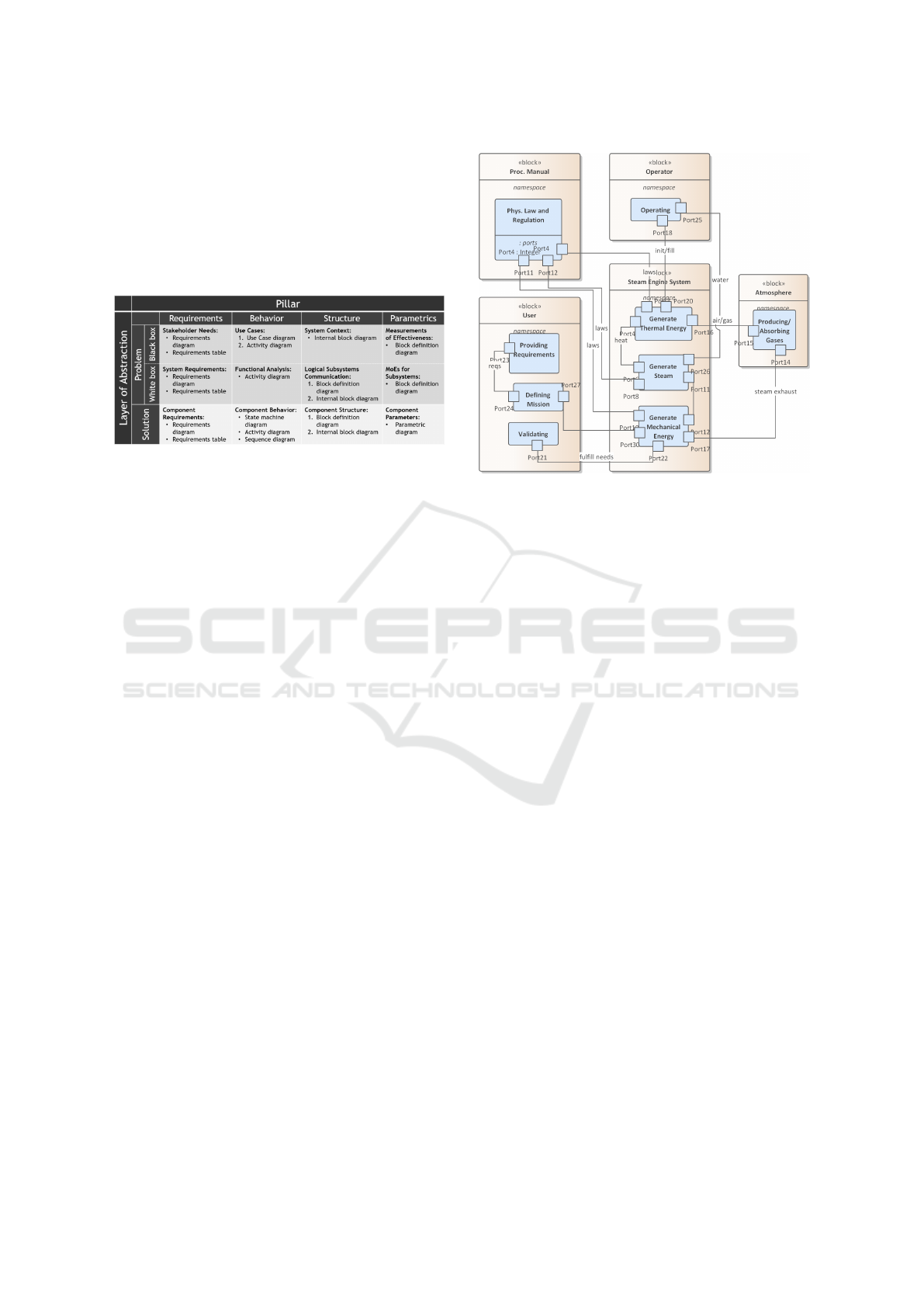

3.3 Grid

The Grid approach is influenced by Bombardier and Kongsberg Defence & Aerospace. It was built in reaction to previous industrial failures to adopt MBSE. It is designed with a strong focus on guiding the system engineers through the modeling process (Morkevicius et al., 2016).

Figure 5: Grid methodology.

The methodology is systematically structured using a bi-dimensional grid depicted in Figure 5, where columns are aspects, also known as the four pillars of SysML (requirements, structure, behaviours and parametrics), and rows are viewpoints reflecting the problem domain (using the "black-box" user perspective and "white box" system functionalities) and the solution domain (at the component level). Each cell can be described through specific SysML diagrams. The global structure is also inspired by Enterprise Architecture frameworks such as Zachman (Zachman, 1987). Like ASAP, it is vendor neutral for the tool support but with a known implementation using MagicDraw (No Magic, 2016).
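The bi-dimensional structure can be pictured as a lookup table keyed by viewpoint and pillar. The following sketch is only an illustration of that idea: the constant and function names are assumptions, and the artefact registered at the end is a hypothetical placeholder, not a diagram prescribed by Grid for that cell.

```python
from itertools import product

# Columns: the four pillars of SysML named in the text.
PILLARS = ("requirements", "structure", "behaviours", "parametrics")

# Rows: (domain, viewpoint) pairs named in the text.
VIEWPOINTS = (
    ("problem", "black box"),
    ("problem", "white box"),
    ("solution", "component"),
)


def empty_grid():
    """Create one empty cell per (viewpoint, pillar) combination."""
    return {(viewpoint, pillar): [] for viewpoint, pillar in product(VIEWPOINTS, PILLARS)}


def cells_of_domain(grid, domain):
    """Return the keys of all cells belonging to the given domain."""
    return [key for key in grid if key[0][0] == domain]


grid = empty_grid()
# Hypothetical artefact name, used only to show how a cell is filled.
grid[(("problem", "black box"), "requirements")].append("example-artefact")

print(len(grid), "cells,", len(cells_of_domain(grid, "problem")), "in the problem domain")
```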

Highlights. Grid provides rich traceability across its cells, which eases model maintenance, change management and impact analysis. For example, a state machine at solution level must enforce some requirements at problem level, or a use case can be traced to a functional analysis which is allocated to a subsystem implemented in a solution level component. Another strength of Grid is to provide a foundation for product line engineering based on the parametric pillar. This dimension is generally not well supported in MBSE methodologies.

Case Study. The steam engine case study was modelled using Enterprise Architect as tool. The collection of diagrams is close to that of ASAP given the SysML adoption, with however additional support for parametric diagrams, which enable capturing variability points (not present in the case). Figure 6 shows an Internal Block Diagram (IBD) for the logical subsystem communications. In addition, another case study, a climate control unit, was also analysed for the use of parametric diagrams (Morkevicius et al., 2016).

Figure 6: Grid IBD diagram for the case study.

4 MBSE METHODOLOGIES COMPARISON

Our primary purpose was to compare our three shortlisted methodologies detailed in the previous section. However, to enable a larger comparison, we also integrated information published by others, namely OOSEM vs Arcadia (Alai, 2019), Vitech STRATA (Aboushama, 2020) and RePoSyD (Beyerlein, 2020). Table 3 presents a consolidated comparison of those 6 methodologies across 19 criteria. The information resulting from our own evaluation is presented between brackets. Additional columns recall the criteria identifiers, aspect, category and response type.

We carried out this consolidation using the following process:

• we only focused on the scale and yes/no types.

• we ruled out questions relating to tool support for the reasons identified earlier and discussed in more detail in the next section.

• we included our extensions but did not attempt to recover answers for external reviews. This also holds for criteria left empty in such reviews.

• the contributed evaluations were initially performed in our own grid and then transposed in FEMMP using detailed evaluation information and not only the resulting score. Each evaluation was performed by a team of experts with a single expert in charge of a specific evaluation. Review meetings were organised to discuss each criterion comparatively, first before the evaluation to make sure of the understanding of the criteria, and then


Table 3: Consolidated comparison of MBSE methodologies.

Criterion ID-F ID-I Aspect Category Response Type OOSEM STRATA RePoSyD Arcadia ASAP Grid

Tailoring G-P-02 General Practicality Scale A A C B (B) (A)

Complexity G-P-03 General Practicality Scale B A B A (A) (A)

Documentation G-S-01 C4 General Support Scale B C C A/B B (C)

Training G-S-02 C4 General Support Scale A A (C)

Maturity G-X-01 C2 General Support Scale (Yes) (Yes) ? (Yes) (Yes) ?

Industry adoption G-X-02 C3 General Adopting Scale (Yes) (Yes) ? (Yes) (Yes) (Yes)

Philosophy L-B-01 Language Basics Yes / No Yes Yes Yes Yes (Yes) (Yes)

Language L-P-01 A4,A5,B3 Language Practicality List SysML SDL RML SysML like SysML SysML

Integration L-P-02 Language Practicality Scale C B A C (B) (B)

Abstraction M-B-03 B1 Model Basics Scale (B) (A) (B) (A) (A) (A)

Meta-Model M-B-04 Model Basics Yes / No (SysML) Yes Yes Yes (SysML) (SysML)

Scalability M-P-01 Model Practicality Scale A B A A (A) (A)

Variants M-P-02 D1 Model Practicality Scale B B B B (B) (A)

Independent View Generation M-P-03 Model Practicality Scale (C) (A) (A)

Redundancy M-P-04 Model Practicality Statement B A C A (B) (B)

NFR M-X-01 B7 Model Basics Scale (B) (B) (A)

Pb vs Solution M-X-02 B2 Model Basics Scale (A) (B) (A)

Traceability M-X-03 B4,B5 Model Basics Scale (A) (A) (A)

Consistency P-P-01 B5 Process Practicality Yes / No Yes Yes Yes Yes Yes Yes

after the evaluation, to align some evaluation details, share the results and produce a summary.
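The consolidation steps above can be sketched as a merge of published ratings with our own ones, where own ratings are rendered between brackets as in Table 3. This is a minimal sketch under stated assumptions: the `Rating` class, the function names and the rule that a published rating takes precedence when both exist are illustrative choices, not a description of the exact procedure followed. The sample values are those of the Tailoring row.

```python
from dataclasses import dataclass

METHODS = ["OOSEM", "STRATA", "RePoSyD", "Arcadia", "ASAP", "Grid"]


@dataclass(frozen=True)
class Rating:
    value: str
    own: bool = False  # True when the rating comes from our own evaluation

    def __str__(self) -> str:
        return f"({self.value})" if self.own else self.value


def consolidate(external, own):
    """Build one table row: published ratings first, own ratings fill gaps."""
    row = {}
    for method in METHODS:
        if method in external:
            row[method] = Rating(external[method])
        elif method in own:
            row[method] = Rating(own[method], own=True)
        else:
            row[method] = None  # criterion left empty for this methodology
    return row


def render(row) -> str:
    """Render a row with empty cells for missing ratings."""
    return " | ".join("" if row[m] is None else str(row[m]) for m in METHODS)


tailoring = consolidate(
    external={"OOSEM": "A", "STRATA": "A", "RePoSyD": "C", "Arcadia": "B"},
    own={"ASAP": "B", "Grid": "A"},
)
print(render(tailoring))
```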

5 DISCUSSION

In this section, we provide some extra discussion beyond the FEMMP extension already discussed in Section 2.

Methodology Selection is of course the first logical question. Among our three selected methods, there is clearly no winner or loser. Due to its qualitative nature, FEMMP does not claim to rank the methodologies objectively either, and the suggested weights are rather meant to help in prioritisation. Moreover, we believe the balance is specific to each case, as there might be mandatory criteria and more optional ones given each specific context. For example, variant management might be very important for systems deployed in many variants compared to a system known to be unique. The transition from document-based to model-based engineering must also be managed and existing workflows must be preserved, e.g. for certification.
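The distinction between mandatory and optional criteria can be illustrated with a small sketch. Everything in it is an assumption made for the example: the numeric mapping of the qualitative grades, the weights and the mandatory threshold are hypothetical and context specific, and the resulting score is a prioritisation aid, not an objective ranking. The grades are those reported in Table 3 for the three shortlisted methodologies.

```python
# Hypothetical ordinal mapping of the qualitative scale.
SCALE_POINTS = {"A": 3, "B": 2, "C": 1, "G": 0, "X": 0}

# Grades taken from Table 3.
RATINGS = {
    "Arcadia": {"Tailoring": "B", "Variants": "B", "Integration": "C", "Scalability": "A"},
    "ASAP": {"Tailoring": "B", "Variants": "B", "Integration": "B", "Scalability": "A"},
    "Grid": {"Tailoring": "A", "Variants": "A", "Integration": "B", "Scalability": "A"},
}


def assess(ratings, weights, mandatory):
    """Return {method: (meets_mandatory, weighted_score)}.

    `weights` maps a criterion to its importance in the given context.
    `mandatory` maps a criterion to the minimum acceptable grade.
    """
    results = {}
    for method, grades in ratings.items():
        meets = all(
            SCALE_POINTS[grades[criterion]] >= SCALE_POINTS[minimum]
            for criterion, minimum in mandatory.items()
        )
        score = sum(
            weight * SCALE_POINTS[grades[criterion]]
            for criterion, weight in weights.items()
        )
        results[method] = (meets, score)
    return results


# Context where the system is deployed in many variants (hypothetical weights).
weights = {"Tailoring": 1, "Variants": 3, "Integration": 2, "Scalability": 1}
many_variants = assess(RATINGS, weights, mandatory={"Variants": "A"})
unique_system = assess(RATINGS, weights, mandatory={})

for method, (meets, score) in many_variants.items():
    print(method, meets, score)
```

With a mandatory top grade on variant management, only Grid passes; without any mandatory criterion, all three methodologies remain candidates and only the weighted scores differ.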

The Generic Informal Criteria might be turned into a more quantitative and directed scale, enabling more precision and homogeneity in the ranking. However, this would require substantial work to specify, to understand and to rank. A danger would be to use some specific solution as the reference point. An example is the variability in the number and nature of the structuring in the various methods: although they share similarities, they are all different.

About the Tool Support, as pointed out earlier, tool related criteria must be carefully formulated within the scope of the methodology selection so that method and tool assessments are not confused. A key criterion is probably to be vendor neutral, which is favoured by the generalisation of SysML as language. The evaluation of tool criteria might become quite fuzzy because the response could vary with the tool considered. Consequently, it seems better to limit the tool related questions to a few key features (like collaboration, generation, integration) and to rely on a separate assessment for the tool support. This is the process that was followed in our case, without detailing it here because the tool evaluation is in itself a huge work. A separate assessment can be more precise but requires a larger set of finer-grained criteria.

About the Case Study: the proposed case study about the steam engine was used as a starting point. It provides an interesting common background for comparing the methodologies and understanding the various diagram types used to support the global design process. However, in complement, we also analysed other case studies, including more complex ones when available. We believe this complementary viewpoint is useful in order to understand the way diagrams are used by others, especially for more specialised notations, e.g. parametric diagrams for dealing with variability. It also helps to make sure the evaluators are not misinterpreting specific parts of the methodology.


6 CONCLUSION & NEXT STEPS

This paper performed a comparative evaluation of MBSE methodologies based on the existing FEMMP approach with two main extensions. First, we added a shortlisting step based on a few guiding criteria. Second, we introduced some extra criteria based on an independently designed evaluation methodology. Then, we applied the extended evaluation grid to three MBSE methodologies, two of them not yet ranked. The evaluation summary was consolidated with other published evaluations to reach a total of 6 methodologies across 19 criteria.

Our work is limited by the fact that not all criteria were ranked and by some subjectivity due to the qualitative nature of the evaluation. However, we believe this work is of interest for the consolidated results but also for the proposed extensions and discussion. We hope our feedback can add to other reports and help drive the evolution of FEMMP, providing better guidance in selecting an adequate MBSE methodology and fostering the adoption of MBSE.

REFERENCES

Aboushama, M. (2020). ViTech Model Based Systems Engineering: Methodology Assessment Using the FEMMP Framework. BSC thesis Technische Hochschule Ingolstadt.

Alai, S. P. (2019). Evaluating arcadia/capella vs. oosem/sysml for system architecture development. PhD thesis, Purdue University Graduate School.

Beyerlein, S. T. M. (2020). RePoSyD Model Based Systems Engineering: Methodology Assessment Using the FEMMP Framework and a Steam Engine Case Study. BSC thesis Technische Hochschule Ingolstadt.

Bonnet, S., Voirin, J.-L., Exertier, D., and Normand, V. (2016). Not (strictly) relying on sysml for mbse: Language, tooling and development perspectives: The arcadia/capella rationale. In Annual IEEE Systems Conference (SysCon), pages 1–6. IEEE.

Di Maio, M. et al. (2021). Evaluating mbse methodologies using the femmp framework. In IEEE International Symposium on Systems Engineering (ISSE).

Dori, D. et al. (2003). Developing complex systems with object-process methodology using opcat. In Conceptual Modeling - ER 2003.

Fernández-Sánchez, J. L. and Mason, B. J. (2002). A process for architecting real-time systems. In Proc. of the 15th Int. Conf. on Software & Systems Engineering and Their Applications.

Ferrogalini, M. and Bastard, J. L. (2012). Return of experience on the implementation of the system engineering approach at ALSTOM. Complex System and Design Management Int. Conf., Paris (France).

Hoffmann, H.-P. (2006). Sysml-based systems engineering using a model-driven development approach. INCOSE International Symposium.

Hoppe, M. (2020). Why using a Reference Model? https://wiki.reposyd.de.

INCOSE (2007). SYSTEMS ENGINEERING VISION 2020. http://www.ccose.org/media/upload/SEVision2020_20071003_v2_03.pdf.

Ingham, M. D. et al. (2006). Generating requirements for complex embedded systems using state analysis. Acta Astronautica, 58(12):648–661.

Javid, I. (2020). Evaluating and comparing mbse methodologies for practitioners: Evaluating arcadia/capella using the femmp framework. PhD Thesis, University of Applied Sciences Ingolstadt.

Lamm, J. G. and Weilkiens, T. (2010). Funktionale architekturen in sysml.

Lykins, H., Friedenthal, S., and Meilich, A. (2000). Adapting uml for an object oriented systems engineering method (oosem). In INCOSE Int. Symposium.

Madni, A. M. and Purohit, S. (2019). Economic analysis of model-based systems engineering. Systems, 7(1).

Morkevicius, A., Bisikirskiene, L., and Jankevicius, N. (2016). We Choose MBSE: What’s Next? Complex Systems Design & Management.

Morkevicius, A. et al. (2017). MBSE Grid: A Simplified SysML-Based Approach for Modeling Complex Systems. INCOSE International Symposium, 27(1).

No Magic (2016). Model Based Systems Engineering with MagicGrid. https://www.omgwiki.org/MBSE/lib/exe/fetch.php?media=mbse:magicgrid.pdf.

OMG (2018). MBSE Methodologies and Metrics.

Roques, P. (2016). Mbse with the arcadia method and the capella tool. In 8th European Congress on Embedded Real Time Software and Systems (ERTS).

Schindel, W. and Peterson, T. (2013). Introduction to pattern-based systems engineering (PBSE): Leveraging MBSE techniques. In INCOSE Int. Symposium.

Vitech (2016). STRATA: A Layered Approach to Project Success. https://www.vitechcorp.com/strata-methodology/.

Voirin, J.-L. et al. (2015). From initial investigations up to large-scale rollout of an MBSE method and its supporting workbench: the Thales experience. In INCOSE international symposium.

Weilkiens, T. (2020). SYSMOD - The Systems Modeling Toolbox, 3rd edition.

Weilkiens, T. and Mao, M. D. (2022). MBSE Methodologies. https://mbse-methodologies.org.

Weilkiens, T., Scheithauer, A., Di Maio, M., and Klusmann, N. (2016). Evaluating and comparing mbse methodologies for practitioners. In IEEE International Symposium on Systems Engineering (ISSE).

Zachman, J. A. (1987). A framework for information systems architecture. IBM Syst. J., 26(3).
