Motion Planning for Mobile Robots using the Human Tracking Velocity

Obstacles Method

Zolt

´

an Gyenes

1 a

, Ilshat Mamaev

2 b

, Dongxu Yang

2

, Emese Gincsain

´

e Sz

´

adeczky-Kardoss

1 c

and Bj

¨

orn Hein

2 d

1

Department of Control Engineering and Information Technology, Faculty of Electrical Engineering and Informatics,

Budapest University of Technology and Economics, M

˝

uegyetem rkp. 3., H-1111 Budapest, Hungary

2

Intelligent Process Automation and Robotics Lab, Karlsruhe Institute of Technology (KIT), Karlsruhe, Germany

Keywords:

Mobile Robot, Motion Planning, Autonomous System, Human Tracking.

Abstract:

Human-robot interaction is playing an increasingly important role in everyday life and we can expect an even

bigger explosion in the use of robots in the future. One such use is where a mobile robot needs to follow the

human. The main objective of this paper is to introduce a novel motion planning algorithm for mobile robots,

which can be used to enable the robot to follow a human while maintaining a given distance. The motion

planning algorithm has to take into account obstacles in the workspace of the robot at each sampling time and

to generate a collision-free motion for the agent.

1 INTRODUCTION

Mobile robots are becoming more prominent in our

daily lives. There are already commercially avail-

able street delivery robots (Valdez et al., 2021; Jung,

2020), wide range of applications for warehouse

robots (Ch’ng et al., 2020), and service robots in

healthcare industry (Reiser et al., 2009). Fueled by

advances in artificial intelligence, the developments

in autonomous mobile robotics with co-existence of

humans and robots, have opened new challenges in

motion planning and control. Collision avoidance and

person following are the most basic tasks in mobile

robotics, ensuring safety of the robotic application

and comfortable human robot interaction.

In this paper, we propose an approach to mobile

robot motion control for person-following with dy-

namic collision avoidance combining directive circle

and velocity obstacles methods.

The rest of the paper is structured in the following

way: Section 2 presents selected related work, con-

sidering the motion planning algorithms for mobile

robots. In Section 3, the novel motion planning al-

gorithm is introduced. After that, in Section 4, the

a

https://orcid.org/0000-0003-1803-7146

b

https://orcid.org/0000-0003-2546-7304

c

https://orcid.org/0000-0002-7789-6402

d

https://orcid.org/0000-0001-9569-5201

simulation results are presented. Later on, in Section

5, the paper will be summarized.

2 RELATED WORK

In this Section, human tracking and motion planning

algorithms for mobile robots will be presented.

2.1 Human Detection and Tracking

Object tracking is one of the classical tasks in com-

puter vision. Due to the recent advances in AI-based

object detection, tracking-by-detection has attracted

interest in this field. The Simple Online and Realtime

Tracking (SORT) was introduced by (Bewley et al.,

2016) in 2016 and extended with deep association

metric in 2017 (Wojke et al., 2017). The latter ap-

proach was tuned for pedestrian detection and outper-

formed other tracking algorithms (Xiang et al., 2015;

Yang and Jia, 2016). Another approach is based on

decomposition the person tracking process to several

parts, using threshold segmentation and morphologi-

cal operations for detection, Kalman filter algorithm

for tracking and Hungarian algorithm for the data as-

sociation algorithm (Qian et al., 2020). A tracking

framework called EagerMOT fuses all available ob-

ject observations originating from 2D and 3D detec-

484

Gyenes, Z., Mamaev, I., Yang, D., Szádeczky-Kardoss, E. and Hein, B.

Motion Planning for Mobile Robots using the Human Tracking Velocity Obstacles Method.

DOI: 10.5220/0011318200003271

In Proceedings of the 19th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2022), pages 484-491

ISBN: 978-989-758-585-2; ISSN: 2184-2809

Copyright

c

2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

tors via a two-stage association procedure. The first

stage associates object detections from different sen-

sor modalities, while in the second stage, a tracking

formulation that allows to update track states is em-

ployed (Kim et al., 2021). Another sensor fusion

approach (Ali et al., 2013) utilizes stereo camera to

extract body and face features and uses LiDAR for

extraction of legs features, combining these features

to classify the target person. However, it only works

when target faces towards the robot, which is a limi-

tation for person following task. For multiple object

tracking (MOT) a single-shot network for simultane-

ously detecting objects and extracting tracking fea-

tures is applied to real-time calculation (Guo et al.,

2020). Some models also learn to manage to track life

circles in a data-driven approach (Chiu et al., 2021).

In an approach, a marker-based solution was in-

troduced for the tracking task (Lenain et al., 2018a).

At this method not only the magnetic but also the op-

tical and acoustic and inertial tracking are described

and also evaluated.

2.2 Motion Planning Methods

The Directive circle (DC) method designs a collision-

free, near-optimal path for a mobile robot to chase

another mobile robot by avoiding moving obstacles.

Finding the shortest path to the another mobile robot

is the first step at the algorithm. Then, the collision-

free directions of the robot are computed using the

velocity vectors and the Directive Circle (Masehian

and Katebi, 2007). The collision-free path closest to

the optimal path is then found. These subtasks are

repeated one after the other until the two objects meet.

A novel potential field based motion planning

method was introduced where a switching strategy

can be used between the attractive potential of the tar-

get and a novel helicoidal potential field (Fedele et al.,

2017). The helicoidal potential field helps to bypass

an obstacle that has a motion around the agent.

The motion planning algorithms can be used also

for Off-road mobile robots (Lenain et al., 2018b).

A novel motion planning method was introduced for

four wheel steering mobile robot that allows the mo-

tion capacity with respect to a normal car-like mobile

robot, while the friction can be reduced.

The main concept of the Velocity Obstacles algo-

rithm (VO) (Fiorini and Shiller, 1998) is to select a

velocity vector that would result in a collision-free

motion for the agent if the position and velocity in-

formation of the obstacles is known at every sampling

time.

B

i

defines the obstacles (i = 1...m where m de-

fines the actual number of obstacles that occur in the

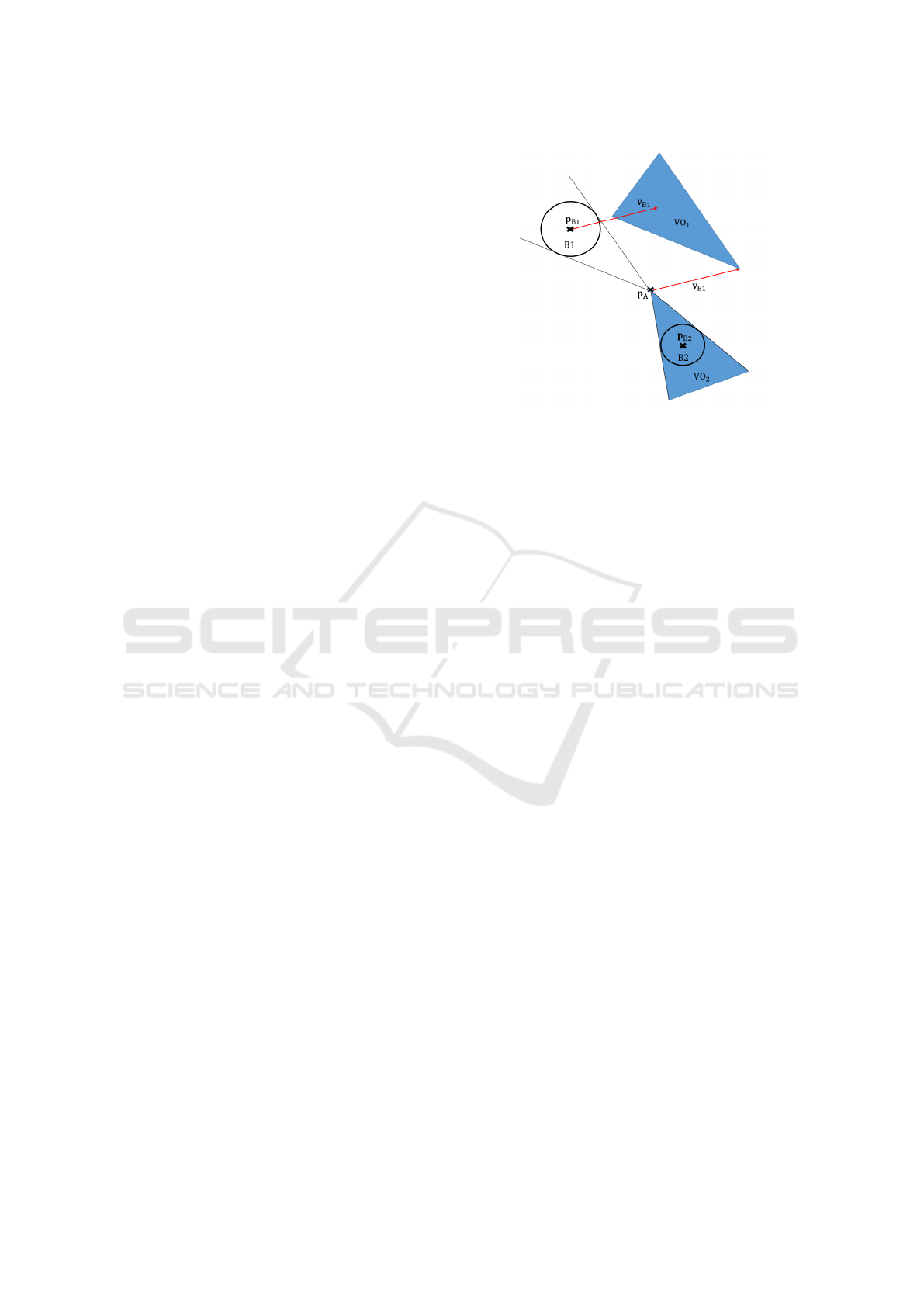

Figure 1: Velocity Obstacles method.

workspace of the agent) and the agent is A.

All velocity vectors of the agent can be calculated

that would cause a collision between the obstacle (B

i

)

and robot (A) in a future time, this sets are the VO

i

cones:

VO

i

= { v

A

| ∃ t : p

A

+ v

A

t ∩ p

Bi

+ v

Bi

t 6= 0} (1)

where v

A

and v

Bi

are the velocity vectors and p

A

and

p

Bi

are the positions of the agent and the obstacle. As

an assumption, both the robot and the obstacles are

disk-shaped and the velocities of the obstacles and the

agent are unchanged until t.

The whole VO can be determined as the union of

the different VO

i

sets if there are more obstacles as:

VO = ∪

m

i=1

VO

i

(2)

Figure 1 represents an example where a moving

obstacle is in position p

B1

and it has velocity v

B1

at the current time. There is a static obstacle in the

workspace with position p

B2

. The two VO areas are

depicted with blue color.

After calculating the VO

i

sets, the Reachable Ve-

locities (RV) can be determined that consist every v

A

velocity vector of the agent that is reachable consid-

ering the previously selected velocity vector. After

the subtraction of the VO from the RV the Reachable

Avoidance Velocities (RAV) can be received.

3 HUMAN TRACKING

VELOCITY OBSTACLES

METHOD

In this Section, the Human Tracking Velocity Obsta-

cles (HTVO) motion planning algorithm is introduced

where the Directive Circle and the Velocity Obstacles

methods are combined and extended. Using this novel

Motion Planning for Mobile Robots using the Human Tracking Velocity Obstacles Method

485

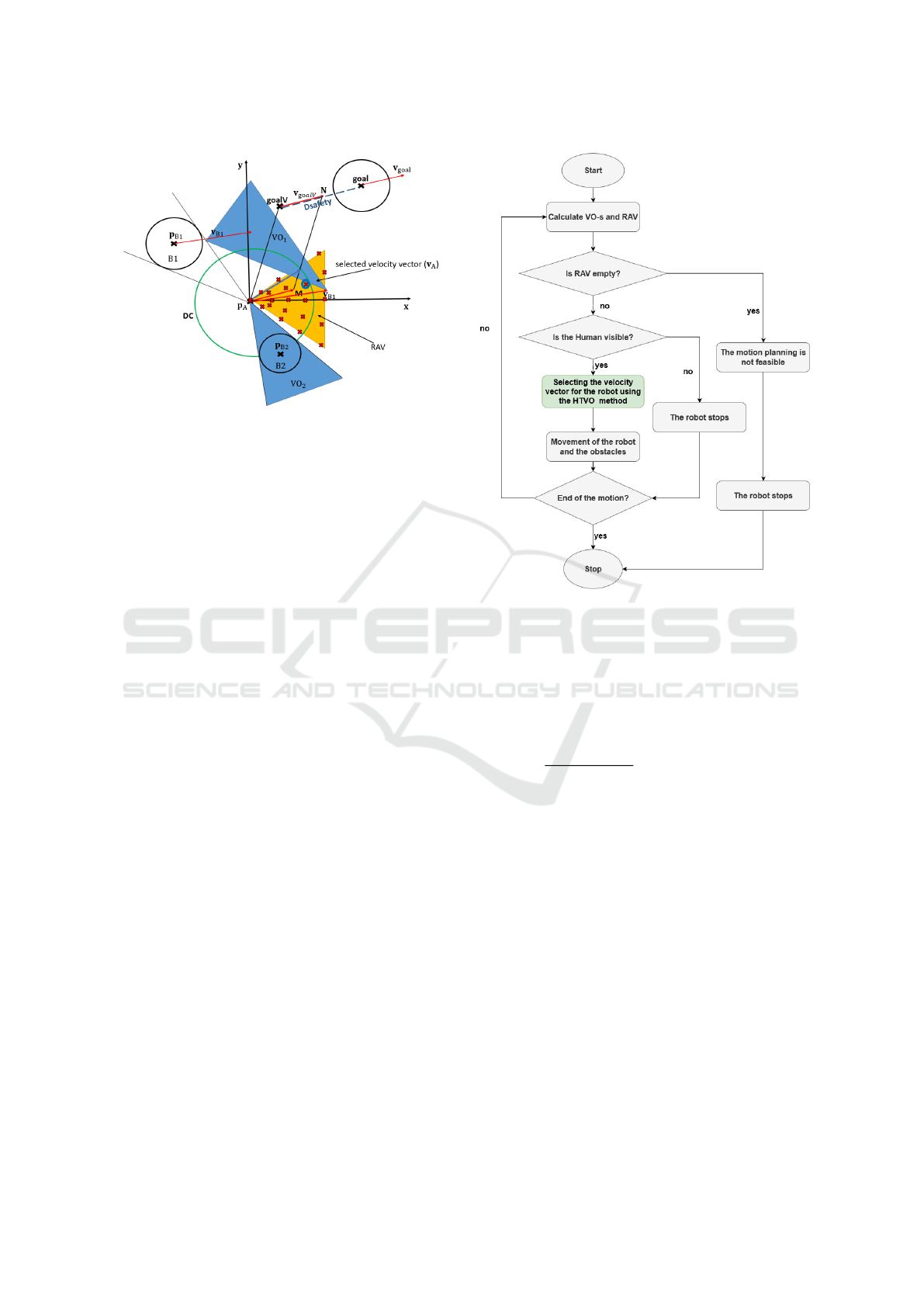

Figure 2: Precheck algorithm.

method, the human tracking and obstacle avoidance

aspects can be considered at the same time, generat-

ing a collision-free motion for the agent in the dy-

namic workspace.

3.1 Precheck Algorithm

At the beginning of the algorithm, it has to be se-

lected, which obstacles play the less role in the mo-

tion of the agent so which VO

i

could be eliminated

from the workspace of the robot at the sampling time.

This step could be called Precheck algorithm.

In Figure 2, the Precheck algorithm is shown.

Two different aspects can be considered while se-

lecting the obstacle to be discarded:

• which obstacle will be reached in the longest time

in the workspace of the robot

• at that time, how large will be the distance be-

tween the robot and the obstacle.

So for every obstacle, the minimum time and dis-

tance must be calculated when the agent and the ob-

stacle are closest to each other during their motion.

t

min

A,Bi

=

−(p

A

− p

Bi

)(v

A

− v

Bi

)

||v

A

− v

Bi

||

, (3)

where t

min

A,Bi

presents the time interval when the robot

and the obstacle will be nearest to each other. If the

value of this parameter is a negative number, then it

was in the past. ||.|| represents the secondary norm.

Between the agent and the obstacle, the minimal

distance can be calculated:

d

min

A,Bi

= ||(p

A

+ v

A

t

min

A,Bi

) − (p

Bi

+ v

Bi

t

min

A,Bi

)||,

(4)

So only those obstacles must be considered that

fulfill the next equation:

0 < t

min

A,Bi

< 2 ∗ T

precheck

AND

d

min

A,Bi

< v

max

∗ T

precheck

(5)

Figure 3: Virtual target and Directive Circle in HTVO.

where v

max

means the maximum velocity that the

robot can reach and T

precheck

is a parameter of the

algorithm that must be tuned. This parameter can

be setted using apriori knowledge. Our experiments

showed that if the value of the T

precheck

parameter is

too small, it generates not a smooth path for the agent.

3.2 Velocity Selection for the Robot

For the human tracking and collision avoidance task,

a novel motion planning algorithm, the Human Track-

ing Velocity Obstacles method can be used which is

based on the combination of Directive circle and the

Velocity Obstacles. Both the obstacles and the hu-

man are represented with circles. In that case, a vir-

tual target must be introduced which is always behind

the real target position (the human that the agent fol-

lows). The distance between the real and the virtual

target position is always constant. In Figure 3, goal

presents the real goal position, v

goal

is the velocity

vector of the human and goalV is the virtual target

position with v

goalV

velocity vector with an end point

of N which is the same velocity vector as the human

has (v

goalV

= v

goal

). The distance between the human

and the virtual target position is the constant Dsafety.

The robot is in position p

A

. The DC circle is pre-

sented with green color which represents a circle with

a radius of the maximum velocity v

max

of the agent.

M denotes the end point of the velocity v

goalV

if it

starts from p

A

. Where M − N section intersects the

DC circle, it will be the velocity vector of the robot

which will generate a motion for the agent resulting

in reaching the virtual target position. This velocity

vector must be selected for the robot if it is in the RAV

set, considering the RV and VO sets in the workspace.

A grid based velocity map is introduced to the RV

set so if the desired velocity vector is not in the RAV

set, then a grid-based velocity selection can be used.

ICINCO 2022 - 19th International Conference on Informatics in Control, Automation and Robotics

486

Figure 4: VOs for HTVO.

In Figure 3, the presented method can be seen.

There are several situations that can be considered

in the velocity selection method (similarly to (Mase-

hian and Katebi, 2007)):

• If there are no intersection point between the DC

and the MN section then the human tracking task

is not solvable because the robot cannot select a

velocity vector that is large enough to reach the

virtual target position

DC ∩ MN =

/

0 (6)

• If there is one intersection point between the DC

and the MN section (p

1

), then it is the solution for

the velocity selection task.

DC ∩ MN = p

1

(7)

v

A

= p

1

(8)

• If there are two intersection points between the

DC and the MN section (p

1

and p

2

), then that

point must be selected which is closer to point N:

DC ∩ MN = {p

1

, p

2

} (9)

v

A

= argmin

p

i

||N − p

i

|| (10)

• If there are two intersection points between the

DC and the MN section (p

1

and p

2

), and N is sit-

uated between these two points, then N must be

selected as the velocity vector of the agent. This

situation can occur when the robot will reach the

virtual target position in the next time step:

DC ∩ MN = {p

1

, p

2

} AND N ∈ p

1

p

2

(11)

v

A

= N (12)

Figure 5: Steps of the HTVO algorithm.

• If the human stands in a position and it has no ve-

locity vector, then the virtual goal is also a static

point. In that case, the robot has to reach the vir-

tual goal position as fast as it is possible. The ve-

locity vector can be calculated as:

v

A

=

goalV − p

A

||goalV − p

A

||

· min(v

max

, ||goalV −p

A

||),

(13)

• If the desired velocity vector that would cause an

appropriate virtual target reaching is not in the

RAV set, then the nearest velocity vector must be

selected from the introduced grid.

DC ∩ MN 6∈ RAV (14)

v

A

= argmin

v

Grid(i)

||(DC ∩ MN) − v

Grid(i)

||,

(15)

where every grid point must be investigated.

In Figure 4, a situation is represented where the

velocity vector which would result the best human

tracking solution is not reachable, so the nearest grid

point will be selected for the agent from the RAV set.

The grid points are the red x-s and the RAV set is pre-

sented with the yellow area.

Motion Planning for Mobile Robots using the Human Tracking Velocity Obstacles Method

487

Figure 6: Simulation result in MATLAB, there is one ob-

stacle in the workspace.

3.3 Visibility Check

In every sampling time, it must be investigated whe-

ther the human that the robot has to follow is visible or

not. If it is visible than the velocity selection method

can be used that was introduced in Section 3.2. The

human is visible if minimum one point is observable

from itself using a sensor (e.g.: LiDAR). If the human

is not visible, the agent stops until the robot receives

information from the human again.

In Section 4, there will be an example, where the

human is not visible.

In Figure 5, the steps of the HTVO algorithm can

be seen. After calculating the VO-s and RAV sets, it

must be checked whether there are available veloc-

ity vectors in the RAV or not. If no, then the motion

planning for the mobile robot is not executable and

the agent stops. If the RAV set is not empty, then the

visibility check is the next step. If the Human is not

visible, then the agent must stop, on the other hand,

if it is visible, then the velocity vector for the robot

can be calculated using the HTVO method. Using this

velocity vector for one time step, the whole method

should execute again until the end of the motion is

not reached.

4 SIMULATION RESULTS

In this section, the simulation results of the Human

Tracking Velocity Obstacles are presented. In every

simulation, the motion planning of an omnidirectional

robot is presented, that follows the human.

4.1 MATLAB Simulation

The motion planning algorithm was implemented first

in MATLAB environment. In every simulation result,

the obstacles have constant velocity vectors. In Fig-

ure 6, there is one obstacle in the workspace of the

robot which crosses the line between the robot and

the human. The obstacle is presented with blue cir-

cle with velocity vector v

B1

and the human (moving

person) is a lila circle with velocity vector v

p1

. The

robot is shown as a green circle with velocity vector

v

A

. The robot has always the opportunity to select the

velocity vector which will result in the human track-

ing method. In the middle of the motion, the human

is not visible because of the obstacle. In that case the

robot slows down and continues the motion only if the

human is visible again. The average running time is

0.0016 s, so the introduced method can be also used

in real time scenarios. The simulations were tested

using the following environment:

• Processor: Intel(R) Core(TM) i5-3320M CPU @

2.60 GHz

• Operation system: Win10, 64 bites

• Memory (RAM): 8,00 GB

• MATLAB 2021a

The motion of the robot can be seen in the following

video (Gyenes, 2022a).

4.2 ROS-Gazebo Simulation

ROS (Robot Operating System) is an open source

software development kit for robotics applications. It

provides developers in a variety of industries with a

standard software platform to aid in research and pro-

totyping, deployment and production.

Robot simulation is a necessary tool in testing

the results of the motion planning algorithms. Well-

designed simulators can quickly test algorithms, de-

sign models, perform tests, and train AI systems

using real-world scenarios. Gazebo provides the

ability to accurately and efficiently simulate robotic

populations in complex indoor and outdoor environ-

ments. To integrate ROS with stand-alone Gazebo, a

set of ROS packages offer wrappers for Stand-alone

Gazebo. They provide the essential interfaces to sim-

ulate robots in Gazebo with ROS messages, services

and dynamic reconfiguration.

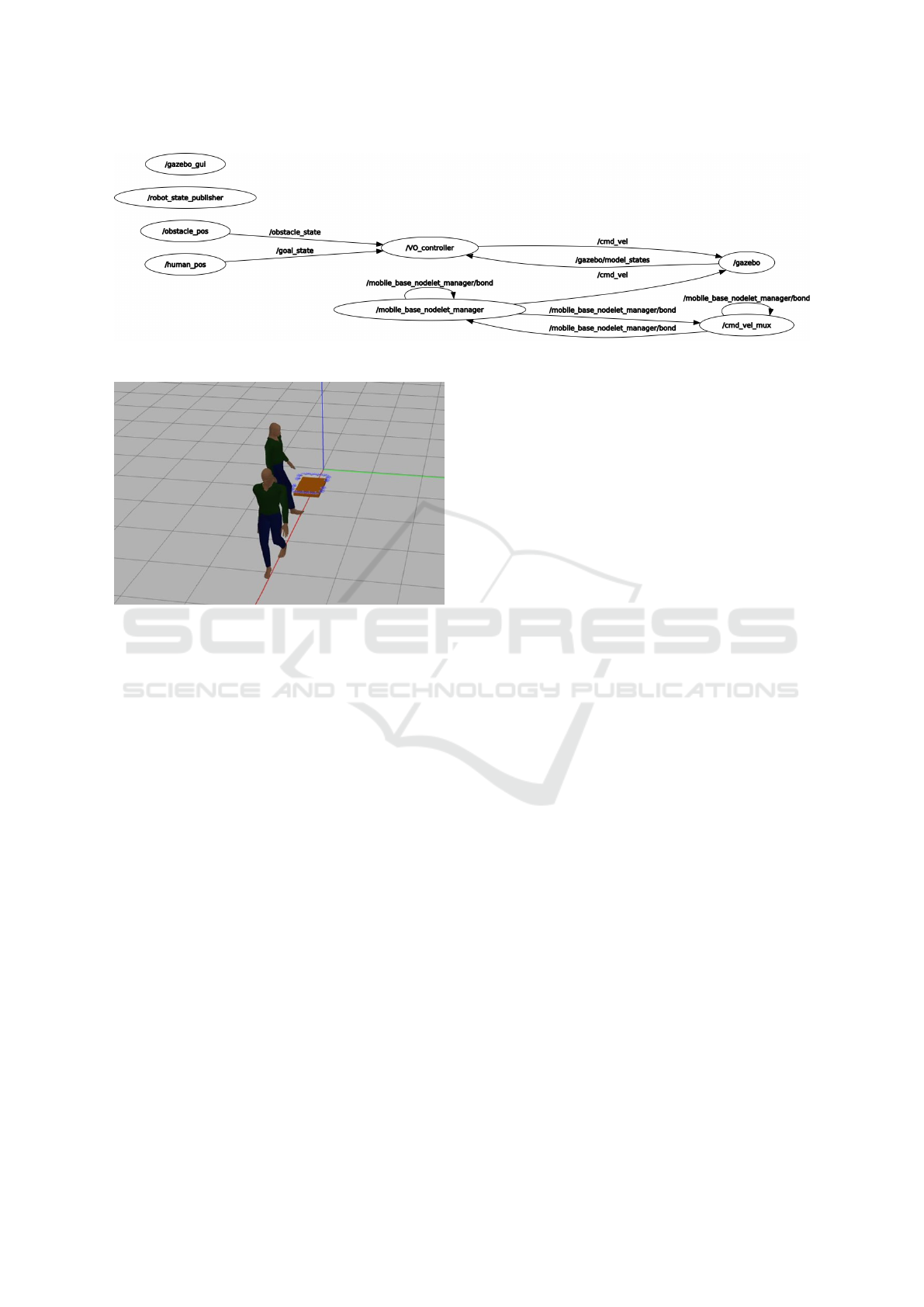

Since in simulation phase, we do not receive data

from real sensor while the controller needs the posi-

tion and velocity information of target and obstacle as

input, two nodes are created in ROS to publish needed

information of goal and obstacle respectively. After-

wards the controller node subscribes the essential data

of virtual objects and then calculates the output ac-

cording to the programmed algorithm and finally pub-

lishes velocity command. The introduced solution of

the ROS graph can be seen in Figure 7.

ICINCO 2022 - 19th International Conference on Informatics in Control, Automation and Robotics

488

Figure 7: ROS graph of the motion planning method.

Figure 8: The robot follows the person while an another

person passes the path.

Two scenarios were tested in ROS-Gazebo envi-

ronment.

1. The mobile robot follows a person who walks

along a straight-line trajectory, while another per-

son (as an obstacle) passes by, which makes the

goal invisible to the mobile robot. In this case, the

robot would stop moving according to the intro-

duced algorithm until it could receive the infor-

mation of its goal again after the obstacle moved

away. The presented example can be seen in Fig-

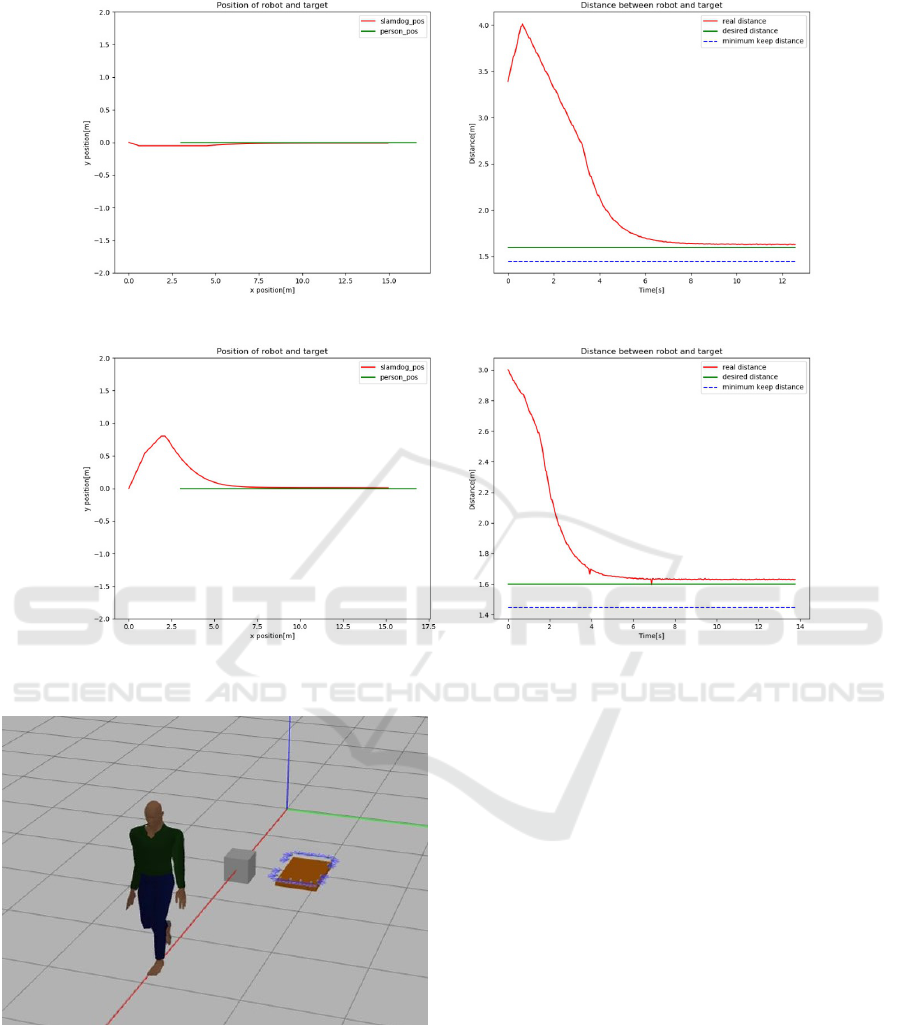

ure 8. Figure 9 left side represents the path of the

robot and the human that the agent follows. It can

be seen that the robot stops when the other per-

son crosses the line and after that follows the tar-

get person. The right side of the picture shows the

distance between the human and the robot, and the

desired distance and the minimum keeping dis-

tance. It can be seen that during the motion, the

distance will be even smaller until the desired dis-

tance is reached. There is a little offset between

the desired and the actual distance in the end of

the motion, it is because the actuators and the de-

lays of the system but it does not influence the so-

lution of the motion planning method. The motion

of the robot can be seen in the following video as

well (Gyenes, 2022b).

2. The mobile robot follows a goal person who walks

along a straight-line trajectory, while a static ob-

stacle stays between the target and robot. The goal

person is still visible to robot although this obsta-

cle exists. In this case the robot should manage

to get around the obstacle generating an evasive

maneuver and continues to track its target.

This case can be seen in Figure 10.

The path of the the motion can be seen in Fig-

ure 11, where an evasive maneuver is generated

considering the static obstacle and after that, the

target person is followed. On the right side of

this figure, the distance can be seen between the

agent and the person, which reaches the desired

distance during the motion of the agent. In both

examples, the human tracking task can be reached

while the collision avoidance task is solved. The

result of the motion can be seen in the following

video (Gyenes, 2022c).

5 CONCLUSIONS

In this work, a novel motion planning algorithm was

introduced for mobile robots where the human track-

ing method can be executed with considering the col-

lision avoidance method at the same time. With this

method, the agent can reach and keep a given distance

to the target person and result in a collision-free mo-

tion in dynamic environment. The novel motion plan-

ning method was tested and validated in two differ-

ent environments in different test-cases. As a future

work, the introduced method would be tested on an

omnidirectional mobile robot (with four omnidirec-

tional wheels). The introduced algorithm can be also

compared with an another human tracking algorithm

based on Model Predictive Control (MPC). The intro-

duced method could be used in the future in different

real case situations (e.g. hospitals, airports) where it

could help the people everyday life.

Motion Planning for Mobile Robots using the Human Tracking Velocity Obstacles Method

489

Figure 9: The path and the distance of the robot and the target.

Figure 11: The path and the distance of the robot and the target in the case if there is a static obstacle between the robot and

the human.

Figure 10: The robot follows the person while a static ob-

stacle is in the workspace.

ACKNOWLEDGEMENTS

The research was supported by the DAAD Short-term

Research Scholarship. Additionally, the research re-

ported in this paper is part of project no. BME-NVA-

02, implemented with the support provided by the

Ministry of Innovation and Technology of Hungary

from the National Research, Development and Inno-

vation Fund, financed under the TKP2021 funding

scheme.

REFERENCES

Ali, B., Iqbal, K. F., Ayaz, Y., and Muhammad, N. (2013).

Human detection and following by a mobile robot us-

ing 3D features. In 2013 IEEE International Confer-

ence on Mechatronics and Automation, pages 1714–

1719. ISSN: 2152-744X.

Bewley, A., Ge, Z., Ott, L., Ramos, F., and Upcroft, B.

(2016). Simple online and realtime tracking. In 2016

IEEE International Conference on Image Processing

(ICIP), pages 3464–3468.

Chiu, H.-K., Li, J., Ambrus¸, R., and Bohg, J. (2021).

Probabilistic 3D Multi-Modal, Multi-Object Track-

ing for Autonomous Driving. In 2021 IEEE In-

ternational Conference on Robotics and Automation

(ICRA), pages 14227–14233. ISSN: 2577-087X.

Ch’ng, C.-H., Liew, S.-Y., Wong, C.-S., and Ooi, B.-Y.

(2020). An Efficient Multi-AMR Control Framework

ICINCO 2022 - 19th International Conference on Informatics in Control, Automation and Robotics

490

for Parcel Sorting Centers. In 2020 IEEE Sensors Ap-

plications Symposium (SAS), pages 1–6.

Fedele, G., D’Alfonso, L., Chiaravalloti, F., and D’Aquila,

G. (2017). Path planning and obstacles avoidance

using switching potential functions. In Proceedings

of the 14th International Conference on Informat-

ics in Control, Automation and Robotics - Volume 2:

ICINCO,, pages 343–350. INSTICC, SciTePress.

Fiorini, P. and Shiller, Z. (1998). Motion planning in dy-

namic environments using velocity obstacles. The in-

ternational journal of robotics research, 17(7):760–

772.

Guo, E., Chen, Z., Fan, Z., and Yang, X. (2020). Real-time

Detection and Tracking Network with Feature Shar-

ing. In 2020 IEEE International Conference on Vi-

sual Communications and Image Processing (VCIP),

pages 519–522. ISSN: 2642-9357.

Gyenes, Z. (2022a). Icinco conference paper, simulation in

matlab. https://youtu.be/SKNefY399Co. Accessed on

2022/04/21.

Gyenes, Z. (2022b). Icinco conference paper, simulation

in ros-gazebo (1). https://youtu.be/FZFnf7xBhAk.

Accessed on 2022/04/21.

Gyenes, Z. (2022c). Icinco conference paper, simulation in

ros-gazebo (2). https://youtu.be/1xABzIMZ3uc.

Jung, J. (2020). Woowa Brothers to Debut New Dilly Drive

at End of Year. https://www.koreatechtoday.com/woo

wa-brothers-to-debut-new-dilly-drive-at-end-of-yea

r/. Section: Food Service.

Kim, A., O

ˇ

sep, A., and Leal-Taix

´

e, L. (2021). EagerMOT:

3D Multi-Object Tracking via Sensor Fusion. In 2021

IEEE International Conference on Robotics and Au-

tomation (ICRA), pages 11315–11321. ISSN: 2577-

087X.

Lenain, R., Nizard, A., Deremetz, M., Thuilot, B., Papot,

V., and Cariou, C. (2018a). Path tracking of a bi-

steerable mobile robot: An adaptive off-road multi-

control law strategy. In Proceedings of the 15th In-

ternational Conference on Informatics in Control, Au-

tomation and Robotics - Volume 2: ICINCO,, pages

163–170. INSTICC, SciTePress.

Lenain, R., Nizard, A., Deremetz, M., Thuilot, B., Papot,

V., and Cariou, C. (2018b). Path tracking of a bi-

steerable mobile robot: An adaptive off-road multi-

control law strategy. In Proceedings of the 15th In-

ternational Conference on Informatics in Control, Au-

tomation and Robotics - Volume 2: ICINCO,, pages

163–170. INSTICC, SciTePress.

Masehian, E. and Katebi, Y. (2007). Robot motion planning

in dynamic environments with moving obstacles and

target. International Journal of Mechanical Systems

Science and Engineering, 1(1):20–25.

Qian, Y., Shi, H., Tian, M., Yang, R., and Duan, Y. (2020).

Multiple Object Tracking for Similar, Monotonic Tar-

gets. In 2020 10th Institute of Electrical and Elec-

tronics Engineers International Conference on Cyber

Technology in Automation, Control, and Intelligent

Systems (CYBER), pages 360–363. ISSN: 2379-7711.

Reiser, U., Connette, C., Fischer, J., Kubacki, J., Bubeck,

A., Weisshardt, F., Jacobs, T., Parlitz, C., H

¨

agele, M.,

and Verl, A. (2009). Care-O-bot® 3 - creating a prod-

uct vision for service robot applications by integrating

design and technology. In 2009 IEEE/RSJ Interna-

tional Conference on Intelligent Robots and Systems,

pages 1992–1998. ISSN: 2153-0866.

Valdez, M., Cook, M., and Potter, S. (2021). Humans and

robots coping with crisis – Starship, Covid-19 and

urban robotics in an unpredictable world. In 2021

IEEE International Conference on Systems, Man, and

Cybernetics (SMC), pages 2596–2601. ISSN: 2577-

1655.

Wojke, N., Bewley, A., and Paulus, D. (2017). Simple on-

line and realtime tracking with a deep association met-

ric. In 2017 IEEE International Conference on Image

Processing (ICIP), pages 3645–3649.

Xiang, Y., Alahi, A., and Savarese, S. (2015). Learning

to Track: Online Multi-object Tracking by Decision

Making. In 2015 IEEE International Conference on

Computer Vision (ICCV), pages 4705–4713, Santiago,

Chile. IEEE.

Yang, M. and Jia, Y. (2016). Temporal dynamic appearance

modeling for online multi-person tracking. Computer

Vision and Image Understanding, 153:16–28.

Motion Planning for Mobile Robots using the Human Tracking Velocity Obstacles Method

491