Automatic Segmentation of the Cervical Region in Colposcopic Images

Paloma Cepeda Andrade, Sesh Commuri

University of Nevada, Reno, 1664 N Virginia St, Reno, NV, U.S.A.

Keywords: Cervical Cancer, Colposcopy, Image Segmentation, LAB Color Space, Morphological Filtering, K-means.

Abstract: Cervical cancer is one of the most common cancers affecting women, especially in developing countries and

in resource-constrained areas in the western world. Although it is easily treatable if detected early, the lack of adequate

resources and skilled physicians makes this disease difficult to detect and treat. In this paper, we propose a

vision-based approach anchored in machine-learning principles to detect and quantify lesions on the surface

of the cervix. Preliminary results indicate that the proposed method can segment images of the cervix and

successfully detect lesions and other artifacts. The image normalization approach can also determine the locations

of lesions and their spread. Validation of this approach during clinical trials is being pursued as the first step

towards developing low-cost bioinformatics-based screening tools for early detection of cervical cancer.

1 INTRODUCTION

Cervical cancer is the fourth most frequent cancer in

women worldwide and represents 6.6% of all cancers

affecting women (WHO, 2019). Most cervical cancer

cases are caused by various strains of the Human

Papilloma Virus (HPV). There are currently vaccines

that protect against common cancer-causing types of

HPV and can reduce the risk of cervical cancer.

Cervical cancer is treatable if detected and

diagnosed early. Typical screening methods for

detecting cervical cancer currently include techniques

such as Papanicolaou (Pap) smear, HPV typing, and

colposcopy. Cost-effective options, such as Visual

Inspection with Acetic Acid (VIA), and Visual

Inspection with Lugol Iodine (VILI) are

recommended as the best screening methods in

developing countries (Sankaranarayanan et al., 2003).

Cryotherapy or loop electrosurgical excision

procedure (LEEP) (Basu & Sankaranarayanan, 2017)

can provide effective and appropriate treatment for

most women who screen positive for precancerous

lesions, and “screen-and-treat” and “screen, diagnose

and treat” are both valuable approaches.

Detection of cervical precancerous lesions can be

done by examining the cervix, vulva, and vagina

through a colposcope. This procedure, called

colposcopy, is used to detect genital warts,

inflammation of the cervix (cervicitis), and

precancerous changes in the tissue of the cervix,

vagina, and vulva. During colposcopy, the vaginal

walls are held open using a speculum and the surface

of the cervix is illuminated using a light source. The

colposcope (shown in Figure 1) is then used to

visually examine the cervix and the adjacent tissues.

Figure 1: Left: Standard colposcope. Right: Portable

colposcope (Basu & Sankaranarayanan, 2017).

During cervical examination, the cervix is first

rinsed with saline solution and then stained with 3-

5% acetic acid (Sankaranarayanan et al., 2004). If the

cervical epithelium contains an abnormal load of

cellular proteins, acetic acid causes these proteins to

coagulate. As a result, the affected areas appear

opaque or white under visual inspection. The higher

the grade of neoplasia, the greater the density of

acetowhitening (AW) (Li & Poirson, 2006). There are

Andrade, P. and Commuri, S.

Automatic Segmentation of the Cervical Region in Colposcopic Images.

DOI: 10.5220/0010835200003123

In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2022) - Volume 1: BIODEVICES, pages 66-73

ISBN: 978-989-758-552-4; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

also other characteristics, such as morphological

changes (for example, tissue shape, mosaic, and

punctation vessels), that can indicate

abnormalities (Li & Poirson, 2006). Such factors

usually require an expert to determine diagnosis.

Abnormal growth of cells on the surface of the

cervix, if untreated, can potentially lead to cervical

cancer. Cervical Intraepithelial Neoplasia (CIN) or

Cervical Dysplasia, refers to the potentially

precancerous transformation of cells of the cervix.

Presence of dysplasia can be further investigated

through Pap smear and biopsy. If detected early, CIN

can be managed by treatment and full recovery is

possible (Castle, Stoler, Solomon, & Schiffman,

2007). However, it is difficult to quantify different

characteristics of these lesions unless the patient is

examined by a trained professional. Even then,

diagnosis can be highly subjective and may lead to

misdiagnosis or an unnecessary biopsy (Castle et al.,

2007; Kudva & Prasad, 2018).

While screening and treatment have been quite

effective in reducing the occurrence of cancer in the

developed world, the need for expensive equipment,

trained colposcopists, and clinical infrastructure has

limited its benefit in other parts of the world. In 2018,

around 570,000 women were diagnosed with cervical

cancer worldwide, and nearly 311,000 women died

from this treatable disease (WHO, 2019). There are

many contributing factors to this alarmingly high rate

of death. These include social stigma, lack of

awareness and access to care, poor staining technique

during colposcopy, poor magnification and resolution

of the available devices in the market, cumbersome

nature of colposcopes due to size and need for

electrical power source, and the need for multiple

visits to the medical clinics, which can be unpleasant

and time-consuming experiences for the patient

(WHO, 2019).

Therefore, there is a need for a low-cost and

portable tool for screening and detecting abnormal

changes in the cervix that may later develop into

invasive cancer. Further, the system must be built

with automated detection and diagnosis capabilities

to enable use by health workers in the field where

experienced doctors and colposcopists are not

available.

Detection of lesions from images of the cervix has

been attempted by several researchers. These

approaches utilize digital images of the cervix

obtained during colposcopic examination. Staining

the cervix with acetic acid exposes any lesions

present as white areas (acetowhite regions) under

illumination. While these AW regions can be

detected, these regions are often distorted by the

presence of glare from the light source, reflections

from the speculum, as well as portions of the

speculum and other artifacts that are captured in the

image. Automatic detection of AW regions requires

the removal of glare, reflections, and other artifacts

from the image, extraction of the cervical region

of interest, and detection of the AW regions.

Further, it is advantageous to locate the lesion and

determine its spread as such information can be used

for targeted biopsy instead of the four-quadrant

biopsy procedure that is commonly used.

In this paper, we present our approach to detection

of precancerous lesions on the cervix. In this

approach we first detect glare and specular reflection

(SR) in the image. This is followed by image

segmentation to identify the cervical region of interest

(ROI). The ROI is then used to detect the presence of

AW regions that are indicative of lesions. The use of

this algorithm in detecting lesions is demonstrated

using two colposcopy datasets that are available in the

public domain (Basu & Sankaranarayanan, 2017; Fernandes et al., 2017). The method and

results are discussed in detail in Sections 3 and 4.

2 LITERATURE REVIEW

2.1 Acquisition of Cervical Images

Automated detection of CIN has benefited from

studies over the past several decades where digital

images of the cervix were collected. Initial efforts

include the Guanacaste Project in Costa Rica between

1993 and 1994 to investigate the role of HPV in the

development of CIN (Bratti et al., 2004). In this

project, researchers used a fixed-focus camera to

obtain cervical images of over ten thousand women.

Archived digitized cervical images from this project

were later used by Hu and co-researchers to develop

a method based on deep learning algorithm for

automated visual evaluation of cervical images (Hu et

al., 2019).

The ASCUS/LSIL Triage Study for Cervical

Cancer (ALTS) was a clinical trial conducted in the

United States between 1996 and 2000. ASCUS stands

for Atypical Squamous Cells of Undetermined

Significance and LSIL for Low-Grade Squamous

Intraepithelial Lesions (Schiffman & Adrianza,

2000). The images collected have also been used in

several research efforts (Greenspan et al., 2009; Guo

et al., 2020) to automate detection of precancerous

lesions on the cervix. While these two studies resulted

in a wealth of digital images that spurred research into

automated analysis of these cervical images, the lack

of uniformity in the imaging process, varying light

intensity and focal lengths of the cameras, and

varying sizes of the images, make the analysis of

these images extremely difficult.

2.2 Detection and Removal of Glare

and Specular Reflections (SR)

One of the limiting factors in automatic detection of

CIN is the presence of specular reflections in cervical

images. The presence of moisture on the surface of

the cervix causes reflection of the light source. These

reflections, termed as Specular Reflections (SR),

appear as bright white spots in the cervigrams and

make it difficult to distinguish between AW regions

and reflections.

As mentioned in the previous section, staining the

cervix with acetic acid can expose potential

precancerous lesions. Under normal circumstances,

the epithelium remains transparent after the

application of acetic acid, but when a precancerous

lesion is present, the epithelium becomes opaque, and

the reflection of light gives it a white color (Basu &

Sankaranarayanan, 2017). An example of this

occurrence is shown in Figure 2. The image on the

left is of a cervix before the application of acetic acid.

The image on the right is of the same cervix, after

application of acetic acid, with presence of

acetowhite (AW) features. It can also be seen that SR

on the cervical image will also appear white in color,

but with high brightness and low saturation values.

This presents a problem because it can blend in with

the AW features of interest and produce incorrect

classification or diagnostic results. Therefore, it is

necessary to identify these SR areas prior to any

medical assessment.

Figure 2: Cervix before (left) and after (right) application

of acetic acid. The AW area (blue line) is made visible after

applying acetic acid (Basu & Sankaranarayanan, 2017).
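The observation that SR pixels combine high brightness with low saturation can be illustrated with a simple threshold test in HSV space. The following is a minimal sketch, not the detection method used in this paper: the function name `specular_mask` and the thresholds `v_min` and `s_max` are hypothetical values chosen only for illustration.

```python
import colorsys

def specular_mask(pixels, v_min=0.9, s_max=0.2):
    """Flag pixels that are very bright and weakly saturated.

    pixels: 2-D list of (R, G, B) tuples with components in 0..255.
    v_min, s_max: hypothetical thresholds, not taken from the paper.
    """
    mask = []
    for row in pixels:
        mask_row = []
        for r, g, b in row:
            # colorsys expects components scaled to the range 0..1.
            _, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            mask_row.append(v >= v_min and s <= s_max)
        mask.append(mask_row)
    return mask
```

Because AW tissue is also whitish, a test of this kind only produces SR candidates; in practice the thresholds would need to be tuned on annotated images.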

Several researchers have attempted to recover

information occluded by glare and SR. Lange (Lange,

2005) implemented adaptive thresholding to obtain

glare feature maps and remove glare, followed by a

filling algorithm to estimate the color and texture in

the affected areas. Meslouhi and co-investigators

(Meslouhi et al., 2011) proposed an automatic glare

extraction and feature inpainting method based on

obtaining and comparing luminance components

from both RGB and CIE-XYZ color spaces. Das and

Bhattacharyya (Das et al., 2011) developed an

algorithm to perform glare removal through the

design of morphological filters and with subsequent

inpainting using inward interpolation from the pixels

outside the detected area. Bai and co-researchers (Bai

et al., 2018) also aimed to detect areas with SR and

restore the area through a filling algorithm.

Region-filling algorithms may provide an

estimate of what the area would look like without SR.

However, this estimate may forego some texture

features that can be of importance in deciding the

severity of a precancerous lesion.

2.3 Extraction of the Region of Interest

Color images and the correct representation of

features are essential when working with digital

medical images. The most common representation of

color in images in computer monitors is the sRGB

(standard RGB) color space. RGB stands for the three

primary colors: red, green, and blue. Images

represented in this color space are formed by

combining arrays containing values of these

individual color components. For most digital

images, every possible color can be formed with

values in the range [0, 255] for each primary color

component, in the form $C_i = (R_i, G_i, B_i)$, where

$0 \le R_i, G_i, B_i \le 255$ (Burger & Burge, 2016). While

sRGB is an accepted color standard for screens, it is

limited when trying to extract desired features, as

it has no explicit lightness or saturation components, which are

good indicators of a lesion present on the cervix.

A different color representation of an sRGB

image can make it easier to extract meaningful

information. The LAB color space was developed to

linearize the representation with respect to human

color perception and to generate a more intuitive

color space. Color is expressed as three dimensions:

L* which represents luminosity; and a* and b*, which

are the components that specify hue and saturation

along a green-red and blue-yellow axis, respectively

(Burger & Burge, 2016). An implementation of this

color space to observe a cervical image is illustrated

in Figure 3. Note that the L* channel presents

information pertaining to the brightness in the image,

a useful feature in detecting AW regions.

Figure 3: Cervix image converted to CIELAB color space,

left to right: Original; L* channel; a* channel; b* channel.
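The conversion from sRGB to LAB described above can be sketched for a single pixel as follows. This is an illustrative implementation of the standard sRGB to CIE XYZ to CIELAB transform under a D65 white point, not necessarily the routine used to produce Figure 3; the function name `srgb_to_lab` is our own.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIELAB (D65 white point)."""
    def linearize(c):
        # Undo the sRGB gamma encoding.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    # Linear RGB to CIE XYZ (sRGB primaries, D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # Cube root with a linear segment near zero.
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3 * delta ** 2) + 4.0 / 29.0

    # Normalize by the D65 reference white before applying f.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)
```

White maps to approximately L* = 100 with a* and b* near zero, and reddish pixels yield positive a* values, which is why the a* channel separates pink cervical tissue from whiter regions.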

BIODEVICES 2022 - 15th International Conference on Biomedical Electronics and Devices

Analysis of a cervical image can be difficult due

to the presence of artifacts such as the speculum in the

image. These artifacts do not present useful

information and are detrimental to the automated

detection of lesions. Therefore, detection of the

cervical area plays an important role in assessment of

colposcopic images by highlighting only the region of

interest (ROI). Accurate detection of lesions on a

cervix involves removal of glare and SR on the

cervical epithelium, accurate delineation of the

cervical region, and identification, location, and

spread of lesions represented by AW regions.

Figure 4 shows a color image of a cervix. This

image contains the speculum used in colposcopy, as

well as interference by the reflection of light on the

cervical epithelium. Manual delineation of the region

of interest and the AW region diagnosed as Cervical

Intraepithelial Neoplasia of Grade 1 (CIN1) are also

presented.

Figure 4: Manual segmentation of the cervical region of

interest (ROI), acetowhite staining (AW), and specular

reflection (SR).

Several researchers have investigated automatic

segmentation of the cervical region to detect lesions.

Using hue, saturation, and brightness (HSV color

space) information in images, Bai and co-researchers

were able to segment cervical images and extract the

ROI. Their approach was shown to have accuracy,

specificity, and sensitivity of 87.25%, 81.99% and

96.70%, respectively (Bai et al., 2018). Das and

Choudhury (Das & Choudhury, 2017) also attempted

to obtain the cervical ROI and detect lesions by

transforming cervical images into the HSV color

space. However, they do not provide validation

information to demonstrate that their method can

reliably extract the ROI in digital images of the

cervix.

Tariq and Burney (Tariq & Burney, 2014) used k-

means segmentation to extract the ROI from images

of the cervix. In their analysis, they found that k-

means ROI segmentation results in better accuracy

when images in LAB color space are used in

comparison to RGB images. Das and other

investigators (Das et al., 2011) proposed an algorithm

to detect the cervical ROI and AW lesions using the

L* channel of the LAB color space. They achieved

accuracy between 89% and 91% but provided no other

quantitative metrics to validate their approach.

While these results demonstrate the advantages of

using image analysis techniques to study cervigrams,

existing results in literature do not adequately address

all the challenges in analyzing images of the cervix.

3 METHODS

In this paper, we present a five-step approach to

analyzing cervigrams and detecting the presence of

lesions on the cervix. These steps are as follows:

1. Convert the cervical image from sRGB to

LAB color space and combine the

information from the L* and a* channels.

2. Use the k-means algorithm to obtain clusters,

segment the image, and identify the cervical

ROI.

3. Implement morphological filters to eliminate

holes and connect similar regions.

4. Automatically crop the segmented and

filtered image to maximize the ROI.

5. Identify AW lesions and calculate their area

in proportion to the cervix.

These steps will be further discussed in the next

subsections.

3.1 Data Acquisition

We have developed a prototype of the Cervitude

Imaging System (CIS). This prototype consists of a

probe equipped with a ranging sensor, a camera, and

LEDs for illuminating the cervix. The device includes

a microprocessor circuit that can control the intensity

of the LEDs and provides two-way communication

with the CIS application running on a host system

such as a computer, Android/iPhone, or a tablet. The

CIS application is built around an image analysis

algorithm that can quickly detect and locate lesions

on the surface of the cervix. With this device, we aim

to provide a portable, low-cost, solution to

communities that lack the necessary resources and

medical personnel. We are currently conducting

a clinical study to perform preliminary

testing and to obtain our images using this prototype.

Two small datasets of cervical images that are

available online were used to demonstrate the

algorithms presented in this paper.

The first dataset used was obtained through the

Atlas of Colposcopy (Basu & Sankaranarayanan, 2017).

The Atlas of Colposcopy was developed by the

International Agency for Research on Cancer (IARC)

and provides detailed information on everything

related to colposcopy. It is designed to serve as a

comprehensive manual for anyone working in this

area, both in the medical field and academic research.

This website contains a small repository of images

(107 in total) labeled in order of severity, from

“Normal” to “CIN-1”, “CIN-2”, “CIN-3”, and

“Carcinoma in situ (CIS)”. These images are of

relatively high quality, with a size of 600 x 800 pixels,

which makes them suitable for the segmentation

approach presented in this paper. The main drawback

is that there are no annotations available regarding the

cervical ROI, so a qualitative evaluation on the

segmentation results will be made.

The next image dataset that was used for analysis

was made publicly available online (see footnote 1) by Fernandes and

co-researchers (Fernandes et al., 2017). This dataset

contains a total of 284 colposcopic images with

annotations for the cervical ROI. Out of these, we will

use 91 images, which represent the cervical region

after acetic acid has been used. The annotations

provided will be used to validate our segmentation

algorithm. However, a downside is that there is no

pathological information available, so AW lesion

segmentation cannot be validated with this dataset.

3.2 Image Pre-processing

Our image pre-processing approach addresses the

challenges in cervical image analysis in the following

ways: by identifying regions containing SR, and by

preparing the image for k-means segmentation

through conversion of the images to LAB color space.

From Figure 3, it can be observed that both the L* and

a* channels can provide meaningful information.

Therefore, this method uses the information obtained

after combining these two channels, as they will bring

out the areas that present a high level of brightness

and absence of pink or red colors. The results of

combining these two channels are shown in Figure 5.

Figure 5: Visualization of the combination of L* and a*

channels. Left: original images. Right: resulting images.

Footnote 1: https://archive.ics.uci.edu/ml/datasets

The results from Figure 5 show a way to interpret

specular reflections as an undesired feature for

diagnosing a precancerous lesion. Image (a) is that of

a healthy cervix, so correctly detecting these natural

features is essential to avoid over-treating a patient

for a misdiagnosed illness. Similarly, image (b)

shows the correct identification of areas with SR,

while leaving out the AW region.
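The combination of the L* and a* channels is described in Section 3.3 as an element-wise multiplication. A minimal sketch of such a combination is given below; the min-max rescaling of each channel to [0, 1] before multiplying is an assumption made here so that the example is well defined (a* can be negative), and the helper names `min_max` and `combine_channels` are hypothetical.

```python
def min_max(channel):
    """Rescale a 2-D list of numbers to [0, 1]; a constant channel maps to zeros."""
    flat = [v for row in channel for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    return [[(v - lo) / span if span else 0.0 for v in row] for row in channel]

def combine_channels(l_channel, a_channel):
    """Element-wise product of the rescaled L* and a* channels."""
    l_n, a_n = min_max(l_channel), min_max(a_channel)
    return [[lv * av for lv, av in zip(l_row, a_row)]
            for l_row, a_row in zip(l_n, a_n)]
```

The resulting single-channel image can then serve as the input to the clustering step.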

3.3 Segmentation of the Cervical

Region

Image segmentation is a critical step when processing

images for classification. Through this process, an

image can be split into a given number of regions. The

k-means algorithm is a type of unsupervised

clustering algorithm commonly used for image

segmentation (Luo et al., 2003) due to its simplicity

and speed. It divides a dataset (in this case, an image)

into K classes, such that similar regions are clustered

together. The objective is to find the center of each

cluster $C_i$ and to assign all sample points in the

vicinity of the center to that cluster (Tariq & Burney,

2014).

The center of each cluster is the mean of the data

points which belong to it. A Euclidean distance

measurement:

$$D(x, y) = \lVert x - y \rVert \tag{1}$$

is used to determine which cluster a data point

belongs to. The algorithm works as follows:

1. For a given number of clusters K, the data points are

randomly assigned to the K clusters.

2. The center of each cluster is calculated.

3. The distance from each point to each cluster

center is calculated.

4. Each point is reassigned to the nearest

cluster.

Steps 2-4 are repeated iteratively until there are no

changes in the grouping.
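The four steps above can be sketched for scalar features, such as the pixel values of a single-channel image, where the Euclidean distance reduces to an absolute difference. This is an illustrative sketch rather than the implementation used in our experiments: the function name `kmeans_1d`, the fixed `seed`, the `max_iter` cap, and the re-seeding of empty clusters are assumptions added to make the example self-contained.

```python
import random

def kmeans_1d(values, k, seed=0, max_iter=100):
    """Cluster scalar values with k-means, following the four steps above."""
    rng = random.Random(seed)
    # Step 1: random initial grouping.
    labels = [rng.randrange(k) for _ in values]
    centers = [0.0] * k
    for _ in range(max_iter):
        # Step 2: each center is the mean of its members.
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            if members:
                centers[j] = sum(members) / len(members)
            else:
                # Assumption: an empty cluster is re-seeded at a random sample.
                centers[j] = rng.choice(values)
        # Steps 3-4: measure distances and reassign each point to the nearest center.
        new_labels = [min(range(k), key=lambda j: abs(v - centers[j]))
                      for v in values]
        if new_labels == labels:
            break  # no change in the grouping: converged
        labels = new_labels
    return labels, centers
```

For an image, `values` would be the flattened pixel intensities, and the returned labels would be reshaped to the image dimensions to obtain the segmentation.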

The input to our k-means segmentation algorithm

is the image resulting from the element-wise

multiplication of the L* and a* channels. Then, we

implement the k-means algorithm to obtain three

clusters. Finally, we implement morphological

filtering using a hole-filling operation, followed by an

opening operation, which removes small dark spots

on the image, and connects the bigger, white, areas.

In most cases, this leaves a single connected region in the binary

mask, which indicates the cervical ROI. In a few

cases, two or more resulting areas will appear. If that

occurs, the largest area will be considered as the ROI.
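Selecting the largest area when several regions remain can be sketched with a breadth-first search over the binary mask. The example below is illustrative only; the function name `largest_component` and the choice of 4-connectivity are assumptions, since the connectivity rule is not specified here.

```python
from collections import deque

def largest_component(mask):
    """Keep only the largest 4-connected foreground region of a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first search collects one connected region.
            region, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(region) > len(best):
                best = region
    # Rebuild a mask that contains only the largest region.
    out = [[0] * cols for _ in range(rows)]
    for r, c in best:
        out[r][c] = 1
    return out
```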

4 RESULTS AND DISCUSSION

4.1 Atlas of Colposcopy Dataset

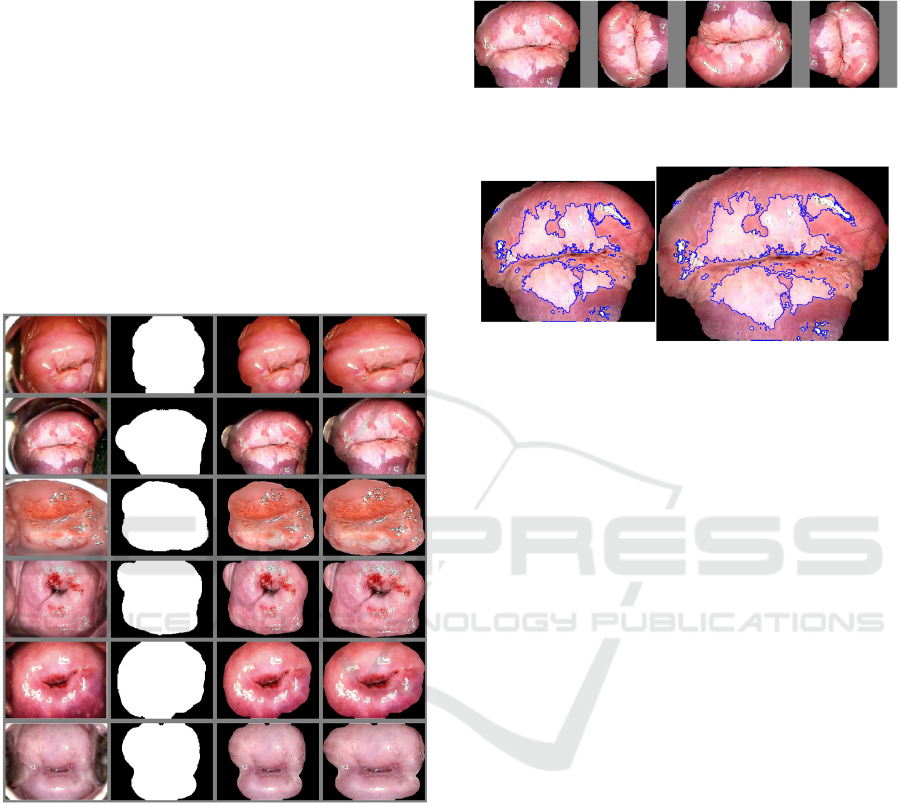

Figure 6 shows the segmentation results on the Atlas

of Colposcopy dataset. The first column shows the

original RGB colposcopy image. The second column

displays the resulting outline after LAB color space

conversion and our segmentation algorithm. The third

column represents the segmented colposcopic image,

without the speculum and outer edges of the cervical

region.

Figure 6: ROI Segmentation Results. Left to right: Original

image; binary mask; segmented image; cropped and resized

segmented image.

Additionally, an auto-crop function was created to

ensure that the cervical area remains at the center of

the processed image. The results are shown in the

fourth column in Figure 6. If the image is rotated, the

region of interest will remain at the center. An

example is illustrated in Figure 7. The size of the

segmented and cropped image is 486 x 599 pixels.
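One simple way to realize such an auto-crop is to cut the image to the bounding box of the ROI mask, which places the cervical region at the center of the output regardless of where it appeared in the input. The sketch below assumes this bounding-box strategy, which may differ from our actual function; the name `crop_to_roi` is hypothetical.

```python
def crop_to_roi(image, mask):
    """Crop a 2-D image (list of rows) to the bounding box of the nonzero mask pixels."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return []  # empty mask: nothing to crop
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]
```

The cropped result can then be resized to a common size so that images from different devices or visits are directly comparable.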

To detect the presence of AW lesions, the k-

means algorithm was used to perform automatic

segmentation of the image in Figure 7. The image

represents a cervix with CIN 2, where a portion of the

cervix is covered by AW lesions. Without the

presence of the speculum and the background, the

AW region detection was successful. The result is

shown in Figure 8.

Figure 7: Segmentation and auto-cropping of cervical

image after 90-degree rotation.

Figure 8: AW lesion detection results. Left: Cropped image.

Right: Cropped and resized image.

From Figure 8, the area of the identified AW

regions in the cropped image covers 35.39% of the

cervical ROI. When the image is resized to its original

dimensions, the segmentation algorithm detects that

the AW area covers 35.34% of the cervical ROI. The

two estimates differ by only 0.05 percentage points, so

resizing the cropped and segmented image leaves the AW area

proportion essentially unchanged.

The ROI segmentation and auto-cropping therefore

preserve the ratio of the AW region with

respect to the area of the cervix. The process is almost

unaffected by the size and orientation of the original

image. Therefore, the procedure can be used during

cervical screening using portable colposcopes or

other imaging devices. Further, the procedure affords

a simple way to compare screening results from

several patient visits and can be used to document the

efficacy of the treatment regimen.
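The AW coverage figures quoted above are ratios of pixel counts. A minimal sketch of that calculation, assuming the AW and ROI segmentations are available as binary masks of equal size (the function name `aw_percentage` is hypothetical), is:

```python
def aw_percentage(aw_mask, roi_mask):
    """Percentage of ROI pixels that are also flagged as acetowhite."""
    roi = sum(1 for roi_row in roi_mask for v in roi_row if v)
    both = sum(1 for aw_row, roi_row in zip(aw_mask, roi_mask)
               for a, v in zip(aw_row, roi_row) if a and v)
    return 100.0 * both / roi if roi else 0.0
```

Because the result is a ratio, it is largely insensitive to uniform resizing, which is consistent with the small difference between the two percentages reported for Figure 8.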

4.2 Fernandes Dataset

We also performed our ROI segmentation algorithm

on the images from the Fernandes dataset. Figure 9

shows our results compared to the provided

annotations. The first column contains the original

images, the second column shows our segmentation

results with true negative values in black, true

positive values in white, false negative values in blue,

and false positive values in red. The third column

shows the ground truth annotations, and the fourth

column shows the final, segmented image.

Figure 9: ROI Segmentation Results. Left to right: Original

image; binary mask with false negative segmentation in

blue, and false positive segmentation in red; ground truth;

segmented image.

The false negative (FN) values are those which are

annotated as cervix, and which our algorithm

classified as non-cervix. While we consider this

dataset to be of relatively good quality, in many cases

the illumination in the images is not adequate, and

shadows present in them can affect the performance

of our algorithm. We observed that most of our errors

are FN, where our algorithm did not include

shadowed areas in the cervix as part of the ROI. Even

though we do not have annotations in our other

dataset, a qualitative assessment of our results

indicates that if the ROI is well illuminated, our FN

rate will go down.

The quantitative evaluation of our algorithm is

shown in Table 1. Our results show that the average

accuracy of the segmentation algorithm was 83.33%.

In fact, 67 out of 91 images were segmented with

accuracy over 80%. Specificity is the

proportion of pixels outside the ROI that our algorithm

correctly classifies as background. The average specificity

was 86.63%. Our average sensitivity, the proportion of

ROI pixels that our algorithm correctly identifies as part

of the ROI, was 81.12%. Precision is the

proportion of pixels classified as part of the cervix

that truly belong to the cervix rather than the

background (Gerig et al., 2001). The average

precision was 80.53%.
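These metrics follow from the pixel-wise counts of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN) between the predicted mask and the annotation. The sketch below uses the standard definitions; the function name `segmentation_metrics` and the convention of returning 0 for an undefined ratio are assumptions made for this example.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for two binary masks given as 2-D lists of 0/1."""
    tp = fp = tn = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1   # cervix predicted, cervix annotated
            elif p:
                fp += 1   # cervix predicted, background annotated
            elif t:
                fn += 1   # background predicted, cervix annotated
            else:
                tn += 1   # background in both

    def ratio(num, den):
        # Assumption: an undefined ratio is reported as 0.
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    sensitivity = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "specificity": ratio(tn, tn + fp),
        "sensitivity": sensitivity,
        "precision": precision,
        "f1": ratio(2 * precision * sensitivity, precision + sensitivity),
    }
```

The function returns fractions in [0, 1]; multiplying by 100 gives percentages of the kind reported in Table 1.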

Table 1: Accuracy and performance of our segmentation

algorithm.

| Performance Metric | Max (%) | Min (%) | Median (%) | Mean (%) |
|---|---|---|---|---|
| F1 Score | 95.50 | 47.36 | 82.07 | 79.46 |
| Accuracy | 97.44 | 58.32 | 83.84 | 83.33 |
| Specificity | 100 | 58.77 | 88.83 | 86.63 |
| Sensitivity | 99.72 | 33.24 | 81.58 | 81.12 |
| Precision | 100 | 42.82 | 81.73 | 80.53 |

Our experimental results show that our

segmentation algorithm performs well on this dataset,

with accuracy, specificity, and sensitivity of

up to 97.44%, 100%, and 99.72%, respectively. This

suggests that the algorithm could segment the cervical

region in clinical practice, pending clinical validation. We

have shown that, given a small amount of data, an

algorithm can be developed to identify the cervical

ROI, regardless of position or distance from the

colposcope to the cervix. Further improvements are

expected as we complete our clinical study.

5 CONCLUSIONS

In this paper, an image analysis-based approach for

screening for cervical cancer was presented.

Preliminary results indicate that the proposed method

can segment images of the cervix, reduce the effect of

glare from light sources, remove specular reflections

and other artifacts, and successfully detect lesions.

While the method was validated using sample images

from the Atlas of Colposcopy and the Fernandes

dataset, extensive analysis must be conducted using a

variety of images collected in the field to improve the

sensitivity and specificity of the method in obtaining

the cervical ROI and detecting cervical dysplasia.

Validation of this approach during clinical trials is

being pursued by the authors as the first step towards

developing low-cost bioinformatics-based screening

tools for early detection of cervical cancer. Accurate

automatic detection of cervical dysplasia can prove to

be crucial in regions where medical experts or clinical

resources are not available.

REFERENCES

World Health Organization (WHO). (2019). Cervical Cancer.

https://www.who.int/cancer/prevention/diagnosis-screening/cervical-cancer/en/

Bai, B., Liu, P. Z., Du, Y. Z., & Luo, Y. M. (2018).

Automatic segmentation of cervical region in

colposcopic images using K-means. Australas Phys

Eng Sci Med, 41(4), 1077-1085.

https://doi.org/10.1007/s13246-018-0678-z

Basu, P., & Sankaranarayanan, R. (2017). Atlas of

Colposcopy – Principles and Practice. IARC

CancerBase. https://screening.iarc.fr/atlascolpo.php

Bratti, M. C., Rodríguez, A. C., Schiffman, M., Hildesheim,

A., Morales, J., Alfaro, M., Herrero, R. (2004).

Description of a seven-year prospective study of human

papillomavirus infection and cervical neoplasia among

10000 women in Guanacaste, Costa Rica. Rev Panam

Salud Publica, 15(2), 75-89.

https://doi.org/10.1590/s1020-49892004000200002

Burger, W., & Burge, M. J. (2016). Digital Image

Processing (2 ed.). Springer-Verlag London.

https://doi.org/10.1007/978-1-4471-6684-9

Castle, P. E., Stoler, M. H., Solomon, D., & Schiffman, M.

(2007). The relationship of community biopsy-

diagnosed cervical intraepithelial neoplasia grade 2 to

the quality control pathology-reviewed diagnoses: an

ALTS report. Am J Clin Pathol, 127(5), 805-815.

https://doi.org/10.1309/PT3PNC1QL2F4D2VL

Das, A., Avijit, K., & Bhattacharyya, D. (2011).

Elimination of specular reflection and identification of

ROI: The first step in automated detection of Cervical

Cancer using Digital Colposcopy. 2011 IEEE

International Conference on Imaging Systems and

Techniques.

Das, A., & Choudhury, A. (2017). A novel humanitarian

technology for early detection of cervical neoplasia:

ROI extraction and SR detection. 2017 IEEE Region 10

Humanitarian Technology Conference (R10-HTC),

Dhaka.

Fernandes, K., Cardoso, J. S., & Fernandes, J. (2017).

Transfer Learning with Partial Observability Applied to

Cervical Cancer Screening. In L. A. Alexandre, J.

Salvador Sánchez, & J. M. F. Rodrigues, Pattern

Recognition and Image Analysis Cham.

Gerig, G., Jomier, M., & Chakos, M. (2001). Valmet: A

New Validation Tool for Assessing and Improving 3D

Object Segmentation. In W. J. Niessen & M. A.

Viergever, Medical Image Computing and Computer-

Assisted Intervention – MICCAI 2001 Berlin,

Heidelberg.

Greenspan, H., Gordon, S., Zimmerman, G., Lotenberg, S.,

Jeronimo, J., Antani, S., & Long, R. (2009). Automatic

detection of anatomical landmarks in uterine cervix

images. IEEE Trans Med Imaging, 28(3), 454-468.

https://doi.org/10.1109/TMI.2008.2007823

Guo, P., Xue, Z., Long, L. R., & Antani, S. (2020). Cross-

Dataset Evaluation of Deep Learning Networks for

Uterine Cervix Segmentation. Diagnostics (Basel),

10(1).

Hu, L., Bell, D., Antani, S., Xue, Z., Yu, K., Horning, M.

P., Schiffman, M. (2019). An Observational Study of

Deep Learning and Automated Evaluation of Cervical

Images for Cancer Screening. J Natl Cancer Inst,

111(9), 923-932. https://doi.org/10.1093/jnci/djy225

Kudva, V., & Prasad, K. (2018). Pattern Classification of

Images from Acetic Acid-Based Cervical Cancer

Screening: A Review. Crit Rev Biomed Eng, 46(2),

117-133.

https://doi.org/10.1615/CritRevBiomedEng.2018026017

Lange, H. (2005). Automatic glare removal in reflectance

imagery of the uterine cervix. Proceedings of SPIE -

The International Society for Optical Engineering,

Li, W., & Poirson, A. (2006). Detection and

Characterization of Abnormal Vascular Patterns in

Automated Cervical Image Analysis. In G. Bebis, R.

Boyle, B. Parvin, D. Koracin, P. Remagnino, A. Nefian,

G. Meenakshisundaram, V. Pascucci, J. Zara, J.

Molineros, H. Theisel, & T. Malzbender, Advances in

Visual Computing Berlin, Heidelberg.

Luo, M., Ma, Y.-F., & Zhang, H.-J. (2003). A Spatial

Constrained K-means Approach to Image

Segmentation. Joint Conference of the Fourth

International Conference on Information,

Communications and Signal Processing, 2003 and

Fourth Pacific Rim Conference on Multimedia

Singapore.

Meslouhi, O., Kardouchi, M., Allali, H., Gadi, T., &

Benkaddour, Y. (2011). Automatic detection and

inpainting of specular reflections for colposcopic

images. Open Computer Science, 1(3).

Sankaranarayanan, R., Shastri, S. S., Basu, P., Mahé, C.,

Mandal, R., Amin, G., Dinshaw, K. (2004). The role of

low-level magnification in visual inspection with acetic

acid for the early detection of cervical neoplasia.

Cancer Detect Prev, 28(5), 345-351.

https://doi.org/10.1016/j.cdp.2004.04.004

Sankaranarayanan, R., Wesley, R., Thara, S., Dhakad, N.,

Chandralekha, B., Sebastian, P., Nair, M. K. (2003).

Test characteristics of visual inspection with 4% acetic

acid (VIA) and Lugol's iodine (VILI) in cervical cancer

screening in Kerala, India. Int J Cancer, 106(3), 404-

408. https://doi.org/10.1002/ijc.11245

Schiffman, M., & Adrianza, M. E. (2000). ASCUS-LSIL

Triage Study. Design, methods and characteristics of

trial participants. Acta Cytol, 44(5), 726-742.

https://doi.org/10.1159/000328554

Tariq, H., & Burney, S. M. A. (2014). K-Means Cluster

Analysis for Image Segmentation. International

Journal of Computer Applications, 96.
