Spatial User Interaction: What Next?

Khadidja Chaoui¹ᵃ, Sabrina Bouzidi-Hassini¹ᵇ and Yacine Bellik²ᶜ

¹ Laboratoire des Méthodes de Conception de Systèmes (LMCS), Ecole nationale Supérieure d'Informatique (ESI), BP 68M Oued-Smar, 16270 Alger, Algeria

² Laboratoire Interdisciplinaire des Sciences du Numérique, Université Paris-Saclay, Orsay, France

Keywords: Spatial User Interaction, Interaction Design, Framework, Smart Environments, Ambient Intelligence.

Abstract: Spatial user interaction refers to interactions that use spatial attributes or spatial relations between entities to trigger system functions. Spatial user interaction can offer intuitive interactions between users and their environment, particularly in ubiquitous environments where interconnected objects are in constant interaction. Many research works have demonstrated the relevance of the spatial user interaction paradigm. Nevertheless, only a few have sought to standardize the concepts needed for designing spatial applications or to propose software tools that support spatial user interaction. The present work reviews the literature on spatial user interaction design and development. It analyses papers dealing with applications supporting spatial user interaction. It shows a lack of generic tools for designing and developing such applications and proposes directions for future research in this field.

1 INTRODUCTION

Nowadays, IT (Information Technology) goes beyond the boundaries of professional areas to dominate our daily activities. This implies the use of technology by a variety of users with different skills, preferences and ages. Human-computer interaction models have moved from textual (Command-Line Interface) and graphical (WIMP¹) ones to natural (post-WIMP) ones. This remarkable evolution is driven by the need for a more natural and intuitive relation between machine and user.

Ubiquitous computing consists of multiple interconnected input and output devices, often embedded in everyday physical objects, which cooperate seamlessly and can be combined to help users accomplish daily tasks. Ubiquitous computing brought new research approaches, like tangible user interfaces (Ishii and Ullmer, 1997), which focus on using physical objects as input (and sometimes output) devices. More recently, researchers have aimed to design ambient environments equipped with multiple sensor technologies, such as interactive media arts (Aylward and Paradiso, 2006) (Tanaka and Gemeinboeck, 2006) and interactive workspaces (Bongers and Mery, 2007). But recent technologies like smartphones, flat displays, certain input devices (RFID², NFC³) and networks (Wifi, Bluetooth) made deeper exploration possible (Huot, 2013). In such environments, the concept of spatial user interaction becomes particularly interesting.

ᵃ https://orcid.org/0000-0001-6877-6858

ᵇ https://orcid.org/0000-0002-0466-7190

ᶜ https://orcid.org/0000-0003-0920-3843

¹ Windows, icons, menus, pointer

Spatial user interaction refers to interactions that result from considering spatial attributes of entities or spatial relations between them to trigger system functions. Many works (academic and commercial) have been proposed. They demonstrated the relevance of the spatial user interaction paradigm. For example, the Teletact device (Bellik and Farcy, 2002) is a triangulating laser telemeter adapted to space perception for the blind. The blind user receives different sound feedback depending on the distance of the obstacle detected by the device. Figure 1 is a photograph of the Teletact mounted on a white cane.

We present in this paper a literature review of spatial user interaction design and development. As we will show later, there is a lack of tools and methods that help in designing applications supporting spatial user interactions.

Figure 1: Teletact mounted on a white cane (Bellik and Farcy, 2002).

The outline of this article is as follows. Section 2 focuses on the spatial user interaction paradigm. Section 3 presents a taxonomy of existing interaction models in order to position spatial user interaction among them. Section 4 then provides a state of the art on spatial user interaction and Section 5 provides a discussion. Finally, we provide conclusions and perspectives of this work.

² Radio Frequency IDentification

³ Near Field Communication

Chaoui, K., Bouzidi-Hassini, S. and Bellik, Y. Spatial User Interaction: What Next? DOI: 10.5220/0010836700003124. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 2: HUCAPP, pages 163-170. ISBN: 978-989-758-555-5; ISSN: 2184-4321. Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

2 SPATIAL USER INTERACTION

There is not yet a consensus on the definition of the concept of spatial user interaction. This is why we propose the following one: “Spatial user interaction refers to interactions that result from considering spatial attributes or spatial relations between entities to trigger system functions” (Chaoui et al., 2020).

Spatial user interaction can be implicit or explicit depending on the user’s intention. If the user intentionally acts in order to trigger a certain system function, then the interaction is said to be explicit. On the contrary, if the system’s function is triggered without the user’s intention, then the interaction is said to be implicit. Note that explicit interactions can be performed by using input interaction devices or sensors, but implicit interactions are performed only with sensors.

Proxemic interaction represents a particular research topic within spatial user interaction. System functions are triggered according to the proxemic relationships between users and devices. Four variables are defined for building and interpreting the interaction between people and a system within the ecology of devices (Greenberg et al., 2011):

• Distance: determines the level of engagement between the user and the system devices.

• Orientation: provides information about which direction an entity is facing with respect to another.

• Location: indicates the location of the entity.

• Identity: knowledge about the identity of a person, or a particular device.
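As a minimal sketch of how these four variables might be represented and derived, the following Python fragment computes distance and relative orientation from location and heading. The `Entity` class, its field names and the 2D simplification are illustrative assumptions, not part of Greenberg et al.'s work.

```python
import math
from dataclasses import dataclass


@dataclass
class Entity:
    """Hypothetical record holding the proxemic variables of one entity."""
    identity: str        # Identity: who or what the entity is
    x: float             # Location, in metres (2D for brevity)
    y: float
    heading_deg: float   # direction the entity faces; 0 = +x axis, counter-clockwise


def distance(a: Entity, b: Entity) -> float:
    """Distance: Euclidean separation between the two entities."""
    return math.hypot(b.x - a.x, b.y - a.y)


def relative_orientation(a: Entity, b: Entity) -> float:
    """Orientation: angle in degrees (0..180) between a's heading and the direction from a to b."""
    bearing = math.degrees(math.atan2(b.y - a.y, b.x - a.x))
    diff = (bearing - a.heading_deg + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return abs(diff)


user = Entity("alice", 0.0, 0.0, 0.0)
display = Entity("wall-display", 3.0, 4.0, 180.0)
print(distance(user, display), relative_orientation(user, display))
```

Distance and orientation are derived here from pairs of locations and headings, which is one reason why location tracking often underlies the other variables.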

Proxemic and spatial user interactions may appear similar, but they are not. The main difference between them is that distance is the main spatial attribute used in proxemic interactions, as it defines the level of engagement between the user and the surrounding devices of the system, which is not the case in spatial user interactions. Consequently, spatial user interaction is more general than proxemic interaction, as it defines interactions by considering various spatial attributes and relations other than distance, such as speed, acceleration, weight, etc. Furthermore, spatial user interaction aims to exploit orientation and location in a richer way than proxemic interaction does.

3 TAXONOMY OF INTERACTION MODELS

In order to position spatial user interaction with respect to other existing interaction models, we examined the interaction model classifications proposed in the literature. (Kim and Maher, 2005) distinguish digital and physical interaction models and present a comparison study of two collaborative systems (workbenches) designed according to both models. The digital model is implemented by a graphical interface and the physical one is illustrated by a tangible interface. According to the authors, physical interaction refers to any interaction that uses a physical object for invoking a system’s function. It also refers to any technique applied in the physical space that uses physical objects to interact. We think that classifying models into digital and physical ones is an interesting idea. However, such a classification does not cover all existing models. For example, speech interaction is neither digital nor physical (in the sense of motor activity). So, if we adopt this classification, we cannot place speech interaction anywhere.

The authors of (Grosse-Puppendahl et al., 2017) propose a taxonomy to classify capacitive sensing approaches based on various criteria, such as the sensing goal, the instrumentation of objects in the interaction, etc. Looking closely at this classification, we notice that the interactions it covers are all sensor-based.

HUCAPP 2022 - 6th International Conference on Human Computer Interaction Theory and Applications

We think that it is important to clarify the difference between the concepts of sensors and input devices. We consider that input devices are provided with a set of predefined events (such as mouse click, mouse double click, mouse move, keyboard key press, keyboard key release, etc.) which describe how to use the device. The designer/developer can use these events to implement an application. Events then serve as building blocks (or an alphabet) for the considered interaction language. On the other hand, sensors produce information on the state of a given entity (temperature, brightness, location, speed, etc.). This information can be delivered to the developer continuously (at a given frequency) or as a response to a request. Note that an input device can also provide information on its state, but this is generally done only at the request of the developer and not continuously.
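The distinction can be sketched in code. In the hypothetical Python classes below, an `InputDevice` exposes a fixed vocabulary of events that the application subscribes to, whereas a `Sensor` only reports state, either on request or as a series of samples. The class and method names are illustrative assumptions and do not correspond to any particular toolkit.

```python
from typing import Callable, Dict, List


class InputDevice:
    """Exposes a predefined set of events; the application subscribes to them."""

    def __init__(self, events: List[str]) -> None:
        self._handlers: Dict[str, List[Callable[[], None]]] = {e: [] for e in events}

    def on(self, event: str, handler: Callable[[], None]) -> None:
        # Raises KeyError for events outside the predefined vocabulary.
        self._handlers[event].append(handler)

    def emit(self, event: str) -> None:
        for handler in self._handlers[event]:
            handler()


class Sensor:
    """Reports the state of an entity; it has no notion of events."""

    def __init__(self, read_fn: Callable[[], float]) -> None:
        self._read_fn = read_fn

    def read(self) -> float:
        """On-request mode: one reading per call."""
        return self._read_fn()

    def sample(self, n: int) -> List[float]:
        """Stand-in for periodic reporting: n consecutive readings."""
        return [self._read_fn() for _ in range(n)]


clicks: List[str] = []
mouse = InputDevice(["click", "double_click", "move"])
mouse.on("click", lambda: clicks.append("click"))
mouse.emit("click")

readings = iter([21.5, 21.7, 21.6])          # simulated temperature values
thermometer = Sensor(lambda: next(readings))
```

With a sensor, it is up to the designer to decide which state changes count as meaningful events, which is part of what makes sensor-based interaction harder to specify.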

Defining a classification of interaction models is not an easy task, as models evolve and new ones emerge from time to time. To obtain a robust classification, we prefer to define general questions that allow each model to be positioned according to the answers provided. We list three general questions: (1) Where does the interaction take place? (2) What means are used to perform the interaction? (3) What is the complexity level of the interaction language structure? We explain these items in the following paragraphs and summarize the answers to these questions for the most used interaction models in Table 1.

Q1: Where Does the Interaction Take Place?

Here we mean where the manipulation of the target objects of interaction takes place: they can be in the digital world (graphical and touch interactions), in the real world (tangible and spatial user interactions), or in both worlds (mixed reality).

Q2: What Means Are Used to Perform the Interaction?

The means used to perform the interaction can be the user’s body or parts of it (hands and facial expressions for sign language, vocal cords for speech, the user’s position for spatial user interaction), physical objects (such as in tangible or spatial user interaction), interaction devices (mouse and keyboard in GUI), sensors (ambient and spatial user interaction), or a combination of several of these means.

Q3: What Is the Level of Complexity of the Interaction Language?

The complexity of the interaction language used may vary from a low to a very high level. Some interaction languages are based on a small set of events (graphical, spatial, and tangible models), while others use a certain number of commands (shell language). We may also find some languages with a simple grammar (voice commands) and some languages with a more complex grammar (natural language, sign language, etc.).

We thus conclude that several parameters should be considered to distinguish interaction models. These parameters must be technology independent because interaction models are constantly evolving. In our work, we propose three parameters: the place, the means and the interaction language. According to Table 1, spatial user interaction is a real-world interaction that is object-based or body-based and uses an event-based language.
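To illustrate how the three parameters position a model, the sketch below encodes the answers of Table 1 as a small lookup structure and queries it. The `Classification` type, the shortened labels and the `models_where` helper are illustrative assumptions; only the cell values come from Table 1.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Classification:
    place: str                  # Q1: where the interaction takes place
    means: Tuple[str, ...]      # Q2: means used to perform the interaction
    language: Tuple[str, ...]   # Q3: structure of the interaction language


# Answers transcribed from Table 1, with labels shortened for the example.
MODELS: Dict[str, Classification] = {
    "command-line": Classification("digital", ("device",), ("commands",)),
    "graphical": Classification("digital", ("device",), ("events",)),
    "speech": Classification("digital or real", ("body",), ("grammar",)),
    "gesture": Classification("digital or real", ("body",), ("events", "grammar")),
    "touch": Classification("digital", ("device",), ("events",)),
    "tangible": Classification("real", ("objects",), ("events",)),
    "spatial": Classification("real", ("body", "objects"), ("events",)),
}


def models_where(place: str, language: str) -> List[str]:
    """Names of the models matching a given place (Q1) and language kind (Q3)."""
    return sorted(
        name for name, c in MODELS.items()
        if c.place == place and language in c.language
    )


print(models_where("real", "events"))
```

In this encoding, tangible and spatial user interaction share the same place and language and differ only in the means (Q2), where spatial user interaction also admits the user's body.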

4 STATE OF THE ART

We present in this section a literature review of applications supporting spatial user interaction and of tools for spatial interface development.

4.1 Applications Supporting Spatial User Interaction

To analyze existing works on spatial user interaction,

we carried out a targeted study broken down into two

main stages: (i) research of works relating to spatial

user interaction (ii) analysis and classification of these

works according to spatial properties used to support

spatial user interaction. Table 2 summarizes works

that we analyzed from the literature and classify them

according to 3 spatial properties used by these works:

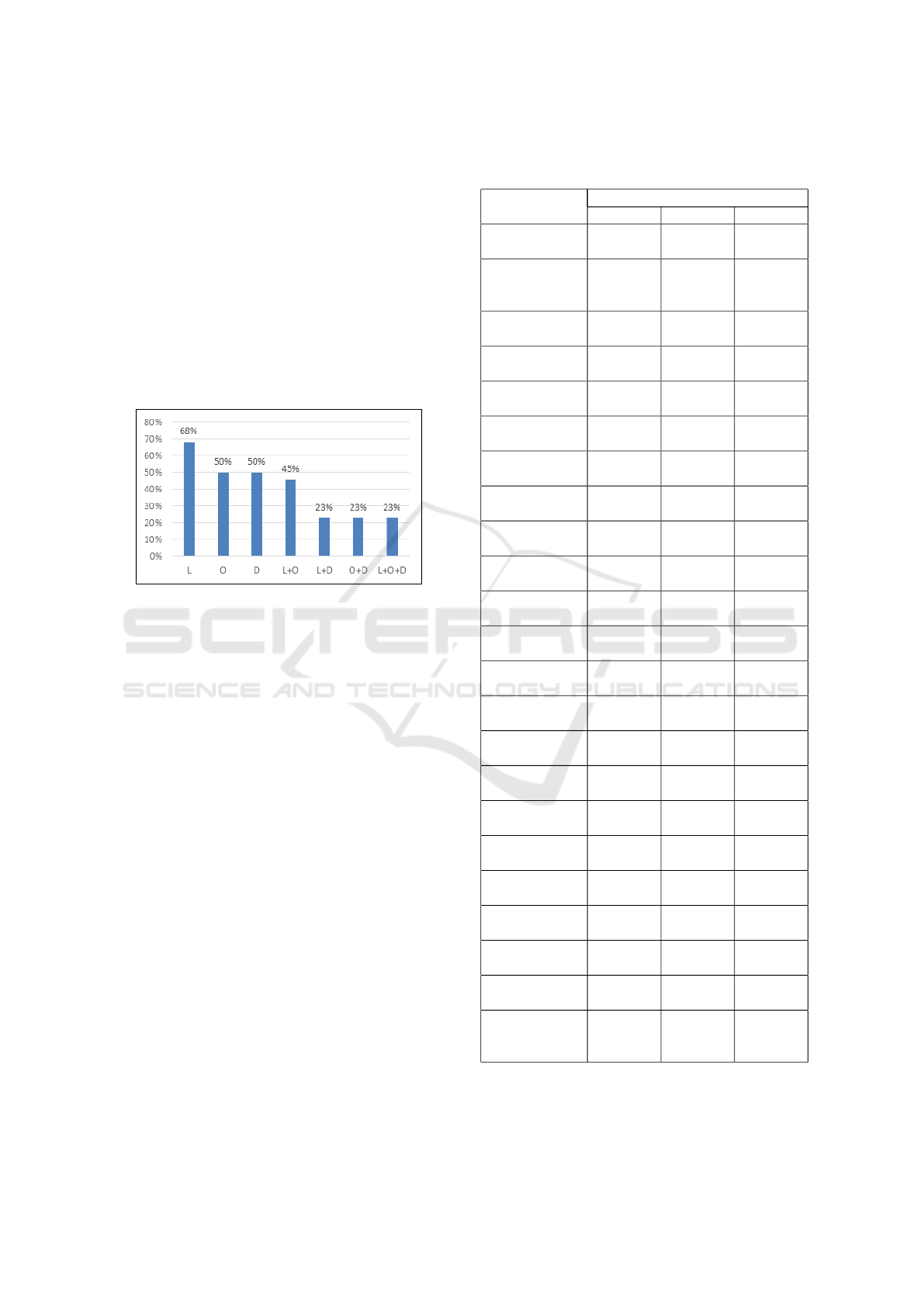

Location, Orientation and Distance. Figure 2 shows

the percentage of the analyzed systems that use each

spatial property or combination of them. For instance,

we found that 68% of the analyzed systems use Loca-

tion.
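The kind of tally behind such percentages can be sketched as follows. The dictionary transcribes the rows of Table 2 (L: Location, O: Orientation, D: Distance); the variable and function names are illustrative. Counting the rows as listed gives 16 of 23 systems using Location (about 70%), in the same range as the figure quoted above; the text does not detail how Figure 2 was computed, so the small gap is left unexplained here.

```python
from collections import Counter

# Spatial properties per system, transcribed from Table 2.
SYSTEMS = {
    "Addlesee et al., 2001": "L",
    "Parle and Quigley, 2006": "L",
    "Bruner, 2012": "L",
    "Lee et al., 2013": "L",
    "Schüsselbauer et al., 2018": "L",
    "Zhang et al., 2014": "O",
    "Tandler et al., 2001": "D",
    "Falk et al., 2001": "D",
    "Prante et al., 2003": "D",
    "Rekimoto et al., 2003": "D",
    "Roussel et al., 2004": "D",
    "Ishikawa et al., 2017": "D",
    "Li et al., 2009": "LO",
    "Chan et al., 2013": "LO",
    "Hakulinen et al., 2013": "LO",
    "Rateau et al., 2014": "LO",
    "Zhang et al., 2018": "LO",
    "Patel et al., 2006": "LOD",
    "Holzmann, 2011": "LOD",
    "Jakobsen et al., 2013": "LOD",
    "Looker and Garvey, 2016": "LOD",
    "Huo et al., 2018": "LOD",
    "Garcia-Macias et al., 2019": "LOD",
}


def uses(prop: str) -> int:
    """Number of systems whose property set includes the given property."""
    return sum(prop in props for props in SYSTEMS.values())


by_combination = Counter(SYSTEMS.values())   # systems per exact combination
share_location = uses("L") / len(SYSTEMS)    # fraction of systems using Location

print(by_combination, round(100 * share_location, 1))
```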

4.1.1 Location

Location represents object coordinates in the physical space, usually in 3D space (x, y, z). Different applications use this information to implement spatial interaction between the user and the system. In the Follow-Me system (Addlesee et al., 2001), the user moves around a given place while the system tracks her/his location and the interface of the application follows her/him, allowing her/him to use the nearest appropriate input device. Proximo (Parle and Quigley, 2006) is a location-sensitive mobile application that helps users to keep track of different objects using Bluetooth technology. The curb (Bruner, 2012) was initially an application for retrieving building information and evolved into a registration mobile application that allows users to control access to their rooms. SpaceTop (Lee et al., 2013) allows users to type, click, draw in 2D and directly manipulate interface elements that float in the 3D space above the keyboard.

In Spatially-Aware Tangibles Using Mouse Sensors (Schüsselbauer et al., 2018) the authors demonstrate a simple technique that allows tangible objects to track their own position on a surface using an off-the-shelf optical mouse sensor.

Table 1: Answers to the classification questions according to most used interaction models.

| | Command-Line Interface | Graphical User Interface | Speech interaction | Gesture interaction | Touch interaction | Tangible interaction | Spatial User Interaction |
|---|---|---|---|---|---|---|---|
| Q1 | Digital world | Digital world | Digital or real world | Digital or real world | Digital world | Real world | Real world |
| Q2 | Device (keyboard) | Devices (mouse, keyboard) | User’s body (vocal cords) | User’s body (hands, facial expressions) | Device (touch screen) | Physical objects | User’s body, physical objects or a combination of them |
| Q3 | Commands | Events | Grammar | Events, Grammar (sign language) | Events | Events | Events |
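A location-based behaviour such as "use the nearest appropriate input device", described for the Follow-Me system in Section 4.1.1, can be sketched as a nearest-neighbour lookup over tracked coordinates. This is not the Follow-Me implementation; the device names, coordinates and the `nearest_device` function are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def nearest_device(
    user: Point,
    devices: Dict[str, Point],
    max_range: float = math.inf,
) -> Optional[str]:
    """Return the name of the closest device strictly within max_range, or None."""
    best_name: Optional[str] = None
    best_dist = max_range
    for name, position in devices.items():
        d = math.dist(user, position)   # Euclidean distance (Python 3.8+)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name


devices = {
    "desk-terminal": (0.0, 0.0, 1.0),
    "wall-screen": (5.0, 0.0, 1.5),
    "kiosk": (9.0, 3.0, 1.0),
}
print(nearest_device((4.0, 1.0, 1.7), devices))
```

The optional `max_range` lets the application decide that no device is close enough, in which case the interface would simply stay where it is.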

4.1.2 Orientation

Orientation describes which direction an object is facing relative to another one. HOBS (Zhang et al., 2014) is an application that uses a selection technique based on the orientation of the head to interact remotely with smart objects in physical space. Note that the orientation attribute is generally used together with the position attribute; it is rarely used on its own in a system.
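Orientation-based selection of this kind can be sketched as picking the object whose direction makes the smallest angle with the head direction. The code below is a generic geometric illustration and not the HOBS algorithm; the 15-degree threshold, the object names and the function names are assumptions.

```python
import math
from typing import Dict, Optional, Tuple

Vec = Tuple[float, float, float]


def angle_between(u: Vec, v: Vec) -> float:
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def select_by_head_orientation(
    head_pos: Vec,
    head_dir: Vec,                # must be non-zero
    objects: Dict[str, Vec],
    max_angle_deg: float = 15.0,  # hypothetical selection cone half-angle
) -> Optional[str]:
    """Return the object closest to the head direction, within the selection cone."""
    best: Optional[str] = None
    best_angle = max_angle_deg
    for name, pos in objects.items():
        to_obj = tuple(p - h for p, h in zip(pos, head_pos))
        if not any(to_obj):       # object located exactly at the head position
            continue
        angle = angle_between(head_dir, to_obj)
        if angle <= best_angle:
            best, best_angle = name, angle
    return best


objects = {"lamp": (2.0, 0.1, 0.0), "tv": (0.0, 3.0, 0.0), "speaker": (3.0, 1.0, 0.0)}
print(select_by_head_orientation((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), objects))
```

The example needs both the head position and the object positions, which reflects the remark above that orientation is rarely used without location.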

4.1.3 Distance

Distance represents the amount of space that separates two objects. It is often referred to by the term “proximity”. Spatial interactions based on distance do not depend on specific locations but rather on the space between these locations. This is particularly true in the case of two mobile entities. For instance, ConnecTable (Tandler et al., 2001) is a system that allows two screens to be coupled together to form a single screen when they are close, whatever their precise locations are. Pirates! (Falk et al., 2001) is a multiplayer computer game which runs on handheld computers connected over a wireless LAN. The virtual game environment is maintained and controlled by a local server which also keeps track of the players’ progress over time. The Hello Wall (Prante et al., 2003) is a public display system that presents information of general interest when no one is in the immediate vicinity and provides more personal information when a user is in close proximity. Proximal Interactions (Rekimoto et al., 2003) is a wall-sized ambient display supporting three different zones of interaction, which depend on the user’s distance. MirrorSpace (Roussel et al., 2004) is a multi-user interactive communication system that depends on distance. It creates a continuum of space for interpreting interpersonal relationships. Novest (Ishikawa et al., 2017) is an interface that uses the back of the hand to estimate the location of the finger and to classify its state.
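Distance-dependent behaviour with several zones, as in the public and ambient displays described above, can be sketched as a mapping from a measured distance to a named zone. The zone names and boundaries below are hypothetical and are not taken from any of the cited systems.

```python
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical zone boundaries in metres; a real system would calibrate its own.
ZONES: List[Tuple[float, str]] = [
    (1.0, "interaction"),    # distance < 1.0 m: personal information
    (3.0, "notification"),   # 1.0 m <= distance < 3.0 m
]
OUTER_ZONE = "ambient"       # 3.0 m and beyond: general-interest information


def zone_for(distance_m: float) -> str:
    """Map a user-to-display distance to the name of the zone it falls in."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    limits = [limit for limit, _ in ZONES]
    index = bisect_right(limits, distance_m)
    return ZONES[index][1] if index < len(ZONES) else OUTER_ZONE


for d in (0.5, 2.0, 5.0):
    print(d, zone_for(d))
```

Only the separation between user and display matters here, not where either of them is located, which is the property that distinguishes distance-based interaction from location-based interaction.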

4.1.4 Location and Orientation

Some applications combine location and orientation information to implement spatial interactions. Virtual Shelves (Li et al., 2009) is an interface that allows users to execute commands by spatially accessing predefined locations in the air. Pure Land AR (Land Augmented Reality Edition) (Chan et al., 2013) is an installation that allows users to virtually visit the UNESCO World Heritage-listed Mogao Caves. Interactive Lighting (Hakulinen et al., 2013) is a multi-level software framework that allows the creation of user interfaces to control lighting. Slab (Rateau et al., 2014) is an interactive tablet for exploring 3D medical images. Jaguar (Zhang et al., 2018) is a mobile AR system with flexible object tracking for context awareness.

4.1.5 Location, Orientation and Distance

We also found applications that combine location, orientation and distance. iCam (Patel et al., 2006) is a handheld device that can accurately locate its position and determine its absolute orientation when it is indoors. It can also determine the 3D position of any object with its onboard laser pointer. Mobile spatial interaction (Holzmann, 2011) is a method of estimating the distance and location of arbitrary objects in the field of view of a mobile phone. This approach uses stereo vision to estimate the distance to nearby objects, as well as GPS and a digital compass to get the phone's absolute position and orientation. (Jakobsen et al., 2013) presented a study exploring how to use the proxemic variables among people to drive interaction with information visualizations. Kubit (Looker and Garvey, 2016) is a responsive holographic user interface using proxemic interaction. Scenariot (Huo et al., 2018) is a method that enables spatial context-sensitive interactions and the instant discovery and localization of surrounding smart things. Uasisi (Garcia-Macias et al., 2019) is a proxemic system intended to help the visually impaired to move around physical spaces.

Figure 2: Use of spatial properties in the studied systems (L: Location, O: Orientation, D: Distance).

4.2 Tools for Spatial Interface Development

Usually, user interface design tools refer to meth-

ods, languages, or software used for interface design.

They can significantly facilitate the expression of de-

sign ideas. In order to analyze works on tools that

support the design and implementation of spatial user

interactions, we made a focused study broken down

into two main stages: (i) research of works related

to the design/development of spatial applications, (ii)

setting up a comparative table based on two criteria:

Tool type and Genericity.

Different design tools exist for graphical (Vos

et al., 2018), touch (Khandkar and Maurer, 2010) and

multimodal interfaces (Elouali et al., 2014). How-

ever, concerning spatial user interaction design and

development, there is no attempt to propose tools that

might help designers in their task. Despite the exis-

tence of a few tools for proxemic interactions which

represent a particular point of view of spatial user in-

teraction, where distance plays a fundamental role,

they remain intended for very specific applications.

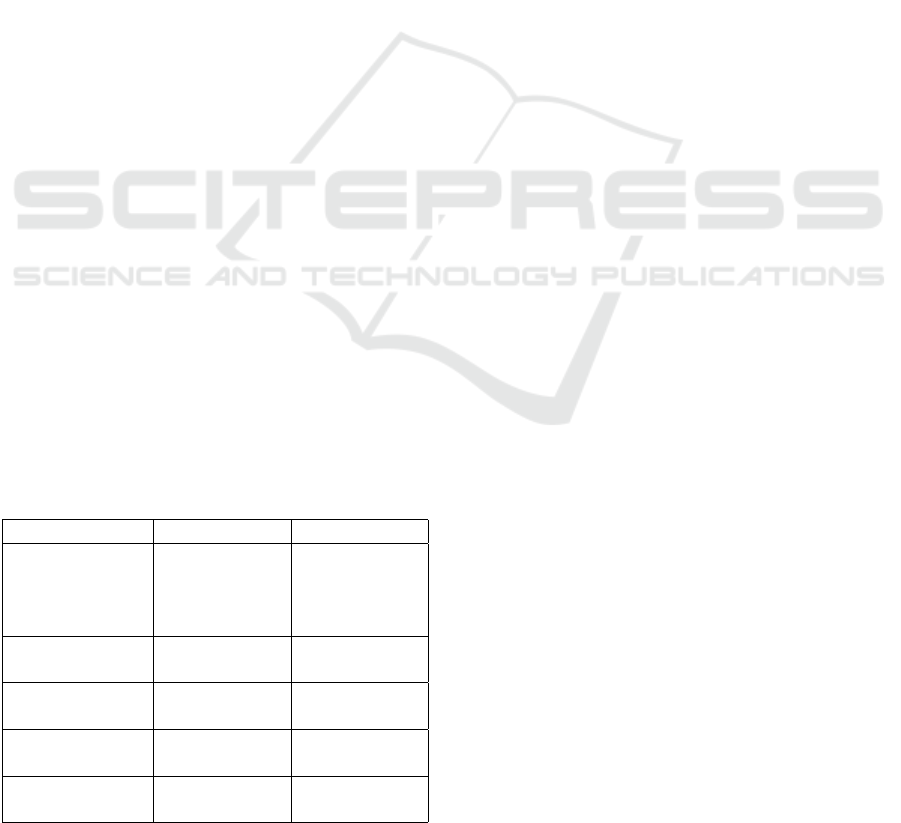

Table 3 summarizes works related to designing

tools that we describe below.

Table 2: Applications supporting spatial user interaction according to considered spatial properties.

| Reference | Location | Orientation | Distance |
|---|---|---|---|
| (Addlesee et al., 2001) | X | | |
| (Parle and Quigley, 2006) | X | | |
| (Bruner, 2012) | X | | |
| (Lee et al., 2013) | X | | |
| (Schüsselbauer et al., 2018) | X | | |
| (Zhang et al., 2014) | | X | |
| (Tandler et al., 2001) | | | X |
| (Falk et al., 2001) | | | X |
| (Prante et al., 2003) | | | X |
| (Rekimoto et al., 2003) | | | X |
| (Roussel et al., 2004) | | | X |
| (Ishikawa et al., 2017) | | | X |
| (Li et al., 2009) | X | X | |
| (Chan et al., 2013) | X | X | |
| (Hakulinen et al., 2013) | X | X | |
| (Rateau et al., 2014) | X | X | |
| (Zhang et al., 2018) | X | X | |
| (Patel et al., 2006) | X | X | X |
| (Holzmann, 2011) | X | X | X |
| (Jakobsen et al., 2013) | X | X | X |
| (Looker and Garvey, 2016) | X | X | X |
| (Huo et al., 2018) | X | X | X |
| (Garcia-Macias et al., 2019) | X | X | X |

(Perez et al., 2020) proposed a proxemic interaction modeling tool based on a DSL (Domain Specific Language). The latter is composed of symbols and formal notations representing proxemic environment concepts, which are: Entity, Cyber Physical System, Identity, Proxemic Zone, Proxemic Environment and Action. From a graphical model, the tool creates an XML schema used to generate executable code. In (Marquardt et al., 2011) the authors presented a proximity toolkit that enables the prototyping of proxemic interactions. It consists of a library designed with a component-oriented architecture and considers the proxemic variables between people, objects, and devices. The toolkit involves four main components: (1) Proximity Toolkit server, (2) Tracking plugin modules, (3) Visual monitoring tool and (4) Application programming interface. A designers’ tool for prototyping ubicomp spaces with proxemic interactions is presented in (Kim et al., 2016). It is built using software and modeling materials such as Photoshop, paper and Lego. Interactions can be defined in Photoshop based on proxemic variables. The tool uses an augmented reality projection onto miniatures to enable tangible interactions and dynamic representations. Hidden marker stickers and a camera-projector system enable the unobtrusive integration of digital images on the physical miniature. SpiderEyes (Dostal et al., 2014) is a system and toolkit for designing attention- and proximity-aware collaborative scenarios around large wall-sized displays, using an information visualization pipeline that can be extended to incorporate proxemic interactions. The authors of (Chulpongsatorn et al., 2020) explored a design for information visualization based on distance. They describe three properties (boundedness, connectedness and cardinality) and five design patterns (subdivision, particalization, peculiarization, multiplication and nesting) that might be considered in design.
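To give a feel for the model-to-XML step mentioned for the DSL-based tool, the sketch below builds a tiny model from some of the concept names listed above (Entity, Identity, Proxemic Zone, Proxemic Environment, Action) and serializes it with Python's standard `xml.etree.ElementTree`. The class fields, XML element names and attributes are hypothetical; they are not the schema of (Perez et al., 2020).

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List


@dataclass
class Entity:
    identity: str   # Identity concept
    kind: str       # e.g. "person" or "device" (hypothetical attribute)


@dataclass
class ProxemicZone:
    name: str
    max_distance_m: float   # hypothetical outer radius of the zone
    action: str             # Action triggered when an entity is in the zone


@dataclass
class ProxemicEnvironment:
    name: str
    entities: List[Entity] = field(default_factory=list)
    zones: List[ProxemicZone] = field(default_factory=list)


def to_xml(env: ProxemicEnvironment) -> str:
    """Serialize the model to an XML string (element names are illustrative)."""
    root = ET.Element("ProxemicEnvironment", name=env.name)
    for e in env.entities:
        ET.SubElement(root, "Entity", identity=e.identity, kind=e.kind)
    for z in env.zones:
        zone = ET.SubElement(
            root, "ProxemicZone", name=z.name, maxDistance=str(z.max_distance_m)
        )
        ET.SubElement(zone, "Action").text = z.action
    return ET.tostring(root, encoding="unicode")


env = ProxemicEnvironment(
    name="lobby",
    entities=[Entity("visitor", "person"), Entity("info-screen", "device")],
    zones=[ProxemicZone("personal", 1.2, "show_details")],
)
xml_text = to_xml(env)
print(xml_text)
```

A code generator would then walk such a document to emit executable code; that step is omitted here.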

Table 3: Summary of design tools for proxemic user interaction.

| Reference | Tool type | Genericity |
|---|---|---|
| (Perez et al., 2020) | Modeling language and prototyping environment | Generic |
| (Marquardt et al., 2011) | Prototyping environment | For specific use |
| (Kim et al., 2016) | Prototyping environment | For specific use |
| (Dostal et al., 2014) | Prototyping environment | For specific use |
| (Chulpongsatorn et al., 2020) | Prototyping environment | For specific use |

5 DISCUSSION

The purpose of this study was to analyze works related to spatial user interaction design and development. Currently, there is a lack of tools and methods that help in designing applications supporting spatial user interactions. Accordingly, such a literature review can contribute to the body of knowledge in this area.

In the following, we discuss the results of our review.

5.1 Spatial User Interaction Design Gaps

According to the literature review, most of the proposed works aim to demonstrate the relevance of the spatial user interaction technique in itself rather than to show how to specify it in a generic and reusable way. Hence, there is a lack of generic approaches and tools capable of handling the process of building applications supporting spatial user interaction. Furthermore, existing works do not take into account all the possibilities of spatial interaction. They address only proxemic interactions, which are mainly based on distance. Moreover, the proposed tools target the prototyping of specific applications, except the recent work of (Perez et al., 2020), which defines a language for proxemic interaction specification.

5.2 Spatial User Interaction Design Next Step

For future work, we think that it will be necessary to define a modeling language for spatial user interaction. On the one hand, such a language would specify which concepts to use, in which cases, and how to specify different interactions. In this way, designers/developers could easily refer to them when building applications supporting spatial user interaction. On the other hand, richer spatial user interactions could be exploited more systematically by designers through the spatial user interaction modeling language. Furthermore, it would help to develop modeling and code generation frameworks for automating parts of development.
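As a purely hypothetical illustration of what a declarative specification of spatial interactions could look like, the sketch below describes each interaction as a condition on a spatial attribute that triggers a named system function. It is not the modeling language envisioned by the authors; the `Rule` structure, the attribute names and the function names are all assumptions.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict, List

COMPARATORS: Dict[str, Callable[[float, float], bool]] = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
}


@dataclass(frozen=True)
class Rule:
    attribute: str    # spatial attribute or relation, e.g. "distance", "speed"
    comparator: str   # one of the keys of COMPARATORS
    threshold: float
    function: str     # name of the system function to trigger


def triggered(rules: List[Rule], state: Dict[str, float]) -> List[str]:
    """Return the functions whose condition holds for the observed spatial state."""
    fired = []
    for rule in rules:
        if rule.attribute in state and COMPARATORS[rule.comparator](
            state[rule.attribute], rule.threshold
        ):
            fired.append(rule.function)
    return fired


rules = [
    Rule("distance", "<", 1.5, "wake_display"),
    Rule("speed", ">", 2.0, "dim_lights"),
    Rule("orientation", "<=", 20.0, "select_target"),
]
state = {"distance": 1.0, "speed": 0.5, "orientation": 20.0}
print(triggered(rules, state))
```

Because the rules are data rather than code, they could in principle be produced by a graphical editor and consumed by a code generator, which is the kind of automation discussed above.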

6 CONCLUSIONS

Spatial user interaction promotes intuitive and simple exchanges between users and the system. The purpose of the present paper is to present a literature review and an analysis relevant to this topic.

In order to position the spatial user interaction model among the existing ones, we proposed a classification that takes into account three parameters: interaction place, interaction means and interaction language.

Next, we presented a literature review of existing spatial applications and design tools. Based on the review results, we identify a lack of generic approaches and tools capable of supporting the process of building applications with spatial capabilities. Little attention has been given to the way spatial user interactions are designed, despite the fact that many works have proven the usefulness of spatial user interaction, as it offers natural and intuitive exchanges between users and systems. Several applications that support spatial user interaction exist, each exploiting some spatial attributes.

In order to respond to the shortcomings pointed out in the literature, we are currently working on a modeling language for spatial interactions.

REFERENCES

Addlesee, M., Curwen, R., Hodges, S., Newman, J., Steggles, P., Ward, A., and Hopper, A. (2001). Implementing a sentient computing system. Computer, 34(8):50–56.

Aylward, R. and Paradiso, J. A. (2006). Sensemble: a wireless, compact, multi-user sensor system for interactive dance. In Proceedings of the 2006 conference on New interfaces for musical expression, pages 134–139. Citeseer.

Bellik, Y. and Farcy, R. (2002). Comparison of various interface modalities for a locomotion assistance device. In International Conference on Computers for Handicapped Persons, pages 421–428. Springer.

Bongers, B. and Mery, A. (2007). Interactivated reading table. University of Technology Sydney, Cairns, QLD, Australia. Retrieved Apr, 6:2010.

Bruner, R. D. (2012). Exploring spatial interactions. PhD thesis.

Chan, L. K. Y., Kenderdine, S., and Shaw, J. (2013). Spatial user interface for experiencing mogao caves. In Proceedings of the 1st symposium on Spatial user interaction, pages 21–24.

Chaoui, K., Bouzidi-Hassini, S., and Bellik, Y. (2020). Towards a specification language for spatial user interaction. In Symposium on Spatial User Interaction, pages 1–2.

Chulpongsatorn, N., Yu, J., and Knudsen, S. (2020). Exploring design opportunities for visually congruent proxemics in information visualization: A design space.

Dostal, J., Hinrichs, U., Kristensson, P. O., and Quigley, A. (2014). Spidereyes: designing attention-and proximity-aware collaborative interfaces for wall-sized displays. In Proceedings of the 19th international conference on Intelligent User Interfaces, pages 143–152.

Elouali, N., Le Pallec, X., Rouillard, J., and Tarby, J.-C. (2014). Mimic: leveraging sensor-based interactions in multimodal mobile applications. In CHI’14 Extended Abstracts on Human Factors in Computing Systems, pages 2323–2328.

Falk, J., Ljungstrand, P., Björk, S., and Hansson, R. (2001). Pirates: proximity-triggered interaction in a multi-player game. In CHI’01 extended abstracts on Human factors in computing systems, pages 119–120.

Garcia-Macias, J. A., Ramos, A. G., Hasimoto-Beltran, R., and Hernandez, S. E. P. (2019). Uasisi: a modular and adaptable wearable system to assist the visually impaired. Procedia Computer Science, 151:425–430.

Greenberg, S., Marquardt, N., Ballendat, T., Diaz-Marino, R., and Wang, M. (2011). Proxemic interactions: the new ubicomp? interactions, 18(1):42–50.

Grosse-Puppendahl, T., Holz, C., Cohn, G., Wimmer, R., Bechtold, O., Hodges, S., Reynolds, M. S., and Smith, J. R. (2017). Finding common ground: A survey of capacitive sensing in human-computer interaction. In Proceedings of the 2017 CHI conference on human factors in computing systems, pages 3293–3315.

Hakulinen, J., Turunen, M., and Heimonen, T. (2013). Spatial control framework for interactive lighting. In Proceedings of International Conference on Making Sense of Converging Media, pages 59–66.

Holzmann, C., . H. M. (2011). Vision-based distance and position estimation of nearby objects for mobile spatial interaction. In Proceedings of the 16th international conference on Intelligent user interfaces, pages 339–342.

Huo, K., Cao, Y., Yoon, S. H., Xu, Z., Chen, G., and Ramani, K. (2018). Scenariot: Spatially mapping smart things within augmented reality scenes. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, pages 1–13.

Huot, S. (2013). ’Designeering Interaction’: A Missing Link in the Evolution of Human-Computer Interaction. PhD thesis.

Ishii, H. and Ullmer, B. (1997). Tangible bits: towards seamless interfaces between people, bits and atoms. In Proceedings of the ACM SIGCHI Conference on Human factors in computing systems, pages 234–241.

Ishikawa, Y., Shizuki, B., and Hoshino, J. (2017). Evaluation of finger position estimation with a small ranging sensor array. In Proceedings of the 5th Symposium on Spatial User Interaction, pages 120–127.

Jakobsen, M. R., Haile, Y. S., Knudsen, S., and Hornbæk, K. (2013). Information visualization and proxemics: Design opportunities and empirical findings. IEEE transactions on visualization and computer graphics, 19(12):2386–2395.

Khandkar, S. H. and Maurer, F. (2010). A domain specific language to define gestures for multi-touch applications. In Proceedings of the 10th Workshop on Domain-Specific Modeling, pages 1–6.

Kim, H.-J., Kim, J.-W., and Nam, T.-J. (2016). Ministudio: Designers’ tool for prototyping ubicomp space with interactive miniature. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, pages 213–224.

Kim, M. and Maher, M. L. (2005). Comparison of designers using a tangible user interface and a graphical user interface and the impact on spatial cognition. Proc. Human Behaviour in Design, 5.

Lee, J., Olwal, A., Ishii, H., and Boulanger, C. (2013). Spacetop: integrating 2d and spatial 3d interactions in a see-through desktop environment. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 189–192.

Li, F. C. Y., Dearman, D., and Truong, K. N. (2009). Virtual shelves: interactions with orientation aware devices. In Proceedings of the 22nd annual ACM symposium on User interface software and technology, pages 125–128.

Looker, J. and Garvey, T. (2016). Kubit: A responsive and ergonomic holographic user interface for a proxemic workspace. In Advances in Ergonomics in Design, pages 811–821. Springer.

Marquardt, N., Diaz-Marino, R., Boring, S., and Greenberg, S. (2011). The proximity toolkit: prototyping proxemic interactions in ubiquitous computing ecologies. In Proceedings of the 24th annual ACM symposium on User interface software and technology, pages 315–326.

Parle, E. and Quigley, A. (2006). Proximo, location-aware collaborative recommender. School of Computer Science and Informatics, University College Dublin Ireland, pages 1251–1253.

Patel, S. N., Rekimoto, J., and Abowd, G. D. (2006). icam: Precise at-a-distance interaction in the physical environment. In International Conference on Pervasive Computing, pages 272–287. Springer.

Perez, P., Roose, P., Dalmau, M., Cardinale, Y., Masson, D., and Couture, N. (2020). Modélisation graphique des environnements proxémiques basée sur un dsl. In INFORSID 2020, pages 99–14. dblp.

Prante, T., Röcker, C., Streitz, N., Stenzel, R., Magerkurth, C., Van Alphen, D., and Plewe, D. (2003). Hello.wall–beyond ambient displays. In Adjunct Proceedings of Ubicomp, volume 2003, pages 277–278.

Rateau, H., Grisoni, L., and Araujo, B. (2014). Exploring tablet surrounding interaction spaces for medical imaging. In Proceedings of the 2nd ACM symposium on Spatial user interaction, pages 150–150.

Rekimoto, J., Ayatsuka, Y., Kohno, M., and Oba, H. (2003). Proximal interactions: A direct manipulation technique for wireless networking. In Interact, volume 3, pages 511–518.

Roussel, N., Evans, H., and Hansen, H. (2004). Mirrorspace: using proximity as an interface to video-mediated communication. In International Conference on Pervasive Computing, pages 345–350. Springer.

Schüsselbauer, D., Schmid, A., Wimmer, R., and Muth, L. (2018). Spatially-aware tangibles using mouse sensors. In Proceedings of the Symposium on Spatial User Interaction, pages 173–173.

Tanaka, A. and Gemeinboeck, P. (2006). A framework for spatial interaction in locative media. In Proceedings of the 2006 conference on new interfaces for musical expression, pages 26–30. Citeseer.

Tandler, P., Prante, T., Müller-Tomfelde, C., Streitz, N., and Steinmetz, R. (2001). Connectables: dynamic coupling of displays for the flexible creation of shared workspaces. In Proceedings of the 14th annual ACM symposium on User interface software and technology, pages 11–20.

Vos, J., Chin, S., Gao, W., Weaver, J., and Iverson, D. (2018). Using scene builder to create a user interface. In Pro JavaFX 9, pages 129–191. Springer.

Zhang, B., Chen, Y.-H., Tuna, C., Dave, A., Li, Y., Lee, E., and Hartmann, B. (2014). Hobs: head orientation-based selection in physical spaces. In Proceedings of the 2nd ACM symposium on Spatial user interaction, pages 17–25.

Zhang, W., Han, B., and Hui, P. (2018). Jaguar: Low latency mobile augmented reality with flexible tracking. In Proceedings of the 26th ACM international conference on Multimedia, pages 355–363.