Automatic Estimation of Anthropometric Human Body Measurements

Dana Škorvánková (1, a), Adam Riečický (2, b) and Martin Madaras (1, 2, c)

1 Faculty of Mathematics, Physics and Informatics, Comenius University Bratislava, Slovakia

2 Skeletex Research, Slovakia

Keywords:

Computer Vision, Neural Networks, Body Measurements, Human Body Analysis, Anthropometry, Point Clouds.

Abstract:

Research tasks related to human body analysis have drawn a lot of attention in computer vision over the last few decades, given their potential benefits to our day-to-day life. Anthropometry is a field defining physical measures of human body size, form, and functional capacities. Specifically, the accurate estimation of anthropometric body measurements from visual human body data is a challenging problem whose solution would benefit many application areas, including ergonomics and garment manufacturing. This paper presents research in the field of deep learning and neural networks that tackles body measurement estimation from various types of visual input data, such as 2D images or 3D point clouds. We also address the lack of real human data annotated with the ground-truth body measurements required for training and evaluation by generating a synthetic dataset of various human body shapes and performing a skeleton-driven annotation.

1 INTRODUCTION

Analyzing the human body and its motion has been an important field of research for decades. The related tasks attract the attention of many computer vision researchers, mainly due to their wide range of applications, which include surveillance, the entertainment industry, sports performance analysis, ergonomics, human-computer interaction, and garment manufacturing.

Human body analysis covers a number of different tasks, including body part segmentation, pose estimation and body measurement estimation, while capturing the human body in motion brings up additional tasks, such as pose tracking and activity recognition and classification. All of these topics are closely related and are therefore often treated as associated or complementary tasks.

Anthropometric human body measurements capture various statistical data about the human body and its physical properties. They are generally categorized into two groups: static and dynamic measurements. Static, or structural, dimensions include circumferences, lengths, skinfolds and volumetric measurements. Dynamic, or functional, dimensions incorporate link measurements, center-of-gravity measurements, and body landmark locations. In this research, we focus mainly on body circumferences, widths and lengths of particular body parts or limbs, and other distances within the human body. One of the issues in anthropometry is the lack of standardization in body measurements. For this reason, we clarify the definition of each annotated measurement in Section 3.1.2.

a https://orcid.org/0000-0003-3791-495X

b https://orcid.org/0000-0002-1546-0048

c https://orcid.org/0000-0003-3917-4510

For anthropometric body measurement estimation, very little data with ground-truth annotations has been made publicly available. To our knowledge, the only large-scale dataset of real human body scans with manually measured body dimensions is the commercial CAESAR dataset (Robinette et al., 2002), which has not been released for public use. Since annotating real data by tape measuring is exhausting and time-consuming for a potentially large set of human subjects, the usual workaround is to use synthetically generated data. However, in exchange for this relatively fast annotation, a robust method is needed to obtain accurate body measures on the body surface.

The contribution of this paper is fourfold: (1) we examine various 2D and 3D input human body data representations, along with their impact on the ability of a neural network to extract the important features and process them to estimate the true values of a predefined set of body measurements; (2) to deal with the insufficient amount of publicly available data annotated with ground-truth body measurements, we generated a large-scale synthetic dataset of various body shapes in a standard body pose, using a parametric human body model, along with corresponding point clouds, gray-scale and silhouette images, skeleton data, and 16 annotated body measurements; (3) to obtain the ground truth for the 16 measurements on the body models, we established a skeleton-guided annotation pipeline, which can easily be extended to compute more complex and task-specific body dimensions; and finally, (4) we present a method for accurate, automatic, end-to-end human body measurement estimation from a single input frame.

Škorvánková, D., Riečický, A. and Madaras, M.

Automatic Estimation of Anthropometric Human Body Measurements.

DOI: 10.5220/0010878100003124

In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2022) - Volume 4: VISAPP, pages 537-544

ISBN: 978-989-758-555-5; ISSN: 2184-4321

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

Anthropometric body measurement estimation is an emerging problem in various applications, such as garment manufacturing, ergonomics, or surveillance. Automatic estimation of accurate body measures would remove the need to tape-measure human bodies manually. An automated pipeline would also bring consistency to body measuring, which is often hard to maintain when tape-measuring different human subjects. Aside from natural human error and inaccuracies caused by tape measuring, there is ambiguity across different body measuring standards.

Numerous algorithmic strategies have been presented over the years to tackle human body measurement estimation (Guilló et al., 2020; Anisuzzaman et al., 2019; Ashmawi et al., 2019; Song et al., 2017; Dao et al., 2014; Tsoli et al., 2014; Li et al., 2013). However, they often fell short of the required accuracy, efficiency, or computational and complexity requirements. One of the main problems when processing data representing the human body is the irregular and complex structure of its surface. In theory, there are no predefined vertices on the surface of the human body to guide the processing, as there are when analyzing standard 3D objects with corners and edges. Given these theoretical and practical issues, algorithmic approaches have in many settings been replaced with machine learning techniques, such as random forests (Xiaohui et al., 2018) or neural networks (Yan and Kämäräinen, 2021; Wang et al., 2019).

To sufficiently train a machine learning model, a large amount of human body data annotated with ground-truth body measurements is essential. In general, no such large-scale benchmark datasets are currently publicly available for research purposes. The main reason is the exhausting process of manually tape-measuring real human bodies. Therefore, most researchers have used synthetic data instead of real human data. Gonzalez-Tejeda and Mayer (2019) focused on annotating three basic body measurements on a 3D human body model: chest, waist, and pelvis circumference. The annotation method presented in this paper is inspired by their approach; we optimized and adjusted the conditions used to compute the particular measurements and extended the set of measurements by thirteen additional body measures.

2.1 1D Statistical Input Data

Several existing approaches formulate human body measurement estimation as predicting an extended list of advanced body measures from a set of predefined basic body measurements given as input, i.e., from 1D statistical input data (Wang et al., 2019; Liu et al., 2017). Usually, the estimation is based on an end-to-end neural network that maps the easy-to-measure input body dimensions to the detailed body dimensions on the output. However, these methods still require manual tape measuring of a few basic attributes, which may be inconvenient in certain application scenarios.
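As a minimal illustration of this 1D formulation, the sketch below fits an ordinary least-squares linear map from a few easy-to-measure inputs to one detailed measurement. It is a deliberately simpler stand-in for the neural networks used in the cited works (Wang et al. use RBF networks). The choice of inputs (stature and chest circumference) and the numbers in the test are hypothetical.

```python
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b with Gauss-Jordan elimination and partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented copy [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[r][n] for r in range(n)]


def fit_linear_map(basic: Sequence[Sequence[float]], target: Sequence[float]) -> List[float]:
    """Least-squares weights (bias last) mapping basic measures to one detailed measure."""
    rows = [list(x) + [1.0] for x in basic]  # append a constant column for the bias
    k = len(rows[0])
    # Normal equations: (X^T X) w = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, target)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(weights: Sequence[float], basic_row: Sequence[float]) -> float:
    """Apply the fitted map to one row of basic measurements."""
    return sum(w * x for w, x in zip(weights, list(basic_row) + [1.0]))
```

A neural model replaces the single linear map with stacked nonlinear layers, but the input/output contract (a few measured values in, a detailed measurement out) is the same.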

2.2 Image Input Data

Methods that infer from 2D input images were proposed to estimate body measurements from visual data, avoiding the need for manual measuring in deployment. Most frequently, the input data are RGB images (Yan and Kämäräinen, 2021; Anisuzzaman et al., 2019; Shigeki et al., 2018), although the three color channels may not be very beneficial for this particular task, while tripling the amount of input data to process. Thus, several other approaches settled for gray-scale images (Tejeda and Mayer, 2021) as input data and still achieved competitive results.
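Collapsing RGB to one channel is a fixed weighted sum per pixel, so a gray-scale input carries one third as many values as the RGB image. The sketch uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), a common convention assumed here for illustration; the cited works may convert differently.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]

# ITU-R BT.601 luma weights: an assumed, commonly used conversion.
BT601_WEIGHTS = (0.299, 0.587, 0.114)


def rgb_to_gray(image: List[List[Pixel]]) -> List[List[float]]:
    """Collapse an H x W x 3 RGB image into an H x W single-channel image."""
    wr, wg, wb = BT601_WEIGHTS
    return [[wr * r + wg * g + wb * b for (r, g, b) in row] for row in image]
```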

Binary silhouette images of the human body have also been used in some strategies (Gonzalez-Tejeda and Mayer, 2019; Song et al., 2017), suggesting that the contours of the body shape are the most important feature for this task.
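In a binary silhouette, the body contour can be read off as the foreground pixels that touch the background. The sketch below uses 4-connectivity and treats pixels outside the image as background. Both are illustrative assumptions, not a step taken from the cited methods.

```python
from typing import List, Set, Tuple


def silhouette_contour(mask: List[List[int]]) -> Set[Tuple[int, int]]:
    """Return (row, col) of foreground pixels that touch the background.

    A pixel is on the contour if it is foreground (1) and at least one of its
    4-connected neighbours is background (0) or lies outside the image.
    """
    h, w = len(mask), len(mask[0])
    contour = set()
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
                    contour.add((r, c))
                    break
    return contour
```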

VISAPP 2022 - 17th International Conference on Computer Vision Theory and Applications


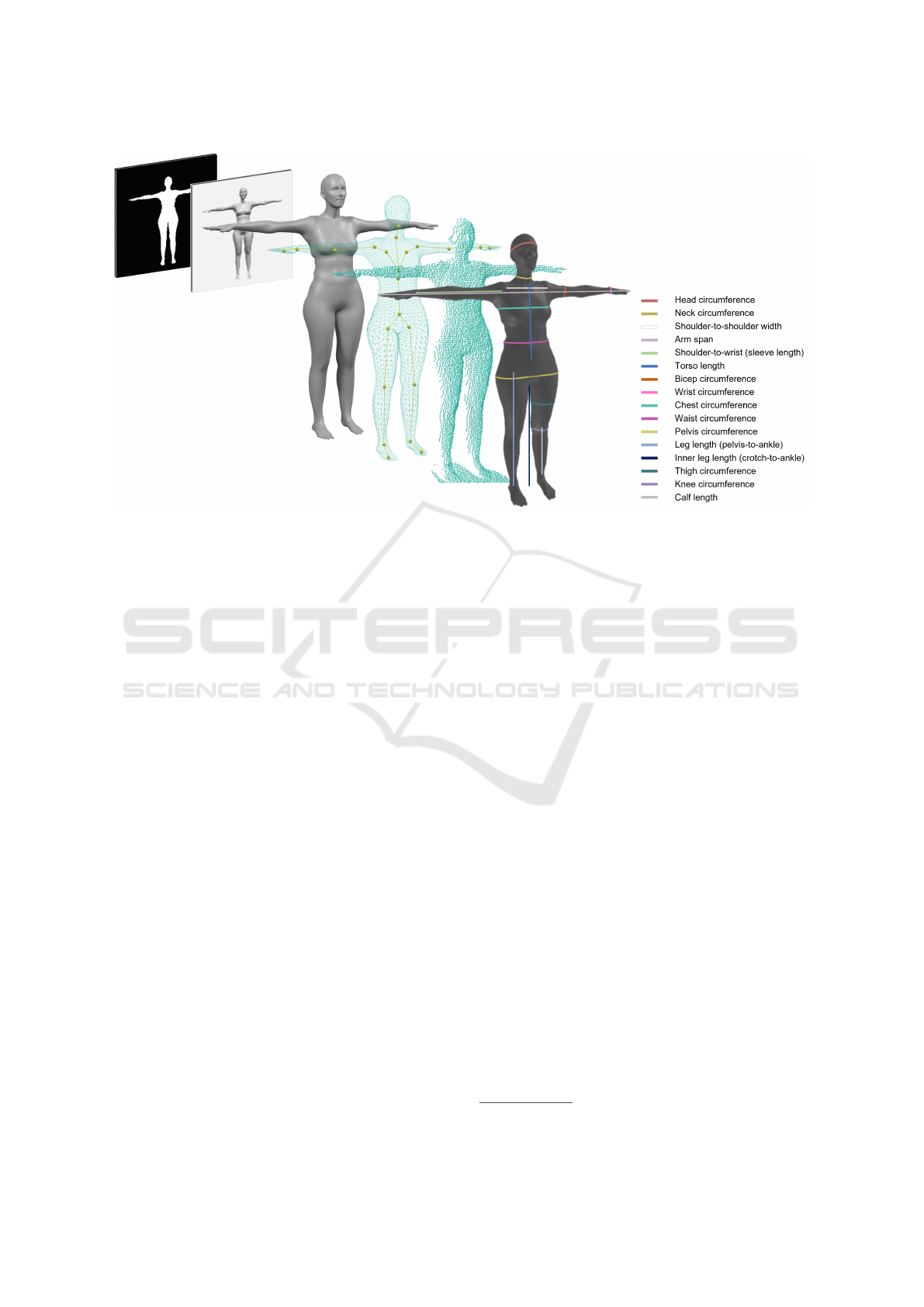

Figure 1: Human data representations included in the generated synthetic dataset: binary silhouette images, gray-scale images, 3D models, skeleton data, 3D point clouds and 16 annotated body measurements.

2.3 3D Input Data

Furthermore, a number of methods have proposed using 3D input data (Guilló et al., 2020; Xiaohui et al., 2018; Tsoli et al., 2014). In (Yan et al., 2020), the authors fit a Skinned Multi-Person Linear (SMPL) body model to a scanned point cloud, using a non-rigid iterative closest point algorithm as part of their pipeline. They then run a non-linear regressor to estimate the body measures on the fitted body model from multiple measured circumference paths. There have also been a few attempts to compute body dimensions on 3D body point clouds using analytical approaches (Dao et al., 2014), although the idea has not been developed much further.
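To show why aligning a scan to a body model is costly, here is a deliberately reduced variant of iterative closest point that estimates only a translation. The cited work uses non-rigid ICP, which also deforms the model. Each iteration alternates a nearest-neighbour correspondence step with a closed-form update, and the brute-force search below already costs O(N·M) per iteration. The function name and stopping tolerance are illustrative assumptions.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def icp_translation(source: Sequence[Point], target: Sequence[Point],
                    iterations: int = 20, tol: float = 1e-12) -> Point:
    """Estimate t such that source + t aligns with target (translation-only ICP)."""
    t = [0.0, 0.0, 0.0]
    for _ in range(iterations):
        moved = [tuple(p[k] + t[k] for k in range(3)) for p in source]
        # Correspondence step: brute-force nearest neighbour for every moved point
        matches = [min(target, key=lambda q: math.dist(p, q)) for p in moved]
        # Update step: for a pure translation, the least-squares fit is the mean residual
        step = [sum(q[k] - p[k] for p, q in zip(moved, matches)) / len(moved) for k in range(3)]
        t = [t[k] + step[k] for k in range(3)]
        if sum(abs(s) for s in step) < tol:
            break
    return (t[0], t[1], t[2])
```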

One of the main contributions of this paper is our set of experiments with 3D input data, in which we feed 3D point clouds directly to the network and train a neural model end to end, avoiding an expensive alignment between the point cloud and a 3D body model.

3 PROPOSED APPROACH

In this section, we present our proposed strategy to accurately estimate anthropometric body measurements from visual data. In our work, we examine various input data types, including binary silhouette images, gray-scale images and 3D point clouds. Another contribution of our research is a framework for the skeleton-guided computation of 16 ground-truth body measurements on a 3D body model. We have produced a large-scale database of synthetic human body data relevant for various human body analysis tasks, and we publish the dataset¹ for further research.

3.1 Data Acquisition

We consider the large-scale collection of synthetic human body data we generated to be one of the contributions of this paper. It contains multiple corresponding data representations, categorized into male and female body data: 3D body models, point clouds, gray-scale and binary silhouette images, skeleton data, and a set of 16 annotated body measurements, as illustrated in Figure 1. We present the details of the database in the following sections.

3.1.1 Synthetic Data Generation

The synthetic human body data were generated using the SMPL parametric model (Loper et al., 2015) with a high variety of body shapes. The dataset includes 50k male and 50k female body models. The gray-scale and binary images were generated by capturing the rendered body model from a frontal view. Furthermore, each rendered body model was virtually scanned from two viewpoints, producing a corresponding 3D body point cloud containing 88 408 distinct points. Gaussian noise is added to the virtual scans, following (Jensen et al., 2021; Rakotosaona et al., 2019; Rosman et al., 2012), to bring the resulting data closer to real data captured by a structured-light 3D scanner. The body scans are originally structured in a 2D grid, similar to a standard image: grid indices holding a valid point of the point cloud contain its three real-world coordinates, and all other indices contain zeros (representing an empty grid index). However, to merge the scans from the two camera viewpoints and thus incorporate more information into a single point cloud, we discard the grid structure and use unorganized point clouds directly as input. Unstructured point clouds also save computation time and memory that would otherwise be spent on the large number of empty points in the grid-structured scans. Nonetheless, the structured point cloud representation might be useful in certain cases, and we plan to incorporate this data format in future steps of this research, as we suggest in Section 5.1.

¹ http://skeletex.xyz/portfolio/datasets

Table 1: Definition of annotated anthropometric body measurements. Note that the 3D model is expected to capture the human body in the default T-pose, with the Y-axis representing the vertical axis and the Z-axis pointing towards the camera.

| Body measurement | Definition |
|---|---|
| Head circumference | circumference taken at the Y-axis level midway between the head skeleton joint and the top of the head (the intersection plane is slightly rotated about the X-axis to match the natural head posture) |
| Neck circumference | circumference taken at the Y-axis level one third of the distance from the neck joint to the head joint (the intersection plane is slightly rotated about the X-axis to match the natural posture) |
| Shoulder-to-shoulder | distance between the left and right shoulder skeleton joints |
| Arm span | distance between the left and right fingertips in T-pose (the X-axis range of the model) |
| Shoulder-to-wrist | distance between the shoulder and the wrist joint (sleeve length) |
| Torso length | distance between the neck and the pelvis joint |
| Bicep circumference | circumference taken using an intersection plane perpendicular to the X-axis (i.e., whose normal is parallel to the X-axis), at the X coordinate midway between the shoulder and the elbow joint |
| Wrist circumference | circumference taken using an intersection plane perpendicular to the X-axis (i.e., whose normal is parallel to the X-axis), at the X coordinate of the wrist joint |
| Chest circumference | circumference taken at the Y-axis level of the maximal intersection of the model and the mesh signature within the chest region, bounded by the axilla and the chest (upper spine) joint |
| Waist circumference | circumference taken at the Y-axis level of the minimal intersection of the model and the mesh signature within the waist region, around the natural waist line (mid-spine joint); the region is scaled relative to the model stature |
| Pelvis circumference | circumference taken at the Y-axis level of the maximal intersection of the model and the mesh signature within the pelvis region, bounded by the pelvis joint and the hip joint |
| Leg length | distance between the pelvis and the ankle joint |
| Inner leg length | distance between the crotch and the ankle joint (crotch height); while the Y coordinate is incremented, the crotch is detected at the first iteration that yields a single intersection with the mesh signature instead of two distinct intersections (the first intersection above the legs) |
| Thigh circumference | circumference taken at the Y-axis level midway between the hip and the knee joint |
| Knee circumference | circumference taken at the Y coordinate of the knee joint |
| Calf length | distance between the knee joint and the ankle joint |
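Several lengths in Table 1 reduce to Euclidean distances between skeleton joints, and the arm span reduces to the X-axis extent of the T-posed model. A minimal sketch, assuming the joints are available as named 3D coordinates. The joint names in `LENGTH_DEFINITIONS` and the choice of the right body side are hypothetical, not the dataset's actual skeleton format.

```python
import math
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical joint names and side choices; adapt to the skeleton actually exported.
LENGTH_DEFINITIONS: Dict[str, Tuple[str, str]] = {
    "shoulder_to_shoulder": ("left_shoulder", "right_shoulder"),
    "shoulder_to_wrist": ("right_shoulder", "right_wrist"),
    "torso_length": ("neck", "pelvis"),
    "leg_length": ("pelvis", "right_ankle"),
    "calf_length": ("right_knee", "right_ankle"),
}


def joint_lengths(joints: Dict[str, Vec3]) -> Dict[str, float]:
    """Euclidean distance between the two joints named for each measurement."""
    return {name: math.dist(joints[a], joints[b]) for name, (a, b) in LENGTH_DEFINITIONS.items()}


def arm_span(vertices: Sequence[Vec3]) -> float:
    """Arm span in T-pose: the extent of the mesh along the X-axis."""
    xs = [v[0] for v in vertices]
    return max(xs) - min(xs)
```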

3.1.2 Annotation Process

In this section, we describe how the synthetically generated data were annotated with various body dimensions measured on the body model surface. Our annotation method is inspired by CALVIS (Gonzalez-Tejeda and Mayer, 2019). While we use the same mesh signature approach, intersecting the model geometry, we extended the list of body measurements and adjusted the three original measures (chest, waist and pelvis circumference) to make them consistent with real measures obtained by manual tape measuring. In particular, we optimized the vertical-axis conditions on the body region in which each circumference is measured: we propose that the vertical range of each region should be relative to the stature of the specific body model. Moreover, we mark thirteen additional measurements (as illustrated in Figure 1), which are used mainly in garment manufacturing. In Table 1, we define each measurement to avoid ambiguities arising from different anthropometric measuring standards.
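Table 1 treats a circumference as the length of the intersection between the body mesh and a horizontal plane, with chest, waist and pelvis taken at the level of maximal or minimal intersection inside a stature-relative region. The following is a minimal sketch of that idea, not the authors' pipeline. It assumes a triangle mesh given as vertex and face lists and sums all intersection segments at a level; a real implementation would keep only the loop around the torso or limb of interest. The region bounds, given as fractions of the stature, are hypothetical parameters, and the toy `square_frustum_stack` mesh exists only to make the example testable.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Face = Tuple[int, int, int]


def slice_perimeter(vertices: Sequence[Vec3], faces: Sequence[Face], y: float) -> float:
    """Total length of the curve where the plane Y = y cuts a triangle mesh.

    A vertex counts as 'above' when its Y >= y. Under this half-open rule each
    triangle has either 0 or 2 crossing edges, so it adds at most one segment.
    """
    total = 0.0
    for face in faces:
        crossings = []
        for i, j in ((0, 1), (1, 2), (2, 0)):
            a, b = vertices[face[i]], vertices[face[j]]
            if (a[1] >= y) != (b[1] >= y):
                t = (y - a[1]) / (b[1] - a[1])  # b[1] != a[1] is guaranteed here
                crossings.append(tuple(a[k] + t * (b[k] - a[k]) for k in range(3)))
        if len(crossings) == 2:
            total += math.dist(crossings[0], crossings[1])
    return total


def extreme_level(perimeter_at: Callable[[float], float], y_min: float, stature: float,
                  region: Tuple[float, float], mode: str = "max",
                  steps: int = 50) -> Tuple[float, float]:
    """Scan Y levels inside a stature-relative region; return (level, perimeter).

    `region` holds hypothetical lower/upper bounds as fractions of the stature,
    measured upwards from the lowest point of the model (y_min).
    """
    lo = y_min + region[0] * stature
    hi = y_min + region[1] * stature
    candidates = [(perimeter_at(y), y)
                  for y in (lo + (hi - lo) * i / steps for i in range(steps + 1))]
    perimeter, level = max(candidates) if mode == "max" else min(candidates)
    return level, perimeter


def square_frustum_stack(levels: Sequence[float],
                         half_widths: Sequence[float]) -> Tuple[List[Vec3], List[Face]]:
    """Toy open tube with square cross-sections, used only to exercise the functions."""
    vertices: List[Vec3] = []
    faces: List[Face] = []
    for y, w in zip(levels, half_widths):
        vertices += [(-w, y, -w), (w, y, -w), (w, y, w), (-w, y, w)]
    for ring in range(len(levels) - 1):
        b, t = 4 * ring, 4 * (ring + 1)
        for k in range(4):
            k2 = (k + 1) % 4
            faces += [(b + k, b + k2, t + k2), (b + k, t + k2, t + k)]
    return vertices, faces
```

With a waist-like toy tube (half-widths 1.0, 0.5, 1.0 at heights 0, 1, 2), searching for the minimum over the middle of the stature finds the narrowest level at height 1 with perimeter 4, mirroring the waist rule in Table 1.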


3.2 Baseline Models

Here, we present the baseline models used in our experiments to regress the anthropometric body measurements. Our research focuses on examining various input data types, including both 2D and 3D human body data representations.

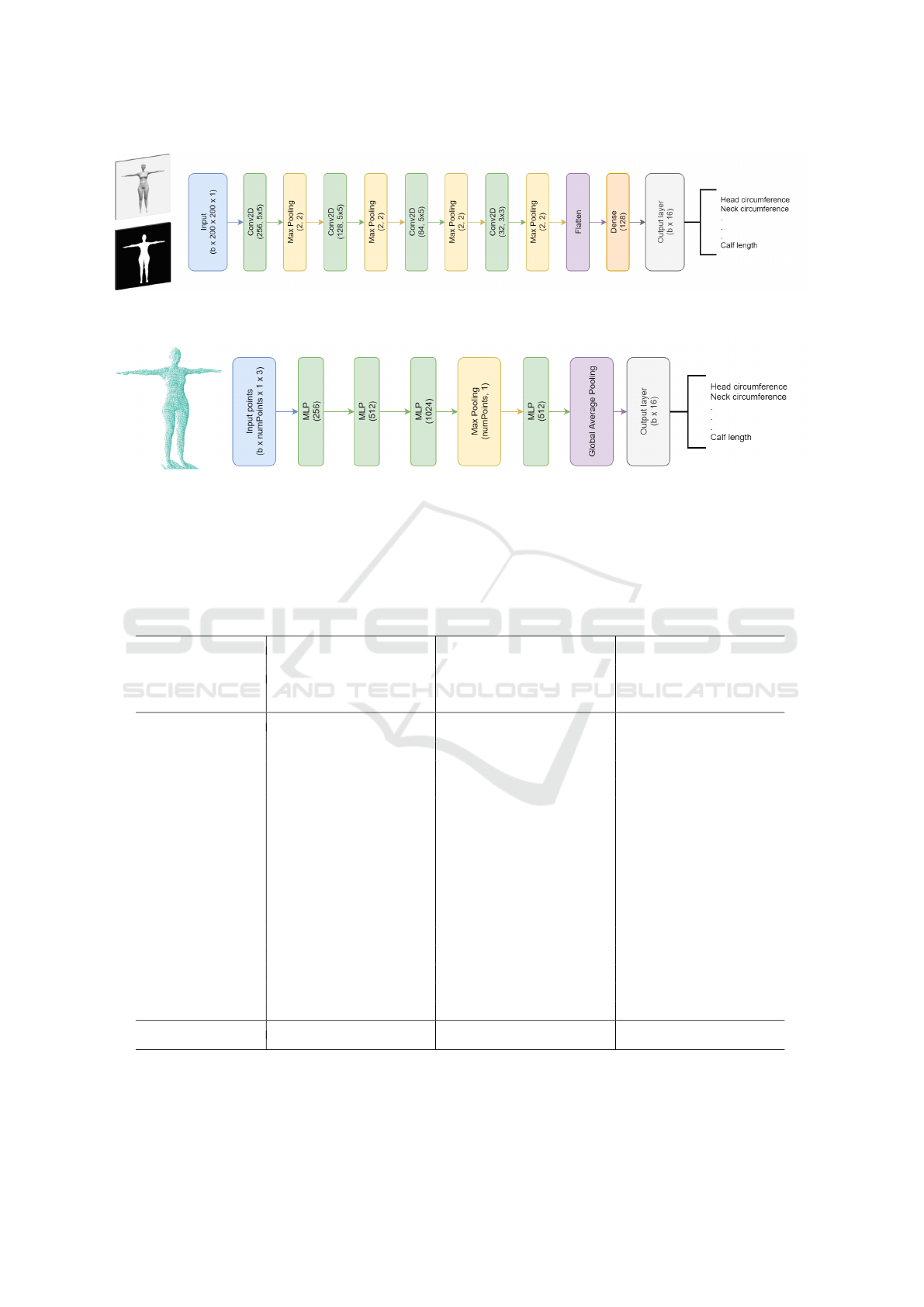

3.2.1 2D Input Data

For 2D input data, namely gray-scale images and binary silhouette images, we employ a baseline model for Convolutional Body Dimensions Estimation (Conv-BoDiEs). The model takes a single gray-scale or silhouette image of 200 × 200 pixels as input, as in (Gonzalez-Tejeda and Mayer, 2019; Tejeda and Mayer, 2021), and regresses the values of 16 predefined body measurements. The network architecture is described in Figure 2; all convolution layers are followed by ReLU activation.

3.2.2 3D Input Data

In contrast to some previous approaches, instead of the laborious process of fitting a body scan to a predefined body model, we aim to regress the body measurements directly from a single unorganized 3D point cloud merged from two camera viewpoints, or possibly from a grid-structured point cloud in the future (as explained in Section 5.1). One effective way to process unstructured 3D body scans is to extract both global and local features in the network and aggregate them, retaining information about the overall context as well as local neighbourhoods, as formulated in (Qi et al., 2016) and follow-up research. Therefore, we propose a baseline neural architecture for Point Cloud Body Dimensions Estimation (PC-BoDiEs) based on stacked multi-layer perceptron (MLP) convolutions to regress the values of the 16 stated body measurements. Details of the model architecture are shown in Figure 3. Each MLP layer is followed by ReLU activation.
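The idea behind stacked shared MLPs (as in PointNet, Qi et al., 2016) is that the same small network is applied to every point independently, and a symmetric max pooling aggregates the per-point features, so the output does not depend on point order. The sketch below illustrates only that global-feature path with untrained random weights. The layer widths, the Gaussian initialization and the class name are assumptions and do not reproduce the PC-BoDiEs architecture in Figure 3.

```python
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]


def dense(x: Sequence[float], layer: Layer, relu: bool = True) -> List[float]:
    """Fully connected layer y = W x + b, optionally followed by ReLU."""
    w, b = layer
    y = [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]
    return [max(0.0, v) for v in y] if relu else y


def random_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Randomly initialized weights (hypothetical init) and zero biases."""
    w = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return w, [0.0] * n_out


class TinyPointRegressor:
    """Shared per-point MLP, max pooling over points, then a linear head (untrained)."""

    def __init__(self, hidden: Tuple[int, ...] = (32, 64), n_outputs: int = 16, seed: int = 0):
        rng = random.Random(seed)
        dims = (3,) + hidden
        self.shared = [random_layer(a, b, rng) for a, b in zip(dims[:-1], dims[1:])]
        self.head = random_layer(dims[-1], n_outputs, rng)

    def __call__(self, points: Sequence[Tuple[float, float, float]]) -> List[float]:
        per_point = []
        for p in points:
            h = list(p)
            for layer in self.shared:  # identical weights for every point
                h = dense(h, layer)
            per_point.append(h)
        # Max over points is symmetric, so the result does not depend on point order
        global_feature = [max(column) for column in zip(*per_point)]
        return dense(global_feature, self.head, relu=False)
```

Because pooling happens over whatever points are present, the same network accepts 512 or 1024 sampled points, which is why the point count can be a hyperparameter.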

To lower the density of the body scans, and thus the time and memory requirements of the model, the input point clouds are sub-sampled with farthest point sampling to a fixed number of points before being fed to the network. In the experiments, we validate two different point cloud densities and show the trade-off between the number of points and the estimation accuracy of the model.
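The input preparation described here and in Section 3.1.1 can be sketched in plain Python: drop the empty cells of the two grid-structured scans, merge them, then sub-sample with farthest point sampling. The sketch assumes both scans already store world coordinates in a shared frame and that empty cells are exactly (0, 0, 0); the function names are illustrative. This greedy FPS costs O(N·k) distance evaluations, so sampling 1024 points from an 88 408-point cloud would be slow in pure Python. Practical implementations vectorize the same loop.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def grid_to_points(grid: Sequence[Sequence[Point]]) -> List[Point]:
    """Drop empty grid cells, stored as (0, 0, 0), and return an unorganized list."""
    return [p for row in grid for p in row if p != (0.0, 0.0, 0.0)]


def merge_views(*grids: Sequence[Sequence[Point]]) -> List[Point]:
    """Concatenate the valid points of several scans already in one world frame."""
    return [p for g in grids for p in grid_to_points(g)]


def farthest_point_sampling(points: Sequence[Point], k: int, start: int = 0) -> List[int]:
    """Greedy FPS: repeatedly pick the point farthest from those already chosen."""
    chosen = [start]
    # Distance from every point to its nearest already-chosen point
    dist = [math.dist(p, points[start]) for p in points]
    while len(chosen) < min(k, len(points)):
        idx = max(range(len(points)), key=dist.__getitem__)
        chosen.append(idx)
        for i, p in enumerate(points):
            dist[i] = min(dist[i], math.dist(p, points[idx]))
    return chosen
```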

4 EXPERIMENTS

In this section, we describe the experiments conducted with the proposed neural models on the generated dataset.

4.1 Training Setup

Prior to training, the whole set of gray-scale images was normalized to zero mean and unit standard deviation. The point clouds were globally normalized to fit the range [−1, 1]. For sub-sampling, we conduct experiments with two settings: 512 and 1024 points per point cloud (as reported in Table 3). During training, the loss function for both networks was the mean absolute error. All results are reported after training the models for 300 epochs. For both models, the learning rate was gradually decreased using cosine decay, with the initial value set to 10⁻⁴ for the image network and 5 × 10⁻⁴ for the point cloud network. The models were trained using the AMSGrad variant of the Adam optimizer, with batches of 32 samples. The experiments were conducted on an Nvidia GeForce RTX 3060.
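The preprocessing and schedule above can be sketched as follows, under several assumptions. The image statistics are computed over all pixel values of the set flattened into one list. "Globally normalized" is read as dividing all clouds by a single dataset-wide scale without re-centering. The cosine schedule decays to zero over `total_steps`, and the text does not say whether steps are epochs or batches. The AMSGrad optimizer itself is left to the training framework.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def standardize(pixels: Sequence[float]) -> List[float]:
    """Zero mean, unit standard deviation using statistics of the whole image set."""
    mu = statistics.fmean(pixels)
    sigma = statistics.pstdev(pixels)
    return [(v - mu) / sigma for v in pixels]


def normalize_point_clouds(clouds: Sequence[Sequence[Point]]) -> List[List[Point]]:
    """Divide every cloud by one dataset-wide scale so all coordinates lie in [-1, 1]."""
    scale = max(abs(c) for cloud in clouds for p in cloud for c in p)
    return [[tuple(c / scale for c in p) for p in cloud] for cloud in clouds]


def cosine_decay(step: int, total_steps: int, initial_lr: float) -> float:
    """Learning rate falling from initial_lr to 0 along half a cosine period."""
    progress = min(step, total_steps) / total_steps
    return initial_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def mean_absolute_error(pred: Sequence[float], target: Sequence[float]) -> float:
    """Training loss used for both networks."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)
```

For example, with the image network's initial rate of 10⁻⁴ and 300 decay steps, the rate is 5 × 10⁻⁵ halfway through and reaches 0 at the end.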

4.2 Evaluation

To evaluate the models, we use k-fold cross-validation with k = 5. In each fold, the dataset of 100k samples was split into training and test sets with a ratio of 80:20.
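The 5-fold protocol can be sketched as below. Every sample lands in exactly one test fold, and each fold leaves an 80:20 train/test split. Shuffling with a fixed seed is an assumption; the text does not say how the folds were formed.

```python
import random
from typing import List, Tuple


def k_fold_splits(n_samples: int, k: int = 5, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle indices once, cut them into k folds, and use each fold once as the test set."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits
```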

We report three evaluation metrics: (1) mean absolute error (MAE), the average error in millimeters between the ground-truth and the predicted measurements; (2) average precision (AP) for each measurement, the percentage of samples for which that measurement was estimated within the specified threshold of ground truth; and (3) mean average precision (mAP), the percentage of samples whose MAE, averaged over all measurements, is under the stated threshold.
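The three metrics follow directly from their definitions. A minimal sketch, assuming predictions and ground truth are N × M lists in millimeters. Treating "within the threshold" as inclusive (≤) for AP and "under" as strict (<) for mAP is an assumption.

```python
from typing import List, Sequence

Rows = Sequence[Sequence[float]]


def per_measurement_mae(pred: Rows, gt: Rows) -> List[float]:
    """MAE (mm) of each of the M measurements over the N samples."""
    n, m = len(pred), len(pred[0])
    return [sum(abs(pred[i][j] - gt[i][j]) for i in range(n)) / n for j in range(m)]


def per_measurement_ap(pred: Rows, gt: Rows, threshold: float) -> List[float]:
    """% of samples whose j-th measurement is within `threshold` mm of ground truth."""
    n, m = len(pred), len(pred[0])
    return [100.0 * sum(abs(pred[i][j] - gt[i][j]) <= threshold for i in range(n)) / n
            for j in range(m)]


def mean_ap(pred: Rows, gt: Rows, threshold: float) -> float:
    """% of samples whose error averaged over all measurements is under `threshold` mm."""
    sample_mae = [sum(abs(p - g) for p, g in zip(pr, gr)) / len(pr) for pr, gr in zip(pred, gt)]
    return 100.0 * sum(e < threshold for e in sample_mae) / len(sample_mae)
```

With two samples of two measurements, where the first sample is off by 5 mm and 30 mm and the second is exact, AP@10 is 100% and 50% per measurement (average 75%), while mAP@10 is 50% because the first sample's averaged error is 17.5 mm. This is why mAP need not equal the average of the per-measurement AP values.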

Table 2 presents the performance of our models, Conv-BoDiEs and PC-BoDiEs, which use various input data representations to estimate the values of 16 predefined body measurements. As shown in the table, the lowest MAE of 4.64 mm was achieved using gray-scale input images. The MAE obtained with unorganized point cloud input is not far behind, at 4.95 mm. In both cases, all 20k test samples were estimated with an MAE (averaged over all body measurements) within 20 mm of ground truth. Among the individual body measurements, the largest errors in all scenarios were reported for neck circumference, head circumference and shoulder-to-shoulder distance, while the models performed best on waist and wrist circumferences.

Figure 2: The architecture of the Convolutional Body Dimensions Estimation (Conv-BoDiEs) network. The model takes a single 200 × 200 gray-scale or binary image as input and outputs 16 estimated body measurements.

Figure 3: The architecture of the Point Cloud Body Dimensions Estimation (PC-BoDiEs) network. The model takes an unorganized 3D body scan merged from two viewpoints as input and outputs 16 estimated body measurements. Note that the number of points in the body scan is a hyperparameter.

Table 2: Quantitative results of Conv-BoDiEs (Conv) and PC-BoDiEs (PC). G denotes gray-scale and B binary input images. Mean absolute error (MAE) is reported for each body measurement over all k = 5 folds, as well as averaged over all measurements and all folds. Average precision (AP) is given for two thresholds: 20 mm (AP@20) and 10 mm (AP@10). For each measurement, it is the percentage of samples for which that measurement was estimated within the threshold of ground truth. In the last row, mean average precision (mAP) is the percentage of samples estimated with an MAE under the stated threshold (note that in this case it is not equal to the average of the rows above).

| Body measurement | MAE (mm) Conv G | MAE (mm) Conv B | MAE (mm) PC | AP@20 (%) Conv G | AP@20 (%) Conv B | AP@20 (%) PC | AP@10 (%) Conv G | AP@10 (%) Conv B | AP@10 (%) PC |
|---|---|---|---|---|---|---|---|---|---|
| Head circumference | 8.38 | 16.22 | 8.06 | 94.09 | 67.56 | 94.87 | 66.12 | 37.70 | 68.44 |
| Neck circumference | 8.82 | 17.39 | 9.07 | 93.08 | 64.54 | 91.76 | 63.81 | 35.57 | 62.46 |
| Shoulder-to-shoulder | 7.54 | 12.41 | 8.21 | 96.37 | 80.36 | 94.57 | 71.28 | 48.06 | 67.43 |
| Arm span | 5.32 | 7.45 | 6.95 | 99.63 | 96.82 | 97.75 | 86.77 | 71.88 | 75.57 |
| Shoulder-to-wrist | 3.90 | 6.00 | 5.18 | 99.97 | 99.14 | 99.66 | 95.81 | 81.67 | 87.63 |
| Torso length | 6.51 | 10.13 | 7.85 | 98.46 | 88.48 | 95.68 | 78.10 | 56.99 | 69.23 |
| Bicep circumference | 4.60 | 6.66 | 5.79 | 99.87 | 98.37 | 99.40 | 91.46 | 77.05 | 83.16 |
| Wrist circumference | 2.23 | 3.28 | 2.48 | 100.00 | 99.99 | 100.00 | 99.80 | 98.11 | 99.79 |
| Chest circumference | 2.57 | 5.24 | 3.29 | 100.00 | 99.71 | 100.00 | 99.57 | 87.22 | 98.31 |
| Waist circumference | 1.65 | 3.11 | 2.29 | 100.00 | 100.00 | 100.00 | 99.98 | 98.96 | 99.96 |
| Pelvis circumference | 3.51 | 4.92 | 5.11 | 99.89 | 99.57 | 99.66 | 97.09 | 89.52 | 88.17 |
| Leg length | 2.65 | 3.69 | 3.48 | 100.00 | 100.00 | 100.00 | 99.63 | 96.97 | 97.77 |
| Inner leg length | 4.16 | 5.80 | 2.76 | 99.67 | 98.51 | 99.99 | 94.10 | 83.89 | 98.92 |
| Thigh circumference | 2.46 | 3.31 | 2.80 | 99.99 | 99.97 | 99.99 | 99.75 | 97.98 | 99.41 |
| Knee circumference | 2.76 | 5.47 | 3.45 | 99.98 | 99.47 | 99.98 | 99.33 | 85.38 | 97.67 |
| Calf length | 7.27 | 10.56 | 7.90 | 96.08 | 87.39 | 95.20 | 73.23 | 53.68 | 69.11 |
| Mean (MAE) / mAP (AP) | 4.64 | 7.60 | 4.95 | 100.00 | 99.99 | 100.00 | 99.84 | 88.70 | 99.86 |

Table 3: Performance of the PC-BoDiEs model using different input point cloud densities.

| Body measurement | MAE (mm) 512 pts | MAE (mm) 1024 pts | AP@20 (%) 512 pts | AP@20 (%) 1024 pts | AP@10 (%) 512 pts | AP@10 (%) 1024 pts |
|---|---|---|---|---|---|---|
| Head circumference | 8.06 | 7.54 | 94.87 | 100.00 | 68.44 | 100.00 |
| Neck circumference | 9.07 | 8.44 | 91.76 | 100.00 | 62.46 | 100.00 |
| Shoulder-to-shoulder | 8.21 | 7.93 | 94.57 | 100.00 | 67.43 | 100.00 |
| Arm span | 6.95 | 6.45 | 97.75 | 99.98 | 75.57 | 99.97 |
| Shoulder-to-wrist | 5.18 | 4.65 | 99.66 | 100.00 | 87.63 | 100.00 |
| Torso length | 7.85 | 7.51 | 95.68 | 100.00 | 69.23 | 100.00 |
| Bicep circumference | 5.79 | 5.51 | 99.40 | 100.00 | 83.16 | 100.00 |
| Wrist circumference | 2.48 | 2.32 | 100.00 | 100.00 | 99.79 | 100.00 |
| Chest circumference | 3.29 | 2.96 | 100.00 | 100.00 | 98.31 | 100.00 |
| Waist circumference | 2.29 | 2.16 | 100.00 | 100.00 | 99.96 | 100.00 |
| Pelvis circumference | 5.11 | 4.80 | 99.66 | 100.00 | 88.17 | 99.97 |
| Leg length | 3.48 | 3.23 | 100.00 | 100.00 | 97.77 | 99.99 |
| Inner leg length | 2.76 | 2.43 | 99.99 | 100.00 | 98.92 | 100.00 |
| Thigh circumference | 2.80 | 2.57 | 99.99 | 100.00 | 99.41 | 100.00 |
| Knee circumference | 3.45 | 3.15 | 99.98 | 100.00 | 97.67 | 100.00 |
| Calf length | 7.90 | 7.48 | 95.20 | 100.00 | 69.11 | 99.99 |
| Mean (MAE) / mAP (AP) | 5.29 | 4.95 | 100.00 | 100.00 | 99.77 | 99.86 |

5 CONCLUSIONS

In this paper, we examined various human body data representations, including 2D images and 3D point clouds, and their impact on the performance of a neural network estimating anthropometric body measurements. As part of the research, we generated a large-scale synthetic dataset in multiple corresponding data formats, which is publicly available for research purposes and can be used in many human body analysis tasks. We introduced an annotation process to obtain ground truth for 16 distinct body measurements on a 3D body model. Finally, we presented baseline end-to-end methods for accurate body measurement estimation from 2D and 3D body input data. The results of our experiments show that both the grid structure and the depth information in the input data carry important additional value and have a positive effect on the final estimation. Approaches using grid-structured gray-scale images and unstructured 3D point clouds both yield competitive results, reaching a mean error of approximately 5 mm.

5.1 Future Work

In the next step of our research, we plan to incorporate structure into the 3D body scans, combining the benefits of the grid structure and the depth information, and to examine its impact on model inference. In this type of data, the 3D points are organized in a 2D grid, analogously to an image. However, in structured point clouds, the three channels of each grid cell store the 3D coordinates of a point instead of RGB or intensity values. We also aim to extend the experiments with the stated data representations and to capture relevant real human data using 3D scanners, annotated with tape-measured body dimensions, to evaluate the accuracy of the models in real-world scenarios.
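A grid-structured scan of this kind can be fed to an image-style network by rearranging it into three coordinate planes plus a validity mask. A minimal sketch, assuming the H × W grid stores (x, y, z) tuples with (0, 0, 0) marking empty cells, as described in Section 3.1.1. The channels-first layout and the separate mask are illustrative choices, not a design the paper specifies.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def to_channels_first(grid: Sequence[Sequence[Point]]) -> Tuple[List[List[List[float]]], List[List[int]]]:
    """Return (xyz, mask).

    xyz is a 3 x H x W nested list holding the X, Y and Z planes, like the
    R, G, B planes of an image; mask is H x W with 1 for valid points and 0
    for empty (all-zero) cells.
    """
    h, w = len(grid), len(grid[0])
    xyz = [[[grid[r][c][ch] for c in range(w)] for r in range(h)] for ch in range(3)]
    mask = [[0 if grid[r][c] == (0.0, 0.0, 0.0) else 1 for c in range(w)] for r in range(h)]
    return xyz, mask
```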

REFERENCES

Anisuzzaman, D. M., Shaiket, H. A. W., and Saif, A. (2019). Online trial room based on human body shape detection. International Journal of Image, Graphics and Signal Processing, 11:21–29.

Ashmawi, S., Alharbi, M., Almaghrabi, A., and Alhothali, A. (2019). FitMe: Body measurement estimations using machine learning method. Procedia Computer Science, 163:209–217. 16th Learning and Technology Conference 2019: Artificial Intelligence and Machine Learning: Embedding the Intelligence.

Dao, N.-L., Deng, T., and Cai, J. (2014). Fast and automatic human body circular measurement based on a single Kinect.

Gonzalez-Tejeda, Y. and Mayer, H. (2019). CALVIS: Chest, waist and pelvis circumference from 3D human body meshes as ground truth for deep learning. In Proceedings of the VIII International Workshop on Representation, Analysis and Recognition of Shape and Motion FroM Imaging Data (RFMI 2019). ACM.

Guilló, A., Azorin-Lopez, J., Saval-Calvo, M., Castillo-Zaragoza, J., Garcia-D'Urso, N., and Fisher, R. (2020). RGB-D-based framework to acquire, visualize and measure the human body for dietetic treatments. Sensors, 20:3690.


Jensen, J., Hannemose, M., Bærentzen, J., Wilm, J., Frisvad, J., and Dahl, A. (2021). Surface reconstruction from structured light images using differentiable rendering. Sensors, 21(4):1–16.

Li, Z., Jia, W., Mao, Z.-H., Li, J., Chen, H.-C., Zuo, W., Wang, K., and Sun, M. (2013). Anthropometric body measurements based on multi-view stereo image reconstruction. Conference proceedings : ... Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Conference, 2013:366–369.

Liu, K., Wang, J., Kamalha, E., Li, V., and Zeng, X. (2017). Construction of a prediction model for body dimensions used in garment pattern making based on anthropometric data learning. The Journal of The Textile Institute, 108:1–8.

Loper, M., Mahmood, N., Romero, J., Pons-Moll, G., and Black, M. J. (2015). SMPL: A skinned multi-person linear model. ACM Trans. Graphics (Proc. SIGGRAPH Asia), 34(6):248:1–248:16.

Qi, C. R., Su, H., Mo, K., and Guibas, L. J. (2016). PointNet: Deep learning on point sets for 3D classification and segmentation. CoRR, abs/1612.00593.

Rakotosaona, M., Barbera, V. L., Guerrero, P., Mitra, N. J., and Ovsjanikov, M. (2019). POINTCLEANNET: Learning to denoise and remove outliers from dense point clouds. CoRR, abs/1901.01060.

Robinette, K., Blackwell, S., Daanen, H., Boehmer, M., and Fleming, S. (2002). Civilian American and European Surface Anthropometry Resource (CAESAR), final report. Volume 1. Summary. page 74.

Rosman, G., Dubrovina, A., and Kimmel, R. (2012). Sparse modeling of shape from structured light. pages 456–463.

Shigeki, Y., Okura, F., Mitsugami, I., and Yagi, Y. (2018). Estimating 3D human shape under clothing from a single RGB image. IPSJ Transactions on Computer Vision and Applications, 10:16.

Song, D., Tong, R., Chang, J., Wang, T., Du, J., Tang, M., and Zhang, J. (2017). Clothes size prediction from dressed-human silhouettes. pages 86–98.

Tejeda, Y. G. and Mayer, H. A. (2021). A neural anthropometer learning from body dimensions computed on human 3D meshes.

Tsoli, A., Loper, M., and Black, M. (2014). Model-based anthropometry: Predicting measurements from 3D human scans in multiple poses.

Wang, Z., Wang, J., Xing, Y., Yang, Y., and Liu, K. (2019). Estimating human body dimensions using RBF artificial neural networks technology and its application in activewear pattern making. Applied Sciences, 9:1140.

Xiaohui, T., Xiaoyu, P., Liwen, L., and Qing, X. (2018). Automatic human body feature extraction and personal size measurement. Journal of Visual Languages & Computing, 47:9–18.

Yan, S. and Kämäräinen, J.-K. (2021). Learning anthropometry from rendered humans. ArXiv, abs/2101.02515.

Yan, S., Wirta, J., and Kämäräinen, J.-K. (2020). Anthropometric clothing measurements from 3D body scans. Machine Vision and Applications, 31.
