A BCI-controlled Robot Assistant for Navigation and Object Manipulation in a VR Smart Home Environment

Ethel Pruss, Jos Prinsen, Anita Vrins, Caterina Ceccato and Maryam Alimardani (ORCID: https://orcid.org/0000-0003-3077-7657)

Department of Cognitive Science and AI, Tilburg University, Tilburg, The Netherlands

Keywords: Smart Home, Brain-Computer Interface (BCI), Virtual Reality (VR), Assistive Robots, P300 Paradigm.

Abstract: BCI-controlled smart homes enable people with severe motor disabilities to perform household activities that would otherwise be inaccessible to them. In this paper, we present a proof of concept of an assistive robot with telepresence functionality inside a Virtual Reality (VR) smart home. Using live EEG data and a P300 Brain-Computer Interface (BCI), the user can control a virtual agent and interact with the smart home environment. We further discuss potential use cases of the proposed system for patients with motor impairment and recommend directions for future research.

1 INTRODUCTION

Brain-computer interfaces (BCIs) are communication systems that take brain activity as input and translate it into output commands for external devices, without requiring the user to move physically (Wolpaw et al., 2002). BCIs can therefore help patients with motor impairment regain the ability to communicate and interact with their environment through various control paradigms. Brain activity can be recorded with several methods, among which electroencephalography (EEG) is the most popular because it is non-invasive and offers high temporal resolution (Abiri et al., 2019).

Depending on the EEG component extracted from the brain signal, a BCI system can be classified into one of three major paradigms: P300, steady-state visual evoked potential (SSVEP), and motor imagery (Abiri et al., 2019). The P300 paradigm relies on a positive deflection in the event-related potential (ERP) that is elicited approximately 300 ms after the user encounters an attended target stimulus in an oddball paradigm (Mattout et al., 2015). Therefore, by comparing the ERPs evoked by each stimulus across a sequence of stimulus presentations, a P300 BCI can identify which target the user has chosen. The P300 paradigm requires less user training than other paradigms (Guger et al., 2009), making it a promising tool for the design of BCI-controlled interactive environments (Fazel-Rezai et al., 2012).
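The comparison of ERPs across stimuli can be illustrated with a minimal Python sketch on synthetic data. This is not the classifier used in this work: the 250 Hz sampling rate, the 250 to 450 ms search window, the shape of the simulated P300 bump and the noise level are all assumptions chosen for readability. The epochs of each stimulus are averaged sample by sample, so that background activity partly cancels out, and the stimulus whose average has the largest mean amplitude inside the window is taken as the target.

```python
import math
import random
from statistics import mean

FS_HZ = 250             # assumed sampling rate (samples per second)
WINDOW_MS = (250, 450)  # assumed P300 search window after stimulus onset
EPOCH_SAMPLES = 200     # 800 ms of data per stimulus presentation at 250 Hz


def synthetic_epoch(is_target, rng):
    """Simulate one single-channel post-stimulus epoch in microvolts.

    Target epochs contain a positive bump peaking about 300 ms after onset;
    every epoch contains Gaussian background noise. Illustrative data only.
    """
    epoch = []
    for i in range(EPOCH_SAMPLES):
        t_ms = 1000.0 * i / FS_HZ
        bump = 5.0 * math.exp(-(((t_ms - 300.0) / 50.0) ** 2)) if is_target else 0.0
        epoch.append(bump + rng.gauss(0.0, 3.0))
    return epoch


def window_mean(epoch):
    """Mean amplitude of an epoch inside the P300 search window."""
    lo = int(WINDOW_MS[0] * FS_HZ / 1000)
    hi = int(WINDOW_MS[1] * FS_HZ / 1000)
    return mean(epoch[lo:hi])


def pick_target(epochs_by_stimulus):
    """Average each stimulus's epochs and return the stimulus with the
    largest windowed mean, together with the score of every stimulus."""
    scores = {}
    for stimulus, epochs in epochs_by_stimulus.items():
        average = [mean(samples) for samples in zip(*epochs)]
        scores[stimulus] = window_mean(average)
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    rng = random.Random(0)
    attended = "light"
    stimuli = ["toast", "kettle", "light", "clean_up"]
    # Ten presentations per stimulus; only the attended one evokes a P300.
    data = {s: [synthetic_epoch(s == attended, rng) for _ in range(10)] for s in stimuli}
    choice, scores = pick_target(data)
    print(choice, {s: round(v, 2) for s, v in scores.items()})
```

Averaging over repeated presentations is what makes the small P300 visible above the noise; fewer repetitions make the decision faster but less reliable.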

BCI-controlled smart homes have been studied using both virtual reality (VR) simulations and physical prototypes, which are often limited to a single appliance or function (Edlinger and Guger, 2011; Edlinger et al., 2009; Sahal et al., 2021). A limitation of previous BCI smart home studies is that they have focused only on simple tasks that can be accomplished by sending digital commands to smart devices. For instance, Edlinger and Guger (2011) presented an experiment involving smart home control inside a virtual environment using a modified P300 speller that allowed the user to control lights, turn the TV on or off, select TV channels and adjust the volume. A more recent study by Sahal et al. (2021) used augmented reality (AR) combined with a limited prototype to accomplish similar tasks by utilizing the built-in capabilities of a smart assistant (Google Assistant).

Although these setups can give patients control over their entertainment systems and ambiance, they do not address the most basic needs of immobile patients, e.g., retrieving a glass of water, medication, or food. The missing component is the ability to move around and manipulate objects. Combining a BCI-controlled environment with a robot that can carry out physical tasks, such as object retrieval, could address these needs.

1.1 Robot-assisted Smart Environments

A number of past studies have combined robot assistants with smart home applications (Do et al., 2018; Wilson et al., 2019). These studies primarily focus on elderly healthcare, with the aim of supporting independent living. For instance, Koceski and Koceska (2016) developed a telepresence robot that could drive around and use an extendable arm to grab and fetch small objects. The head of the robot was also equipped with a tablet with an integrated camera. This had two benefits: users received a live stream from the robot's location, so that it could be operated even when out of sight, and they could make two-way video calls to connect with caregivers, relatives, and friends. Participants rated the perceived 'functionality usefulness' of the robot on a five-point Likert scale. Importantly, both the navigation and the manipulator functions received scores of 3.0 or higher, suggesting good user acceptance.

Pruss, E., Prinsen, J., Vrins, A., Ceccato, C. and Alimardani, M.

A BCI-controlled Robot Assistant for Navigation and Object Manipulation in a VR Smart Home Environment.

DOI: 10.5220/0011010800003123

In Proceedings of the 15th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2022) - Volume 1: BIODEVICES, pages 231-237

ISBN: 978-989-758-552-4; ISSN: 2184-4305

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Other studies have shown that telepresence robots can help patients who are limited in their interactions with the outside world feel more socially connected, which could alleviate or prevent psychological problems stemming from loneliness and isolation (Hung et al., 2021; Moyle et al., 2017; Niemelä et al., 2021; Odekerken-Schröder et al., 2020; Wiese et al., 2017).

For instance, Niemelä et al. (2021) conducted field trials lasting 6 to 12 weeks to study the effect of telepresence robots in elderly care facilities. The authors found that telepresence robots made the patients feel as if their family members were present, which had a positive effect on their social well-being. Another study addressing loneliness and isolation during the COVID-19 pandemic, by Odekerken-Schröder et al. (2020), found that in addition to facilitating social ties between humans, the robot itself can be perceived as a social companion that plays the role of a personal assistant, a relational peer, or an intimate buddy. A similar effect was observed by Hung et al. (2021) with a companion robot for dementia patients, which was perceived as a buddy that facilitated social connection and mitigated feelings of loneliness.

While the above-mentioned studies highlight the potential of robot-assisted environments for many user groups, they often focus on able-bodied users who can interact with the robot through speech or a handheld device such as a phone or tablet. As these input devices require motor control, patients with motor impairment would not be able to use such systems. For some of these patients, gaze control or voice control offers an alternative. Tele-operated robots controlled by eye tracking have been studied with a view to assisting disabled patients (Watson et al., 2016; Zhang and Hansen, 2020), and voice control has similarly been used as an additional control option for a smart home prototype (Luria et al., 2017). However, in severe cases of motor impairment, neither speech nor gaze control is possible. Patients with locked-in syndrome, such as those with advanced amyotrophic lateral sclerosis (ALS), are unable to use any traditional input method. For patients who cannot communicate through any physical medium, BCI systems are the only means of expressing their needs or gaining control over assistive devices that could reduce their reliance on caretakers (Wolpaw et al., 2002).

For instance, in a study by Spataro et al. (2017) focusing on ALS patients with locked-in syndrome, a P300-based BCI was used to control the humanoid robot NAO. The authors found that most of the patients were able to control the robot successfully to fetch a glass of water. This suggests that a robot-assisted smart home environment could increase the independence and quality of life of ALS patients. However, the NAO robot used in that study is small and was not used for navigation. The water-fetching capability was therefore tested either on a fixed office desk or on a wooden board laid over a bed, neither of which is a realistic scenario for a bed-bound patient (Spataro et al., 2017).

In this study, we demonstrate a proof of concept for a P300 BCI-controlled assistive robot in a virtual smart home. Specifically, we focus on complex tasks that are needed for independent living but cannot be accomplished without object manipulation. A VR environment is used to simulate an IoT-based smart home, which can be controlled directly for simple tasks such as switching lights on. A virtual robot in the same environment carries out complex tasks, such as object retrieval, once the corresponding intention is decoded from the user's brain activity. VR provides a practical platform for rapid prototyping and user evaluation, whereas physical smart home environments are costly and laborious to build (Holzner et al., 2009). By combining a BCI system with a robot-assisted smart home, the proposed system illustrates how future smart homes could substantially increase the independence and quality of life of patients who are immobile or have reduced mobility.

2 SYSTEM DESIGN

2.1 Virtual Reality Simulation

The VR smart home environment was developed in Unity, a widely used cross-platform game engine. We used the SteamVR add-on, which is compatible with nearly all head-mounted displays (HMDs). The HMD employed in this study was an Oculus Quest 2. The environment consists of one room with kitchen appliances, lights, and basic kitchen furniture (Figure 1). Except for the kettle and toaster, which we modeled in Blender, the 3D assets were taken from the Unity Asset Store (Demon, 2020; Q! Dev, 2018; Rubens, 2017; Studio, 2021). Depending on the user's preference, the environment can be viewed either from a third-person perspective of the whole room or from a moving first-person perspective that follows the robot.

BIODEVICES 2022 - 15th International Conference on Biomedical Electronics and Devices

Figure 1: (a) VR simulation of the smart home environment and (b) virtual robot assistant.

The appliances in the VR room were designed to provide the user with affordances for the actions they can take. During interaction, a custom-designed controller board is displayed in a corner of the field of view in the HMD, offering five navigation commands and four high-level commands for object manipulation and appliance control (see Figure 2). The navigation commands allow the user to move the robot directly in four directions and to stop it. The icons showing household items (toaster, kettle, light bulb, and trash can) give high-level commands that initiate action sequences for four tasks: making toast, turning the kettle on, turning the light on, and cleaning up. These commands include both simple tasks that can be achieved without robot assistance by communicating directly with the smart home appliances (e.g., switching on the lights) and complex tasks that require interaction between the robot and the smart home (e.g., making toast, which requires the robot to insert bread into the toaster and deliver the toast to the user). The cleaning task additionally involves shared control with the user to avoid misidentification of disposable items: once cleaning mode is initiated, the user can move the robot around to locate items that the robot should take to the trash can (outside cleaning mode, the robot does not dispose of items).

Figure 2: Custom-designed P300 controller board for a robot-assisted smart home environment. The arrows and stop icon are for direct user-controlled robot navigation. The remaining four icons give high-level commands for the robot and smart home environment (e.g., make toast, switch on/off the lights, make tea and clean up trash).
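The split between direct navigation, appliance commands and robot tasks, together with the cleaning-mode safeguard, can be expressed as a small dispatcher. The Python sketch below is hypothetical: the cell layout of the 3-by-3 board, the command names, treating the kettle as a direct appliance command and toggling cleaning mode on a repeated trash-can selection are assumptions for illustration, not details of the Unity implementation.

```python
from enum import Enum


class Kind(Enum):
    NAVIGATION = "navigation"   # user steers the robot directly
    APPLIANCE = "appliance"     # smart home device acts on its own
    ROBOT_TASK = "robot_task"   # robot must manipulate objects


# Hypothetical arrangement of the nine icons on the 3-by-3 board.
BOARD = [
    ["toast", "forward", "kettle"],
    ["left", "stop", "right"],
    ["light", "backward", "clean_up"],
]

KIND = {
    "forward": Kind.NAVIGATION, "backward": Kind.NAVIGATION,
    "left": Kind.NAVIGATION, "right": Kind.NAVIGATION, "stop": Kind.NAVIGATION,
    "light": Kind.APPLIANCE,
    "kettle": Kind.APPLIANCE,   # assumption: the kettle is switched on directly
    "toast": Kind.ROBOT_TASK,   # robot inserts bread and delivers the toast
    "clean_up": Kind.ROBOT_TASK,
}


class Dispatcher:
    def __init__(self):
        self.cleaning_mode = False
        self.log = []

    def handle(self, command):
        """Route one decoded command and return a description of the action."""
        kind = KIND[command]
        if command == "clean_up":
            # Assumption: selecting the trash can icon toggles cleaning mode.
            self.cleaning_mode = not self.cleaning_mode
            action = f"cleaning mode {'on' if self.cleaning_mode else 'off'}"
        elif kind is Kind.NAVIGATION:
            action = f"robot moves: {command}"
        elif kind is Kind.APPLIANCE:
            action = f"appliance activated: {command}"
        else:
            action = f"robot task started: {command}"
        self.log.append(action)
        return action

    def dispose(self, item):
        """The robot only carries items to the trash can in cleaning mode."""
        if not self.cleaning_mode:
            return False
        self.log.append(f"robot disposes of {item}")
        return True
```

Keeping disposal behind an explicit mode mirrors the design choice described above: the user, not the robot, decides which items count as trash.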

The VR environment responds to the user's choices, giving visual and auditory feedback once the selected action has been recognized and carried out. Specifically, selecting the toaster is followed by a slice of toast popping out of the toaster, selecting the kettle triggers a smoke animation, selecting the light bulb icon switches on the virtual lamps and increases the luminance of the room, and selecting the trash can icon triggers a cleaning animation performed by the robot assistant.

2.2 P300 BCI

Brain activity is recorded with the Unicorn Hybrid Black system (g.tec neurotechnology GmbH, Austria), a wireless EEG cap (Fig. 3a). Signals are collected from 8 channels positioned according to the international 10-20 system (Fz, C3, Cz, C4, Pz, PO7, Oz, and PO8); the channel positions are shown in Fig. 3b. The ground and reference electrodes are placed on the user's mastoids using disposable adhesive surface electrodes. To inspect the EEG signals and extract P300 potentials, we used the Unicorn Suite software, which includes Unicorn Recorder and Unicorn Speller: the former is used to check signal quality and the latter to run the P300 paradigm.
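The montage and a basic epoching step can be written down explicitly. The Python sketch below assumes a 250 Hz sampling rate and an 800 ms post-flash window, and represents the recording as a plain list of 8-channel samples; it does not use the Unicorn Suite API, and the function names are hypothetical.

```python
FS_HZ = 250  # assumed sampling rate of the amplifier
CHANNELS = ["Fz", "C3", "Cz", "C4", "Pz", "PO7", "Oz", "PO8"]  # 10-20 positions used


def extract_epochs(signal, onsets_s, tmax_s=0.8):
    """Cut a fixed-length window after each flash onset.

    signal   -- list of samples, each a list with one value per channel
    onsets_s -- flash onset times in seconds from the start of the recording
    Onsets whose window would run past the end of the recording are skipped.
    """
    n = int(round(tmax_s * FS_HZ))
    epochs = []
    for onset in onsets_s:
        start = int(round(onset * FS_HZ))
        if 0 <= start and start + n <= len(signal):
            epochs.append(signal[start:start + n])
    return epochs


def channel_trace(epoch, name):
    """Return the samples of one named channel from a multichannel epoch."""
    idx = CHANNELS.index(name)
    return [sample[idx] for sample in epoch]
```

Parietal and occipital sites such as Pz, PO7, Oz and PO8 are where a visual P300 is typically pronounced, which is why a channel-by-name accessor is convenient when inspecting epochs.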

Figure 3: (a) Unicorn Hybrid Black system, (b) electrode positions for the P300 BCI interface.

The Unicorn Speller runs on MATLAB Simulink and includes three main modules: signal acquisition and processing, feature extraction, and classification (Fig. 4) (Guger et al., 2009). The Speller graphical user interface, on which the controller board (Fig. 2) was based, contains rows and columns of stimuli that flash at a certain frequency. The user is asked to focus on one item and count the number of times it flashes while ignoring the other items. Every time the target item flashes, a P300 response is generated and reflected in the EEG signals. By comparing the timing of the P300 responses in the recorded signals with the flashing sequence presented by the interface, the system can identify the target item chosen by the user.
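The matching of flash timing to P300 responses can be illustrated for a row/column layout such as the 3-by-3 controller board. In this hypothetical Python sketch, each flash has already been assigned a classifier score (higher meaning more P300-like); the scores are summed per row and per column over all flash sequences, and the selected item is the intersection of the best row and the best column. The scoring model and the function name are assumptions, not the Unicorn Speller's internals.

```python
from collections import defaultdict


def select_cell(flash_scores, n_rows=3, n_cols=3):
    """Pick the grid cell whose row and column accumulated the most evidence.

    flash_scores -- iterable of (kind, index, score) tuples, where kind is
                    "row" or "col", index identifies the flashed row/column,
                    and score is a classifier output for the epoch after it.
    """
    row_total = defaultdict(float)
    col_total = defaultdict(float)
    for kind, index, score in flash_scores:
        if kind == "row":
            row_total[index] += score
        elif kind == "col":
            col_total[index] += score
        else:
            raise ValueError(f"unknown flash kind: {kind!r}")
    best_row = max(range(n_rows), key=lambda r: row_total[r])
    best_col = max(range(n_cols), key=lambda c: col_total[c])
    return best_row, best_col


if __name__ == "__main__":
    # Five sequences; the user attends to the item in row 1, column 2.
    flashes = []
    for _ in range(5):
        flashes += [("row", r, 1.0 if r == 1 else -0.5) for r in range(3)]
        flashes += [("col", c, 1.0 if c == 2 else -0.5) for c in range(3)]
    print(select_cell(flashes))  # (1, 2)
```

Summing evidence over several sequences is what lets the system tolerate individual flashes whose responses are noisy or missed.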

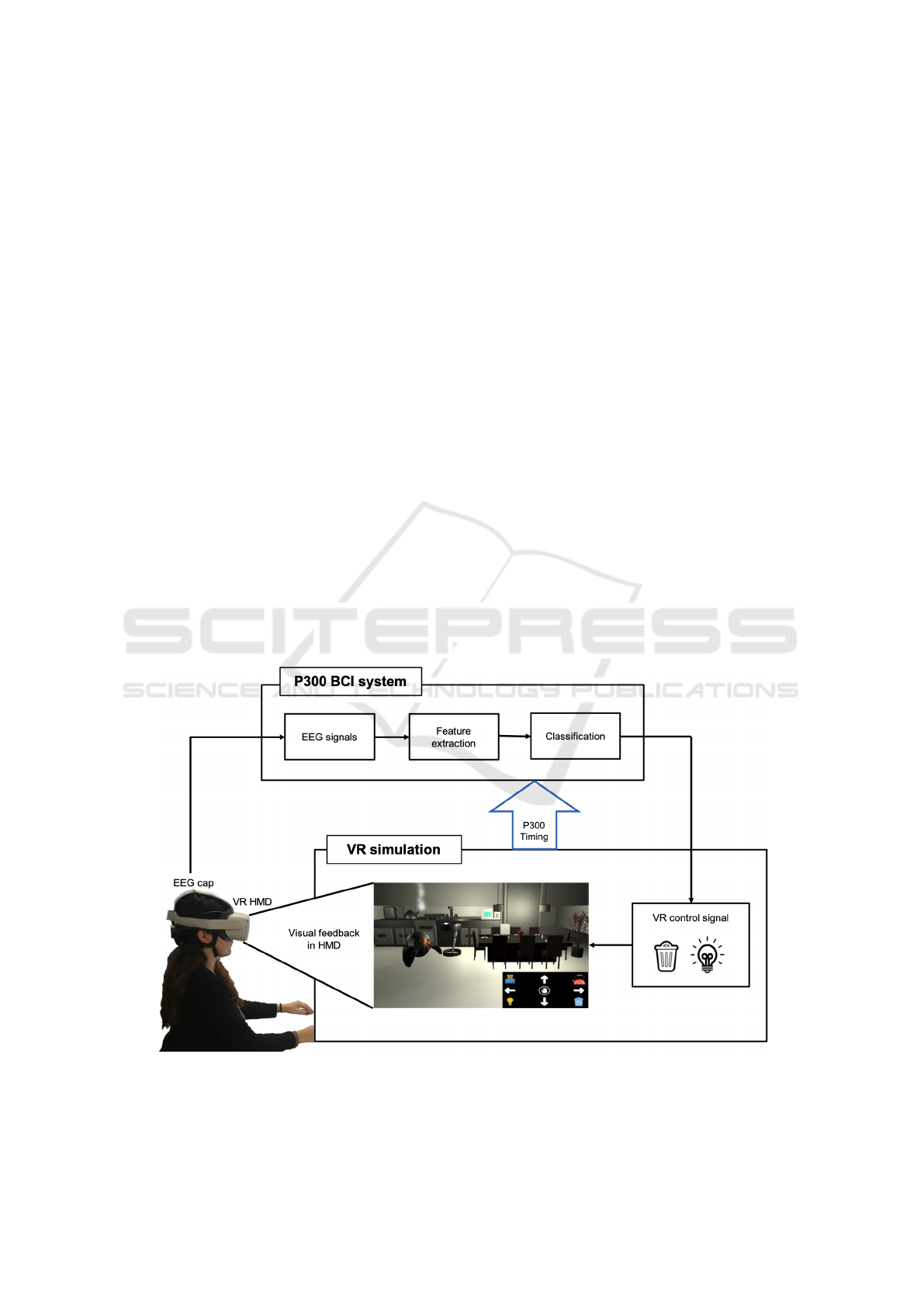

2.3 System Architecture

The general architecture of the solution consists of two components: the VR smart home and the P300 BCI system. The two are connected through the P300 controller board (Fig. 2), which provides visual cues to the user. The controller board presents a 3-by-3 grid of options, enabling both navigation and object manipulation commands for the assistive robot.

Using the hardware described above, we created a BCI-VR loop (Fig. 4) in which the user's brain activity is recorded by the EEG cap while they observe the VR environment in an HMD. The P300 controller board is presented as a second display in the bottom corner of the visual field in the HMD. The P300 BCI system controls the flashing sequence of the icons on the controller board; each flash lasts 150 ms, with no delay between flashes. The user is instructed to select a command by focusing on its icon on the board and silently counting the number of flashes. The BCI system then matches the timing of the flashes with the P300 responses in the EEG to predict the chosen command. The recognized command is translated into a control signal for the VR environment (e.g., switch on the lamp), after which the user receives visual feedback on the chosen action in the HMD.
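The paper does not specify the transport between the BCI software and Unity. As one possible realization, a recognized command could be sent to the VR simulation as a small UDP message. The Python sketch below assumes a JSON payload, a listener on localhost and a hypothetical command ordering, all of which are placeholders rather than the system's actual interface.

```python
import json
import socket

# Hypothetical command order; index i is what the classifier returns for icon i.
COMMANDS = ["forward", "backward", "left", "right", "stop",
            "toast", "kettle", "light", "clean_up"]


def encode_command(index):
    """Translate a decoded command index into a small JSON message."""
    return json.dumps({"command": COMMANDS[index]}).encode("utf-8")


def send_to_vr(index, host="127.0.0.1", port=9000):
    """Send one recognized command to the VR simulation as a UDP datagram.

    host and port are placeholders; a matching listener in the VR
    application would parse the JSON and trigger the appliance or robot.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_command(index), (host, port))
```

UDP keeps latency low and needs no connection handling, at the cost of unacknowledged delivery; a real deployment might prefer a protocol with acknowledgements so that a lost "stop" command cannot go unnoticed.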

3 DISCUSSION

The current study proposed a proof of concept for a BCI-controlled smart home in VR, mediated by a telepresence robot. The proposed system architecture follows the shared control paradigm suggested by Koceski and Koceska (2016), in which the user gives the robot high-level commands, such as moving in a particular direction or grabbing an object, while the lower-level steps needed to accomplish the goal are performed automatically by the robot. Assigning tasks to the robot at a high level is assumed to be more feasible for a user with motor impairment than controlling the low-level movements needed to complete the task. This should keep the training time required to use the system relatively low while still giving the user a sense of control.
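The division of labor in shared control can be made concrete with a toy task decomposition. In this hypothetical Python sketch, one high-level command ("make toast") expands into a fixed list of primitive robot steps that run without further BCI input. The step names are illustrative assumptions, and a real robot would need perception and motion planning behind each of them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str   # primitive the robot performs autonomously
    target: str   # object or location the primitive refers to


# Hypothetical decomposition of the "make toast" command.
PLANS = {
    "make_toast": [
        Step("navigate_to", "bread"), Step("grasp", "bread"),
        Step("navigate_to", "toaster"), Step("insert", "toaster"),
        Step("wait_for", "toaster"), Step("grasp", "toast"),
        Step("navigate_to", "user"), Step("hand_over", "toast"),
    ],
}


def execute(command, run_step):
    """Run every low-level step of a high-level command.

    run_step is a callback that performs one Step and returns True on
    success; execution stops at the first failure (e.g. a blocked path).
    """
    for step in PLANS[command]:
        if not run_step(step):
            return False
    return True


if __name__ == "__main__":
    done = []
    ok = execute("make_toast", lambda step: done.append(step) or True)
    print(ok, len(done))
```

One BCI selection triggers eight autonomous steps here, which illustrates why high-level commands reduce the number of slow P300 selections the user has to make.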

Integrating a telepresence robot with a BCI-controlled smart home offers several advantages to immobile patients. It emulates the ability to move around in an environment when walking or operating a wheelchair is no longer feasible. It can also increase perceived social presence by enabling communication with caregivers or loved ones who are physically out of reach (Koceski and Koceska, 2016; Moyle et al., 2019; Niemelä et al., 2021). Furthermore, the sense of agency and embodiment associated with BCI-controlled telepresence robots has previously been shown to increase user performance on the BCI task and hence the quality of interaction with the system (Alimardani et al., 2013). To achieve this effect, a first-person view of the environment streamed by the robot is essential. In the current VR design, either the first-person or the third-person view can be chosen manually at the beginning of the interaction. In future prototypes, a BCI toggle could be added to let the user switch views dynamically.

Telepresence robots are usually equipped with a mounted camera that provides the user with a first-person view of the environment. A VR environment has some advantages over traditional telepresence interfaces, as the incoming video stream from the robot can be displayed in an HMD instead of on a screen or tablet. In terms of user comfort, an HMD can show the video feed and the P300 controller regardless of the user's head orientation, which makes smart home environments more accessible to patients who find sitting upright difficult. This is especially relevant for ALS patients, as sitting up to look at a screen can cause fatigue and discomfort (Sahal et al., 2021). Another benefit of a VR simulation is that an entire smart home environment, including interactions with appliances and visual feedback, can be simulated realistically without building any physical hardware. This allows rapid prototyping, user evaluation, and customization at low cost (Holzner et al., 2009), which is important because the success of a smart home system depends on its ability to meet user needs.

While BCI-driven robot-assisted smart homes show promise in a lab setting, more work is needed to establish their application in real-life situations for disabled patients. One of the main limitations of the current prototype (and of P300 BCIs in general) is the trade-off between classification speed and accuracy. The number of flash sequences the P300 controller needs to isolate the intended command accurately limits how fast the system can respond. In our pilot study with one trained user, 5 flashes were sufficient for the system to identify the target commands accurately. Improving speed while maintaining accuracy is particularly important for robot navigation and control, as slow response times could make the robot inefficient and prone to accidents. A previous study using a similar setup with a VR headset and the visual P300 paradigm demonstrated that healthy users can reach an average accuracy of 96% with three flash sequences and that, for some users, spelling with only one flash sequence is possible (Käthner et al., 2015). Such a potentially high information transfer rate is promising for real-time robot navigation and control; however, further user evaluation with the proposed prototype is needed to confirm this expectation.
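The speed-accuracy trade-off can be quantified with the standard information transfer rate (ITR) definition, as given for example in Wolpaw et al. (2002). For N commands selected with accuracy P, the bits conveyed per selection B and the ITR in bits per minute, with T the time per selection in seconds, are

$$B = \log_2 N + P \log_2 P + (1 - P)\log_2\frac{1 - P}{N - 1}, \qquad \mathrm{ITR} = B \cdot \frac{60}{T}.$$

With 150 ms flashes and no gap between them, T grows linearly with the number of flash sequences. The Python sketch below computes both quantities; the number of flashes per sequence (6 if the rows and columns of the 3-by-3 board flash, 9 if icons flash individually) and the absence of any pause between selections are assumptions, and the printed values are illustrative upper bounds rather than measured results.

```python
import math

FLASH_S = 0.150  # flash duration from the described setup; no gap between flashes


def selection_time_s(n_sequences, flashes_per_sequence, pause_s=0.0):
    """Seconds needed for one selection; pause_s models any extra delay
    between selections (assumed zero unless stated otherwise)."""
    return n_sequences * flashes_per_sequence * FLASH_S + pause_s


def bits_per_selection(n_classes, accuracy):
    """Information per selection; defined as 0 at or below chance level."""
    if n_classes < 2 or not 0.0 <= accuracy <= 1.0:
        raise ValueError("need n_classes >= 2 and 0 <= accuracy <= 1")
    n, p = n_classes, accuracy
    if p <= 1.0 / n:
        return 0.0
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:  # the error term vanishes at perfect accuracy
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bits_per_min(n_classes, accuracy, seconds_per_selection):
    return bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


if __name__ == "__main__":
    # Hypothetical operating points for the 9-command board at 100% accuracy.
    for n_seq in (1, 3, 5):
        for per_seq in (6, 9):  # row/column vs single-icon flashing
            t = selection_time_s(n_seq, per_seq)
            print(f"{n_seq} seq, {per_seq} flashes/seq: {t:.2f} s/selection, "
                  f"ITR <= {itr_bits_per_min(9, 1.0, t):.1f} bits/min")
```

Because T scales with the number of sequences while B is capped at log2 N, reducing the sequence count pays off only as long as accuracy does not drop too far.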

It is particularly important to test the robot functionalities in a physical environment, where enhanced navigation and obstacle avoidance are necessary. Additionally, potential end-users should be involved as early as possible in a user-centered design process to ensure that the developed functionalities match what disabled users and their caretakers find comfortable and useful (Rogers et al., 2021). Previous research indicates that 84% of surveyed ALS patients would be interested in using BCI assistive technology with a non-invasive electrode cap (Huggins et al., 2011). Other surveys have attempted to determine which BCI functionalities would benefit patients the most (Huggins et al., 2011; Olsson et al., 2010). However, as these studies were not geared towards smart homes, further user evaluations are required to identify the limitations of the proposed solution before it is used in practice.

Future studies should outline the possible functionalities of a robot-assisted smart home based on user needs and priorities, e.g., by conducting a survey similar to that of Huggins et al. (2011). Additionally, simultaneous decoding of multiple EEG features through hybrid BCI methods, or novel BCI paradigms such as inner speech classification (van den Berg et al., 2021), could be employed to increase system accuracy, the number of control commands, and ease of use (Hong and Khan, 2017). For instance, the motor imagery paradigm could be integrated as a more intuitive method for navigation (Su et al., 2011), or the P300 paradigm could be paired with gaze or attention tracking as an on/off switch for active/passive control of the interface (Alimardani and Hiraki, 2020). Such a multimodal interface would enable asynchronous communication with the BCI system whenever the user intends to interact with the environment, which would in turn reduce visual strain from the continuous flashing of the stimuli.

Figure 4: System architecture. The user is instructed to selectively look at one of the blinking icons in the P300 controller board presented at the bottom corner of the VR visual field. The BCI system then predicts the user's selected command based on the P300 response in the EEG signals and subsequently sends a control signal to the VR simulation. This activates the corresponding appliance or the robot in the VR environment, which serves as visual feedback to the user.
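The on/off switch described above can be sketched as a gate between the P300 decoder and the VR environment. In this hypothetical Python example, a continuous engagement estimate between 0 and 1 (standing in for a gaze or attention measure) switches control on and off. The thresholds and the use of hysteresis, i.e. separate on and off thresholds so that small fluctuations do not toggle the state, are design assumptions rather than part of the cited work.

```python
class AsyncGate:
    """Hypothetical hybrid-BCI gate for asynchronous P300 control."""

    def __init__(self, on_threshold=0.7, off_threshold=0.3):
        if off_threshold >= on_threshold:
            raise ValueError("off_threshold must be below on_threshold")
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.active = False  # stimuli would flash only while active

    def update(self, engagement):
        """Feed one engagement estimate in [0, 1]; return the new state."""
        if not self.active and engagement >= self.on_threshold:
            self.active = True
        elif self.active and engagement <= self.off_threshold:
            self.active = False
        return self.active

    def accept(self, command):
        """Pass a decoded command through only during active control."""
        return command if self.active else None


if __name__ == "__main__":
    gate = AsyncGate()
    for engagement in (0.2, 0.8, 0.5, 0.1):
        print(engagement, gate.update(engagement), gate.accept("light"))
```

While the gate is inactive, no commands reach the environment and the flashing could be paused, which is the source of the reduced visual strain mentioned above.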

In sum, robot-assisted smart home systems that focus on the needs of disabled patients could improve their quality of life and reduce their reliance on caretakers, which is beneficial in both healthcare and private home care settings. A VR simulation allows researchers to consider all aspects of the user experience before committing to development and to ensure that potential user groups can benefit from the system in the long term. While more user research, prototyping, and testing are still needed, our application demonstrates the first steps of this process as a proof of concept.

4 CONCLUSION

In this paper, we presented a proof of concept for a BCI-controlled robot assistant in a VR-based smart home that enables patients with motor impairment to conduct complex tasks such as object manipulation and environment control. Our solution integrated a VR environment with a P300 BCI system through a custom-designed, household-oriented controller interface, and demonstrated that BCI commands issued via this interface can be sufficient to control a combination of smart home appliances and an assistive robot. This combination serves as an affordable platform for the evaluation and design of real smart home environments for disabled patients. Further developments in BCI hardware and software, robotics, and VR input methods are required to realize efficient automated assisted living systems.

REFERENCES

Abiri, R., Borhani, S., Sellers, E. W., Jiang, Y., and Zhao, X. (2019). A comprehensive review of EEG-based brain-computer interface paradigms. Journal of Neural Engineering, 16.

Alimardani, M. and Hiraki, K. (2020). Passive brain-computer interfaces for enhanced human-robot interaction. Frontiers in Robotics and AI, 7.

Alimardani, M., Nishio, S., and Ishiguro, H. (2013). Humanlike robot hands controlled by brain activity arouse illusion of ownership in operators. Scientific Reports, 3:2396.

Demon, R. (2020). Robot sphere. https://assetstore.unity.com/packages/3d/characters/robots/robot-sphere-136226.

Do, H. M., Pham, M., Sheng, W., Yang, D., and Liu, M. (2018). RiSH: A robot-integrated smart home for elderly care. Robotics and Autonomous Systems, 101:74–92.

Edlinger, G. and Guger, C. (2011). Social environments, mixed communication and goal-oriented control application using a brain-computer interface.

Edlinger, G., Holzner, C., Groenegress, C., Guger, C., and Slater, M. (2009). Goal-oriented control with brain-computer interface. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 5638 LNAI:732–740.

Fazel-Rezai, R., Allison, B. Z., Guger, C., Sellers, E. W., Kleih, S. C., and Kübler, A. (2012). P300 brain computer interface: Current challenges and emerging trends. Frontiers in Neuroengineering, 0:1–30.

Guger, C., Daban, S., Sellers, E., Holzner, C., Krausz, G., Carabalona, R., Gramatica, F., and Edlinger, G. (2009). How many people are able to control a P300-based brain-computer interface (BCI)? Neuroscience Letters, 462:94–98.

Holzner, C., Guger, C., Edlinger, G., Gronegress, C., and Slater, M. (2009). Virtual smart home controlled by thoughts. 2009 18th IEEE International Workshops on Enabling Technologies: Infrastructures for Collaborative Enterprises, pages 236–239.

Hong, K.-S. and Khan, M. J. (2017). Hybrid brain–computer interface techniques for improved classification accuracy and increased number of commands: a review. Frontiers in Neurorobotics, 11:35.

Huggins, J. E., Wren, P. A., and Gruis, K. L. (2011). What would brain-computer interface users want? Opinions and priorities of potential users with amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis, 12:318–324.

Hung, L., Gregorio, M., Mann, J., Wallsworth, C., Horne, N., Berndt, A., Liu, C., Woldum, E., Au-Yeung, A., and Chaudhury, H. (2021). Exploring the perceptions of people with dementia about the social robot PARO in a hospital setting. Dementia, 20.

Koceski, S. and Koceska, N. (2016). Evaluation of an assistive telepresence robot for elderly healthcare. Journal of Medical Systems, 40:121.

Käthner, I., Kübler, A., and Halder, S. (2015). Rapid P300 brain-computer interface communication with a head-mounted display. Frontiers in Neuroscience, 9.


Luria, M., Hoffman, G., and Zuckerman, O. (2017). Comparing social robot, screen and voice interfaces for smart-home control. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, pages 580–628.

Mattout, J., Perrin, M., Bertrand, O., and Maby, E. (2015). Improving BCI performance through co-adaptation: Applications to the P300-speller. Annals of Physical and Rehabilitation Medicine, 58:23–28.

Moyle, W., Arnautovska, U., Ownsworth, T., and Jones, C. (2017). Potential of telepresence robots to enhance social connectedness in older adults with dementia: An integrative review of feasibility.

Moyle, W., Jones, C., Dwan, T., Ownsworth, T., and Sung, B. (2019). Using telepresence for social connection: views of older people with dementia, families, and health professionals from a mixed methods pilot study. Aging and Mental Health, 23:1643–1650.

Niemelä, M., van Aerschot, L., Tammela, A., Aaltonen, I., and Lammi, H. (2021). Towards ethical guidelines of using telepresence robots in residential care. International Journal of Social Robotics, 13:431–439.

Odekerken-Schröder, G., Mele, C., Russo-Spena, T., Mahr, D., and Ruggiero, A. (2020). Mitigating loneliness with companion robots in the COVID-19 pandemic and beyond: an integrative framework and research agenda. Journal of Service Management, 31:1149–1162.

Olsson, A. G., Markhede, I., Strang, S., and Persson, L. I. (2010). Differences in quality of life modalities give rise to needs of individual support in patients with ALS and their next of kin. Palliative & Supportive Care, 8:75–82.

Q! Dev (2018). Too many items: Kitchen props. https://assetstore.unity.com/packages/3d/props/too-many-items-kitchen-props-127635.

Rogers, W., Kadylak, T., and Bayles, M. (2021). Maximizing the benefits of participatory design for human–robot interaction research with older adults. Human Factors.

Rubens, R. (2017). Trash bin. https://assetstore.unity.com/packages/3d/props/furniture/trash-bin-96670.

Sahal, M., Dryden, E., Halac, M., Feldman, S., Heiman-Patterson, T., and Ayaz, H. (2021). Augmented reality integrated brain computer interface for smart home control. International Conference on Applied Human Factors and Ergonomics, pages 89–97.

Spataro, R., Chella, A., Allison, B., Giardina, M., Sorbello, R., Tramonte, S., Guger, C., and Bella, V. L. (2017). Reaching and grasping a glass of water by locked-in ALS patients through a BCI-controlled humanoid robot. Frontiers in Human Neuroscience, 11.

Studio, A. (2021). Polygon dining room. https://assetstore.unity.com/packages/3d/props/interior/polygon-dining-room-199435.

Su, Y., Qi, Y., Luo, J. X., Wu, B., Yang, F., Li, Y., Zhuang, Y. T., Zheng, X. X., and Chen, W. D. (2011). A hybrid brain-computer interface control strategy in a virtual environment. Journal of Zhejiang University SCIENCE C, 12(5):351–361.

van den Berg, B., van Donkelaar, S., and Alimardani, M. (2021). Inner speech classification using EEG signals: A deep learning approach. 2021 IEEE 2nd International Conference on Human-Machine Systems (ICHMS), pages 1–4.

Watson, G. S., Papelis, Y. E., and Hicks, K. C. (2016). Simulation-based environment for the eye-tracking control of tele-operated mobile robots.

Wiese, E., Metta, G., and Wykowska, A. (2017). Robots as intentional agents: Using neuroscientific methods to make robots appear more social.

Wilson, G., Pereyda, C., Raghunath, N., de la Cruz, G., Goel, S., Nesaei, S., Minor, B., Schmitter-Edgecombe, M., Taylor, M. E., and Cook, D. J. (2019). Robot-enabled support of daily activities in smart home environments. Cognitive Systems Research, 54:258–272.

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain–computer interfaces for communication and control. Clinical Neurophysiology, 113:767–791.

Zhang, G. and Hansen, J. P. (2020). People with motor disabilities using gaze to control telerobots. Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems.
