Eye Tracking Calibration based on Smooth Pursuit with Regulated Visual Guidance

Yangyang Li [1,2,a], Lili Guo [1,b], Guangbin Sun [1,*,c], Rongrong Fu [3,d], Zhen Yan [1,e] and Ji Liang [1,f]

[1] Technological and Engineering Center for Space Utilization of Chinese Academy of Sciences, No. 9 Deng Zhuang South Rd., Beijing, 100094, China

[2] University of Chinese Academy of Sciences, No. 19(A) Yuquan Rd., Beijing, 100049, China

[3] Yanshan University, No. 438 West Hebei Avenue, Qinhuangdao, Hebei, 066004, China

Keywords: Eye Tracking, Smooth Pursuit, Gaze Calibration, Visual Guidance, Neural Network.

Abstract: Eye tracking calibration based on smooth pursuit has the characteristics of rapidity and convenience, but most

smooth pursuit calibration methods are based on spontaneous and passive gazes. The spatial-temporal

characteristics of the target movement can significantly affect the tracking performance, but few works have

performed calibration considering the effects of both the spatial and temporal variance of the smooth pursuit

target. Therefore, we propose an offline smooth pursuit calibration featuring actively regulated speed under specially designed visual guidance paths. In our preliminary experiments, we found an obvious correlation between the eye movement velocity and the gaze point measurement error. In particular, when the gaze velocity exceeded 6°/s, the accuracy and precision of the eye-tracking system were noticeably lower. Based on these findings, the visual guidance trajectory was regulated, with the speed kept below 6°/s. The smooth pursuit calibration was combined with a neural network learning method. The

results showed that the mean absolute error was reduced from 1.0° to 0.4°, and the full calibration process

took only approximately 45 seconds.

1 INTRODUCTION

Human eye gaze behaviour has the dual function of

information acquisition and signal transmission

(Valtakari et al., 2021). Eye tracking is a sensor

measurement technology that is generally used in

human-computer interaction. Analysing the eye

movement of users through eye tracking is helpful for

understanding some of the user’s intentions (Hansen & Ji, 2009). Humans usually prefer the

human-computer interaction mechanism with a

functionally intuitive interface and simple setting

procedures for ease and productiveness, while the

complex and incomprehensible human-computer

interaction mechanism will impose a burden on users

due to poor usability. From this perspective, interaction through eye tracking is intuitive and simple.

a https://orcid.org/0000-0002-5769-7596
b https://orcid.org/0000-0002-4390-2640
c https://orcid.org/0000-0002-2939-2066
d https://orcid.org/0000-0003-0350-7619
e https://orcid.org/0000-0003-2757-1623
f https://orcid.org/0000-0001-9068-7990

Eye tracking systems can be divided into two

types: invasive and noninvasive trackers. Most

tracking systems in practice are noninvasive based on

optical principles. Noninvasive eye tracking systems

can be divided into desktop and head-mounted

counterparts in practice (Mahmud, Lin, & Kim,

2020). Desktop eye tracking systems are based on

proximal fixed monitors and interact with digital

information from sensor streams and/or actionable

intelligent agents. They can work far away from the

target object, such as SMI RED250, Tobii Eye

Tracker5, Kinect and so on. This kind of equipment

contains a user-oriented camera, which is used to

capture the user's facial image. According to the

Li, Y., Guo, L., Sun, G., Fu, R., Yan, Z. and Liang, J.

Eye Tracking Calibration based on Smooth Pursuit with Regulated Visual Guidance.

DOI: 10.5220/0011524900003332

In Proceedings of the 14th International Joint Conference on Computational Intelligence (IJCCI 2022), pages 417-425

ISBN: 978-989-758-611-8; ISSN: 2184-3236

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)


extracted features from the facial image, the gaze

point on the screen can be calculated. Head-mounted

eye tracking systems, such as SMI Glasses and Tobii Pro Glasses 3, contain at least two eye cameras that capture eye images directly, together with a scene camera that captures the scene image, and they estimate the user's fixation points from the eye images and the scene image. Head-mounted equipment is more flexible, but its compact, portable design limits the performance of hardware such as cameras and computing elements, which results in poor data sampling and processing ability.

In addition, the head-mounted device has two other

limitations: eccentric eye movement (the eye tracking

camera of the head-mounted device has difficulty

capturing eye movement close to the edge) and

coordinate system variation (when tracking the target

object in the real scene, the position of the target

object will change with the user's head position and

user movement, and there is a large difference in the

data representation of the same target tracking

between users).

For remote and noninvasive eye tracking systems,

the commonly used technology is Pupil Center-Corneal Reflection (PCCR) (Larrazabal, Cena, & Martínez, 2019; Zhu & Ji, 2005). Near-infrared light is used to generate a reflection (glint) on the surface of the cornea, and the camera captures the resulting image. The corneal reflection and the pupil are identified in this image, and the vector between them, called the fixation vector, is established. Based on the mapping model, the gaze position is then calculated from this vector.

To establish the mapping model, parameters

(Guestrin & Eizenman, 2006), such as the pupil

center, corneal curvature center, optical axis and

visual axis, need to be acquired. The parameters of

the eyeball model are subject dependent, so user

calibration is needed before the normal use of an eye

tracking system to collect data of parameters with

individual differences; meanwhile, the process and

method of calibration greatly affect the quality of eye

tracking data (Holmqvist et al., 2011). In addition, over time the gaze point estimated by the mapping model of the eye tracking system becomes inaccurate because of parameter drift, which occurs when the system characteristics or the user's physiological characteristics change. However, at run time there is no automatic method to assess when the precision of the mapping model declines, and online compensation is difficult.

Therefore, routine offline calibration is a necessary

task (Gomez & Gellersen, 2018). At present, the

existing calibration methods come in two categories:

calibration based on distributed static target points

and smooth pursuit calibration. Calibration based on

distributed static target points displays a set of

specific static target points on the screen and requires

the user to gaze at each of the target points for a period

of time, one at a time (Harezlak, Kasprowski, &

Stasch, 2014). This calibration method varies with

different researchers in the number of target points,

layout, length of time to gaze at the target point, and

type of mapping algorithm. Theoretically, the more

points there are, the higher the precision of the model

estimation. However, the calibration process is

usually characterized by tedious repetition, user

fatigue and low ease of use (Drewes, Pfeuffer, & Alt,

2019). Therefore, researchers have proposed smooth

pursuit calibration (Pfeuffer, Vidal, Turner, Bulling,

& Gellersen, 2013), making use of the user's attention

mechanism by naturally gazing at moving targets for

calibration. Their results show that, compared with the standard 9-point static calibration, smooth pursuit calibration covers a wider area, improves the calibration precision, shortens the calibration time and is easier to use.

The smooth pursuit method has a unique

advantage for people with difficulty in gaze fixation.

In 2017, Pfeuffer (Pfeuffer et al., 2013) proposed

completing the calibration task by using the smooth

pursuit calibration method for people with difficulty

concentrating (such as autistic children). The

experimental results show that when the calibration

target points move along the horizontal uniform path,

the wave horizontal path and vertical path, the mean

error can reach within 1°, which is better than the

fixation error of 1.15° with 28 static points, but the

whole calibration process takes 30 s to 60 s. In 2018, Gomez and Gellersen (Gomez & Gellersen, 2018) proposed a new smooth pursuit calibration mechanism named smooth-i, which detects the motion area by motion matching and collects motion points to create a calibration profile. When the user tracks a moving target and the line of sight matches the contour trajectory, data points are collected automatically. If inaccurate points are found, the mapping model is recalibrated. In 2019, Abdrabou et al. (Abdrabou, Mostafa, Khamis, & Elmougy, 2019) applied gaze estimation based on smooth pursuit to text input for disabled users, exploiting the speed and precision of smooth pursuit, and improved the average input rate. Most of the studies on smooth

tracking calibration are online calibrations with

moving targets during the use of eye tracking

equipment. Although a separate offline calibration

ROBOVIS 2022 - Workshop on Robotics, Computer Vision and Intelligent Systems


step is omitted and the fixation error can reach 1°, this approach does not apply when no moving target is present during use of the eye tracking equipment; therefore, a deliberate calibration with visual guidance has to be invoked from time to time.

Strategies for fine calibration to reduce the fixation error fall into two main groups: calibration-mode-based calibration and data-driven recalibration. The calibration-mode-based approach reduces errors caused by system variables by choosing an appropriate layout of distributed static targets and a method of data integration. For example, Adithya et al. (Adithya, Hanna, Kumar, & Chai, 2018) used multiple sets of calibration points in the initial calibration to reduce errors; Harrar et al. (Harrar, Le Trung, Malienko, & Khan, 2018) proposed using five different types of calibration with distributed static target points (directly watching the calibration point, or approaching an invisible calibration point by prompt) and averaging the results. The averaged result has a lower error than that of a single calibration method. The data-driven calibration method further reduces the error by modeling the residual error from the initial calibration data, and it is also called secondary calibration or recalibration. It does not need to modify the existing calibration program or reconstruct the usual white-box mapping model of gaze estimation; it only needs to process the collected eye movement data by numerical methods.

For example, Zhang and Hornof (Zhang & Hornof, 2014) proposed using independent quadratic polynomial regressions to estimate the error in the X and Y directions and thereby compensate the measurement results of the eye tracker. Gomez and Gellersen (Gomez & Gellersen, 2018) proposed using a quadratic polynomial as a calibration function and substituting the movement trajectory into it to calculate the error. If the error is greater than a set threshold, real-time recalibration is performed to reduce the error. Zineb et al. (Zineb, Rachid, & Talbi) used thin plate splines (TPS) as a surrogate model for a surrogate-based optimization algorithm, which improved the speed and precision of calibration.
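To make the idea of data-driven recalibration with independent quadratic polynomial regressions concrete, the following is a minimal sketch rather than the implementation of any cited work. It assumes a full second-order polynomial in the measured gaze coordinates (terms 1, x, y, xy, x², y²) and an ordinary least-squares fit; the function names are hypothetical.

```python
import numpy as np


def quadratic_design_matrix(x, y):
    """Design matrix with columns 1, x, y, x*y, x^2, y^2 (assumed term set)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.column_stack([np.ones_like(x), x, y, x * y, x**2, y**2])


def fit_error_model(x_meas, y_meas, x_target, y_target):
    """Least-squares fit of the residual (target - measured), one column per axis."""
    x_meas = np.asarray(x_meas, dtype=float)
    y_meas = np.asarray(y_meas, dtype=float)
    A = quadratic_design_matrix(x_meas, y_meas)
    residual = np.column_stack([
        np.asarray(x_target, dtype=float) - x_meas,
        np.asarray(y_target, dtype=float) - y_meas,
    ])
    coeffs, *_ = np.linalg.lstsq(A, residual, rcond=None)
    return coeffs  # shape (6, 2): column 0 models the X error, column 1 the Y error


def apply_error_model(coeffs, x_meas, y_meas):
    """Add the predicted residual to the raw measurement."""
    x_meas = np.asarray(x_meas, dtype=float)
    y_meas = np.asarray(y_meas, dtype=float)
    correction = quadratic_design_matrix(x_meas, y_meas) @ coeffs
    return x_meas + correction[:, 0], y_meas + correction[:, 1]
```

The two output columns are fitted independently, so the X and Y corrections do not share coefficients, which mirrors the "independent" regressions described above.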

We modeled the residual error by a data-driven

method, considered the effect of the static distribution

of target points on the data, and proposed a smooth

pursuit calibration method based on the modulated

guiding trajectory. This method made use of the data

from the initial calibration of the eye tracking

equipment. We established a calibration trajectory in

the recalibration task and modulated its spatial-

temporal characteristics to reduce the influence of

error sources, thereby improving the calibration

performance. Through systematic experiments, we

found that the gaze point error of the smooth curve

trajectory has a correlation with the velocity curve of

the gaze trajectory. When the velocity exceeds a

threshold (approximately 6°/s is found to be a critical

point), the gaze error in the X and Y directions

increases significantly with increasing velocity in

both the X and Y directions. Based on these findings

and considering the general characteristics of the

spatial distribution of eye tracking error, the guidance

trajectory was designed, and its speed was modulated

within an appropriate range. The target calibration

point moves according to the guidance trajectory, and

gaze data are collected at the same time. Without

changing the underlying physical mapping model of

eye movement tracking equipment, a gaze error

compensation model was established through neural

network learning. The experimental results show that the mean error of the user gaze point estimation decreases from an initial value of approximately 1.0° to approximately 0.4°, and the whole calibration process takes approximately 45 s, making the method easy to use, fast and precise.

2 METHODS

2.1 Smooth Pursuit with Regulated

Visual Guidance and Tracking

Data Collection for Recalibration

The moving target point of the smooth pursuit

calibration program is composed of a circle with a

diameter of 20 pix and a center diameter of 10 pix.

The initial position is located at the center of the blue

calibration plane of the interface, as shown in Figure

2. The calibration gaze point (the green point) follows

the spatial trajectory with certain temporal

characteristics. In our earlier experiments, we tested two calibration modes, with the smooth calibration trajectory either hidden or visible in the calibration window. The two modes had little influence on data quality.

In the following experiment in this work, the

calibration window was configured to show the

calibration trajectory while collecting data.

According to the motion of the calibration points, a

large number of target points can be extracted from

the continuous gaze data. In the calibration window


of 1600x900 pixels, the calibration data collection

process takes approximately 35 s. Under the condition that the time stamp of a target point is no more than 5 ms ahead of the time stamp of the matched eye tracking sample, no fewer than 2000 groups of target point data can be collected on the trajectory. According to the conclusions of Harezlak et al. (Harezlak et al., 2014) and our own repeated experimental analysis, the eye movement data are unreliable within the first 600 ms to 700 ms after the smooth pursuit calibration starts to collect gaze data. Therefore, in the proposed calibration process, the target point jumps in place for 750 ms after the calibration start button is clicked, for two reasons: (1) to avoid collecting unreliable data; and (2) to remind users that the smooth pursuit calibration is about to start and that they need to pay attention.
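The pairing rule and the warm-up period described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: both streams carry timestamps in microseconds, the target timestamps are sorted, each gaze sample is paired with the latest target sample at or before it, and the function name `pair_samples` and its parameters are hypothetical.

```python
import numpy as np


def pair_samples(t_target_us, t_gaze_us, start_us, warmup_ms=750.0, max_lag_ms=5.0):
    """Return (gaze_idx, target_idx) of paired samples.

    A gaze sample is kept when the latest target sample precedes it by at
    most max_lag_ms and it was recorded at least warmup_ms after start_us.
    """
    t_target_us = np.asarray(t_target_us, dtype=np.int64)
    t_gaze_us = np.asarray(t_gaze_us, dtype=np.int64)

    # Index of the latest target timestamp <= each gaze timestamp (-1 if none).
    idx = np.searchsorted(t_target_us, t_gaze_us, side="right") - 1
    has_previous = idx >= 0
    idx_safe = np.clip(idx, 0, len(t_target_us) - 1)

    lag_us = t_gaze_us - t_target_us[idx_safe]
    after_warmup = (t_gaze_us - start_us) >= warmup_ms * 1000.0
    keep = has_previous & (lag_us <= max_lag_ms * 1000.0) & after_warmup

    return np.nonzero(keep)[0], idx_safe[keep]
```

With a 60 Hz gaze stream and a 35 s trajectory, roughly 2100 gaze samples are available before filtering, which is in line with the figure of no fewer than 2000 groups quoted above.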

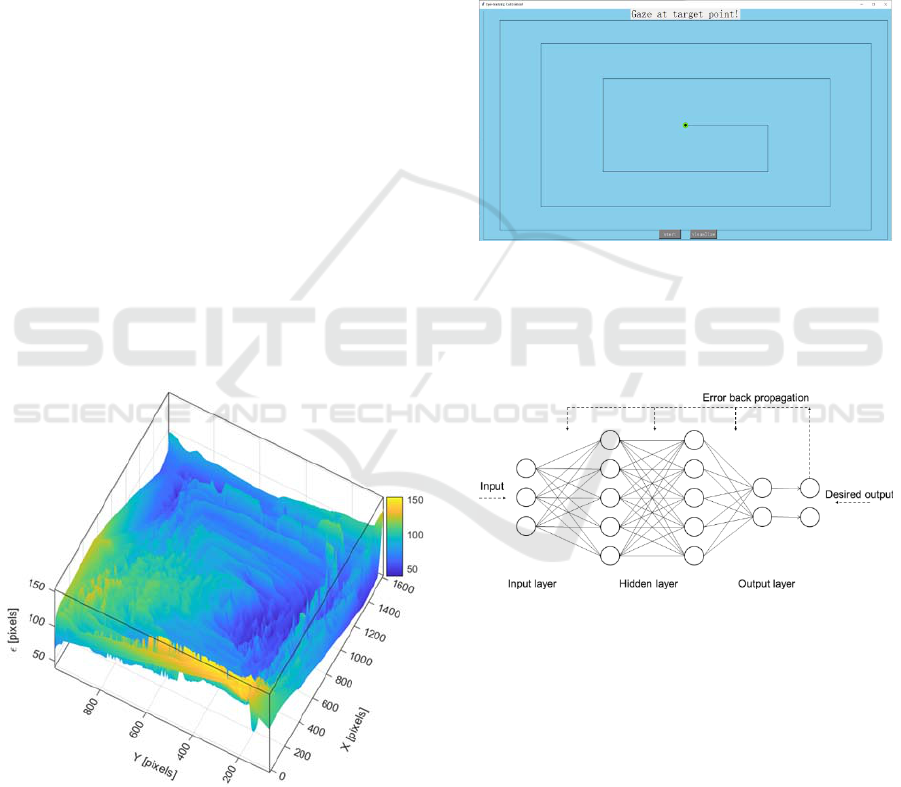

Because the error of the eye tracking system tends to concentrate toward the border, as shown in Figure 1, and this distribution is relatively stable for a specific device, initial calibration configuration and individual user, an appropriate trajectory can be roughly determined from the spatial error distribution before fine recalibration. An example of a spatial guidance trajectory with an uneven density distribution is shown in Figure 2. As shown there, the pursuit calibration trajectory is piecewise smooth, with a higher line density closer to the border.

Figure 1: Typical spatial distribution of gaze error (where

X and Y are gaze coordinates along horizontal and vertical

directions; ε is gaze error defined by Euclidean distance).

In addition, the temporal modulation of the guidance trajectory needs to be considered. Given the physiological characteristics of human visual tracking, we conjecture that motion speed is an important factor affecting the trajectory calibration error. In preliminary experiments, repeated linear paths with different uniform velocities and curved paths with varying velocities were designed to verify the correlation between speed and tracking error and to determine an appropriate speed threshold, which provides the basis for regulating the calibration trajectory. The repeatability analysis of the tracking data in the preliminary experiments also provides evidence of the potential for improving accuracy.

Figure 2: Spatially nonuniform square spiral trace on the X-Y plane with limited speed designed for model learning.
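A guidance path of the kind shown in Figure 2 can be sketched as below. This is only one possible construction under assumptions that are not taken from the paper: the ring spacing follows a quadratic law so that lines are denser near the border, the path starts at the window center, and the target is resampled at a constant speed given in pixels per second. The function names, the number of rings, the margin and the speed value in the usage note are all hypothetical.

```python
import numpy as np


def square_spiral_waypoints(width, height, n_rings=6, margin=0.95):
    """Corner points of an axis-aligned rectangular spiral starting at the center."""
    cx, cy = width / 2.0, height / 2.0
    u = np.arange(1, n_rings + 1) / n_rings
    # Ring size grows quickly at first and slowly near the border,
    # so neighbouring lines are closer together toward the border.
    frac = margin * (1.0 - (1.0 - u) ** 2)
    half_w, half_h = frac * cx, frac * cy

    x, y = cx, cy
    pts = [(x, y)]
    for k in range(n_rings):
        next_half_h = half_h[min(k + 1, n_rings - 1)]
        x = cx + half_w[k]      # move along +X
        pts.append((x, y))
        y = cy + half_h[k]      # move along +Y
        pts.append((x, y))
        x = cx - half_w[k]      # move along -X
        pts.append((x, y))
        y = cy - next_half_h    # move along -Y to the next ring's edge
        pts.append((x, y))
    return np.array(pts)


def resample_constant_speed(waypoints, speed_px_s, sample_hz=60.0):
    """Sample the polyline at equal arc-length steps of speed_px_s / sample_hz."""
    seg = np.hypot(*np.diff(waypoints, axis=0).T)
    arc = np.concatenate([[0.0], np.cumsum(seg)])
    t = np.arange(0.0, arc[-1] / speed_px_s, 1.0 / sample_hz)
    dist = speed_px_s * t
    x = np.interp(dist, arc, waypoints[:, 0])
    y = np.interp(dist, arc, waypoints[:, 1])
    return t, np.column_stack([x, y])
```

For example, `resample_constant_speed(square_spiral_waypoints(1600, 900), speed_px_s=400.0)` yields target positions for the 1600x900 calibration window; the speed in pixels per second should be chosen so that the corresponding angular speed stays below the 6°/s threshold for the actual viewing geometry.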

2.2 Recalibration Model for Regulated

Smooth Pursuit

Figure 3: Structural diagram of the BP neural network.

The data-driven recalibration method is widely used. The error model is learned from the data, and the error characteristics are extracted to reduce the error. Many researchers (Blignaut, Holmqvist, Nyström, & Dewhurst, 2014; Drewes et al., 2019; Huang, Kwok, Ngai, Chan, & Leong, 2016; Zhang & Hornof, 2014) have established the recalibration model through regression. Regression is simple and fast to execute, but simple linear regression and low-order polynomial regression cannot obtain a good fit. The BP neural network (Rumelhart, Hinton, & Williams, 1986) is a network model trained by error backpropagation. As shown in Figure 3, the BP network contains an input layer, an output layer, and one or more hidden layers. There are no feedback paths across layers and no interconnections within a layer; neurons in adjacent layers are fully connected. Although its structure is simple, it can efficiently learn a large number of input-output mapping relationships, taking less than 10 s with more than 2000 data points in our case. The experimental results of Harezlak et al. (Harezlak et al., 2014) also show that artificial neural networks have a good error calibration capability.

3 EXPERIMENTS

3.1 Equipment

In this study, we use the Tobii 4c eye tracking system,

which is based on the PCCR principle (as shown in

Figure 4), with a recommended working distance of

50 ~ 95 cm and a near infrared (NIR 850 nm) light

source. The computer is a Dell XPS8940 PC with a 64-bit Windows 10 OS and a 3.5 GHz Intel i9 CPU. The eye tracking equipment is installed under a 28-inch Samsung monitor with a resolution of 3840x2160. All data samples are streamed at 60 Hz from the official Tobii driver with its initial calibration, which is performed once and then left unchanged.
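Errors in this paper are reported both in pixels and in degrees of visual angle, so it may help to see how the two relate for the monitor described above. The sketch below derives the pixel pitch from the stated 28-inch diagonal and 3840x2160 resolution; the viewing distance is not stated in the paper, so the 650 mm used in the usage note is purely an assumed value inside the recommended 50 to 95 cm range, and the function names are hypothetical.

```python
import math


def pixel_pitch_mm(diagonal_inch, res_x, res_y):
    """Physical size of one pixel, assuming square pixels."""
    return diagonal_inch * 25.4 / math.hypot(res_x, res_y)


def pixels_per_degree(distance_mm, pitch_mm):
    """Number of pixels subtended by 1 degree of visual angle at screen center."""
    return 2.0 * distance_mm * math.tan(math.radians(0.5)) / pitch_mm


def degrees_to_pixels(angle_deg, distance_mm, pitch_mm):
    """Convert a small visual angle (or angular speed per second) to pixels."""
    return angle_deg * pixels_per_degree(distance_mm, pitch_mm)
```

Under the assumed distance, `pixels_per_degree(650.0, pixel_pitch_mm(28, 3840, 2160))` gives about 70 pixels per degree, which is of the same order as the ratio between the pixel and degree errors listed in Table 1.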

Figure 4: Working environment of eye tracking equipment

(A refers to the calibration window; B refers to the eye

tracking equipment).

3.2 Procedure and Results

During the experiment, to verify the influence of the

speed of the target gaze point on the tracking error,

we repeated the measurement and collected the eye

movement fixation data for analysis. The eye fixation

data were measured by the movement of the eyeball

following the target point along the horizontal line

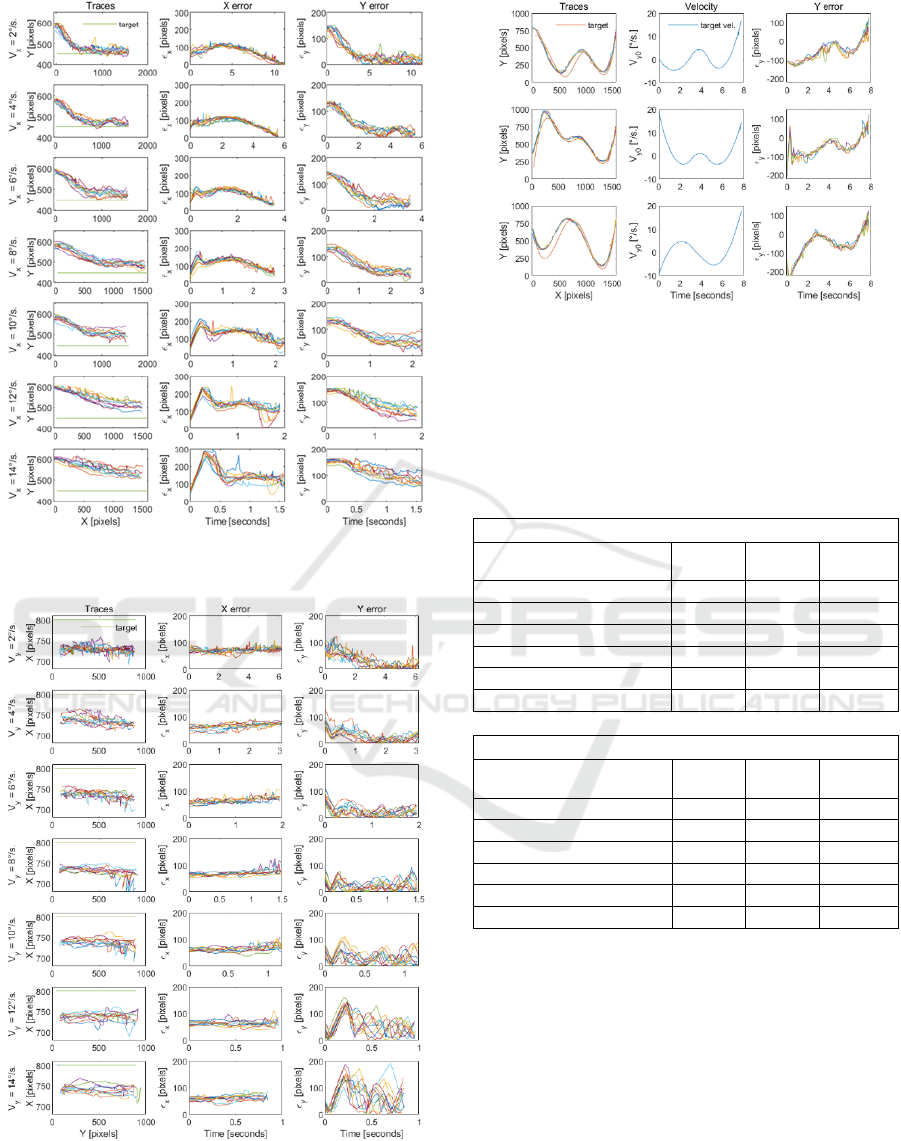

and the vertical line. Seven groups of experiments

were performed at speeds of 2, 4, 6, 8, 10, 12 and 14

°/s. Each group of experiments was repeated ten

times. Figure 5 shows the spatial traces and absolute

error distribution of measured gaze points of all

groups/repetitions with eyeballs following the target

points along the horizontal straight trajectory. Similarly, for the vertical straight-line trajectory, Figure 6 shows the spatial traces and absolute error distribution of the measured gaze points of all groups/repetitions with eyeballs following the moving target fixation point.

It can be observed from the error distributions in Figures 5 and 6 that the gaze error of smooth pursuit under repeated measurements is not random: as the gaze speed along each axis increases, the absolute error gradually increases, and so does the dispersion of its distribution. However, when the gaze velocities in the X and Y directions are both within 6°/s, the directional errors do not differ markedly between groups and tend to be stable within each group. To further verify the effect

of gaze movement speed on gaze error, we conducted

an experiment of variable-speed gaze movement in

the Y direction. We set three groups of gaze movement trajectories with large variance, and each group was repeated five times. The target guided the gaze

point to move at a constant speed in the horizontal

direction and at a variable speed in the vertical

direction. The spatial traces, vertical velocity

distribution and vertical error distribution

(nonabsolute value) of the measured gaze points of all

groups/repetitions with eyes following the movement

of the target gaze point are shown in Figure 7.

From the data distribution of Figure 7, we can

further observe that there is an obvious correlation

between the velocity curve and the error curve in the

corresponding axis. When the velocity curve reaches

the extreme value, the direction error also reaches the

extreme value in the corresponding section with slight

phase shifting.
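The relationship between the velocity curve and the signed error curve, including a small phase shift, can be examined with a lagged correlation. The following is a minimal sketch of such an analysis, not the procedure used to produce Figure 7; it assumes uniformly meaningful timestamps in seconds and positions already converted to degrees, and the function names are hypothetical.

```python
import numpy as np


def axis_velocity(position_deg, t_s):
    """Numerical derivative of the position along one axis, in deg/s."""
    return np.gradient(np.asarray(position_deg, dtype=float),
                       np.asarray(t_s, dtype=float))


def lagged_correlation(velocity, error, max_lag):
    """Pearson correlation between velocity[t] and error[t + lag], lag in samples."""
    velocity = np.asarray(velocity, dtype=float)
    error = np.asarray(error, dtype=float)
    n = len(velocity)
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            v, e = velocity[: n - lag], error[lag:]
        else:
            v, e = velocity[-lag:], error[: n + lag]
        result[lag] = float(np.corrcoef(v, e)[0, 1])
    return result
```

The lag with the highest correlation, `max(corr, key=corr.get)`, estimates by how many samples the error curve trails (positive lag) or leads (negative lag) the velocity curve.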

Based on the characteristics observed in Figure 5, Figure 6 and Figure 7, we set the moving speed of the calibration fixation point to 5.4°/s (equivalent to 2 pix/s for the experimental device) along the target calibration trajectory, and the data generated are used to train the neural network. In addition, five velocity-modulated trajectories with large variance are randomly generated, with the fixation point moving at a constant velocity in the X direction and at a velocity modulated to less than 6°/s in the Y direction, to test and verify the error compensation model.

Table 1 shows the error table of fixation data

collected by an experimenter based on the Tobii 4c


Figure 5: Horizontal line tracking with different constant X

velocities.

Figure 6: Vertical line tracking with different constant Y

velocities (note X and Y swapped in the ‘Traces’ column).

Figure 7: Tracking error with limited varying Y velocities.

Table 1: Multiple sets of gaze point dataset error (a. Errors

in degrees as unit; b. Errors in pixels. In the figures,

Trace_calibration data are used for calibration, Trace_1~5

are used for testing the recalibration model later, as shown

in Table 2, and X_mae and Y_mae are the mean absolute

errors in the horizontal and vertical directions,

respectively).

Error (degrees)

| Test (experiment number) | X_mae | Y_mae | mae |
|---|---|---|---|
| Trace_calibration | 0.81 | 0.70 | 1.07 |
| Trace_1 | 0.69 | 0.81 | 1.06 |
| Trace_2 | 0.84 | 0.74 | 1.12 |
| Trace_3 | 0.80 | 0.80 | 1.13 |
| Trace_4 | 0.72 | 0.61 | 0.94 |
| Trace_5 | 0.73 | 0.61 | 1.13 |

(a)

Error (pixels)

| Test (experiment number) | X_mae | Y_mae | mae |
|---|---|---|---|
| Trace_calibration | 59 | 51 | 78 |
| Trace_1 | 50 | 59 | 77 |
| Trace_2 | 61 | 54 | 81 |
| Trace_3 | 58 | 59 | 83 |
| Trace_4 | 53 | 45 | 70 |
| Trace_5 | 54 | 45 | 70 |

(b)

fixation smoothing calibration trajectory and five

randomly generated test trajectories (before the

recalibration model is applied), including the average

absolute error and the maximum absolute error in the

X direction and the average absolute error and the

maximum absolute error in the Y direction. In the

process of eye movement data collection, the

measurement process of eye movement is

independent and parallel with the movement of the

target fixation point without interfering with each

other, and the two data streams are aligned afterwards according to their respective timestamps. The advantage of independent, parallel display and sampling is that it preserves the real-time performance of the measurement data as far as possible and prevents inaccurate measurement caused by timing fluctuations.

The collected data include the position and time stamp of the target point on the screen (x_target, y_target, unit: pix; timestamp, unit: us) and the Tobii 4c measurement data with their time stamp (timestamp, unit: us). The Tobii 4c measurement data comprise the measured gaze point on the screen with its validity flag (x_meas, y_meas, unit: pix; validity, 0 or 1), the spatial location of the user’s left eye relative to the center of the screen with its validity flag (x_lefteye, y_lefteye, z_lefteye, unit: mm; valid, 0 or 1), and the spatial location of the user’s right eye relative to the center of the screen with its validity flag (x_righteye, y_righteye, z_righteye, unit: mm; validity, 0 or 1). Before training the BP neural network model, it is necessary to filter out the samples whose time difference between the target and gaze time stamps is greater than the predefined threshold (set to 5 ms) or whose validity = 0. A validity of 0 indicates that the sample from the eye tracking equipment is invalid, which occurs if the eyes are closed at the sampling moment or the user is outside the working space of the eye tracking equipment.
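The filtering step described above can be sketched as follows. The feature and target names follow the paper; everything else is an assumption made for illustration: the records are held as a dictionary of equally long NumPy arrays, the timestamp columns are called `t_target_us` and `t_gaze_us`, the three validity flags are called `validity`, `valid_left` and `valid_right`, and a sample is dropped when any of the three flags is 0.

```python
import numpy as np

FEATURES = ["x_meas", "y_meas",
            "x_lefteye", "y_lefteye", "z_lefteye",
            "x_righteye", "y_righteye", "z_righteye"]
TARGETS = ["x_target", "y_target"]


def build_training_arrays(rec, max_dt_us=5000):
    """Drop invalid or badly synchronized samples and stack inputs and outputs."""
    dt = np.abs(np.asarray(rec["t_gaze_us"], dtype=np.int64)
                - np.asarray(rec["t_target_us"], dtype=np.int64))
    keep = dt <= max_dt_us  # 5 ms threshold from the text, in microseconds
    for flag in ("validity", "valid_left", "valid_right"):  # hypothetical names
        keep &= np.asarray(rec[flag]) == 1

    X = np.column_stack([np.asarray(rec[name], dtype=float)[keep] for name in FEATURES])
    Y = np.column_stack([np.asarray(rec[name], dtype=float)[keep] for name in TARGETS])
    return X, Y
```

The returned `X` has eight columns and `Y` has two, matching the inputs and outputs of the recalibration model.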

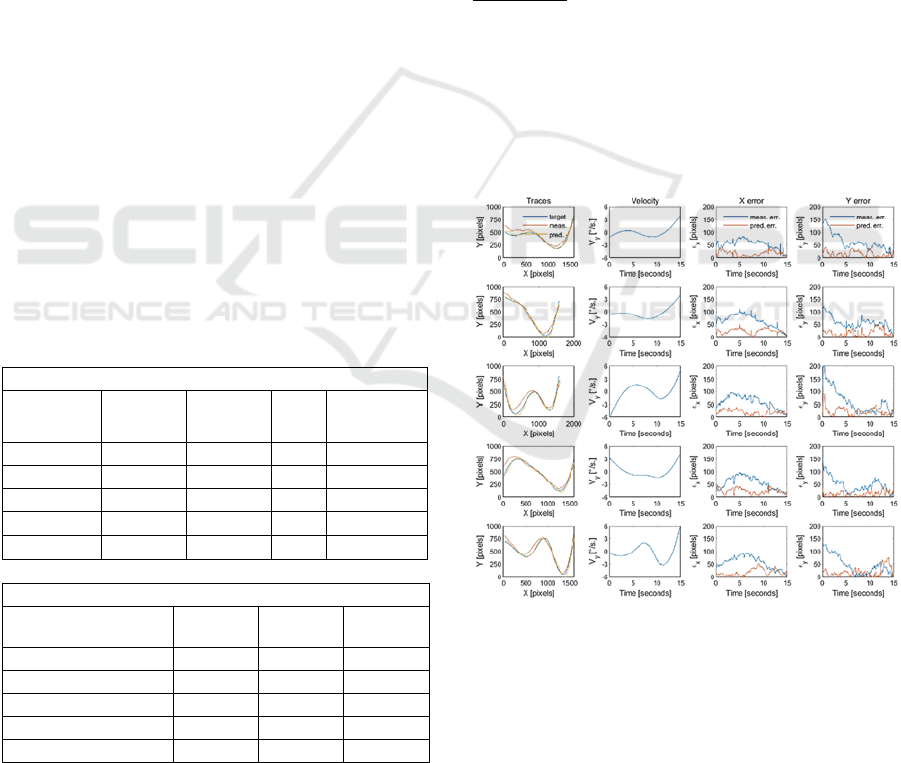

Table 2: Corrected fixation data against Table 1 (a. Errors

in degrees as units; b. Errors in pixels. Trace_1~5 data are

used again to test the recalibration model trained by

Trace_calibration data, as shown in Table 1. X_mae and

Y_mae are the mean absolute errors after correction in the

horizontal and vertical directions, respectively).

Error (degrees)

| Test (experiment number) | X_mae | Y_mae | mae | R2_score |
|---|---|---|---|---|
| Trace_1 | 0.22 | 0.21 | 0.30 | 0.989 |
| Trace_2 | 0.30 | 0.22 | 0.37 | 0.995 |
| Trace_3 | 0.25 | 0.29 | 0.38 | 0.987 |
| Trace_4 | 0.30 | 0.17 | 0.34 | 0.996 |
| Trace_5 | 0.23 | 0.26 | 0.35 | 0.990 |

(a)

Error (pixels)

| Test (experiment number) | X_mae | Y_mae | mae |
|---|---|---|---|
| Trace_1 | 16 | 16 | 23 |
| Trace_2 | 22 | 16 | 27 |
| Trace_3 | 19 | 21 | 28 |
| Trace_4 | 22 | 13 | 26 |
| Trace_5 | 17 | 19 | 25 |

(b)

A mapping model is built with the machine learning library Sklearn using a BP neural network structure. The calibration dataset collected by one experimenter in one group of experiments is used for training. After determining an appropriate number of hidden layers and nodes, we select ReLU (f(x) = max(0, x)) as the activation function and Adam as the optimization method, with x_meas, y_meas, x_lefteye, y_lefteye, z_lefteye, x_righteye, y_righteye, z_righteye as the input features and x_target, y_target as the outputs.

We train the BP neural network on Trace_calibration (the calibration dataset) and test the resulting model on the Trace_1 to Trace_5 fixation datasets. The results are shown in Table 2.
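A model of this kind can be assembled with scikit-learn's `MLPRegressor`, as in the sketch below. Only the ReLU activation, the Adam solver, the eight inputs and the two outputs come from the text. The hidden layer sizes, the iteration limit, the random seed and the standardization of inputs and outputs are assumptions added here for a reasonable default and may differ from the configuration used in the experiments.

```python
import numpy as np
from sklearn.compose import TransformedTargetRegressor
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def make_recalibration_model(hidden_layer_sizes=(32, 32), seed=0):
    """MLP mapping 8 gaze/eye-position features to (x_target, y_target)."""
    net = MLPRegressor(
        hidden_layer_sizes=hidden_layer_sizes,  # assumed, not given in the paper
        activation="relu",
        solver="adam",
        max_iter=2000,                          # assumed
        random_state=seed,
    )
    # Standardizing inputs and outputs is an added assumption that usually
    # helps gradient-based training when features mix pixels and millimetres.
    return TransformedTargetRegressor(
        regressor=make_pipeline(StandardScaler(), net),
        transformer=StandardScaler(),
    )


def train_and_correct(X_calib, Y_calib, X_test):
    """Fit on the calibration trace and return corrected gaze points for a test trace."""
    model = make_recalibration_model()
    model.fit(X_calib, Y_calib)
    return model.predict(X_test)
```

Here `X_calib` and `Y_calib` would hold the filtered Trace_calibration samples, and `X_test` the features of one of the Trace_1 to Trace_5 datasets.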

In Table 2, R2_score (Raju, Bilgic, Edwards, & Fleer, 1997; Yin & Fan, 2001) is a model accuracy evaluation index defined as

$$R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2},$$

where $i$ is the time point index in a single test trace, $y_i$ is the target gaze point, $\hat{y}_i$ is the model-corrected prediction from the gaze measurement, and $\bar{y}$ is the temporal average of $y$. Figure 8 shows the

corresponding visualization of these model-based

recalibration results (with error in pixels) tested by

the random curve trajectory fixation datasets

(Trace_1 to Trace_5).
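The evaluation metrics reported in Tables 1 and 2 can be computed along the following lines. This is a sketch under assumptions: the R2 score is evaluated per axis from the standard definition, and the combined error is taken as the mean Euclidean distance between target and prediction, in line with the error definition in the caption of Figure 1. How the paper combines the two axes into a single `mae` or R2_score value is not stated, and the function names are hypothetical.

```python
import numpy as np


def r2_score_axis(y_true, y_pred):
    """Coefficient of determination for one axis of a single trace."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def mean_absolute_errors(target_xy, pred_xy):
    """Return (X_mae, Y_mae, mean Euclidean error) for arrays of shape (n, 2)."""
    diff = np.asarray(pred_xy, dtype=float) - np.asarray(target_xy, dtype=float)
    x_mae, y_mae = np.mean(np.abs(diff), axis=0)
    euclid = np.mean(np.hypot(diff[:, 0], diff[:, 1]))
    return float(x_mae), float(y_mae), float(euclid)
```

A perfect prediction gives an R2 score of 1, while predicting the temporal mean of the target for every sample gives 0.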

Figure 8: Spatially randomly generated curves tracking

with temporally limited speed for model verification.

4 DISCUSSION

In this study, a smooth pursuit calibration method based on velocity modulation was proposed, and the correction effect of the BP neural network model was verified on eye movement data from randomly generated trajectories that satisfy the speed regulation.

Before recalibration, the mean absolute error of the fixation point was approximately 1.0°. Over repeated measurements of the smooth calibration trajectory, the fixation point trajectories measured by the eye tracking equipment showed small variance, which can also be seen in Figures 5 and 6. This mainly reflects the high repeatability of the eye tracking equipment and indicates that the residual error follows a statistical regularity with high confidence. The error can therefore potentially be reduced effectively through an appropriate recalibration model.

Meanwhile, we found that there is a correlation

between the error curve of the fixation point and the

velocity curve in the varying velocity experiment. We

carried out seven groups of uniform motion tracking

experiments of horizontal and vertical lines, with

speeds set as 2, 4, 6, 8, 10, 12, and 14°/s, respectively,

and found that when the velocity of the fixation point

exceeds 6°/s, the error gradually diverges, and the

error curve becomes much more uncertain. Therefore,

in the formal experiment of smooth pursuit for

calibration, the speed of the target gaze point should

be kept smaller than 6°/s.

In our calibration experiment, we assumed that the BP neural network can predict gaze data points that were not collected by learning from the calibration curve data, thereby effectively reducing the residual error. This assumption was verified: with appropriate training parameters (nonlinearity), the randomly generated test curves showed an obvious decrease in error when the learnt model was applied. In addition, once the number of layers and nodes of the BP neural network is chosen, the regression model is established quickly and compensates the error well. The mean absolute error of the smooth pursuit calibration based on visual guidance is lowered from approximately 1.0° to approximately 0.4°, and training finishes within 10 s.

In addition, experimenters may feel dryness and discomfort in the eyes during the calibration procedure if they deliberately avoid blinking, which is a manifestation of extraocular muscle fatigue (Sun, 2003). This causes the fixation data to change greatly within a short period of time. In the original error curves in Figure 5 and Figure 6, some erroneous jittering points can be observed, which correspond to the blinking described above. Occasional error jitter does not affect the calibration effect of the model. During calibration, it is enough to relax and focus; it is not necessary to deliberately keep the eyes open, because extraocular muscles, like jaw muscles, are not fatigable in normal use (Fuchs & Binder, 1983).

5 CONCLUSION AND FUTURE

WORK

In previous research on smooth pursuit calibration, users generally have to gaze at a moving target during normal use of the eye tracking equipment, and the system recalibrates against the target location at run time. Although this approach eliminates the calibration step before formal use of the eye tracking equipment, it does not work well when no moving target is present during use. In our study, we found through experiments a correlation between speed and tracking error, and we proposed a smooth pursuit calibration with speed-modulated visual guidance to collect appropriate gaze data. We also identified an appropriate method to establish the data calibration model. The sampling and training of the recalibration process are fast, completing within 45 s, and the resulting gaze point has a significantly smaller error than that of the official calibration. During our experiments, we also found that displaying the smooth calibration curve in the calibration window helps users focus more naturally and avoid visual fatigue.
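The speed regulation summarised above can be sketched as follows, assuming the guidance path is already expressed in degrees of visual angle and that a constant target speed is acceptable. The 6°/s limit is the threshold reported in this paper; the function names, the default speed of 5°/s and the sine-shaped path are hypothetical choices for illustration, not the authors' trajectory design.

```python
import math

SPEED_LIMIT_DEG_S = 6.0  # velocity above which the paper reports degraded accuracy

def schedule_path(waypoints, speed_deg_s=5.0):
    """Give each waypoint a timestamp so the target moves at a constant angular speed."""
    if not 0.0 < speed_deg_s <= SPEED_LIMIT_DEG_S:
        raise ValueError("target speed must be positive and within the limit")
    times = [0.0]
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        times.append(times[-1] + math.hypot(x1 - x0, y1 - y0) / speed_deg_s)
    return times

def peak_speed(waypoints, times):
    """Largest segment speed (deg/s) of a timed path; zero-length segments are skipped."""
    speeds = [math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
              for ((x0, y0), (x1, y1)), (t0, t1)
              in zip(zip(waypoints, waypoints[1:]), zip(times, times[1:]))
              if t1 > t0]
    return max(speeds, default=0.0)

# Hypothetical guidance path: a sine wave sampled every 0.5 deg horizontally.
path = [(x / 2.0, 5.0 * math.sin(x / 8.0)) for x in range(-30, 31)]
times = schedule_path(path)
print(f"duration: {times[-1]:.1f} s, peak speed: {peak_speed(path, times):.2f} deg/s")
```

Timing the path by arc length, rather than sampling a parametric curve uniformly in its parameter, keeps the target speed constant even where the curve bends sharply.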

In future research, we will study how the past and future trajectory of the smooth calibration curve near the current target point influences model performance, and we will design a learning algorithm to replace the function of the smooth trajectory visual guidance so that the method suits more general and practical working scenarios.

ACKNOWLEDGMENTS

This work was supported in part by the National Natural Science Foundation of China (Grant No. 62003324); in part by the State Key Laboratory of Robotics and System of China under the Open Fund Program (Grant No. SKLRS-2021-KF-06); and in part by the Hebei Provincial Department of Science and Technology under the Central Government Guides Local Scientific and Technological Development Fund Program (Grant No. 206Z0301G).

ROBOVIS 2022 - Workshop on Robotics, Computer Vision and Intelligent Systems


REFERENCES

Abdrabou, Y., Mostafa, M., Khamis, M., & Elmougy, A.

(2019, June). Calibration-free text entry using smooth

pursuit eye movements. In Proceedings of the 11th

ACM Symposium on Eye Tracking Research &

Applications (pp. 1-5).

Adithya, B., Hanna, L., Kumar, P., & Chai, Y. J. T. (2018).

Calibration techniques and gaze accuracy estimation in

pupil labs eye tracker. TECHART: Journal of Arts and

Imaging Science, 5(1), 38-41.

Blignaut, P., Holmqvist, K., Nyström, M., & Dewhurst, R.

(2014). Improving the accuracy of video-based eye

tracking in real time through post-calibration

regression. In Current Trends in Eye Tracking

Research (pp. 77-100).

Drewes, H., Pfeuffer, K., & Alt, F. (2019, June). Time- and

space-efficient eye tracker calibration. In Proceedings

of the 11th ACM Symposium on Eye Tracking Research

& Applications (pp. 1-8).

Gomez, A. R., & Gellersen, H. (2018, June). Smooth-i:

smart re-calibration using smooth pursuit eye

movements. In Proceedings of the 2018 ACM

Symposium on Eye Tracking Research &

Applications (pp. 1-5).

Guestrin, E. D., & Eizenman, M. (2006). General theory of

remote gaze estimation using the pupil center and

corneal reflections. IEEE Transactions on Biomedical

Engineering, 53(6), 1124-1133.

Hansen, D. W., & Ji, Q. (2009). In the eye of the beholder:

A survey of models for eyes and gaze. IEEE

Transactions on Pattern Analysis and Machine

Intelligence, 32(3), 478-500.

Harezlak, K., Kasprowski, P., & Stasch, M. (2014). Towards

accurate eye tracker calibration–methods and

procedures. Procedia Computer Science, 35, 1073-1081.

Harrar, V., Le Trung, W., Malienko, A., & Khan, A. Z.

(2018). A nonvisual eye tracker calibration method for

video-based tracking. Journal of Vision, 18(9), 13-13.

Holmqvist, K., Nyström, M., Andersson, R., Dewhurst, R.,

Jarodzka, H., & Van de Weijer, J. (2011). Eye tracking:

A comprehensive guide to methods and measures. OUP

Oxford.

Huang, M. X., Kwok, T. C., Ngai, G., Chan, S. C., & Leong,

H. V. (2016, May). Building a personalized, auto-

calibrating eye tracker from user interactions.

In Proceedings of the 2016 CHI Conference on Human

Factors in Computing Systems (pp. 5169-5179).

Larrazabal, A. J., Cena, C. G., & Martínez, C. E. (2019).

Video-oculography eye tracking towards clinical

applications: A review. Computers in Biology and

Medicine, 108, 57-66.

Mahmud, S., Lin, X., & Kim, J. H. (2020, January).

Interface for Human Machine Interaction for assistant

devices: a review. In 2020 10th Annual Computing and

Communication Workshop and Conference

(CCWC) (pp. 0768-0773).

Zineb, T., Rachid, E., & Talbi, E. G. (2019, October). Thin-

plate spline RBF surrogate model for global

optimization algorithms. In 2019 1st International

Conference on Smart Systems and Data Science

(ICSSD) (pp. 1-6).

Pfeuffer, K., Vidal, M., Turner, J., Bulling, A., & Gellersen,

H. (2013). Pursuit calibration: Making gaze calibration

less tedious and more flexible. In Proceedings of the

26th annual ACM symposium on User interface

software and technology (pp. 261-270).

Raju, N. S., Bilgic, R., Edwards, J. E., & Fleer, P. F. (1997).

Methodology review: Estimation of population validity

and cross-validity, and the use of equal weights in

prediction. Applied Psychological Measurement, 21(4),

291-305.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986).

Learning representations by back-propagating

errors. Nature, 323(6088), 533-536.

Valtakari, N. V., Hooge, I. T., Viktorsson, C., Nyström, P.,

Falck-Ytter, T., & Hessels, R. S. (2021). Eye tracking in

human interaction: Possibilities and limitations. Behavior

Research Methods, 53(4), 1592-1608.

Yin, P., & Fan, X. (2001). Estimating R² shrinkage in

multiple regression: A comparison of different

analytical methods. The Journal of Experimental

Education, 69(2), 203-224.

Zhang, Y., & Hornof, A. J. (2014, March). Easy post-hoc

spatial recalibration of eye tracking data.

In Proceedings of the symposium on eye tracking

research and applications (pp. 95-98).

Zhu, Z., & Ji, Q. (2005, June). Eye gaze tracking under

natural head movements. In 2005 IEEE Computer

Society Conference on Computer Vision and Pattern

Recognition (CVPR'05) (Vol. 1, pp. 918-923).

Sun, H. B. (2003). Why do we blink? Journal of Biology

Teaching (in Chinese), 2003(12), 52-52.

Fuchs, A. F., & Binder, M. D. (1983). Fatigue resistance of

human extraocular muscles. Journal of Neurophysiology,

49(1), 28-34.
