Mental and Physical Training for Elderly Population using Service Robots

Christopher Ruff (1, a), Isaac Henderson (2, b), Tibor Vetter (3) and Andrea Horch (1, c)

(1) Fraunhofer Institute for Industrial Engineering IAO, Nobelstrasse 12, 70569, Germany

(2) Technology Management (IAT), University of Stuttgart, Allmandring 35, 70569, Germany

(3) Wohlfahrtswerk für Baden-Württemberg, Germany

Tibor.Vetter@wohlfahrtswerk.de

Keywords: Elderly Care, Robotics, Social Robot, Mental Exercise, Physical Exercise, Health-Care, Social Human-Robot

Interaction, Companion Robots, Personalization, Biography Work, Artificial Intelligence, Robot Vision.

Abstract: In this paper we present the implementation and evaluation of mental and physical exercise applications on a

humanoid service robot for use in an elderly care setting. For the mental exercise application, a personalized

multimedia quiz was designed and implemented using information from the participants' biographies. The robot

acts as the quiz master, interacting with the participants in a natural and encouraging way. For the physical

exercise, a variant of the “charade” game was implemented that uses machine learning from previously

collected video samples and computer vision on the robot to identify the activities that participants enact.

Both applications were evaluated successfully in a real life setting and highlight the potential of using service

robots in elderly care settings.

1 INTRODUCTION

Demographic change across most western nations

and the accompanying increase of the elderly

population will have tremendous socio-economic

effects in the coming years. Current German surveys

show that 22% of the German population are older

than 65 years and 50% of the population are older

than 45 (Destatis, 2021). Changes associated with

aging will inevitably lead to an increase of people

requiring nursing care. Providing adequate care for

those populations is especially challenging due to a

chronic shortage of caretakers and staff in elderly care

(Destatis, 2020).

Physical and mental exercise, preferably in social

groups, are deemed important activities to maintain

health and wellbeing while also providing social

exchange, which is often lacking in aging

populations. These activities can increase self-

reliance and reduce anxiety and the need for health-

and nursing care (Elias et al., 2015).

(a) https://orcid.org/0000-0003-0484-4131

(b) https://orcid.org/0000-0001-9397-1321

(c) https://orcid.org/0000-0001-9384-316X

Healthcare technology, and particularly

autonomous service robots, have the potential to

address these challenges by reducing the need for

human resources.

In this paper, we present two applications,

implemented on a social robot in order to perform

mental and physical exercise together with elderly

people. Furthermore, we evaluated those applications

in terms of acceptance, user experience and utility.

2 RELATED WORK

There are several related projects dealing with

interaction strategies and designs of companion robots

and social robotics in the field of elderly care or

common care.

The objective of the EU project ACCRA (Agile

Co-Creation of Robots for Ageing) (Fiorini et al.,

2019) is the creation of advanced robotics-based

solutions in order to extend active and healthy ageing.

Therefore, the project developed an agile co-creation

Ruff, C., Henderson, I., Vetter, T. and Horch, A.

Mental and Physical Training for Elderly Population using Service Robots.

DOI: 10.5220/0011528200003332

In Proceedings of the 14th International Joint Conference on Computational Intelligence (IJCCI 2022), pages 435-444

ISBN: 978-989-758-611-8; ISSN: 2184-3236

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)


development process and defined a four-step

methodology. The applications developed by the

projects were implemented on two types of robots: (1)

Astro, a large assistive robot platform, and (2) Buddy

(Guiot et al., 2020) a small-sized companion robot.

The project HAPPIER (Healthy

Ageing Program with Personalized Interactive

Empathetic Robots) (Wieching, 2019) aims to support healthy and

active aging in the fields of (1) communication and

participation, (2) health and prevention and (3)

security and data protection. In order to reach its goals,

the project uses different assistance robots for various

purposes. The robots are connected with the Internet

of Things (IoT) by a secure cloud-based system.

The Care-O-bot (Graf et al., 2009) (care-o-bot.de)

is a robot platform designed to support humans

in their home environments in an active and social

way. The platform implements different interaction

strategies for human robot interaction in order to be a

supportive service robot. The interaction strategies

include social manners for different types of humans,

such as elderly or younger people.

In contrast to the projects mentioned above, the

goals of the NIKA project are (1) the creation of

interaction patterns for human robot interaction as

well as (2) the development of a middleware to

provide and use the patterns on different robot

platforms. The patterns are used in two different types

of applications on three different kinds of robots

(iRobot Roomba, MiRo, Pepper). In this paper, we

will introduce the designed applications to show how

they can be used to support elderly people with mental

and physical exercise.

3 PROJECT “NIKA”

The work presented in this paper was developed as

part of the Project “NIKA”, funded by the Federal

Ministry of Education and Research. NIKA explores

the use of social service robots in the context of elderly

care. This setting can be especially challenging, as

many elderly people rarely come in contact with new

technologies (like robots) and are not what is

commonly referred to as “digital natives”.

Additionally, elderly people often suffer from

physical impairments, such as hearing or visual

impairments. Designing the interactions and

applications in an accessible, non-threatening and

enjoyable way is therefore of utmost importance.

Thus, we chose an iterative design and

implementation process with several revisions of our

system and applications to address these challenges.

Using real life feedback from the target audience, we

were able to better adapt the system to the needs and

requirements of the elderly people. The evaluation

was carried out in a partnered elderly care institution

with day-care and inpatient clients.

4 USE-CASES

In collaboration with a partnered care-giving

institution, we conducted workshops to identify two

distinct use-cases and activities based on the

following key factors and criteria:

1. The application must provide benefit to the

clients of care institutions

2. The application must support care-givers by

taking over specific, time consuming tasks

3. The application must be acceptable for staff

and clients to work with

4. The application must hold up to ethical

standards

Out of several potential applications of service

robots in elderly care, applications for mental and

physical exercise were identified as the most

important, advantageous, and well suited for the use

of social companion robots.

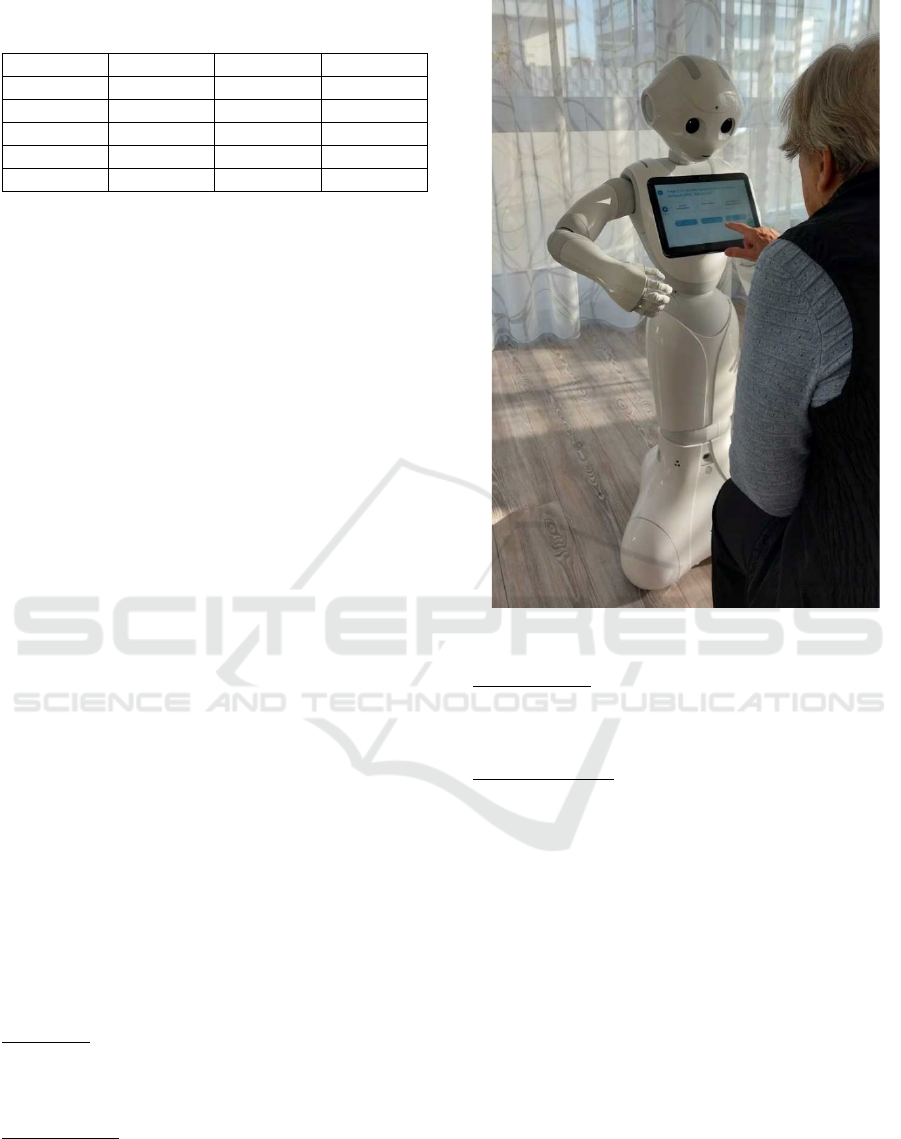

4.1 The Robot Platform

Pepper is a social humanoid robot designed by Aldebaran Robotics and released in 2015 by SoftBank Robotics (https://www.softbankrobotics.com/) (SoftBank acquired Aldebaran Robotics in 2015). It is described as the world's first social humanoid robot able to recognize faces and basic human emotions. Pepper was optimized for human interaction and is able to engage with people through conversation and its touch screen. In addition to recognizing human emotions, Pepper is able to perform a wide variety of actions with its head, arms, and body.

Figure 1: The robot "Pepper".

ROBOVIS 2022 - Workshop on Robotics, Computer Vision and Intelligent Systems


The robot is controlled by NAOqi, a dedicated, Linux-based operating system. It contains several modules that comprise the library, enabling the developer to control the robot's resources. The NAOqi system of the Pepper robot is generally identical to the NAO robot's NAOqi, so switching from working with NAO to working with Pepper is straightforward. Pepper seems to be one of the best options for implementing and researching human-machine interfaces (HMI), due to the sensors, technologies and functionalities included in its design and pre-programmed in the form of an API (application programming interface). A significant advantage of this design is its full programmability, with access to sensors (cameras, infrared) as well as speech synthesis and recognition.

5 MENTAL EXERCISE APPLICATION

Aging is associated with changes in cognitive

function and decline in performance on cognitive

tasks, including processing of information, decision-

making, working memory and executive cognitive

function. Exercise of mental and cognitive abilities

may decrease the rate of cognitive decline seen with

aging (Murman, 2015) and is therefore regularly

offered as an activity in elderly care facilities. In order

to explore the possibility and evaluate the usefulness

of using a service robot to conduct those exercises,

we designed and implemented a mental exercise

application.

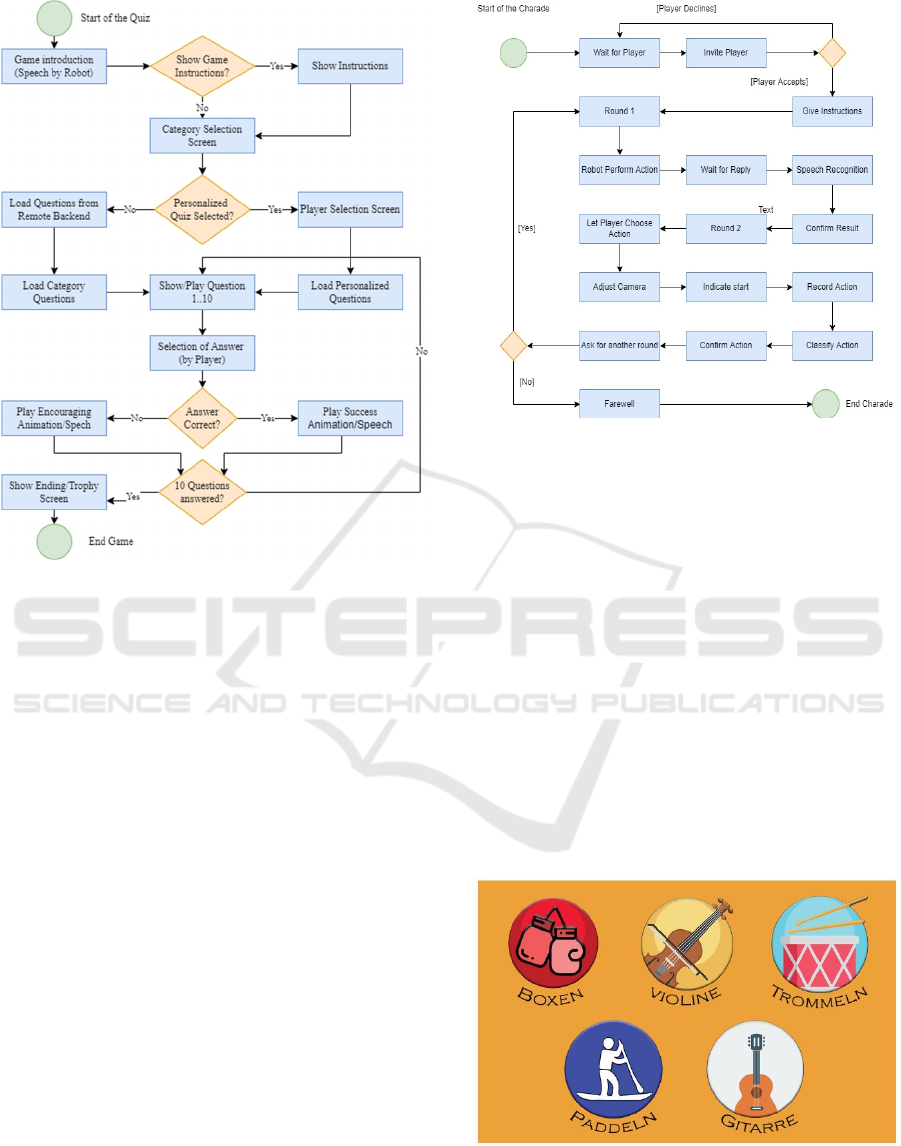

Concept. The mental exercise application was

designed as a multi-media quiz application (Figure 2).

The quiz format was used, as the concept and process

is familiar to most people. In order to facilitate

evaluation and avoid exhaustion or stress for the

participants, the quiz was designed to have rounds of

ten questions without any time limits. Questions are

grouped into several themed categories and three

difficulty settings.

The quiz supports different question formats, such as:

• Text - simple text question

• Image - text question with an additional image

• Image questions - three images to choose from

• Music/sound - a sound media file is played with a textual question on the screen

The different question formats enhance exercise by

activating different brain regions. It also creates a

more interesting game experience for the users.
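The quiz structure described above (themed categories, three difficulty settings, four question formats, rounds of ten questions) can be illustrated with a small data model. This is only a sketch under assumptions: the class names, field names and the `build_round` helper are hypothetical and are not taken from the NIKA implementation.

```python
import random
from dataclasses import dataclass
from enum import Enum


class QuestionFormat(Enum):
    TEXT = "text"                  # simple text question
    IMAGE = "image"                # text question with an additional image
    IMAGE_CHOICE = "image_choice"  # three images to choose from
    SOUND = "sound"                # sound file played with a textual question


@dataclass(frozen=True)
class Question:
    prompt: str
    answers: tuple          # answer options shown on the tablet
    correct_index: int      # index into `answers`
    category: str           # e.g. "music" or "history"
    difficulty: int         # 1 (easy) to 3 (hard)
    fmt: QuestionFormat
    media_file: str = ""    # hypothetical path to an image or sound file


ROUND_LENGTH = 10  # the paper describes rounds of ten questions


def build_round(pool, category, difficulty, rng=None):
    """Pick up to ROUND_LENGTH questions matching category and difficulty."""
    rng = rng or random.Random()
    candidates = [q for q in pool
                  if q.category == category and q.difficulty == difficulty]
    rng.shuffle(candidates)
    return candidates[:ROUND_LENGTH]
```

Because no time limit is part of the concept, the sketch deliberately carries no timer field; a round simply ends after its last question.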

Figure 2: Quiz category selection screen, with categories: “general”, “music”, “sport”, “nature&animals”, “engineering”, “history”, “literature”, “Film&TV”.

Biography Work and Personalization. Biography work is a concept, often used in elderly care, to improve cognition and promote social participation (Corsten and Lauer, 2020; Specht-Tomann, 2017).

Biography work shows potential for the prevention

of mental pathologies, especially in older,

institutionalized adults (Elias et al., 2015).

To further promote mental wellbeing and increase

participation we integrated this concept into the quiz

application. Ten interviews were conducted with

participants of our evaluation study to gather their

biographic information and interests. Based on this

information, lists of questions (text, image and music)

were compiled, specific to each user (Figure 3).

Figure 3: Quiz question screen – Question: “What's the name of this musical instrument?” (as shown in the picture).

Game Flow. When the application is started, the user first selects his or her name. A selection of quiz categories and the corresponding difficulty settings is then displayed on the tablet screen mounted on the robot. After selection, the quiz content is downloaded from a server and the quiz starts. The flow chart in Figure 4 shows how the game flow was designed.


Figure 4: Mental exercise game flow.

6 PHYSICAL EXERCISE APPLICATION

Concept. The gameplay for this application is based

on games like Activity, charades or pantomime. They

are usually played by one or more teams, where each

team consists of at least two people. At the beginning

of each turn, a countdown starts (e.g. by a timer or

hourglass). One player of the team whose turn it is

draws cards from a deck. Each of those cards has a

term written on it. The player then has to mime the term

so that the other team members can recognize it and

guess the correct term. If the guess is correct, the team

gains points. This is repeated until the time runs out.

In the end, the team with the most points wins the

game.

In the current prototype, the Pepper robot takes over the part of the opposing team: it performs actions for the human player to guess and recognizes actions that the human player mimes. These two parts are referred to as Round1 and Round2. The score mechanics and the countdown have been dropped. Below, these simplified game rules are described from the viewpoint of Pepper. A diagram of the simplified game is shown in Figure 5.

Figure 5: Charade game flow.

Explanation of Design Flow. When the application is started, Pepper uses its face detection capabilities and waits until a person walks into its field of view (FOV). If a person is detected, he or she is verbally invited by the robot to play a game of charade. If the potential player refuses the invitation, Pepper goes back into the waiting state until another face is detected. In Round1, the robot performs one of the following actions: Violin, Drums, Paddling, Piano, Telephone, Tennis, Weightlifting, and Guitar, which are stored as so-called “behaviors” in the Pepper operating system. After performing the action, Pepper gives the human player the opportunity to reply via voice command. The backend program uses speech recognition to check the human player's reply against the correct answer. In Round2, the robot first gives a short instruction on how the round is played. Afterwards, the list of possible activities to mime is displayed on the robot's tablet, with an illustration of each action (see Figure 6).

Figure 6: Charade Game activity selection, featuring activities: “boxing”, “playing the violin”, “drumming”, “rowing” and “guitar”.

Figure 7: Performing the "boxing" activity.

Figure 8: Participant performing "violin" activity.

This approach was chosen in order to have a uniform

system that does not require external components,

such as game cards. As a next step, the robot adjusts

its camera, so that the human player’s upper body is

located in the center of the captured image data. This

step is essential, otherwise the body parts important

for the human action recognition (HAR) (i.e. the arms

and the upper body) might not be visible, making

recognition much less accurate. Furthermore, in

combination with face detection, it ensures that the

user is visible from a frontal perspective. After

adjusting the camera, Pepper signals the player to

start performing the previously selected

activity by counting down from 3.

Subsequently, the user is recorded by the robot’s

camera and the data is processed as described in the

subsection below. Using the extracted features, the

classification is conducted. Pepper then vocalizes its

guess and asks for confirmation. If the probability,

returned by the HAR system, is too low, the robot

notes that it is unsure about the correctness of its

guess. Depending on the correctness of the answer by

the human player, Pepper gives a positive or negative

response. Regardless of whether the action has been

classified correctly or not, the player is asked if he or

she wants to play another round. If so, the application

goes back to the point where the user has to choose

an action. Otherwise, Pepper says its farewell and the

application stops.
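The design flow described above can be read as a small state machine plus a confidence check on the recognizer's output. The following is a minimal sketch under assumptions: the state names, event strings, spoken phrases and the threshold value of 0.5 are all hypothetical, since the paper does not state them.

```python
from enum import Enum, auto


class State(Enum):
    WAIT_FOR_FACE = auto()  # robot waits until a face enters its field of view
    INVITE = auto()         # robot verbally invites the person to play
    ROBOT_MIMES = auto()    # Round1: robot performs, human guesses by voice
    HUMAN_MIMES = auto()    # Round2: human performs, robot guesses
    ASK_REPLAY = auto()     # robot asks whether to play another round
    FAREWELL = auto()       # robot says goodbye and the application stops


# (current state, event) -> next state; event names are illustrative only
TRANSITIONS = {
    (State.WAIT_FOR_FACE, "face_detected"): State.INVITE,
    (State.INVITE, "declined"): State.WAIT_FOR_FACE,
    (State.INVITE, "accepted"): State.ROBOT_MIMES,
    (State.ROBOT_MIMES, "answer_received"): State.HUMAN_MIMES,
    (State.HUMAN_MIMES, "guess_confirmed"): State.ASK_REPLAY,
    (State.ASK_REPLAY, "yes"): State.HUMAN_MIMES,  # back to choosing an action
    (State.ASK_REPLAY, "no"): State.FAREWELL,
}


def next_state(state, event):
    """Return the follow-up state; unknown events keep the current state."""
    return TRANSITIONS.get((state, event), state)


CONFIDENCE_THRESHOLD = 0.5  # assumed value, not given in the paper


def phrase_guess(label, probability, threshold=CONFIDENCE_THRESHOLD):
    """Word the robot's guess, flagging uncertainty for low probabilities."""
    if probability < threshold:
        return f"I am not sure, but was that {label}?"
    return f"I think that was {label}. Am I right?"
```

Keeping the transitions in a table makes it easy to compare the code against a flow chart such as the one in the charade game flow figure.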

The Human Action Recognition System. A

fundamental challenge that arises in recognizing

human actions is variability. Human movements can

be influenced by multiple factors. Sheikh et al. identified

three important reasons that can result in large

variability (Sheikh et al., 2005): (1) viewpoint, (2)

execution rate and (3) anthropometry of actors. In our

application specifically, age can greatly affect the

way elderly people move. Compared to younger

people, the elderly move differently in terms of

velocity, flexibility and smoothness of motion. This

may especially have an effect on the variability of

execution rate as elderly people may perform an

action more slowly.

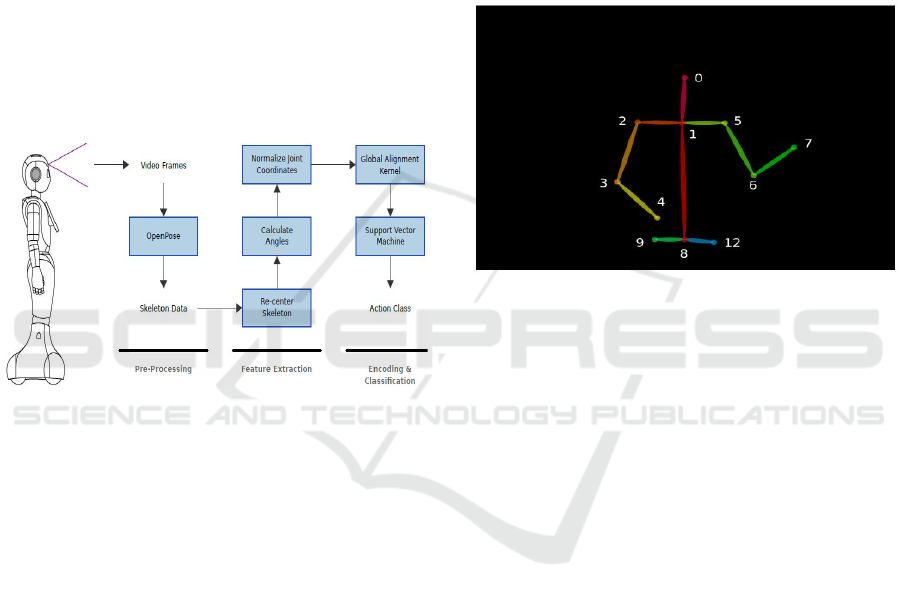

We implemented a HAR system (Ponzer, 2020),

which is used for action recognition during the

execution of the simplified charade game. The

recognition is bound by the following constraints that

result from the use-case:

Indoor Environment. In the course of the NIKA

project, Pepper is only used in indoor environments,

such as care facilities and assisted living homes.

Accordingly, recognition has to focus on indoor

situations.

One Person in FOV. During the course of

implementation it was assumed that only one person

is standing in front of the robot. Therefore, no


segmentation was necessary in order to extract the

image segment containing the player.

Frontal Perspective. The HAR system is designed

under the assumption that the player is looking at

Pepper and his or her body is positioned in front of

the robot, as argued by Fasola and Mataric (Fasola

and Mataric, 2013). The pipeline of the HAR system

introduced below is divided into three main parts.

Firstly, in the pre-processing step, the video frames

are passed on to OpenPose (Cao et al., 2019) in

order to extract human skeletons from the images.

Secondly, taking the skeletons as input, feature

extraction is conducted. Thirdly, the features are

encoded and classified by combining the global

alignment kernel (GAK) with a multi-class Support

Vector Machine (SVM). The pipeline is also shown

in Figure 9.

Figure 9: Player activity recognition pipeline.
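To make the third pipeline stage more concrete, the sketch below computes a global alignment kernel between two feature sequences with a dynamic program and builds a normalized Gram matrix from it. It is an illustration under assumptions, not the authors' code: the local kernel follows the commonly used Gaussian form g / (2 - g), the bandwidth `sigma` is arbitrary, and the function names are hypothetical. Such a Gram matrix could then be passed to an SVM that accepts precomputed kernels.

```python
import math


def local_kernel(a, b, sigma):
    """Similarity of two frames (feature vectors); 1.0 for identical frames."""
    d2 = sum((p - q) ** 2 for p, q in zip(a, b))
    g = math.exp(-d2 / (2.0 * sigma ** 2))
    return g / (2.0 - g)


def gak(x, y, sigma=1.0):
    """Global alignment kernel: sums the scores of all alignments of x and y."""
    n, m = len(x), len(y)
    table = [[0.0] * (m + 1) for _ in range(n + 1)]
    table[0][0] = 1.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            k = local_kernel(x[i - 1], y[j - 1], sigma)
            table[i][j] = k * (table[i - 1][j] + table[i][j - 1]
                               + table[i - 1][j - 1])
    return table[n][m]


def normalized_gak(x, y, sigma=1.0):
    """Scale the kernel so that a sequence compared with itself gives 1."""
    return gak(x, y, sigma) / math.sqrt(gak(x, x, sigma) * gak(y, y, sigma))


def gram_matrix(sequences, sigma=1.0):
    """Pairwise normalized kernel values for a list of sequences."""
    self_k = [gak(s, s, sigma) for s in sequences]
    return [[gak(x, y, sigma) / math.sqrt(self_k[i] * self_k[j])
             for j, y in enumerate(sequences)]
            for i, x in enumerate(sequences)]
```

Because the kernel sums over alignments, a slowly executed movement can still score well against a faster execution of the same movement, which matters for the execution-rate variability discussed earlier. For long sequences the raw kernel values grow quickly, so practical implementations usually work in log space.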

Pre-Processing. The pre-processing step described in

this subsection is applied in order to bring the raw

video frames into a format that can be used for further

processing. In 1973, Johansson (Johansson, 1973)

inspected the visual representation of human motion

patterns. His work, which is considered a milestone

in the research of skeleton analysis (Saggese et al.,

2019), concluded that human actions can be

adequately described by the appropriate selection of

10-12 joints. Therefore, joint locations or joint angles

offer a rich source of information for vision-based

HAR (Poppe, 2010).
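As an illustration of how joint locations can be turned into joint angles, the sketch below computes the angle at a joint from three 2D keypoints and assembles a small per-frame feature vector. The selection of four arm angles and the keypoint names used as dictionary keys are assumptions made for this example; the paper does not list the exact features used.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the 2D points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # degenerate case: two keypoints coincide
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos = max(-1.0, min(1.0, cos))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos))


def frame_features(kp):
    """kp maps joint names (assumed keys) to (x, y) image coordinates."""
    return [
        joint_angle(kp["RShoulder"], kp["RElbow"], kp["RWrist"]),   # right elbow
        joint_angle(kp["LShoulder"], kp["LElbow"], kp["LWrist"]),   # left elbow
        joint_angle(kp["Neck"], kp["RShoulder"], kp["RElbow"]),     # right shoulder
        joint_angle(kp["Neck"], kp["LShoulder"], kp["LElbow"]),     # left shoulder
    ]
```

Angles have the practical advantage of being independent of where the player stands in the image and of the player's body size.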

Training of the Human Action Recognition

System. The machine learning approach of the HAR

system involves two stages: training and testing

(Lara and Labrador, 2013). The following section

describes the process of training in more detail.

In the training phase of the HAR model, the sequences of the charade dataset for elderly people, which was recorded with participants during the project, are used as samples. The dataset consists of 300 samples for each of the five classes: boxing, drums, guitar, paddling and violin. Because extracting 2D skeleton data is time-consuming, it was completed beforehand using the OpenPose framework rather than repeated every time the training is executed. Before the execution of the training phase, the dataset was split up as proposed by Chicco (2017): 20% of the samples were withheld for the evaluation phase, while the remaining 80% were used for training the classifier. Accordingly,

the model is trained on 1200 samples and tested on

300 samples. In order to test under conditions that are similar to the planned use-case, the split between training and testing data was done at the level of the individual actors. Thus, each actor is either in the training set or in the test set.

Figure 10: OpenPose-derived skeleton.
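A split at the level of individual actors can be sketched as follows. The sample layout `(actor_id, label, sequence)`, the function name and the fixed random seed are assumptions made for this example; the number of actors in the actual dataset is not stated in the paper.

```python
import random


def split_by_actor(samples, test_fraction=0.2, seed=0):
    """Split samples so that no actor appears in both training and test set.

    samples: list of (actor_id, label, sequence) tuples.
    Returns (train, test).
    """
    actors = sorted({s[0] for s in samples})
    rng = random.Random(seed)
    rng.shuffle(actors)
    n_test = max(1, round(len(actors) * test_fraction))
    test_actors = set(actors[:n_test])
    train = [s for s in samples if s[0] not in test_actors]
    test = [s for s in samples if s[0] in test_actors]
    return train, test
```

With, for example, ten actors who each contribute the same number of samples, holding out two actors reproduces the 80/20 ratio. If actors contribute unequal numbers of samples, the sample ratio only approximates the actor ratio.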

For the purpose of classification, the recognition system, as shown in Figure 9, uses a multi-class SVM. To measure the similarity between time series, the SVM is combined with the GAK as the kernel. Although the improved version of the GAK, namely the Triangular Global Alignment Kernel (TGAK), can be computed faster, this comes at the cost of accuracy. As mentioned in the previous section, even with the large amount of data used for training as well as during the application's execution, the GAK can still be computed in a reasonable time frame. We therefore chose to use

the original kernel. The input for the classifier is

windowed to a length of 50, which means that the

concatenated features of 50 consecutive frames are

used to represent one sample.
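The windowing step can be sketched as below. The window length of 50 frames comes from the text; the step size between windows and the helper names are assumptions, since the paper does not say whether windows overlap.

```python
def sliding_windows(frames, window=50, step=50):
    """Cut a list of per-frame feature vectors into fixed-length windows.

    Trailing frames that do not fill a complete window are dropped.
    """
    return [frames[i:i + window]
            for i in range(0, len(frames) - window + 1, step)]


def concatenate(window_frames):
    """Flatten one window of feature vectors into a single sample vector."""
    return [value for frame in window_frames for value in frame]
```

A recording of 120 frames with four features per frame would, with the default step, yield two samples of 200 values each; a smaller step produces overlapping windows and therefore more samples from the same recording.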

Performance Results of our HAR Model using the

Charade Dataset. The performance results of the

proposed model are summarized in the following

table. It contains the corresponding evaluation scores

for each class, namely precision, recall and the F1-

score. The average accuracy over all classes on the

test set is 6 percentage points lower than on the training set, i.e. 84.4%

instead of 90.4%.


Table 1: Precision, Recall and F1-Score of the different activities.

| Activity | Precision | Recall | F1-Score |
|---|---|---|---|
| Boxing | 0.77 | 0.95 | 0.85 |
| Drums | 0.81 | 0.77 | 0.79 |
| Guitar | 0.90 | 0.70 | 0.79 |
| Paddling | 0.98 | 1.00 | 0.99 |
| Violin | 0.79 | 0.80 | 0.80 |
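For readers who want to check the table, the F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short sketch below recomputes it from the published precision and recall values; because those inputs are already rounded to two decimals, a recomputed value may differ from the table in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) per activity, copied from Table 1
TABLE_1 = {
    "Boxing": (0.77, 0.95),
    "Drums": (0.81, 0.77),
    "Guitar": (0.90, 0.70),
    "Paddling": (0.98, 1.00),
    "Violin": (0.79, 0.80),
}

for activity, (p, r) in TABLE_1.items():
    print(f"{activity}: F1 = {f1_score(p, r):.2f}")
```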

7 EVALUATION

The evaluation was considered one of the most

important phases of the NIKA project as it offered the

opportunity to gather real world data about use of

humanoid service robots in elderly care settings. It

was carried out in several iterations with focus on

different aspects. The main goal of the evaluation was

to examine the feasibility of the technology, e.g.: Is it

possible to run the applications smoothly on site

under real world conditions with current state of the

art robots? Furthermore, the interaction of the senior

citizens with the robots and the usability of the quiz

and charade applications were examined.

Since the Quiz and Charade applications were mostly focused on supporting the mental and physical activity of elderly people, most of the evaluation took place in a care home of the partnered elderly care organization “Wohlfahrtswerk für Baden-Württemberg”.

As the project was carried out during the global

COVID-19 pandemic, the originally planned evaluation

had to be adapted to the circumstances. For this

reason, the first evaluation phase was done during the

lockdown phases, with questionnaires sent to the

participants and without direct robot interaction. The

objective was to gather information about the needs

and opinions of the elderly people with regard to the

interaction with service robots and our specific use-

cases.

Evaluation Timeline: From January to December

2021, a total of four evaluations were conducted in a

care facility of the Wohlfahrtswerk organization.

April 2021: First iteration of the cognitive activation

"Quiz" (5 participants). The participants each played

two rounds of quizzes, in which they could choose

one category each.

August 2021: Second iteration of the cognitive

activation "Quiz" (15 participants). The participants

each played two rounds of quizzes, in which they

could choose one category each.

Figure 11: A study participant playing the quiz game.

October 2021: First iteration of physical activation

"Charades" (10 participants). Participants played one

round of charades (6 movements in total) and were

allowed to choose whether to play a second round.

November 2021: Third iteration of the cognitive

activation "Quiz" (10 participants). The participants

played two rounds of the quiz. In the first round, they

could choose a category. The second round was "My

personal quiz," whose content was created for each participant from the

pre-interviews. An observation

sheet was used to record social, physical, and

cognitive activity, as well as mood and well-being.

Likewise, any technical issues were recorded so they

could be ironed out for the next iteration.

Participants: In the run-up, the participants were recruited with the help of flyers and with the support of the social service management, and fixed appointments were assigned. The selection was based

on the following criteria:

• at least 65 years old

• home care environment

• interest in technology and basic understanding

• no major cognitive impairments

• ability to read and write (if necessary by means of aids)

Equal gender and age distribution was also taken into

account. In order to ensure comparability of the

results, the same persons were asked again for the

following iterations. Each participant was given the

same time window of 60 minutes.

Methods: The following methods were used in the

evaluation:

• Pre-interview (only for the third quiz evaluation in November '21)

• Questionnaire (pre-evaluation)

• Observation

• Questionnaire (post-evaluation)

Pre-Interview: A special feature of the quiz concept

is the possibility of personalization, i.e., tailoring

question content to one's own interests and

biographical events. In order to be able to evaluate a

very high degree of personalization, the participants

were interviewed in advance and a personal quiz was

generated from their answers.

Questionnaire (pre-evaluation): After welcoming

the participants, they were asked about their socio-

demographic data (age, gender, etc.), interest in

technology and awareness of robotics before using

the robot. For the charade use-case, questions about

physical activity were also included.

Observation: After the survey, the participants were

given a short introduction to the system (quiz or

charade) and could test the system independently.

Questionnaire (post-evaluation): After playing the

quiz or the charade game, the participants were asked about their

experience of use with the help of a questionnaire.

a) Questionnaire on the quiz: This consisted of a

part on the quiz (including the use of the Game

Experience Questionnaire in August and

November), on usability (System Usability

Scale) and questions on the use of the robot

assistant. The questionnaires of the three

iterations were similar except for the addition of

the GEQ from August and further questions on

the use of the assistant robot.

b) Questionnaire on the charade: This consisted

of a part on the charade (among other things,

survey of activity and fatigue after the charade,

Game Experience Questionnaire). Likewise, the

usability was surveyed by means of the System

Usability Scale. Finally, questions about

potential usage were also asked here.

The iterative approach enabled the project team to

incorporate user feedback directly into the system

after evaluation. Thus, technical problems could be

identified and solved and the quiz concept could be

adapted and improved. Even though only one iteration was possible for the charade, its feedback helped to develop the system further.

The questionnaires contained both quantitative

and qualitative items. The quantitative data were

analyzed and presented descriptively. The scales used

(GEQ and SUS) were evaluated according to the

specifications. The qualitative evaluation was carried out by means of a descriptive interview analysis, in which the participants' statements were paraphrased and assigned to selected categories. The data

was transcribed and analyzed using Mayring's content

analysis (Mayring, 2004). The results were discussed

with the partners promptly after the iteration in order

to be able to incorporate important findings into

further development and the next iteration.

8 RESULTS

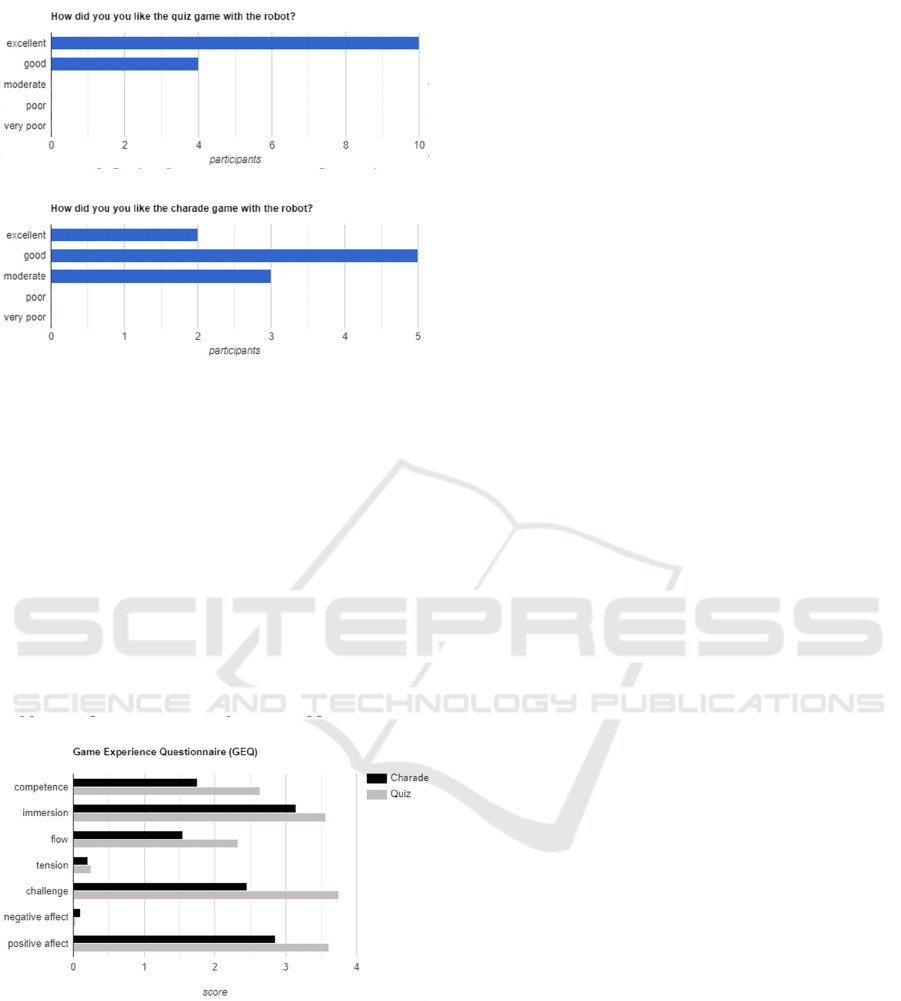

In summary, the system (Pepper with quiz/ Pepper

with charade) was very well received by the users.

The System Usability Scale (SUS) score was always in the range of "good to excellent" (80 to 100 points) for the quiz and in the range "good" for the charade. Overall, the quiz application reached higher scores in terms of enjoyment (Figures 12 and 13) for the majority of participants.
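For context on the 0 to 100 point range, the sketch below applies the standard SUS scoring rule to one participant's ten answers: odd-numbered items contribute the answer minus 1, even-numbered items contribute 5 minus the answer, and the sum is multiplied by 2.5. The function name is hypothetical, and the paper does not show how the scores were computed in practice.

```python
def sus_score(responses):
    """Compute the SUS score (0 to 100) from ten answers on a 1 to 5 scale.

    responses[0] is the answer to item 1, responses[9] to item 10.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item, answer in enumerate(responses, start=1):
        if not 1 <= answer <= 5:
            raise ValueError("answers must be between 1 and 5")
        # odd items are positively worded, even items negatively worded
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5
```

A participant who answers every item with the neutral value 3 obtains 50 points, so the reported range of 80 to 100 points corresponds to consistently favorable answers.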

These results need to be contextualized with the fact that the concept of the charade game was mostly unknown to the participants, contained more advanced game mechanics and technologies (computer vision, machine learning), was limited by the robot's functionalities and thus was more prone to errors (e.g. in the detection of the user actions and speech recognition). In contrast, most participants

were used to playing quiz games from real life

experience or mobile device apps and understood the

concept and their required actions much more

intuitively.

The results of the GEQ of both applications are

presented in Figure 14. Overall, the experience

evoked few negative feelings and little tension, but strong

feelings of immersion with a positive affect.


For the charade game, the users reported our pre-defined user activities as well suited, but would like to include more everyday activities (e.g. drinking water, eating, playing cards) that especially older participants can relate to. Regarding the activities that the robot performed, playing the violin and playing the guitar were regarded as hard to distinguish. In addition, the sluggish movements of the robot were sometimes criticized (mostly a limitation of the robotic platform used).

Figure 12: Overall quiz game evaluation.

Figure 13: Overall charade game evaluation.

Due to the small number of participants in our studies, the results cannot be considered statistically significant. Nonetheless, they yield interesting insights into the real-world use of service robots in elderly care for different use cases. More research is needed to validate these results using bigger sample sizes and longer-term application.

Figure 14: Game Experience Questionnaire Results.

ACKNOWLEDGEMENTS

The NIKA project was funded by the German Federal Ministry of Education and Research.

REFERENCES

Federal Statistical Office. DeStatis. Retrieved June 8, 2022,

from https://www.destatis.de/DE/Presse/

Pressemitteilungen/2021/09/PD21_N057_12411.html

Federal Statistical Office. DeStatis. Retrieved June 8, 2022,

from https://www.destatis.de/DE/Themen

/GesellschaftUmwelt/Gesundheit/Pflege/Tabellen/pers

onal-pflegeeinrichtungen.html

Fiorini, L., D’Onofrio, G., Limosani, R., Sancarlo, D.,

Greco, A., Giuliani, F., Kung, A., Dario, P., & Cavallo,

F. (2019). ACCRA Project: Agile Co-Creation for

Robots and Aging. Lecture Notes in Electrical

Engineering, 133–150.

Denis Guiot, Marie Kerekes, & Eloïse Sengès. (2020,

February 28). Living with Buddy: can a social robot

help elderly with loss of autonomy to age well?. IEEE

RO-MAN Internet Of Intelligent Robotic Things For

Healthy Living and Active Ageing, International

Conference on Robot & Human Interactive

Communication, 14 October 2019, New Delhi, India

Rainer Wieching, Projekt HAPPIER (Healthy Ageing

Program with Personalized Interactive Empathetic

Robots), (DAAD 2018-2019) Website:

https://www.wineme.uni-siegen.de/projekte/happier/

Graf, B., Reiser, U., Hagele, M., Mauz, K., & Klein, P.

(2009). Robotic home assistant Care-O-bot3 - product

vision and innovation platform. 2009 IEEE Workshop

on Advanced Robotics and Its Social Impacts.

https://doi.org/10.1109/arso.2009.5587059

Murman, D. (2015). The Impact of Age on Cognition.

Seminars in Hearing, 36(03), 111–121.

https://doi.org/10.1055/s-0035-1555115

Corsten, S., & Lauer, N. (2020). Biography work in long-term residential aged care with tablet support to improve the quality of life and communication – study protocol for app development and evaluation. International Journal of Health Professions, 7(1), 13–23. https://doi.org/10.2478/ijhp-2020-0002

Specht-Tomann, M. (2017). Biography work: in health

care, nursing and geriatric care. Springer-Verlag.

Elias, S. M. S., Neville, C., & Scott, T. (2015). The

effectiveness of group reminiscence therapy for

loneliness, anxiety and depression in older adults in

long-term care: a systematic review. Geriatric Nursing,

36(5), 372-380.

J. Fasola and M. J. Mataric. “A Socially Assistive Robot

Exercise Coach for the Elderly”. In: J. Hum.-Robot

Interact. 2.2 (June 2013), pp. 3–32. doi: 10.5898/

JHRI.2.2.Fasola.

Z. Cao, G. Hidalgo, T. Simon, S. -E. Wei and Y. Sheikh,

"OpenPose: Realtime Multi-Person 2D Pose Estimation

Using Part Affinity Fields," in IEEE Transactions on

Pattern Analysis and Machine Intelligence, vol. 43, no.

1, pp. 172-186, 1 Jan. 2021, doi:

10.1109/TPAMI.2019.2929257.

Gunnar Johansson. “Visual perception of biological motion

and a model for its analysis”. In: Perception &

Psychophysics 14.2 (June 1973), pp. 201–211. issn:

1532-5962. doi: 10.3758/BF03212378.


Y. Sheikh, M. Shah, and M. Sheikh. “Exploring the space of a human action”. In: Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1. Vol. 1. Oct. 2005, pp. 144–149.

Ponzer, J., (2020) Design and implementation of an

application for the physical activation of elderly people

using a service robot, [Master's Thesis, University of

Applied Sciences Würzburg-Schweinfurt]

Alessia Saggese et al. “Learning skeleton representations

for human action recognition”. In: Pattern Recognition

Letters 118 (2019), pp. 23–31.

Ronald Poppe. “A survey on vision-based human action

recognition”. In: Image and Vision Computing 28.6

(2010), pp. 976–990. issn: 0262-8856

O. D. Lara and M. A. Labrador. “A Survey on Human

Activity Recognition using Wearable Sensors”. In:

IEEE Communications Surveys Tutorials 15.3 (Third

2013), pp. 1192–1209. issn: 2373-745X.

Davide Chicco. “Ten quick tips for machine learning in

computational biology”. In: BioData mining 10.1

(2017), p. 35.

Mayring, P. (2004). Qualitative content analysis. A

companion to qualitative research, 1(2), 159-176.
