Body Movement Recognition System using Deep Learning: An Exploratory Study

António Saleiro 1, Marcos Costa 1, Jorge Ribeiro 1,2,a and Bruno Silva 1,3,b

1 Instituto Politécnico de Viana do Castelo, Escola Superior de Tecnologia e Gestão, Viana do Castelo, Portugal
2 ADiT-LAB, Applied Digital Transformation Laboratory, Viana do Castelo, Portugal
3 Research Centre in Sports Sciences, Health Sciences and Human Development (CIDESD), Vila Real, Portugal

Keywords: Machine Learning, Convolutional Neural Networks, Skeleton Map, Body Recognition.

Abstract: This paper presents exploratory work using Deep Convolutional Neural Networks (CNN) to detect correct human movements during sports exercises, in particular specific physical exercises together with the capability to perform the “5 times Sit-to-Stand Test”, following the guideline of the University of Delaware, which is used in several settings to evaluate older adults’ functionality. A supervised learning model, implemented with an open-source approach, detects the position of the human body and calculates the angles of the limb movements (e.g. the angle of the knee) in order to recognize whether the exercises were well executed. Results show that, under normal operating conditions, exercise detection reached an accuracy of around 80% using the CNN Mobilenet architecture. With the implementation of the Posenet algorithm using Tensorflow via ML5.js, we achieved correct-exercise accuracy between 53% and 79% for exercises involving the lower limbs and around 45% for the upper limbs. Given these promising results, we intend to extend the work with complementary approaches to human body pose estimation to improve the training strategy, and with different CNN architectures to improve recognition accuracy, in the context of verifying correct exercise execution in physical activity scenarios for the elderly population.

1 INTRODUCTION

Human motion capture is used in a variety of industries for biomechanics studies. A particularly interesting application uses motion capture data to train deep neural networks on human motion during various activities, in order to predict a person’s intended motion in real time and to verify the correct execution of the activities (e.g. sports exercises) (Menolotto et al., 2020). Moreover, the recognition of body movement is applicable to many real-world problems.

Owing to the success of their mathematical foundations and their applicability over decades, Artificial Intelligence, Machine Learning and Deep Learning approaches (Wang, 2016; Liu et al., 2020) in the computer vision domain have in recent years also gained popularity in pose estimation, due to the power of convolutional networks in object recognition and classification, in particular for human pose estimation (Chen et al., 2017; Shavit & Ferens, 2019; Chen et al., 2019; Safarzadeh et al., 2019).

a https://orcid.org/0000-0003-1874-7340
b https://orcid.org/0000-0001-5812-7029

Promising results have been achieved, and by exploring Deep Convolutional Neural Networks (CNN) (Wang, 2016; Liu et al., 2020; Chen et al., 2017; Shavit & Ferens, 2019) it is possible to develop robust web and mobile applications for motion detection, recognition of a particular movement, detection of human falls, etc. (Safarzadeh et al., 2019).

The main goals of this work were: i) to study and explore CNN algorithms and implementations for recognizing the movements of a person’s body (e.g., simulating sports exercises) and ii) to use open-source CNN algorithm implementations to recognize whether exercises were being well executed, in particular through the angles of the knee and the body limbs. The application context was centred on an intervention program named GMOVE (Vila-Chã & Vaz, 2019), which promotes physical activity among the elderly population. The guideline of the University of Delaware (Delaware, U., 2022) was also used for the functional assessment settings of the exercises.

Saleiro, A., Costa, M., Ribeiro, J. and Silva, B.
Body Movement Recognition System using Deep Learning: An Exploratory Study.
DOI: 10.5220/0011540500003321
In Proceedings of the 10th International Conference on Sport Sciences Research and Technology Support (icSPORTS 2022), pages 101-109
ISBN: 978-989-758-610-1; ISSN: 2184-3201
Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

Concerning the risk of falls in the elderly, Chen et al. (2020) used the OpenPose algorithm to identify points on the body in real time and analysed three critical parameters to recognize falling behaviour, achieving a success rate of 97%.

Chen et al. (2019) compared different types of networks using pose skeleton maps in images. They concluded that mapping the human body gives the network greater success, because of the greater interconnection between the different body limbs. Using the Intersection over Union (IoU) metric, which is used to determine how many objects were detected correctly and how many false positives were generated, they achieved an accuracy of 95.39%, compared with 94.13% for ENet (Lo et al., 2019) and 95.25% for EDANet (Ramirez et al., 2021).

Furthermore, Chen et al. (2017) used an approach for identifying biologically natural or unnatural poses through the Posenet model. The authors obtained an accuracy of 94.5% when the wrists were visible in the images and 70.7% when they were not; for the elbows, the figures were 95.1% and 77.6%, respectively.

Likewise, using pose estimation and Multi-Layer Perceptron classifiers, Safarzadeh et al. (2019) reported an accuracy of 92.5% and a loss of 0.3. Ramirez et al. (2019), focusing on fall detection, extracted the key points of the human body with a pose estimation network and fed them to a Multi-Layer Perceptron using Rectified Linear Unit (ReLU) and sigmoid activation functions.

Borkar et al. (2019) investigated the use of Posenet (Chen et al., 2017) to compare poses by angles. The authors state that 17 key points make it possible to calculate the angles and so predict whether a movement was well performed. Their approach compares the shoulder and wrist coordinates of an arm to determine whether the arm is inward, outward, upward or downward. Similarly, for the leg comparison the system uses the hip angle and the knee angle.
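The shoulder-versus-wrist comparison described above can be illustrated with a minimal Python sketch. It is not the implementation of Borkar et al. (2019): the function name `arm_direction`, the `midline_x` parameter and the decision rules are assumptions made for illustration, and the coordinates are assumed to be image pixels with the y axis growing downward.

```python
def arm_direction(shoulder, wrist, midline_x):
    """Classify an arm from 2D key points given as (x, y) pixel coordinates.

    Assumptions (hypothetical rules, not taken from the cited work):
    - y grows downward, so a wrist with a smaller y than the shoulder is raised;
    - midline_x is the x coordinate of the body midline, e.g. the mean of the
      two shoulder x coordinates;
    - the arm is "inward" when the wrist is closer to the midline than the
      shoulder is, and "outward" otherwise.
    """
    vertical = "upward" if wrist[1] < shoulder[1] else "downward"
    wrist_offset = abs(wrist[0] - midline_x)
    shoulder_offset = abs(shoulder[0] - midline_x)
    horizontal = "inward" if wrist_offset < shoulder_offset else "outward"
    return vertical, horizontal


# Illustrative coordinates only.
left_shoulder = (200.0, 150.0)
right_shoulder = (100.0, 150.0)
midline = (left_shoulder[0] + right_shoulder[0]) / 2.0
raised_arm = arm_direction(left_shoulder, (260.0, 80.0), midline)
lowered_arm = arm_direction(left_shoulder, (160.0, 220.0), midline)
```

Using the distance to the midline rather than a left/right comparison keeps the rule independent of which arm is tested and of whether the camera image is mirrored.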

3 METHODS

3.1 Theoretical Framework

This work used recent CNN approaches and implementations from the Artificial Intelligence and Machine Learning field, specifically to classify human body parts and estimate human body poses using the Posenet algorithm, which is built on the Mobilenet CNN (Chen et al., 2017; Chen et al., 2019; Safarzadeh et al., 2019; Chang et al., 2019; Wang et al., 2020; Cao et al., 2016; Ramirez et al., 2021; Chen et al., 2020; Borkar et al., 2019). Code available in web repositories such as GitHub was also used to support the implementation of the project (Posenet, M., 2022; Renotte, D., 2022; Gruselhaus, G., 2022; Oved, D., 2022; Mediapipe, 2022).

As basis this work followed the studies of Chen et

al. (2019) to count the number of exercises repetitions

and Borkar et al. (2019) to measure the angle between

joints in the body limb movements of the exercises

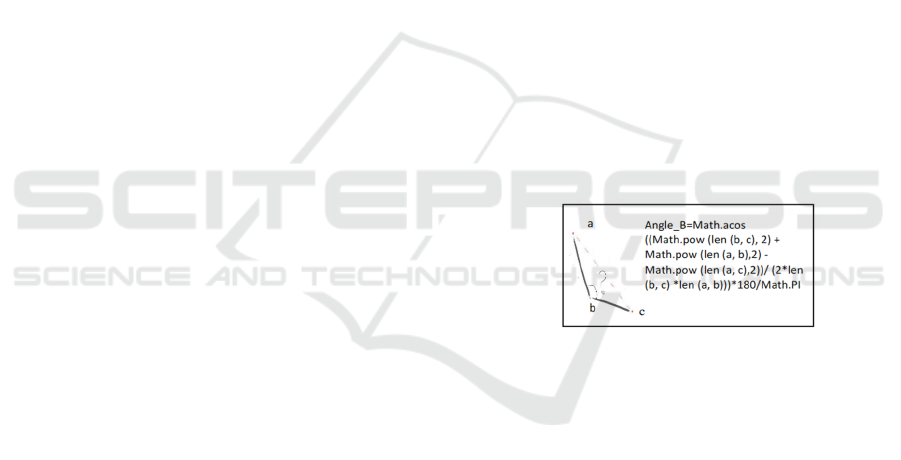

(figure 1).

Figure 1: Formula for calculating the angle between joints

(Borkar et al., 2019).
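Because Figure 1 is available only as an image, the exact formula of Borkar et al. (2019) is not reproduced here. The following is a minimal Python sketch of one common way to compute the angle at a joint from three 2D key points (for instance hip, knee and ankle). The function name `joint_angle` and the sample coordinates are illustrative assumptions, not the authors' implementation.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, between segments b->a and b->c.

    Each point is an (x, y) pair. The result lies in [0, 180].
    Assumption: a plain 2D vector formulation; the cited work may use a
    different but equivalent expression.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 of |cross| and dot is numerically stable near 0 and 180 degrees.
    return math.degrees(math.atan2(abs(cross), dot))


# Illustrative key points: a knee bent at a right angle, then a straight leg.
bent_knee = joint_angle((0.0, 0.0), (0.0, 1.0), (1.0, 1.0))
straight_leg = joint_angle((0.0, 0.0), (0.0, 1.0), (0.0, 2.0))
```

With this convention a fully extended knee gives a value close to 180 degrees and a seated posture gives a value near 90 degrees, which is the kind of signal a correctness check on the knee angle can use.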

In practical terms, two prototypes were created with implementations of Posenet. The first is a web application that analyses and recognizes the movements of a person’s body (e.g., simulating sports exercises) and recognizes whether the exercises were being well executed, particularly through the angle of the limbs. It was implemented using TensorFlow via ML5.js (Posenet, M., 2022; Gruselhaus, G., 2022; Oved, D., 2022). Additionally, to provide an application for mobile devices, an Android-based mobile app was implemented using the Mediapipe framework (Mediapipe, 2022).

3.2 Application Context

The work was applied in the context of an intervention program named GMOVE (Vila-Chã & Vaz, 2019), which promotes physical activity and quality of life for the elderly population through specific exercises and the capability to perform the “5 times Sit-to-Stand Test”. The University of Delaware guideline (Delaware, U., 2022), “Assesses functional lower extremity strength, transitional movements”, was also followed in order to check the number of times a person can stand up from and sit down on a chair in a certain amount of time.

The GMOVE manual is structured into 45 exercises that evaluate cardiorespiratory resistance, muscle strength, balance and postural control, and flexibility. For the purposes of this research, the selected exercises were those that: i) are muscle strength exercises for the upper and lower limbs; ii) do not require exercise equipment such as rubber bands, dumbbells, etc.; and iii) are performed with an isotonic contraction (Table 1).

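Table 1: Exercises of the GMOVE Project (Vila-Chã & Vaz, 2019).

| # | Category | Member | Position | Objective (*) |
|---|---|---|---|---|
| 1 | Muscle strength | Lower limbs | Sit to Stand on the chair | X |
| 2 | Muscle strength | Lower limbs | Squat with weight | |
| 3 | Muscle strength | Lower limbs | Isometric sitting position against the wall | |
| 4 | Muscle strength | Lower limbs | Hip extension | X |
| 5 | Muscle strength | Lower limbs | Dead lift with support | X |
| 6 | Muscle strength | Lower limbs | Lateral leg lift | X |
| 7 | Muscle strength | Lower limbs | Shoulder bridge | |
| 8 | Muscle strength | Lower limbs | Knee flexion | X |
| 9 | Muscle strength | Lower limbs | Seated knee extension | X |
| 10 | Muscle strength | Lower limbs | Standing plantar flexion in the ground | X |
| 11 | Muscle strength | Lower limbs | Standing plantar flexion in a step | |
| 12 | Muscle strength | Lower limbs | Seated Plantar flexion | X |
| 13 | Muscle strength | Trunk - back | Row with elastic | |
| 14 | Muscle strength | Trunk - back | Shoulder horizontal extension with elastic | |
| 15 | Muscle strength | Trunk - Pectoral | Push-up on the wall | X |
| 16 | Muscle strength | Trunk - Pectoral | Dumbbell’s chest press | |
| 17 | Muscle strength | Shoulders | Seated military shoulder press | |
| 18 | Muscle strength | Shoulders | Front raise with dumbbells elevation of arms | |
| 19 | Muscle strength | Shoulders | Seated shoulder lateral rise with dumbbells | |
| 20 | Muscle strength | Shoulders | High row | |
| 21 | Muscle strength | Upper limbs | Biceps curl with dumbbells | |
| 22 | Muscle strength | Upper limbs | Kick back with dumbbells | |
| 23 | Muscle strength | Upper limbs | Tennis ball hand squeeze | |
| 24 | Muscle strength | Upper limbs | Towel twist | X |
| 25 | Muscle strength | Core muscles | Sit ups | |
| 26 | Muscle strength | Core muscles | Sit up with heels t | |
| 27 | Muscle strength | Core muscles | Diaphragmatic breathing | |
| 28 | Muscle strength | Core muscles | Alternate extension of arms and legs (dead bug) | |
| 29 | Muscle strength | Core muscles | Bird Dog exercise | |
| 30 | Balance and flexibility | Balance | Single leg stance | |
| 31 | Balance and flexibility | Balance | Toes rises and heel stance | |
| 32 | Balance and flexibility | Balance | Inline walking | |
| 33 | Balance and flexibility | Balance | On leg stance walking | |
| 34 | Balance and flexibility | Flexibility | Neck stretch | |
| 35 | Balance and flexibility | Flexibility | Shoulders internal and external rotation | |
| 36 | Balance and flexibility | Flexibility | Back scratch with a towel stretch | |
| 37 | Balance and flexibility | Flexibility | Fists stretch | |
| 38 | Balance and flexibility | Flexibility | Shoulder horizontal flexion and extension | |
| 39 | Balance and flexibility | Flexibility | Trunk mobility stretch | |
| 40 | Balance and flexibility | Flexibility | Scapular stretches | |
| 41 | Balance and flexibility | Flexibility | Lower back stretch | |
| 42 | Balance and flexibility | Flexibility | Quadriceps stretch | |
| 43 | Balance and flexibility | Flexibility | Hamstring stretch | |
| 44 | Balance and flexibility | Flexibility | Calf stretch | |
| 45 | Balance and flexibility | Flexibility | Ankle dorsiflexion and plantarflexion mobilization | |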


In parallel, the “5 times Sit-to-Stand Test” protocol was followed, checking the number of times a person can perform the movement in a certain amount of time. The test assesses functional lower extremity strength, transitional movements, balance, and fall risk, with cut-off points and reference values for several populations, and was intended here to evaluate older adults’ functionality (Marques et al., 2014).

3.3 Procedures

Individually, two human models, proficient in the

execution of the motor tasks were used to perform the

exercises. According to the inclusion criteria it was

implemented 10 of the initial 45 exercises (Table 1).

Following the related work presented in the previous

sections and in order to implement the prototype for

identification and classification of human body parts

for contactless screening systems it was created a

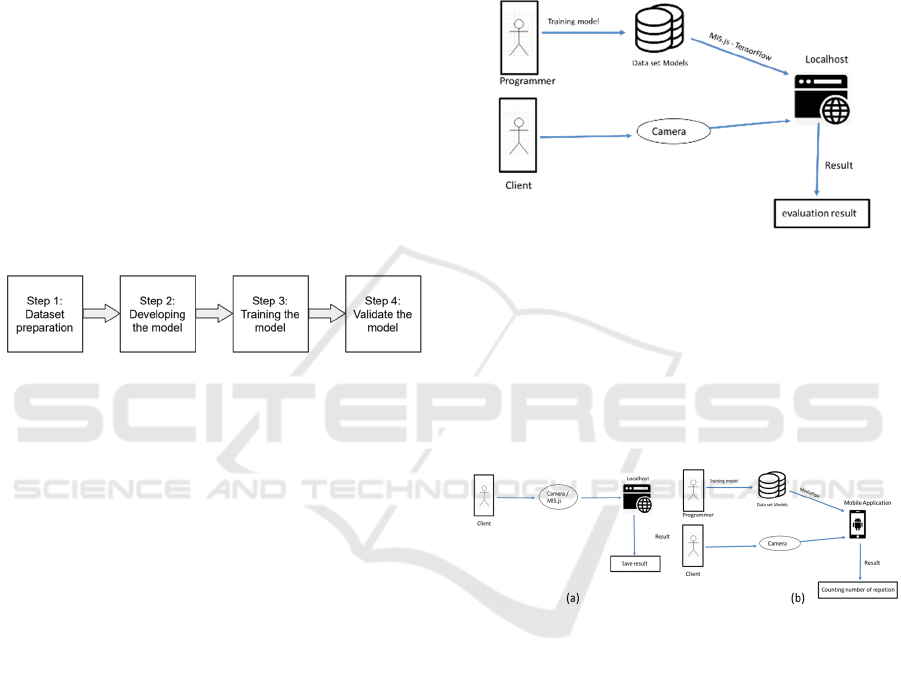

principle to adopt after the gathering the images from

the exercises (figure 2).

Figure 2: Principle adopted after collecting images with

Posenet and ML5.js.

In the first phase, the dataset was prepared so that the data could be tested in the later phase. Next, development began on the model to identify and classify body parts, more specifically the chest area, evaluating the pictures taken in the previous phase. Lastly, these methods were implemented in the practical applications (prototypes) to recognize the exercises and whether they were well executed.

3.4 Architecture

In the first phase (figure 3), the dataset was prepared so that the data could be tested in the later phase. Next, development began on the model to identify and classify body parts, more specifically the chest area, evaluating the pictures taken in the previous phase. Afterwards, as mentioned, these methods were implemented to create the prototypes and use the models.

The process followed three steps: i) Creating the Model: a local server was created to run all the services needed to develop the first model; when the page starts, the user has to grant permission to access the camera and can then create the first model. As soon as the data collection is finished, the process can be repeated or ended; ii) Training the Model: to train the model, a previously saved file must be placed inside the training folder, and the name of the parameter must be changed to the name of that saved file. Training the first model saved three files, which are the trained models (dataset models), named "model", "model weights" and "model meta"; iii) Testing the Model: once all the previous steps had been successfully completed, it was possible to test the created models.

Figure 3: General Architecture.

In the web prototype, the user places the computer in front of them and then executes the squat, and the local server saves the exercise as video. By viewing the angles, the user can recognize whether the exercise is being performed according to the norm and thus increase the number of repetitions done (figure 4 (a), computer/web based, and figure 4 (b), Android-based mobile app).

Figure 4: General Second Architecture (a – web based; b –

Android Based).

Following the same approach as the architecture of the web platform, which checks whether an exercise was well performed, the mobile application adds the functionality of counting the number of repetitions executed. The mobile version differs from the web version in that it does not allow the visualization of metrics during the creation of the models, since it uses Mediapipe, which does not currently provide this information. For this reason, creating models with Posenet (web platform) allows their performance to be analysed, while creating them with Mediapipe (mobile application) does not allow their performance to be assessed against the dataset. Moreover, the images used to train the model via Mediapipe followed a different approach, with one image of the exercise at its starting point (input) and another at its end (output), with the human body estimation. These images must have very high resolution (the best possible quality); they are submitted to Mediapipe and the model is created.

3.5 Dataset and Data Processing

When using ML5.js to train the model and build the dataset, it was necessary to record a video of each exercise phase, at a resolution of 640x480, obtained directly from the computer webcam. For the 10 included exercises, it was necessary to validate the number of phases in each exercise and record a video for each one. The joint angle measurements, especially knee flexion and extension, were visually inspected and assessed with an accelerometer-based smartphone application.

The dataset consists of 20 videos, with no image processing. A web platform for exercise recognition was developed using ML5.js with Posenet, along with a mobile prototype using Mediapipe. Each exercise was trained with its specific video, as shown in figure 5.

Figure 5: Example of the model training (a), e.g. for the exercise phase “is starting to lift” (“está a começar a levantar” in Portuguese), and model performance for exercise 1 (b).

The web platform verifies the correct execution of an exercise by calculating the joint angle using the formulas from the work of Borkar et al. (2019). It was necessary to add further information, such as age and gender, to match the metrics of the sit-to-stand test. For this reason, a form was provided to associate the video with these characteristics, so that the formulas could be used in alignment with the guidelines of the “5 times Sit-to-Stand Test”.

To create the model, after filling out the initial form, the exercise had to be performed as many times as possible, following the previously defined procedures.

After the exercise was completed, the video was downloaded and labelled with the title, name, age and number of repetitions, according to the previous guidelines (Delaware, U., 2022).

3.6 Model Training and Validation

The Posenet approach and implementations (Chen et al., 2017) with Tensorflow via ML5.js (Posenet, 2022; Gruselhaus, G., 2022) were used for the web platform, and Mediapipe (Mediapipe, 2022) for the mobile app. Dataset preparation, data processing and model creation followed different approaches in each case: the web platform used video to create the models, while the mobile app used initial and final images of each exercise. For the models created on the web platform, the results differed depending on the exercise. The models were trained on a computer with an Intel Core i7-7700HQ processor (2.8 GHz), 32 GB of DDR4-2400 RAM, an NVIDIA GeForce GTX 1060 GPU, a 1 TB HDD, a 462 GB SSD and an HP Wide Vision webcam (FHD IR Camera).

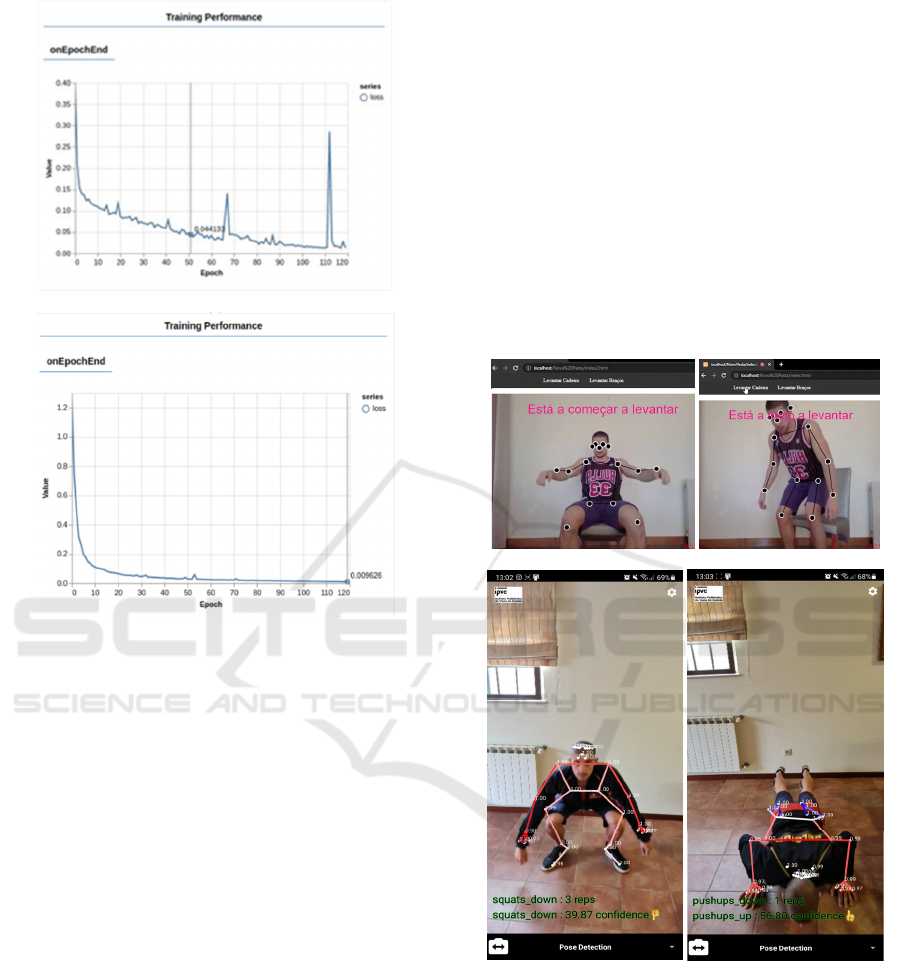

Across several numbers of epochs, for the tested exercises, the lowest loss was found at 120 epochs (figure 6 (b)). As this is a proof-of-concept work, training with 500 epochs was also conducted. However, the computation time was too long and, in practice, the results were not much better. After each training run, the exercise was performed in live mode to analyse the performance results. In the “5 times Sit-to-Stand Test” we used the calculated knee angles to check when the person gets up from the chair, thus counting the number of repetitions according to the calculation method described in Chen et al. (2019).
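Counting repetitions from knee angles can be sketched as a small two-state machine. The Python example below is a hedged illustration, not the calculation method of Chen et al. (2019): the function name `count_sit_to_stand` and the thresholds `sit_below` and `stand_above` are hypothetical values chosen for the example.

```python
def count_sit_to_stand(knee_angles, sit_below=100.0, stand_above=160.0):
    """Count sit-to-stand repetitions from a sequence of knee angles in degrees.

    A repetition is counted each time the knee angle rises above `stand_above`
    after having dropped below `sit_below`. Both thresholds are illustrative
    assumptions; in practice they would need to be tuned on recorded data.
    """
    repetitions = 0
    seated = False
    for angle in knee_angles:
        if angle <= sit_below:
            # The person is considered seated.
            seated = True
        elif angle >= stand_above and seated:
            # Transition from seated to standing completes one repetition.
            repetitions += 1
            seated = False
    return repetitions


# Illustrative per-frame knee angles: seated, stand, seated, stand, seated.
sample_angles = [90, 95, 130, 165, 170, 140, 92, 120, 168, 150, 95]
sample_reps = count_sit_to_stand(sample_angles)
```

Using two separate thresholds leaves a band in between where nothing is counted, so small frame-to-frame jitter in the estimated angle does not produce spurious repetitions.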

CNN evaluation typically uses specific metrics (Liu et al., 2020; Shavit & Ferens, 2019; Ramirez et al., 2021) based on false positives, false negatives, true positives and true negatives, such as the confusion matrix, model accuracy, precision, root mean square error and F1-score, as well as CPU and GPU processing performance metrics. Other common metrics are the mean Average Precision (mAP) and the Intersection over Union (IoU), which measures the overlap between a predicted region and the ground truth and is used to determine how many objects were detected correctly and how many false positives were generated.
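As an illustration of the metrics listed above, the following Python sketch computes accuracy, precision, recall and F1-score from confusion-matrix counts, and the IoU of two axis-aligned boxes. The function names and the sample counts are assumptions made for the example; they are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard metrics from true/false positive and negative counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def box_iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


# Made-up counts and boxes, for illustration only.
metrics = classification_metrics(tp=8, fp=2, fn=2, tn=8)
overlap = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
```

In detection benchmarks a prediction is usually counted as a true positive when its IoU with a ground-truth region exceeds a chosen threshold, which is how IoU connects to the false-positive counts mentioned in the text.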

The models were trained and evaluated using the detection accuracy and loss of the output camera pose estimated by DSAC, a differentiable RANSAC (Random Sample Consensus) for camera localization (Brachmann et al., 2017).

Figure 6: Training chart with 120 epochs; panels (a) and (b).

The evaluation of the models for the web prototype using Posenet with Tensorflow via ML5.js is presented in figure 6 (a) and (b) for 70 and 120 epochs, respectively. Beyond 120 epochs, the results are similar to those at 120 epochs.

The model creation procedure in the mobile prototype differed slightly from that of the web prototype described above. The Mediapipe version used to create the models in the mobile app did not provide detailed statistics on the models created, and in the user recognition step it provides only the general accuracy of the exercise. Consequently, the images used to train the model via Mediapipe have to follow a different approach, with an initial image and a final image of the exercise at the best possible image quality. Thus, with Mediapipe the models were created but their performance metrics were unknown. Nevertheless, we consider the performance of the mobile app to be high, because the exercise recognition accuracy was generally high (around 99% in the simulations). Therefore, in the results and discussion section we focus on both model and exercise recognition performance for the web prototype, and only on exercise recognition performance for the mobile app.

4 RESULTS AND DISCUSSION

The performance of the models in the prototypes was quite promising, considering the tests performed on exercise recognition. Figure 7 illustrates examples of exercise recognition in the two prototypes and the validation of correct exercises. For example, the mobile app (figure 7 (c)) shows a hand icon in the caption at the bottom of the app form, coloured green or red depending on whether the exercise was well executed. The web platform presents a message.

Figure 7: Examples of the web platform, (a) “raise arms” and (b) “bicep exercise done correctly”, and of the mobile app, (c) recognition of a correct (or incorrect) exercise in a simulation.

As mentioned in section 3.6, because Mediapipe gives no access to detailed performance metrics for model creation and provides only a general accuracy for exercise recognition, the mobile app form presented only the general recognition accuracy. However, we compensated for this programmatically by calculating the angles of the limbs, using the formulas of Borkar et al. (2019), in order to verify whether the exercises were well executed, and by counting the number of repetitions executed following the University of Delaware guideline (Delaware, U., 2022) for the functional assessment settings of the exercises.

Table 2: Loss value, accuracy and results for the ten exercises performed and trained.

| # | Exercise | Loss | Accuracy | Results |
|---|---|---|---|---|
| 1 | Sit to Stand on the chair | 0.042 | 0.787 | Promising results# |
| 4 | Hip extension | 0.030 | bad recognition * | Did not get results |
| 5 | Dead lift with support | 0.021 | 0.787 | Promising results with low reliability |
| 6 | Lateral leg lift | 0.120 | 0.537 | Promising results# |
| 8 | Knee flexion | 0.055 | 0.612 | Promising results with low reliability |
| 9 | Seated knee extension | 0.150 | bad recognition * | Low reliability |
| 10 | Standing plantar flexion in the ground | 0.010 | 0.474 | Low reliability |
| 12 | Seated Plantar flexion | 0.130 | 0.180 | Low reliability |
| 15 | Push-up on the wall | 0.200 | 0.458 | Promising results with low reliability# |
| 24 | Towel twist | 0.012 | bad recognition * | Did not get results |

* - bad recognition; # - low reliability; Loss – represents a minimizing score (loss)

On the other hand, some of the models created were not able to recognize the exercises. One reason was the operating conditions of the video capture (both when creating the models and when recognizing exercises with them) and the surrounding environment: the background behind the person, luminosity, objects, etc. Here, “normal” conditions mean a scene with no objects (or only a few) behind the person during the exercises, or with a white wall in the background, while “ideal” conditions mean, for example, a scene with no objects at all and a white wall in the background.

Another reason is the small variation in the movements of some exercises, which makes it impossible to detect changes during the exercise. For example, performing exercises in a lateral plane made it difficult to locate the key points of the body and thus confused their identification, as only some of the key points moved. Table 2 presents the performance results for the ten exercises tested and trained.

Some exercises show bad recognition or low reliability, identified with the characters ‘*’ and ‘#’ in Table 2. Exercise 5 achieved an accuracy of 79% and exercise 15 an accuracy of 46%, while exercise 24 showed bad accuracy. Good results for the lower limbs were achieved particularly in exercises with strong movements, namely exercise 1 with an accuracy of 79%, exercise 6 with 53%, exercise 8 with 61% and exercise 10 with 47%. Exercise 12 presented a low accuracy, and exercises 4 and 9 had bad recognition.

To evaluate the robustness of the Posenet model, real images without “ideal” conditions, from outside our dataset, were also used. Moreover, a conventional webcam was used to evaluate the quality of the obtained data in a live stream, in terms of model accuracy and model loss. The Loss and the Accuracy represent the evaluation on the training and validation data, which gives an idea of how well the model was learning and generalizing (Table 2). The model loss is a score to be minimized, so a lower value means better model performance. The model accuracy is a score to be maximized, so a higher value denotes better recognition performance.

We consider the results promising in terms of performance in the web prototype, with an exercise recognition accuracy of around 80%. The bad recognition or low reliability may also be explained by the particularities of each exercise and by how the camera and the model detect the exercise’s movements.

Exercise 24 showed bad accuracy because the key points never changed position, or the changes were not significant, owing to the small movements detected by the camera at wrist level.

In our exploratory work, we observed that the more variation there is in the different points of the body, the greater the percentage of success in predicting the movement, regardless of whether the prototype is web based or a mobile app. We also observed that some human movements show bad recognition. This low or poor recognition, in particular for exercises 4, 9, 12 and 24, was related to the specificity of each exercise in the process of collecting the videos, which should be given more attention in the recording phase in the future. For example, if an exercise does not change the positioning of the key points, the model cannot check whether the exercise has been performed. The key points of the upper body were the eyes, ears, right and left shoulders, elbows, and wrists. In the lower body, the key points were the right and left sides of the hip, the knees, and the ankles.
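The observation that exercises with little key point movement are hard to recognize suggests a simple check on recorded videos before training. The Python sketch below is an assumption-laden illustration, not part of the prototypes: the function names and the `min_motion` threshold (in pixels) are hypothetical.

```python
import math


def keypoint_motion(frames):
    """Range of motion of each key point across a sequence of frames.

    `frames` is a non-empty list of frames; each frame is a list of (x, y)
    key points in a fixed order. Returns one value per key point: the diagonal
    of the bounding box covered by that key point over the whole sequence.
    """
    motion = []
    for index in range(len(frames[0])):
        xs = [frame[index][0] for frame in frames]
        ys = [frame[index][1] for frame in frames]
        motion.append(math.hypot(max(xs) - min(xs), max(ys) - min(ys)))
    return motion


def moving_keypoints(frames, min_motion=20.0):
    """Indices of key points whose range of motion reaches `min_motion`."""
    return [index for index, value in enumerate(keypoint_motion(frames))
            if value >= min_motion]


# Three key points over two frames; only the second one moves noticeably.
sample_frames = [
    [(100.0, 100.0), (200.0, 200.0), (300.0, 300.0)],
    [(101.0, 100.0), (200.0, 260.0), (300.0, 301.0)],
]
active = moving_keypoints(sample_frames)
```

A recording in which very few key points are flagged as moving, as reported for the towel twist, could be re-recorded from a different camera angle before a model is trained on it.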

From another point of view, if the exercise was performed in a lateral plane, or if some part of the person’s body was hidden, the model could not determine whether the exercise was being performed, because only some of the key points of the body moved. For the lower limbs the results were better, particularly in exercises with significant joint movements, namely exercises 1, 6, 8 and 10. Nevertheless, exercise 12 presented a low accuracy, possibly because it was observed in the lateral plane and only the ankle moved significantly, while the hip and knee key points did not change significantly.

Exercises 4 and 9 had bad recognition, possibly because they were also observed in the lateral plane, which made the body movement difficult for the camera, and consequently the model, to recognize. However, observing or recording exercises in the lateral plane must be treated with caution, mostly concerning reliability, since exercises 5, 8 and 12 showed promising results with low reliability and were tested in the lateral plane.

Human movement is inherently complex, dynamic, multidimensional and highly non-linear, which requires work in predictive modelling, classification and dimensionality reduction (Zago et al., 2021). However, these results may also be influenced by the number of observations, since the dataset is also a key factor, and when the number of observations in a dataset is smaller than the number of features the feature space is reduced (Halilaj et al., 2018).

On the other hand, when exploring the angles with which a movement can be associated, we found that the best results were generated only when the angle of 3 key points changed, which can serve as a complement for exercises for which prediction models are more difficult to create. In the future, it will be interesting to explore additional functionalities for measuring the angles of arm and leg movements, as well as people of different physical statures performing the exercise movements, in order to evaluate whether differences in limb length influence the recognition accuracy of the CNN approaches.

5 CONCLUSIONS

This work brings together the potential of Artificial Intelligence techniques in the field of computer vision, using Convolutional Neural Networks (CNN) applied to human movements, in particular to the physical exercises of elderly persons following an intervention program that promotes their physical activity through specific exercises, together with a guideline for evaluating older adults’ functionality.

Using open-source CNN algorithm implementations, the aim was to recognize whether the exercise movements were being well executed, in particular through the angles of the skeleton limbs. Using camera devices, two prototypes were created: a web-based computer application and a mobile app. The web application uses the Posenet algorithm, which is based on the Mobilenet CNN approach, for movement recognition and was implemented in JavaScript with TensorFlow via ML5.js. We achieved correct-exercise accuracy between 53% and 79% for exercises involving the lower limbs and around 45% for the upper limbs.

The mobile app using Posenet was implemented via the Mediapipe framework and, in order to detect the position of the human body and verify whether the exercises were performed correctly, was complemented with the calculation of the angles of the limbs. However, because the current version of Mediapipe does not allow the model’s performance or detailed recognition statistics to be viewed and analysed, presenting only the general accuracy, we assume for this prototype that the model creation achieved high rates and accept the performance values provided by the framework (around 99% in the simulations). Thus, both prototypes were used for exercise recognition and for checking correct execution, but model performance was analysed only for the web prototype.

Given the promising results achieved, we consider that it will be interesting in the future to extend the work with complementary approaches to human body pose estimation to improve the training strategy, and with different CNN architectures, in the context of physical exercises for the elderly population, as well as with a larger number of persons and scenarios.

ACKNOWLEDGEMENTS

This publication was supported by the Award ADIT_BII_2021_1 of the Research Unit ADiT-Lab (Applied Digital Transformation Laboratory) of the Polytechnic Institute of Viana do Castelo (IPVC).

icSPORTS 2022 - 10th International Conference on Sport Sciences Research and Technology Support

REFERENCES

Borkar, P., Pulinthitha, M., & Pansare, A. (2019). Match Pose - A System for Comparing Poses. International Journal of Engineering Research and Technology, Vol. 8. https://www.ijert.org/match-pose-a-system-for-comparing-poses

Brachmann, E., Krull, A., Nowozin, S., Shotton, J., Michel, F., Gumhold, S. & Rother, C. (2017). DSAC - Differentiable RANSAC for Camera Localization. 2492-2500. https://doi.org/10.48550/arXiv.1611.05705

Chang, J., Moon, G. & Kyoung, L. (2019). AbsPoseLifter: Absolute 3D Human Pose Lifting Network from a Single Noisy 2D Human Pose. arXiv preprint arXiv:1910.12029. https://doi.org/10.48550/arXiv.1910.12029

Chen, W., Jiang, Z., Guo, H. & Ni, X. (2020). Fall Detection Based on Key Points of Human-Skeleton Using OpenPose. Symmetry, 12(5), 744. https://doi.org/10.3390/sym12050744

Chen, X., Zhou, Z., Ying, Y., & Qi, D. (2019). Real-time Human Segmentation using Pose Skeleton Map. In: Chinese Control Conference, pp. 8472-8477. https://dx.doi.org/10.23919/ChiCC.2019.8865151

Chen, Y., Shen, C., Wei, X., Liu, L. & Yang, J. (2017). Adversarial Posenet: A Structure-Aware Convolutional Network for Human Pose Estimation. IEEE International Conference on Computer Vision, 1221-1230. https://dx.doi.org/10.1109/ICCV.2017.137

Cao, Z., Simon, T., Wei, S. & Sheikh, Y. (2016). Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. arXiv:1611.08050. https://doi.org/10.48550/arXiv.1611.08050

Delaware, U. (2022, July 20). Delaware Physical Therapy Clinic - Assesses functional lower extremity strength, transitional movements, balance, and fall risk. https://cpb-us-w2.wpmucdn.com/sites.udel.edu/dist/c/3448/files/2017/08/Functional-Tests-Normative-Data-Aug-9-2017-18kivvp.pdf

Gruselhaus, G. (2022, July 20). GitHub Repository implementation of Posenet with Tensorflow and ML5.js. https://github.com/CodingTrain/website/tree/main/learning/ml5

Halilaj, E., Rajagopal, A., Fiterau, M., Hicks, J., Hastie, T. & Delp, S. (2018). Machine learning in human movement biomechanics: Best practices, common pitfalls, and new opportunities. Journal of Biomechanics, Vol. 81, 1-11. https://doi.org/10.1016/j.jbiomech.2018.09.009

Liu, L., Ouyang, W., Wang, X., Fieguth, P., Chen, J., Liu, X. & Pietikäinen, M. (2020). Deep Learning for Generic Object Detection: A Survey. International Journal of Computer Vision, 128, 261–318. https://doi.org/10.1007/s11263-019-01247-4

Lo, S., Hang, H., Chan, S. & Lin, J. (2019). Efficient Dense Modules of Asymmetric Convolution for Real-Time Semantic Segmentation. Proceedings of the ACM Multimedia Asia. https://doi.org/10.48550/arXiv.1809.06323

Marques, E. A., Baptista, F., Santos, R., Vale, S., Santos, D. A., Silva, A. M., Mota, J. & Sardinha, L. (2014). Normative Functional Fitness Standards and Trends of Portuguese Older Adults: Cross-Cultural Comparisons. Journal of Aging and Physical Activity, 22(1), 126-137.

Mediapipe, D. (2022, July 20). Media Pipe Framework for live and streaming media in mobile devices. Version Bazelversion 5.2.0. https://mediapipe.dev

Menolotto, M., Komaris, D., Tedesco, S., O'Flynn, B. & Walsh, M. (2020). Motion Capture Technology in Industrial Applications: A Systematic Review. Sensors, 20(19), 5687. https://doi.org/10.3390/s20195687

Oved, D. (2022, July 20). Implementation of Real-time Human Pose Estimation in the Browser with TensorFlow.js. https://blog.tensorflow.org/2018/05/real-time-human-pose-estimation-in.html

Posenet, M. (2022, July 20). Machine Learning Framework with Posenet Implementation - ML5.js. https://ml5js.org/

Ramirez, H., Velastin, S., Meza, I., Fabregas, E., Makris, D. & Farias, G. (2021). Fall Detection and Activity Recognition Using Human Skeleton Features. IEEE Access, Vol. 9, 33532-33542. https://dx.doi.org/10.1109/ACCESS.2021.3061626

Renotte, N. (2022, July 20). GitHub Repository of Real Time Posenet implementation. https://github.com/nicknochnack/PosenetRealtime

Safarzadeh, M., Alborzi, Y. & Ardekany, A. (2019). Real-time Fall Detection and Alert System Using Pose Estimation. 2019 7th International Conference on Robotics and Mechatronics, 508-511. https://dx.doi.org/10.1109/ICRoM48714.2019.9071856

Shavit, Y., & Ferens, R. (2019). Introduction to camera pose estimation with deep learning. arXiv preprint arXiv:1907.05272. https://doi.org/10.48550/arXiv.1907.05272

Vila-Chã, C. & Vaz, C. (2019). Guia de Atividade Física para Maiores de 65 anos. GMOVE Project - intervention program to promote physical activity and quality of life for the elderly population. https://www.researchgate.net/publication/338281960_Guia_de_Atividade_Fisica_para_Maiores_de_65_anos

Wang, X. (2016). Deep Learning in Object Recognition, Detection, and Segmentation. Foundations and Trends in Signal Processing, 8(4), 217-382. http://dx.doi.org/10.1561/2000000071

Wang, K., Lin, L., Jiang, C., Qian, C., & Wei, P. (2020). 3D Human Pose Machines with Self-Supervised Learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42, 1069-1082. https://doi.org/10.48550/arXiv.1901.03798

Zago, M., Kleiner, A., & Federolf, P. (2021). Machine Learning Approaches to Human Movement Analysis. Frontiers in Bioengineering and Biotechnology, 8:1573. https://doi.org/10.3389/fbioe.2020.638793
