A Comparison of Date Selection Elements on Mobile Touch Devices in eCommerce Sites

Asta Romikaitytė¹, Stelian Adrian Stanci², Javier De Andrés³ᵃ, Daniel Fernández-Lanvin²ᵇ and Martín González-Rodríguez²ᶜ

¹ Department of Computer Science, Kaunas University of Technology, Lithuania

² Department of Computer Science, University of Oviedo, Spain

³ Department of Computing, University of Oviedo, Spain

Keywords: Date Input, Mobile Device, GOMS, Forms, Usability.

Abstract: Several works explore the different ways in which a user can input a date, as it is a very common operation on many websites, but the number of papers covering this topic on mobile devices is very limited. In this paper several alternatives are considered, studied using Goals, Operators, Methods, and Selection Rules (GOMS), and tested in an online experiment with hundreds of mobile users to see how each of them performs. The results show that the drop-down menu outperforms the others, which were more novel to the users, in completion time and user satisfaction; it also produced the fewest errors, although the differences in errors were not statistically significant.

1 INTRODUCTION

Nearly two-thirds (64.14%) of all Web traffic is estimated to come from mobile phones (“Mobile vs. Desktop Traffic Market Share [May 2022] | Similarweb,” n.d.). Mobile phones allow users to carry out daily tasks: order a purchase, make an appointment, plan a trip, etc. In e-Commerce sites users are frequently required to enter their date of birth during the registration process. A well-known example is insurance companies, which ask users to enter their date of birth, vehicle registration date, vehicle manufacturing date, accident date, etc. (for example, mutua.es). If users face obstacles while filling in the form, they may decide to switch to another, more user-friendly application, that is, to a rival company. This leads to a loss of users as well as of profit (Wroblewski, 2008). As a form consists of a variety of data input elements and all of them contribute to the overall performance of the form, it is worth analyzing each of them in depth.

In fact, a considerable amount of research has already analyzed the usability of form elements: some studies provide guidelines for interacting with the elements (Linderman, Fried, & 37signals (Firm), 2004; Martin, 2013, 2014; Seckler, Heinz, Bargas-Avila, Opwis, & Tuch, 2014); others study the elements' performance in specific tasks (Healey, 2007; Jensen, Hansen, Eika, & Sandnes, 2020). A few studies have compared several date entry methods (Javier A. Bargas-Avila, Brenzikofer, Tuch, Roth, & Opwis, 2011; Brown, Jay, & Harper, 2010). However, only a minority of them investigate the topic in the mobile context (Türkcan & Onay Durdu, 2018).

This research aims to compare three date input methods for mobile applications that, as far as we could find, have not been compared before: spinners, drop-down menus and radio-buttons. The study seeks to provide guidelines for the use of date entry elements by discussing their completion time, user satisfaction and error probability.

a https://orcid.org/0000-0001-6887-4087

b https://orcid.org/0000-0002-5666-9809

c https://orcid.org/0000-0002-9695-3919

Romikaitytė, A., Stanci, S., De Andrés, J., Fernández-Lanvin, D. and González-Rodríguez, M.

A Comparison of Date Selection Elements on Mobile Touch Devices in eCommerce Sites.

DOI: 10.5220/0011577800003323

In Proceedings of the 6th International Conference on Computer-Human Interaction Research and Applications (CHIRA 2022), pages 217-224

ISBN: 978-989-758-609-5; ISSN: 2184-3244

Copyright © 2022 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

In previous studies, many aspects of Web form usability have been explored. This section introduces findings on radio-buttons, drop-down menus and overall form structure, as well as previous studies focusing on date entries.

Regarding web form improvement, the study by Bargas-Avila et al. (J.A. Bargas-Avila et al., 2010) summarizes 20 guidelines for usable web form design. The list is divided into five sections: form content, form layout, input types, error handling and form submission. Suggestions include: place the label above the input field (“Label Placement in Forms :: UXmatters,” n.d.); ask one question per row; use radio buttons or drop-down menus for entries that can easily be mistyped (Linderman et al., 2004); for up to four options, use radio buttons (Healey, 2007); order options in an intuitive sequence (Beaumont, 2002); for date entries, use a drop-down menu when it is crucial to avoid format errors (Christian, Dillman, & Smyth, 2007). Seckler et al.'s empirical study (Seckler et al., 2014) sets out to test these guidelines and applies a holistic approach to evaluate their effect on efficiency, effectiveness and user satisfaction. The results revealed that the improved web forms led to faster completion times, fewer submission trials and fewer eye movements.

Jensen et al. (Jensen et al., 2020) compared different country entry elements: drop-down menus, radio-buttons and text fields with autocomplete. In terms of task completion time, the radio-button interface was the slowest, while text fields were significantly faster. However, no significant difference was found between the drop-down menu and the text field.

Desktop date entries were analyzed by Bargas-Avila et al. (Javier A. Bargas-Avila et al., 2011). They compared six date input methods: (1) three separate text fields; (2) drop-down menu; (3) text field with the label on the left; (4) text field with a permanent label inside the box; (5) text field with a temporary label inside the box; (6) calendar view. Wrong-format and wrong-date errors were counted, completion time was measured, and user satisfaction was assessed with a questionnaire. The fastest completion times were observed with versions 3 and 5. The drop-down menu and the calendar view showed no formatting errors, but they also had longer input times. In addition, more incorrect dates were recorded for the calendar view.

Methods for specifying dates in mobile contexts were investigated by Türkcan et al. (Türkcan & Onay Durdu, 2018). The study evaluated a text box, a divided text box, a date picker and a calendar view for date entry. As in the previous study, this research measured task completion time, number of errors and satisfaction. In terms of completion time, although the text box was the fastest, no significant difference was found between the text box and the divided text box. The calendar view was significantly slower. Participants also made no mistakes with the text boxes, whilst the calendar view was the most error-prone. Finally, the highest satisfaction ratings were given to the divided text box, followed by the text box.

3 DATE SELECTION ON THE WEB

An analysis was performed of the 10 most visited websites in the retail (amazon.com, ebay.com, rakuten.com, etc.), social media (facebook.com, twitter.com, instagram.com, etc.) and information technology (google.com, office.com, zoom.com, etc.) sectors (“Most Visited Websites - Top Websites Ranking for May 2022 | Similarweb,” n.d.). The results showed that the drop-down menu was the most popular date entry element (retail – 83%, social media – 100%, IT – 100%). Spanish insurance companies with the heaviest website traffic (mutua.es, generali.es, etc.) use more diverse date entry elements, such as radio-buttons, text fields and calendar views (“Most Visited Websites - Top Websites Ranking for May 2022 | Similarweb,” n.d.). Some of those input methods have already been analyzed in previous studies (Türkcan & Onay Durdu, 2018), whereas no analysis of radio-buttons for date entry was found; this gap is addressed in this paper.

4 METHOD

This study uses a methodology similar to those of Bargas-Avila et al. (Javier A. Bargas-Avila et al., 2011) and Türkcan et al. (Türkcan & Onay Durdu, 2018).

4.1 Experimental Design

As a first filter, different date entry methods were evaluated using the Goals, Operators, Methods, and Selection Rules (GOMS) method. GOMS allows estimating the time required to complete different tasks on a GUI (Card, Moran, & Newell, 1983), while its Keystroke-Level Model (KLM) extension minimizes the effort needed to perform the calculations (Setthawong & Setthawong, 2019). The concept is widely known and used in Human-Computer Interaction (HCI) research (John, Kieras, & Kieras, 1996), mainly due to the ability of GOMS models to make exceptionally accurate predictions (Gray, John, & Atwood, 1993).

CHIRA 2022 - 6th International Conference on Computer-Human Interaction Research and Applications

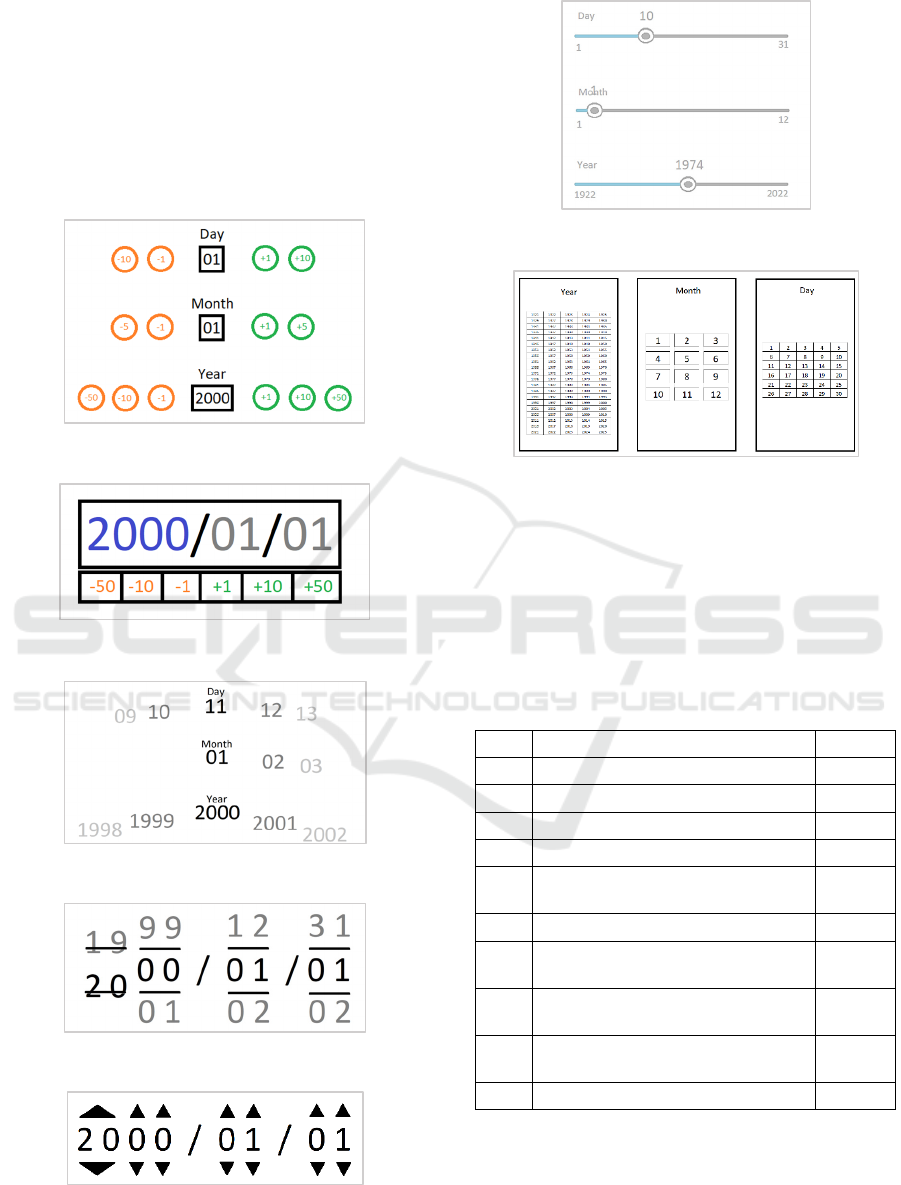

The following calendars were examined: (1) mathematical; (2) mathematical-aligned; (3) spinner; (4) date picker; (5) date picker with arrows; (6) slider; (7) radio-buttons. The designs are illustrated in Figs. 1-7.

Figure 1: Calendar No. 1 – Mathematical.

Figure 2: Calendar No. 2 – Mathematical-aligned.

Figure 3: Calendar No. 3 – Spinner.

Figure 4: Calendar No. 4 – Date picker.

Figure 5: Calendar No. 5 – Date picker with arrows.

Figure 6: Calendar No. 6 – Slider.

Figure 7: Calendar No. 7 – Radio-button.

As the study focuses on the usability of mobile applications, adapted GOMS operators were used (see Table 1). The adaptations were made by Setthawong and Setthawong (Setthawong & Setthawong, 2019) so that element completion times could be accurately calculated for touchscreen interactions.

Table 1: Updated GOMS operators for interactions with a touchscreen.

| Code | Description | Time (s) |
|---|---|---|
| E | Prepare fingers | 0.5 |
| T | Touch screen with finger | 0.2 |
| TT | Touch screen twice with finger | 0.4 |
| D | Move finger over surface | 0.5 |
| M | Move finger to a direct part of screen | 0.7 |
| F | Move finger over surface rapidly | 0.4 |
| S | Pinch, squeeze, spread, or splay gesture | 0.7 |
| P | Touch screen with finger for a long time | 1.1 |
| R | Touch screen with 2 fingers and rotate | 0.8 |
| L | Release fingers | 0.1 |
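A KLM-style estimate is the sum of the operator times along a sequence of actions. The minimal sketch below sums the Table 1 values for a list of operator codes. The example sequence for picking one option (prepare, move, tap, release) is a hypothetical decomposition chosen for illustration, not the one the authors used, so its total is not meant to reproduce Table 2.

```python
# Operator times in seconds, taken from Table 1 (Setthawong & Setthawong, 2019).
OPERATOR_TIMES = {
    "E": 0.5,   # prepare fingers
    "T": 0.2,   # touch screen with finger
    "TT": 0.4,  # touch screen twice with finger
    "D": 0.5,   # move finger over surface
    "M": 0.7,   # move finger to a direct part of screen
    "F": 0.4,   # move finger over surface rapidly
    "S": 0.7,   # pinch, squeeze, spread, or splay gesture
    "P": 1.1,   # touch screen with finger for a long time
    "R": 0.8,   # touch screen with 2 fingers and rotate
    "L": 0.1,   # release fingers
}


def klm_estimate(sequence: list[str]) -> float:
    """Sum the Table 1 operator times for a sequence of operator codes."""
    unknown = [op for op in sequence if op not in OPERATOR_TIMES]
    if unknown:
        raise ValueError(f"Unknown operator codes: {unknown}")
    # Round to hide floating-point noise from summing decimal constants.
    return round(sum(OPERATOR_TIMES[op] for op in sequence), 2)


# Hypothetical decomposition of picking one option: prepare, move, tap, release.
pick_option = ["E", "M", "T", "L"]
print(klm_estimate(pick_option))  # 1.5
```

A full date entry would chain such sequences for day, month and year; how each calendar is decomposed determines the estimate.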

The estimates revealed that radio-buttons (M = 6.75 s) and spinners (M = 8.48 s) are the most promising options in terms of date entry completion time (see Table 2). Therefore, these two elements, together with the drop-down menu (the most popular in the industry), were investigated further.

Table 2: Average completion times estimated using GOMS.

| Calendar | M (s) |
|---|---|
| Mathematical | 12.53 |
| Mathematical-aligned | 12.53 |
| Spinner | 8.48 |
| Date picker | 11.03 |
| Date picker with arrows | 13.47 |
| Slider | 10.95 |
| Radio-buttons | 6.75 |
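The selection step amounts to ranking the Table 2 estimates and keeping the fastest candidates. The short sketch below does exactly that; the helper name `fastest` is illustrative only.

```python
# GOMS estimates in seconds, from Table 2.
GOMS_ESTIMATES = {
    "Mathematical": 12.53,
    "Mathematical-aligned": 12.53,
    "Spinner": 8.48,
    "Date picker": 11.03,
    "Date picker with arrows": 13.47,
    "Slider": 10.95,
    "Radio-buttons": 6.75,
}


def fastest(estimates: dict[str, float], k: int) -> list[str]:
    """Return the k calendars with the lowest estimated completion time."""
    return sorted(estimates, key=estimates.get)[:k]


candidates = fastest(GOMS_ESTIMATES, 2)
print(candidates)  # ['Radio-buttons', 'Spinner']
```

The drop-down menu is then added to these two candidates on the basis of its industry popularity rather than its GOMS estimate.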

4.2 Procedure

To carry out the experiment, an application was developed in which users had to enter the same three dates with each of the calendars mentioned previously (drop-down menu, radio-buttons and spinner). It was developed with HTML, CSS and JavaScript, using Visual Studio Code and IntelliJ as IDEs; the website was hosted on Firebase and can be accessed at https://researchprototype.web.app/. The Firestore service provided by Firebase was used to store the data.

First, a page with a short explanation of the experiment was shown; then users were asked for their age and gender, for classification purposes. Afterwards, a page with videos showing how to use each calendar was presented, so that users could become familiar with the calendars and gain some expertise. Before starting the experiment, users were asked not to navigate back through the pages, as doing so would compromise the validity of the data.

The experiment consisted of entering the following dates: 5th of March 2022, 16th of May 1998 and 20th of June 1964. These dates were hand-picked with the objective of not repeating numbers and of varying their distance from the current date (one near, one far and one in between).

The drop-down menu was implemented with a combo box for each field, and the other two calendars were implemented following the designs shown previously, that is, Calendar No. 7 for radio-buttons and Calendar No. 3 for the spinner. The technical settings for the spinner were: Accuracy = 0.001, MinStepInterval = 5, MaxStepInterval = 500, MaxSpeedAtSteps = 30, NoActionDistance = 0.01, OneStepDistance = 0.02.

The completion time stored for each date was the time span between the selection of the first element of the calendar and the completion of the last one (pressing the button to proceed to the next date was not taken into account), that is, the time elapsed from the beginning to the end of the task.
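The timing rule can be expressed over a log of timestamped interactions. The prototype itself was written in JavaScript and its code is not given in the paper, so this Python sketch is only an illustration: the `Interaction` record and its event kinds ("select", "complete", "next") are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """A hypothetical timestamped event logged while one date is entered."""
    timestamp_ms: float
    kind: str  # assumed kinds: "select", "complete", "next"


def entry_time_s(events: list[Interaction]) -> float:
    """Seconds from the first selection to the last completion of one date.

    "next" events (pressing the button to go to the next date) are ignored,
    since that action is excluded from the measured time.
    """
    selects = [e.timestamp_ms for e in events if e.kind == "select"]
    completes = [e.timestamp_ms for e in events if e.kind == "complete"]
    if not selects or not completes:
        raise ValueError("need at least one select and one complete event")
    return (max(completes) - min(selects)) / 1000.0


log = [
    Interaction(1000, "select"),    # first touch on the day field
    Interaction(2500, "complete"),  # day chosen
    Interaction(3100, "select"),
    Interaction(7310, "complete"),  # last field chosen
    Interaction(8000, "next"),      # ignored
]
print(entry_time_s(log))  # 6.31
```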

After users finished all the inputs, a questionnaire was shown to measure user satisfaction. It was composed of two statements that were also used in the study by Bargas-Avila et al. (Javier A. Bargas-Avila et al., 2011), rated on a 5-point Likert scale (1 = Strongly disagree; 5 = Strongly agree): (1) Filling in the date was comfortable; (2) I could fill in the date quickly and efficiently. When users completed this questionnaire, the data (comprising the user's age and gender, times, dates and answers to the questionnaire) was sent to the database, and users had the option to share the prototype through several social media pages, to give the experiment as much reach as possible.

5 RESULTS

To obtain more accurate results, the data was processed by removing entries that did not fulfil the requirements for being considered valid. A record was required to have all questionnaire items answered, all dates completed (though not necessarily correctly, as mistakes were counted later for further analysis), and to have been submitted from a mobile device, which was verified using the User Agent of each participant's browser. Entries that did not fulfil all these requirements were deleted.
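The three validity criteria can be sketched as a single filter. The record layout, the expected counts (two statements and three dates per calendar, over three calendars) and the User-Agent pattern are all assumptions; the paper does not say which User-Agent test was applied. Looking for the "Mobi" token is a common heuristic, not a guaranteed classifier.

```python
import re

# Assumed heuristic: "Mobi" appears in most mobile browsers' User-Agent strings.
MOBILE_UA = re.compile(r"Mobi|Android|iPhone", re.IGNORECASE)


def is_valid(record: dict, n_answers: int = 6, n_dates: int = 9) -> bool:
    """Apply the three validity criteria to one hypothetical participant record.

    n_answers (2 statements x 3 calendars) and n_dates (3 dates x 3 calendars)
    are assumptions about the record layout, not values from the paper.
    """
    answers = record.get("answers", [])
    dates = record.get("dates", [])
    all_answered = len(answers) == n_answers and all(a is not None for a in answers)
    all_completed = len(dates) == n_dates and all(d is not None for d in dates)
    on_mobile = bool(MOBILE_UA.search(record.get("user_agent", "")))
    return all_answered and all_completed and on_mobile
```

Note that the dates only need to be present, not correct; wrong dates are kept so that they can be counted as errors later.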

Data records were considered outliers if the date entry completion time differed from the mean by more than three standard deviations (Javier A. Bargas-Avila et al., 2011). Outliers were removed from the data sample.
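The three-standard-deviation rule can be sketched as a single-pass filter. Whether the authors used the sample or population standard deviation, and whether they iterated the filter, is not stated; this sketch assumes one pass with the sample standard deviation.

```python
from statistics import mean, stdev


def remove_outliers(times: list[float], k: float = 3.0) -> list[float]:
    """Drop values farther than k sample standard deviations from the mean.

    Single pass: the mean and SD are computed once, on the full sample.
    """
    m = mean(times)
    s = stdev(times)
    return [t for t in times if abs(t - m) <= k * s]


sample = [6.0] * 19 + [60.0]
print(remove_outliers(sample) == [6.0] * 19)  # True
```

With small samples a single extreme value inflates the SD, so the rule only removes it when there are enough observations (here 20).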

After data cleaning, the completion times of the date entries were checked for normality with the Shapiro-Wilk test. As they were not normally distributed (p < .05), the completion times were log-transformed. The calendars were compared by analyzing the mean values over the three dates entered by each participant. An alpha level of .05 was used for all statistical methods.
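The transformation and per-participant averaging can be sketched as follows. The paper states neither the log base nor whether times were averaged before or after the transform; this sketch assumes natural logs applied to each time first, which makes the back-transformed score a geometric mean. The normality check itself could be run with a library routine such as `scipy.stats.shapiro`, which is not shown here.

```python
import math
from statistics import mean


def participant_score(times_s: list[float]) -> float:
    """Mean of natural-log completion times over one participant's dates.

    Assumption: each time is log-transformed first, then averaged.
    """
    if any(t <= 0 for t in times_s):
        raise ValueError("completion times must be positive to take logs")
    return mean(math.log(t) for t in times_s)


# exp() of the score is the geometric mean of the raw times.
print(round(math.exp(participant_score([2.0, 8.0])), 6))  # 4.0
```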

5.1 Participants

After deleting invalid data and outliers, a total of 277 data records were retained for further analysis. Of these participants, 183 were male and 94 female, with ages ranging from 14 to 57 years (M = 23.25, SD = 0.26). Participants were invited to take part in the experiment via social media platforms or by e-mailing current university students.

5.2 Errors: The Wrong Dates Entered

To test for significant differences between the errors of the three calendar versions, a linear regression model was used. The results showed no significant differences between the data entry elements (p > .05). Nevertheless, the drop-down menu showed the best results (M = 1.28 %, SD = 0.24 %), while radio-buttons performed the worst (M = 3.16 %, SD = 2.06 %) (see Table 3).

Table 3: Average and standard deviation of errors made.

| Calendar | M (%) | SD (%) |
|---|---|---|
| Drop-down | 1.28 | 0.25 |
| Radio-buttons | 3.16 | 2.06 |
| Spinner | 2.49 | 0.63 |
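The paper does not spell out how the error percentages were aggregated. One plausible reading, assumed here and not confirmed by the authors, is the share of participants who entered a wrong date for each target date, summarized by the mean and SD across the three dates. The sketch compares entered dates with the three targets from the Procedure section.

```python
from datetime import date
from statistics import mean, stdev

# Target dates from the Procedure section.
TARGETS = [date(2022, 3, 5), date(1998, 5, 16), date(1964, 6, 20)]


def error_rates(entries: list[list[date]]) -> list[float]:
    """Percentage of participants entering a wrong date, per target date.

    entries[p][i] is the date participant p entered for TARGETS[i].
    """
    n = len(entries)
    return [
        100.0 * sum(1 for row in entries if row[i] != target) / n
        for i, target in enumerate(TARGETS)
    ]


def summarize(rates: list[float]) -> tuple[float, float]:
    """Mean and sample SD of the per-date error percentages."""
    return mean(rates), stdev(rates)


# Four hypothetical participants; the last one mistypes the day of the first date.
entries = [list(TARGETS)] * 3 + [[date(2022, 3, 6), TARGETS[1], TARGETS[2]]]
print(error_rates(entries))  # [25.0, 0.0, 0.0]
```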

5.3 Completion Times

Data on the completion times of the date entries did not follow a normal distribution (p < .05), so they were log-transformed. A Multiple Linear Regression (MLR) model was built:

$$
\text{CompletionTime} \sim \text{CalendarTypeBinaryValue} + \text{userAge} + \text{userGender} \tag{1}
$$

Three models were tested, each comparing two calendar versions. The results indicate significant differences between the completion times of all calendars (see Table 6). The best performance was shown by the drop-down menu (M = 6.31 s, SD = 1.76 s), followed by radio-buttons (M = 8.83 s, SD = 2.88 s). The spinner proved to be the slowest date entry element (M = 13.25 s, SD = 3.81 s), bearing in mind that it was tested with the settings described in the Procedure section (see Table 4).
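Equation (1) can be fitted by ordinary least squares, with the log-transformed completion time as the response. The paper does not say which software or variable coding was used, so this self-contained sketch is illustrative: it solves the normal equations in plain Python, and the helper `design_row` and the 0/1 coding of calendar and gender are assumptions.

```python
def ols(X: list[list[float]], y: list[float]) -> list[float]:
    """Ordinary least squares via the normal equations (X^T X) b = X^T y.

    X must contain a leading column of ones for the intercept. The system is
    solved by Gauss-Jordan elimination with partial pivoting.
    """
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(r[i] * r[j] for r in X) for j in range(p)]
        + [sum(r[i] * yi for r, yi in zip(X, y))]
        for i in range(p)
    ]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        A[col], A[pivot] = A[pivot], A[col]
        pv = A[col][col]
        A[col] = [v / pv for v in A[col]]
        for r in range(p):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][p] for i in range(p)]


def design_row(is_calendar_i: bool, age: float, is_female: bool) -> list[float]:
    """Intercept, binary calendar indicator, age and binary gender (coding assumed)."""
    return [1.0, float(is_calendar_i), float(age), float(is_female)]


# Usage: one row per participant per calendar in the compared pair, y = log(time).
# Residual degrees of freedom are len(rows) - 4; 277 participants x 2 calendars
# gives 554 - 4 = 550, consistent with the F(3, 550) reported in Table 6.
```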

Compared with the completion times estimated using GOMS, the times observed in the experiment were higher. However, as in the GOMS estimation, radio-buttons were faster than the spinner.
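The size of the gap between prediction and observation can be quantified from Table 2 and Table 4. Only radio-buttons and the spinner have both values, since the drop-down menu was not part of the GOMS comparison. The function name `discrepancy` is illustrative.

```python
GOMS_S = {"Radio-buttons": 6.75, "Spinner": 8.48}       # Table 2 estimates
OBSERVED_S = {"Radio-buttons": 8.83, "Spinner": 13.25}  # Table 4 means


def discrepancy(calendar: str) -> tuple[float, float]:
    """Return (observed - predicted in seconds, observed / predicted)."""
    predicted, observed = GOMS_S[calendar], OBSERVED_S[calendar]
    return observed - predicted, observed / predicted


for name in GOMS_S:
    diff, ratio = discrepancy(name)
    print(f"{name}: +{diff:.2f} s, x{ratio:.2f}")
# Radio-buttons: +2.08 s, x1.31
# Spinner: +4.77 s, x1.56
```

The spinner deviates more from its prediction than radio-buttons do, which is consistent with the later discussion of its sensitivity settings.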

Table 4: Statistics for calendars’ completion times.

| Calendar | M (s) | SD (s) |
|---|---|---|
| Drop-down menu | 6.31 | 1.76 |
| Radio-buttons | 8.83 | 2.88 |
| Spinner | 13.25 | 3.81 |

5.4 User Satisfaction Rating

The first statement users had to evaluate concerned the comfortability of the date entry elements. As shown in Table 7, significant differences were found between every pair of calendars. Table 5 shows the mean and standard deviation values for user satisfaction. The drop-down menu obtained the highest comfortability ratings (M = 4.25, SD = 0.98), whilst the spinner was the least preferred option (M = 2.34, SD = 1.54).

For the second statement, the drop-down menu also obtained the best ratings: participants perceived it as significantly faster and more efficient than the other versions (M = 4.24, SD = 0.91). The spinner was rated as significantly slower and the least efficient (M = 2.36, SD = 1.55), while radio-buttons received an intermediate evaluation (M = 3.59, SD = 1.48).

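Table 5: Statistics for user satisfaction.

| Calendar type | Comfortability M | Comfortability SD | Speed/Efficiency M | Speed/Efficiency SD |
|---|---|---|---|---|
| Drop-down menu | 4.25 | 0.98 | 4.24 | 0.91 |
| Radio-buttons | 3.67 | 1.38 | 3.59 | 1.48 |
| Spinner | 2.34 | 1.54 | 2.36 | 1.55 |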
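Each cell in Table 5 summarizes 5-point Likert responses by their mean and standard deviation, which treats the scale as interval data, as the paper does. A minimal sketch follows; whether the reported SDs are sample or population SDs is not stated, and the sample SD is assumed here. The responses in the usage line are made up for illustration.

```python
from statistics import mean, stdev


def likert_summary(responses: list[int]) -> tuple[float, float]:
    """Mean and sample SD of 5-point Likert responses (1 = Strongly disagree)."""
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    return mean(responses), stdev(responses)


m, s = likert_summary([5, 4, 4, 5, 3])  # hypothetical ratings
print(round(m, 2), round(s, 2))  # 4.2 0.84
```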

6 DISCUSSION

6.1 Discussion of the Findings

First, an overview of the results for each metric is given to put the performance of each calendar into perspective.

Regarding completion time, the best option was the drop-down menu and the worst, by a considerable margin, was the spinner, which took more than twice as long as the drop-down menu (a mean of 13.25 seconds versus 6.31 seconds), while radio-buttons were about 2.5 seconds slower than the drop-down menu (8.83 seconds).
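Both comparisons follow directly from the Table 4 means:

$$
\frac{13.25\ \text{s}}{6.31\ \text{s}} \approx 2.10, \qquad 8.83\ \text{s} - 6.31\ \text{s} = 2.52\ \text{s}
$$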

User satisfaction was another important measure in the analysis, and the ranking of the methods follows the same order as for completion time: the drop-down menu was the preferred option and the spinner the least preferred one, with radio-buttons in between, closer to the drop-down menu than to the spinner (this order holds for both comfortability and efficiency, as rated by the users).


As for the errors made, it is worth noting that, although there were no significant differences between the three calendars in this regard (see Section 5.2), the most error-prone method was radio-buttons and the least error-prone was the drop-down menu.

The conclusion we can draw from these results is that the drop-down menu is the fastest and most effective option. However, this result may be conditioned by several factors, such as the other two calendars being more novel to users, and their implementations, which can vary more than that of the drop-down menu; the optimal implementation for the best user experience remains to be researched. Many users had used drop-down menus before and were already familiar with them, whereas for the other calendars they had to gain some expertise, which they could do through the explanations provided on the webpage (see Section 4.2). One benefit shared by all the methods is that no formatting errors can be made, as this is implicit in the design of the calendars.

6.2 Limitations

The results suggest that the spinner should be avoided, given its very poor results, but it must be taken into account that many variables affect its usage, such as its sensitivity or speed. The configuration used in this experiment proved not to be very effective, but a more user-friendly one could give results closer to those predicted by GOMS (see Section 4.1), by taking better advantage of swiping gestures on mobile devices. The experiment consisted of filling in several dates with no other fields to complete, which is not a very realistic scenario, as there are usually other components around; the results could therefore change if the calendar were integrated into a more conventional environment, such as a registration form on a website. The effect of using the same dates for each calendar must be considered as well, since this could have affected the expectations of the users over the course of the experiment. As mentioned in the previous section, the drop-down menu is a very popular option and users were more familiar with it than with the other two alternatives; to deal with this, more dates could be used so that users reach the same level of training with each option.

6.3 Feedback

Several users provided feedback after doing the experiment, describing their experience and what they struggled with the most. Many users felt that the sensitivity of the spinner was too high, which suggests that in future research it should be lowered so that users can be faster and more accurate. Another user noted that the drop-down menu was more aesthetic than the radio-buttons, as it required much less space on the webpage. A common suggestion was to change the order of the years in the radio-buttons method and start from the current year instead of the earliest one, as many users had to scan the page for longer and scroll down to find the requested year.
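The suggested ordering is simple to generate. This sketch builds the year options newest first; the function name and the choice of earliest year are hypothetical, since the paper does not describe how its year list was generated.

```python
from datetime import date
from typing import Optional


def year_options(earliest: int, current: Optional[int] = None) -> list[int]:
    """Years for a radio-button year list, newest first, as users suggested."""
    if current is None:
        current = date.today().year
    if earliest > current:
        raise ValueError("earliest year must not be after the current year")
    return list(range(current, earliest - 1, -1))


print(year_options(1960, 2022)[:3])  # [2022, 2021, 2020]
```

Whether newest-first also helps for far-past dates such as 1964 is an open question, since those would then sit at the bottom of the list.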

6.4 Future Work

As for future research, several aspects could be tested further to clarify which type of calendar is most suitable for mobile devices. Many of the calendars presented were not included in the experiment, since the GOMS estimation was used as the criterion for deciding which ones to include; however, as this research has shown, the observed times differ from the predicted ones (see Section 4.1), so it remains to be seen how the excluded calendars would perform in another experiment. Another line of work would be to test different spinner configurations in order to find the most efficient one for users and see how it performs.

Table 6: MLR models’ statistics for completion times.

| Compared calendar I | Compared calendar II | Intercept coefficient | Calendar coefficient | t value | F statistic | p value |
|---|---|---|---|---|---|---|
| Drop-down menu | Radio-buttons | 9.08 | -0.32 | -12.22 | 50.01 > F(3, 550) | < .001 |
| Drop-down menu | Spinner | 9.42 | -0.74 | -33.04 | 365.06 > F(3, 550) | < .001 |
| Spinner | Radio-buttons | 9.44 | -0.42 | -16.41 | 89.94 > F(3, 550) | < .001 |
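Because the response was log-transformed, each calendar coefficient can be read as a multiplicative effect on completion time. Assuming natural logarithms (the paper does not state the base), exponentiating the coefficients gives the ratios below; their magnitudes are close to the ratios of the Table 4 means (6.31/8.83 ≈ 0.71, 6.31/13.25 ≈ 0.48, 8.83/13.25 ≈ 0.67), with the small differences attributable to the age and gender covariates and to the mean-of-logs versus log-of-means distinction.

```python
import math

# Calendar coefficients from Table 6 (log-transformed completion time).
CALENDAR_COEFFICIENTS = {
    ("Drop-down menu", "Radio-buttons"): -0.32,
    ("Drop-down menu", "Spinner"): -0.74,
    ("Spinner", "Radio-buttons"): -0.42,
}


def time_ratio(coefficient: float) -> float:
    """Multiplicative change in completion time implied by a natural-log coefficient."""
    return math.exp(coefficient)


for pair, b in CALENDAR_COEFFICIENTS.items():
    print(pair, round(time_ratio(b), 2))
# ('Drop-down menu', 'Radio-buttons') 0.73
# ('Drop-down menu', 'Spinner') 0.48
# ('Spinner', 'Radio-buttons') 0.66
```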


Table 7: MLR models’ statistics for user satisfaction.

| Statistic | Drop-down menu and Radio-buttons | Drop-down menu and Spinner | Spinner and Radio-buttons |
|---|---|---|---|
| Comfortability | | | |
| Intercept coefficient | 3.67 | 2.43 | 3.84 |
| Calendar coefficient | 0.57 | 1.9 | -1.33 |
| T value | 6.21 | 19.86 | -12.88 |
| F statistic | 13.55 > F(3, 550) | 131.54 > F(3, 550) | 55.52 > F(3, 550) |
| P value | < .001 | < .001 | < .001 |
| Speed / Efficiency | | | |
| Intercept coefficient | 3.56 | 2.32 | 3.69 |
| Calendar coefficient | 0.65 | 1.88 | -1.23 |
| T value | 7.02 | 19.85 | -11.67 |
| F statistic | 16.68 > F(3, 550) | 131.77 > F(3, 550) | 45.47 > F(3, 550) |
| P value | < .001 | < .001 | < .001 |

ACKNOWLEDGEMENTS

This work was funded by the Department of Science, Innovation and Universities (Spain) under the National Program for Research, Development and Innovation (Project RTI2018-099235-B-I00).

REFERENCES

Bargas-Avila, J. A., Brenzikofer, O., Roth, S. P., Tuch, A. N., Orsini, S., & Opwis, K. (2010). Simple but Crucial User Interfaces in the World Wide Web: Introducing 20 Guidelines for Usable Web Form Design. User Interfaces. InTech.

Bargas-Avila, J. A., Brenzikofer, O., Tuch, A. N., Roth, S. P., & Opwis, K. (2011). Working towards usable forms on the world wide web: Optimizing date entry input fields. Advances in Human-Computer Interaction, 2011.

Beaumont, A. (2002). Usable forms for the Web: includes fully usable code samples in HTML, Flash, JavaScript, ASP, PHP, and more: all code written for PC IE 4+, Netscape 4+, and Opera 5+, Mac IE 5+, Netscape 6 and Opera 5+. Glasshaus. Retrieved June 23, 2022, from https://link.springer.com/book/9781590591604

Brown, A., Jay, C., & Harper, S. (2010). Audio Access to Calendars. Proceedings of the 2010 International Cross Disciplinary Conference on Web Accessibility (W4A) - W4A ’10. New York, New York, USA: ACM Press. Retrieved June 2, 2022, from http://www.w3.org/WAI/intro/aria.php

Card, S., Moran, T., & Newell, A. (1983). The Psychology of Human-Computer Interaction.

Christian, L. M., Dillman, D. A., & Smyth, J. D. (2007). Helping respondents get it right the first time: The influence of words, symbols, and graphics in Web surveys. Public Opinion Quarterly, 71(1), 113–125.

Gray, W. D., John, B. E., & Atwood, M. E. (1993). Project Ernestine: Validating a GOMS Analysis for Predicting and Explaining Real-World Task Performance. Human–Computer Interaction, 8(3), 237–309.

Healey, B. (2007). Drop Downs and Scroll Mice: The Effect of Response Option Format and Input Mechanism Employed on Data Quality in Web Surveys. Social Science Computer Review, 25, 111–128. Retrieved June 17, 2022, from http://ssc.sagepub.com, http://online.sagepub.com

Jensen, E. T., Hansen, M., Eika, E., & Sandnes, F. E. (2020). Country Selection on Web Forms: A Comparison of Dropdown Menus, Radio Buttons and Text Field with Autocomplete. Proceedings of the 2020 14th International Conference on Ubiquitous Information Management and Communication, IMCOM 2020. Institute of Electrical and Electronics Engineers Inc.

John, B. E., & Kieras, D. E. (1996). The GOMS family of user interface analysis techniques. ACM Transactions on Computer-Human Interaction (TOCHI), 3(4), 320–351. New York, NY, USA: ACM. Retrieved June 30, 2022, from https://dl.acm.org/doi/abs/10.1145/235833.236054

Label Placement in Forms :: UXmatters. (n.d.). Retrieved June 23, 2022, from https://www.uxmatters.com/mt/archives/2006/07/label-placement-in-forms.php

Linderman, M., Fried, J., & 37signals (Firm). (2004). Defensive design for the Web: how to improve error messages, help, forms, and other crisis points, 246. New Riders.

Martin, L. (2013). Estimating Time Requirements for Web Input Elements. Proceedings of the 2nd International Workshop on Web Intelligence (WEBI-2013), 13–21.

Martin, L. (2014). A RESTful web service to estimating time requirements for web forms. International Journal of Knowledge and Web Intelligence, 5(1), 62. Inderscience Publishers.

Mobile vs. Desktop Traffic Market Share [May 2022] | Similarweb. (n.d.). Retrieved June 23, 2022, from https://www.similarweb.com/platforms/

Most Visited Websites - Top Websites Ranking for May 2022 | Similarweb. (n.d.). Retrieved June 23, 2022, from https://www.similarweb.com/top-websites/

Seckler, M., Heinz, S., Bargas-Avila, J. A., Opwis, K., & Tuch, A. N. (2014). Designing usable web forms: Empirical evaluation of web form improvement guidelines. Conference on Human Factors in Computing Systems - Proceedings, 1275–1284. Association for Computing Machinery.

Setthawong, P., & Setthawong, R. (2019). Updated Goals, Operators, Methods, and Selection Rules (GOMS) with touch screen operations for quantitative analysis of user interfaces. International Journal on Advanced Science, Engineering and Information Technology, 9(1), 258–265. Insight Society.

Türkcan, A. K., & Onay Durdu, P. (2018). Entry and selection methods for specifying dates in mobile context. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 10903 LNCS, 92–100. Springer Verlag.

Wroblewski, L. (2008). Web Form Design: Filling in the Blanks. (M. Justak, Ed.). New York: Rosenfeld Media.
