Meaningful Guidance of Unmanned Aerial Vehicles in Dynamic Environments

Marius Dudek (a) and Axel Schulte (b)

Institute of Flight Systems, Universität der Bundeswehr München, Werner-Heisenberg-Weg 39, Neubiberg, Germany

Keywords: UAV, Guidance, Manned-Unmanned Teaming, Feedback, Behavior Generation.

Abstract: In this contribution, we discuss requirements for guiding UAVs from a fighter-jet cockpit. First, we introduce the definition of tasks as a means of common understanding between human and automation and provide a taxonomy to define these tasks. Based on this definition, we identify the information that is necessary for task delegation and define requirements for UAV processing methods and UAV feedback. Required information for task delegation includes information about the task itself as well as about how the task is to be distributed among platforms. UAV processing methods should allow the integration of an explanation component to provide adequate feedback to the pilot. With respect to feedback, we analyse potential measures that can be integrated into such an explanation component. Future research should address the implementation and evaluation of task delegation methods and the integration and evaluation of feedback in UAV agents.

1 INTRODUCTION

In manned-unmanned teaming (MUM-T), human pilots and unmanned aerial vehicles work together to achieve common military objectives. It is still an open question what exactly the interaction between pilot and unmanned systems will look like in an aircraft cockpit. In most approaches, the pilot delegates tasks to the unmanned platforms and is responsible for monitoring the derived actions (Miller et al., 2005; Uhrmann and Schulte, 2012; Doherty, Heintz and Kvarnström, 2013).

In modern air combat, the tactical situation can change within minutes or even seconds, requiring pilots to adjust their plan. When pilots are responsible for guiding multiple unmanned aircraft in addition to their own aircraft, the time pressure for plan corrections will be immense. This pressure will further intensify when technological advances shorten decision-making times (e.g. by decision support systems and automated task execution).

To accelerate decision-making, more authority may be given to automation, or capable data-driven methods may be used. However, it is not clear whether the pilot remains in meaningful control when authority is transferred to automation and when decision-making is moved into uninterpretable algorithms (Lepri, Staiano, Sangokoya, Letouzé, & Oliver, 2017; Parasuraman, Sheridan, & Wickens, 2000).

(a) https://orcid.org/0000-0002-1562-3409

(b) https://orcid.org/0000-0001-9445-6911

Therefore, in this article, we discuss requirements to enable meaningful control of unmanned aerial vehicles in a highly dynamic military environment. We discuss the formulation of tasks as a means of common understanding between human and automation, as well as requirements for task delegation, UAV processing methods and UAV feedback.

2 TASK-BASED GUIDANCE

The distribution of roles and interaction between pilot

and UAVs in manned-unmanned teaming can be

described with the design patterns proposed by

(Schulte, Donath, & Lange, 2016). To delegate

UAVs, we need a common understanding of what

should be done by the automation (Miller &

Parasuraman, 2007). For this, we use a design pattern

called task-based guidance, in which the pilot assigns

high-level tasks to UAV agents aboard the unmanned

systems, which, in turn, are responsible for task

10

Dudek, M. and Schulte, A.

Meaningful Guidance of Unmanned Aerial Vehicles in Dynamic Environments.

DOI: 10.5220/0011946500003622

In Proceedings of the 1st International Conference on Cognitive Aircraft Systems (ICCAS 2022), pages 10-14

ISBN: 978-989-758-657-6

Copyright

c

2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

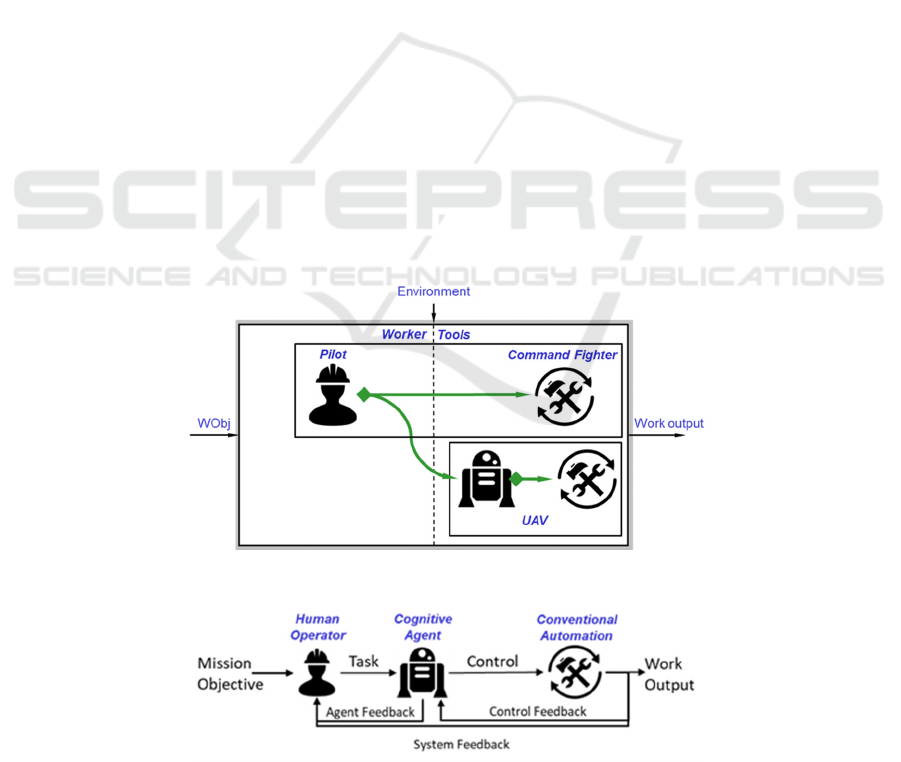

comprehension, decomposition and execution. An

exemplary work system for task-based guidance of a

single UAVs is shown in Figure 1.

The interaction between human pilot and UAV agent in the above work system can also be represented as an information flow (Figure 2).

The human pilot receives the mission objectives and assigns tasks to the UAV agents. These agents process the assigned tasks by controlling the conventional automation aboard the aircraft (e.g. Flight Management System) and providing feedback to the human operator. In this contribution, we focus on the interactions between pilot and UAV agents, i.e. task definition, task delegation, behavior generation and agent feedback.

2.1 Task Definition

As a first step, we define military UAV tasks by a taxonomy adapted from Lindner, Schwerd, & Schulte (2019). In this taxonomy, tasks consist of the following components:

• Action: Actions represent military subgoals, such as the reconnaissance of a building.

• Target: The target to perform actions on. The variability of different object types can be abstracted to a few spatial regions according to Saget, Legras, & Coppin (2009). We represent each object with a so-called Feature in one of the four geometric types Point, Moving Point, Line or Area.

• Success criteria: Criteria that define whether the task failed or succeeded. Possible success criteria depend on action and target.

• Constraints: Conditions or limiting factors such as resources or time requirements. The type and number of possible constraints depend on the respective task action (e.g., depression angle during reconnaissance).
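The four task components can be sketched as a small data structure. This is only an illustration under stated assumptions: the class and field names (`Feature`, `Task`, `coordinates`, `depression_angle_deg`) and the example values are hypothetical and are not taken from the taxonomy itself; only the four components and the four geometric types come from the text above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class GeometryType(Enum):
    """The four geometric Feature types named in the taxonomy."""
    POINT = "Point"
    MOVING_POINT = "Moving Point"
    LINE = "Line"
    AREA = "Area"


@dataclass(frozen=True)
class Feature:
    """Target object reduced to a spatial region (field names are hypothetical)."""
    name: str
    geometry: GeometryType
    coordinates: Tuple[Tuple[float, float], ...]  # assumed (x, y) vertices


@dataclass
class Task:
    """One task: action, target, success criteria and constraints."""
    action: str
    target: Feature
    # Success criteria and constraints default to empty collections.
    success_criteria: List[str] = field(default_factory=list)
    constraints: Dict[str, float] = field(default_factory=dict)


# Example: reconnaissance of a building with a depression-angle constraint.
building = Feature("building", GeometryType.POINT, ((10.0, 20.0),))
recon_task = Task(
    action="reconnaissance",
    target=building,
    success_criteria=["target imaged"],
    constraints={"depression_angle_deg": 30.0},
)
```

Keeping success criteria and constraints as optional fields with empty defaults mirrors the idea that action and target form the core of a task, while the remaining components depend on the chosen action and target.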

2.2 Task Delegation

A delegation consists of Task Specification and Task Assignment. Task Specification means the creation of a task with the components described before, whereas Task Assignment means the process of distributing this task to an eligible platform. Considerations for this distribution process may be the spatial distribution of platforms as well as previously assigned tasks and resource availability for each platform. The requirements that these two elements impose on the delegation interaction are described below.

With regard to Task Specification, the interaction must at least cover the specification of action and target. The definition of success criteria may be obligatory for some tasks, while others have criteria that can be inferred from the action. The definition of constraints is optional, because constraints only limit the possibilities of how the tasks can be executed (e.g. time constraints) or because they specify values that could otherwise also be chosen by the system (e.g. depression angle).

Figure 1: Work System for Task-based Guidance of a single UAV.

Figure 2: Information Flow in Task-Based Guidance.

The interaction also has to support a platform selection process during Task Assignment. This is because after the assignment, the generated task must be executed by a specific platform at a specific time. There are different options for designing this platform selection process, such as platform-based approaches (Heilemann & Schulte, 2020) or capability-based approaches (Besada et al., 2019), all of which may or may not be assisted by automation. Regardless of the specific implementation, these delegation options place additional demands on the user interaction. In a platform-based approach, for example, the pilot has to name the platform, whereas in a capability-based approach the pilot has to specify a timing for execution.
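The difference between the two selection styles can be illustrated with a short sketch. It is not the method of either cited approach: the `Platform` fields, the function names and the nearest-eligible-platform rule are assumptions chosen only to show how spatial distribution, previously assigned tasks and capabilities could enter the selection.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional, Set, Tuple


@dataclass
class Platform:
    """Hypothetical platform record used only for this illustration."""
    name: str
    capabilities: Set[str]
    position: Tuple[float, float]
    busy_until: float = 0.0  # time at which previously assigned tasks end


def assign_platform_based(platforms: List[Platform], platform_name: str) -> Platform:
    """Platform-based: the pilot names the platform directly."""
    for platform in platforms:
        if platform.name == platform_name:
            return platform
    raise ValueError(f"unknown platform: {platform_name}")


def assign_capability_based(
    platforms: List[Platform],
    required_capability: str,
    target_position: Tuple[float, float],
    start_time: float,
) -> Optional[Platform]:
    """Capability-based: the pilot gives a timing, the system picks a platform.

    Assumed rule: among platforms that have the capability and are free at
    start_time, choose the one closest to the target.
    """
    eligible = [
        p for p in platforms
        if required_capability in p.capabilities and p.busy_until <= start_time
    ]
    if not eligible:
        return None
    return min(
        eligible,
        key=lambda p: hypot(p.position[0] - target_position[0],
                            p.position[1] - target_position[1]),
    )


fleet = [
    Platform("UAV1", {"recon"}, (0.0, 0.0)),
    Platform("UAV2", {"recon", "strike"}, (10.0, 0.0), busy_until=100.0),
    Platform("UAV3", {"recon"}, (50.0, 0.0)),
]
early = assign_capability_based(fleet, "recon", (12.0, 0.0), start_time=50.0)
late = assign_capability_based(fleet, "recon", (12.0, 0.0), start_time=120.0)
```

In this example, `early` resolves to UAV1 because the closer UAV2 is still occupied at the requested time, while `late` resolves to UAV2. The platform-based function needs only a name, which reflects the different input the pilot has to provide in each style.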

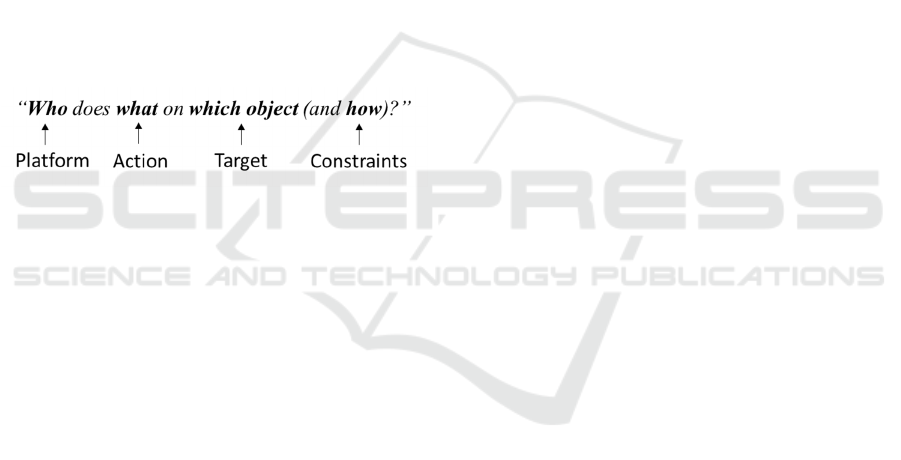

In summary, the delegation interaction must contain all elements to answer the question (Figure 3):

Figure 3: Elements of a delegation interaction.

There are different interaction design approaches to answer this question for the guidance of UAVs from inside a fighter cockpit. Some are based on touchscreen interaction, others use voice interaction or cursor control devices. The individual benefits of these different interactions are still being researched (Calhoun, Ruff, Behymer, & Rothwell, 2017; Dudek & Schulte, 2022; Levulis, DeLucia, & Kim, 2018).

2.3 Behavior Generation

The cognitive agents aboard the UAVs are responsible for decomposing and executing the assigned tasks. For this, cognitive agents have to consider the tactical situation and select the most appropriate action among a set of possible actions.

To do so, various processing methods exist in the UAV control domain that depend on knowledge representation, machine learning or optimization (Emel’yanov, Makarov, Panov, & Yakovlev, 2016). While each of these approaches has individual advantages when used for UAV behavior generation, one capability is particularly necessary for meaningful guidance of unmanned aerial vehicles: decision-making transparency.

To be able to provide reasonable feedback to the human pilot, the algorithm used for high-level decision-making must allow embedding an explanation component that creates action, goal and/or status feedback throughout the behavior generation. Model-based approaches are well suited for this requirement, because feedback can easily be integrated into the control flow.
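How feedback can be embedded in the control flow of a model-based method may be illustrated with a deliberately small behavior tree. This is a sketch under simplifying assumptions, not a description of any fielded agent: the node classes, the message strings and the example actions are hypothetical, and the usual "running" status of behavior trees is omitted to keep the example short.

```python
from enum import Enum
from typing import Callable, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


class Action:
    """Leaf node: runs a callable and reports what it is doing."""

    def __init__(self, name: str, run: Callable[[], bool]) -> None:
        self.name = name
        self.run = run

    def tick(self, feedback: List[str]) -> Status:
        feedback.append(f"executing: {self.name}")  # action feedback
        if self.run():
            return Status.SUCCESS
        feedback.append(f"failed: {self.name}")  # status feedback
        return Status.FAILURE


class Sequence:
    """Composite node: ticks children in order, stops at the first failure."""

    def __init__(self, name: str, children: List[Action]) -> None:
        self.name = name
        self.children = children

    def tick(self, feedback: List[str]) -> Status:
        for child in self.children:
            if child.tick(feedback) is Status.FAILURE:
                feedback.append(f"aborted: {self.name}")
                return Status.FAILURE
        feedback.append(f"completed: {self.name}")
        return Status.SUCCESS


# Hypothetical decomposition of a reconnaissance task; the last action fails.
recon = Sequence("reconnaissance", [
    Action("fly to target", lambda: True),
    Action("orbit target", lambda: True),
    Action("record imagery", lambda: False),
])
messages: List[str] = []
result = recon.tick(messages)
```

Because every node writes to the same feedback list while it is ticked, the explanation is produced as a by-product of execution rather than reconstructed afterwards, which is the property that makes model-based approaches convenient for this requirement.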

2.4 Feedback

One of the most important characteristics of a cognitive agent is that it provides appropriate feedback to the pilot. Chen, Barnes, Selkowitz, & Stowers (2016) showed that agent decision-making transparency can benefit operator performance and support appropriate levels of trust. However, UAV agent feedback is not defined by transparency alone. Instead, agent feedback can be categorized into three modes:

1. Transparency: Measures that attempt to disclose agent decisions to the human pilot fall into this category. Feedback in this category can be classified by the Situation Awareness-based Agent Transparency (SAT) levels.

2. Assistance: Assistance offers troubleshooting steps based on a faulty condition, whether caused by a change in the environment or by incorrect pilot inputs.

3. Interaction: Pilot interventions at a lower level than the definition of tasks.
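The three modes listed above can be sketched as a small message type. The names `FeedbackMode` and `FeedbackMessage` are hypothetical, and the rule that only transparency feedback carries an SAT level (1 to 3) is an assumption derived from the description of the transparency mode, not a specification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FeedbackMode(Enum):
    TRANSPARENCY = "Transparency"
    ASSISTANCE = "Assistance"
    INTERACTION = "Interaction"


@dataclass(frozen=True)
class FeedbackMessage:
    """One feedback item from a UAV agent to the pilot (illustrative only)."""
    mode: FeedbackMode
    text: str
    sat_level: Optional[int] = None  # assumed: only set for transparency

    def __post_init__(self) -> None:
        if self.sat_level is None:
            return
        if self.mode is not FeedbackMode.TRANSPARENCY:
            raise ValueError("SAT levels apply to transparency feedback only")
        if self.sat_level not in (1, 2, 3):
            raise ValueError("SAT level must be 1, 2 or 3")


current_action = FeedbackMessage(
    FeedbackMode.TRANSPARENCY, "UAV1: orbiting target", sat_level=1
)
plausibility = FeedbackMessage(
    FeedbackMode.ASSISTANCE, "Requested time window cannot be met"
)
```

Tagging each message with its mode would allow a display or voice interface to treat the modes differently, for example by presenting assistance messages more prominently than routine transparency updates.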

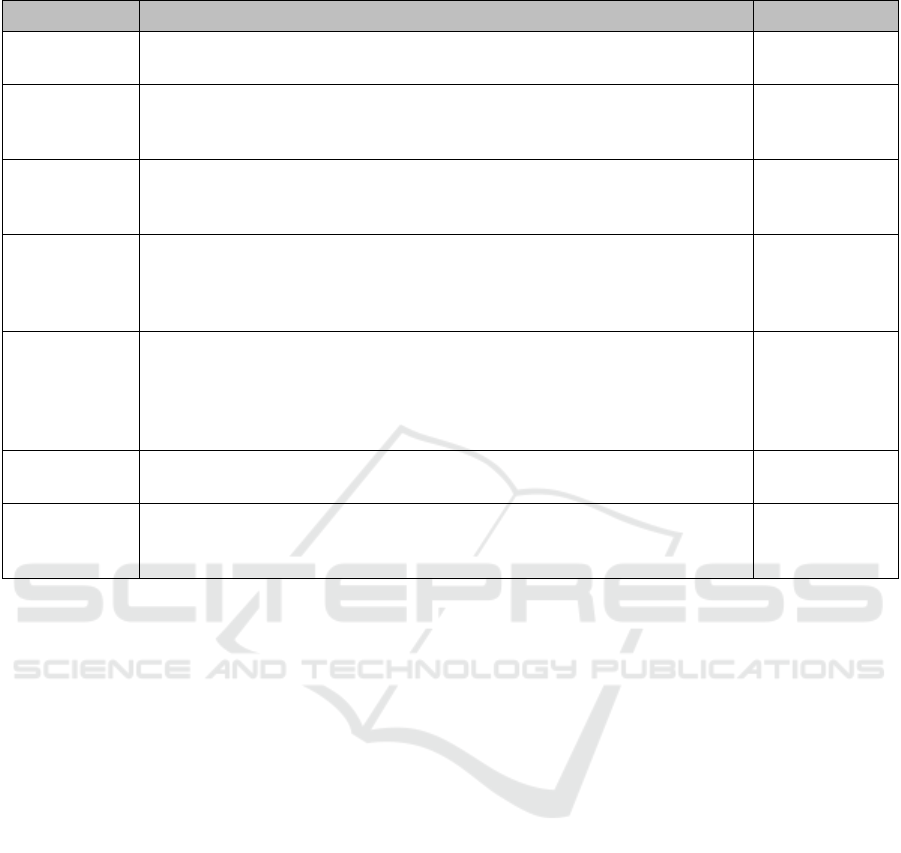

We analysed the delegation process of task-based guidance to identify potential feedback measures in these categories (Table 1).

Table 1: Potential Feedback Measures in Task-based Guidance.

| Timing | Description | Mode |
| --- | --- | --- |
| Task specification | The task created by the pilot is checked for plausibility, offering alternatives for infeasible task parameterization. | Assistance |
| Task assignment | Feedback can be given for tasks that are not in accordance with the mission objectives or for tasks that violate constraints with respect to other tasks. | Assistance |
| Before task execution | A description of the desired action chain is appropriate at this point to externalize the UAV behavior model and convey a common understanding of the assigned task (SAT level 1/2). | Transparency |
| During task execution | Displaying the current action of each UAV can increase situation awareness during task execution (SAT level 1). For higher SAT levels, reasons for action selection can be displayed and projections on action changes can be made. | Transparency |
| During task execution | For tasks covering a wide scope of actions, involving the user in the choice of action could be beneficial to situation awareness and performance because the human pilot is not only involved in passive monitoring but also contributes to the task, which could increase vigilance (Parasuraman, 1987). | Interaction |
| During task execution | The pilot can be informed when the prediction of whether goals can be achieved changes (SAT level 3). | Transparency |
| After task execution | After a task, the most important feedback is whether the task was completed successfully or whether it failed. Furthermore, providing an overview of resource usage can be beneficial. | Transparency |

3 GUIDANCE APPROACHES

After defining the requirements for meaningful guidance of UAVs, we present our research towards fulfilling these requirements. We implemented tasking interactions using voice and touch input modalities and investigated the effects of these modalities on mission performance and modality preferences. We plan to further investigate the observed effects and implement multimodal tasking interactions. For the behavior generation, we used Behavior Trees, in which we also integrated a feedback creation component, which we use for action feedback and assistance generation. Regarding feedback, we plan to define a taxonomy for the different types of feedback and, as a subsequent step, to map feedback to different modalities. We also want to investigate the effects of different types of feedback on mission performance and situation awareness.

REFERENCES

Besada, J. A., Bernardos, A. M., Bergesio, L., Vaquero, D., Campana, I., & Casar, J. R. (2019). Drones-as-a-service: A management architecture to provide mission planning, resource brokerage and operation support for fleets of drones. In IEEE International Conference on Pervasive Computing and Communications Workshops (pp. 931–936). IEEE. https://doi.org/10.1109/PERCOMW.2019.8730838

Calhoun, G. L., Ruff, H. A., Behymer, K. J., & Rothwell, C. D. (2017). Evaluation of Interface Modality for Control of Multiple Unmanned Vehicles. In D. Harris (Ed.), Engineering Psychology and Cognitive Ergonomics: Cognition and Design (pp. 15–34). Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-58475-1_2

Chen, J. Y., Barnes, M. J., Selkowitz, A. R., & Stowers, K. (2016). Effects of Agent Transparency on human-autonomy teaming effectiveness. In IEEE International Conference on Systems, Man, and Cybernetics (SMC) (pp. 1838–1843). IEEE. https://doi.org/10.1109/SMC.2016.7844505

Dudek, M., & Schulte, A. (2022). Effects of Tasking Modalities in Manned-Unmanned Teaming Missions. In AIAA SCITECH 2022 Forum. Reston, Virginia: American Institute of Aeronautics and Astronautics. https://doi.org/10.2514/6.2022-2478

Emel’yanov, S., Makarov, D., Panov, A. I., & Yakovlev, K. (2016). Multilayer cognitive architecture for UAV control. Cognitive Systems Research, 39, 58–72. https://doi.org/10.1016/j.cogsys.2015.12.008

Heilemann, F., & Schulte, A. (2020). Time Line Based Tasking Concept for MUM-T Mission Planning with Multiple Delegation Levels. In T. Z. Ahram, W. Karwowski, A. Vergnano, F. Leali, & R. Taïar (Eds.), Advances in Intelligent Systems and Computing: Vol. 1131, Intelligent Human Systems Integration 2020 (1st ed., pp. 1014–1020). Cham: Springer International Publishing.

Lepri, B., Staiano, J., Sangokoya, D., Letouzé, E., & Oliver, N. (2017). The Tyranny of Data? The Bright and Dark Sides of Data-Driven Decision-Making for Social Good. In T. Cerquitelli, D. Quercia, & F. Pasquale (Eds.), Studies in Big Data: Vol. 32. Transparent Data Mining for Big and Small Data (pp. 3–24). Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-54024-5_1

Levulis, S. J., DeLucia, P. R., & Kim, S. Y. (2018). Effects of Touch, Voice, and Multimodal Input, and Task Load on Multiple-UAV Monitoring Performance During Simulated Manned-Unmanned Teaming in a Military Helicopter. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 60(8), 1117–1129. https://doi.org/10.1177/0018720818788995

Lindner, S., Schwerd, S., & Schulte, A. (2019). Defining Generic Tasks to Guide UAVs in a MUM-T Aerial Combat Environment. In W. Karwowski & T. Ahram (Eds.), Intelligent Human Systems Integration 2019 (pp. 777–782). Springer Nature Switzerland.

Miller, C. A., & Parasuraman, R. (2007). Designing for flexible interaction between humans and automation: Delegation interfaces for supervisory control. Human Factors, 49(1), 57–75. https://doi.org/10.1518/001872007779598037

Parasuraman, R. (1987). Human-Computer Monitoring. Human Factors, 29(6), 695–706. https://doi.org/10.1177/001872088702900609

Parasuraman, R., Sheridan, T. B., & Wickens, C. D. (2000). A model for types and levels of human interaction with automation. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans, 30(3), 286–297. https://doi.org/10.1109/3468.844354

Saget, S., Legras, F., & Coppin, G. (2009). Cooperative Ground Operator Interface for the Next Generation of UVS. In AIAA (American Institute of Aeronautics and Astronautics) Infotech@Aerospace, 6 - 9 April, Seattle, WA, USA. Retrieved from https://www.researchgate.net/profile/francois-legras/publication/234079150_cooperative_ground_operator_interface_for_the_next_generation_of_uvs

Schulte, A., Donath, D., & Lange, D. S. (2016). Design Patterns for Human-Cognitive Agent Teaming. In D. Harris (Ed.), Engineering Psychology and Cognitive Ergonomics (pp. 231–243). Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-40030-3_24
