Reducing the Gap Between Designers and Users, Why Are Aviation Practitioners Here Again?

T. Laursen*, A. J. Smoker*a, M. Baumgartner*, S. Malakis* and N. Berzina*

International Federation of Air Traffic Controllers Associations, Switzerland

Keywords: Cognitive Systems Engineering, Joint Cognitive Systems, Macro-Cognitive Work, Human-System Integration, System Design, Complex Socio-Technical Systems.

Abstract: Designs of systems that integrate human and technical systems need to support the nature of ‘work’, the purposeful activity of the work system. Practitioners, here air traffic controllers, explore the models of ‘work’ that designers and air traffic controllers have, and conclude that designs for human-system integration in complex socio-technical systems need to embrace practitioner needs in order to support the adaptation necessary to sustain production and operations.

1 INTRODUCTION

Our approach to designs for human-system

integration resulted in designs with reduced margins

to manage and work with uncertainty and surprise

within the work systems. This paper argues that technological designs often underperform relative to their promised benefits. The principal reason is that designs have been based on a strategy in which practitioners (e.g., ATCOs and pilots) are expected to take over in abnormal conditions: the so-called ‘left-over’ design strategy (Inagaki, 2014, p. 235). Inagaki also argues, citing Rasmussen & Goodstein, that there is a need to retain the human in the system to ‘complete the design, so as to adapt to the situations that designers never anticipated’ (Inagaki, 2014, p. 235). We argue that this philosophy of design needs to change. As Boy argues: “We cannot think of engineering a design without considering the people and the organisations that go with it” (Boy, 2020).

The operating environments of interest here, complex

macro-cognitive work designs, are what Boy refers to

as socio-cognitive systems (Boy, 2020) and are

confronted with the challenge of digitisation and

integration of artificial intelligence.

a https://orcid.org/0000-0003-4443-329X

2 UNCERTAINTY AND SURPRISES WILL ALWAYS BE AN ELEMENT OF COMPLEX SYSTEMS

Today’s aviation system consists of many different

actors and agents that affect the ability to respond to

uncertainty and surprises. There is a political level, an

organisational level, a social dimension, training of

practitioners, and numerous others. Knowing this, the

only model of a human system is the system itself.

We assume that there is a basic shared model of

operation such as common ground in joint activity

(Klein et al, 2005) between different actors. The basic

model of operation consists of two interdependent

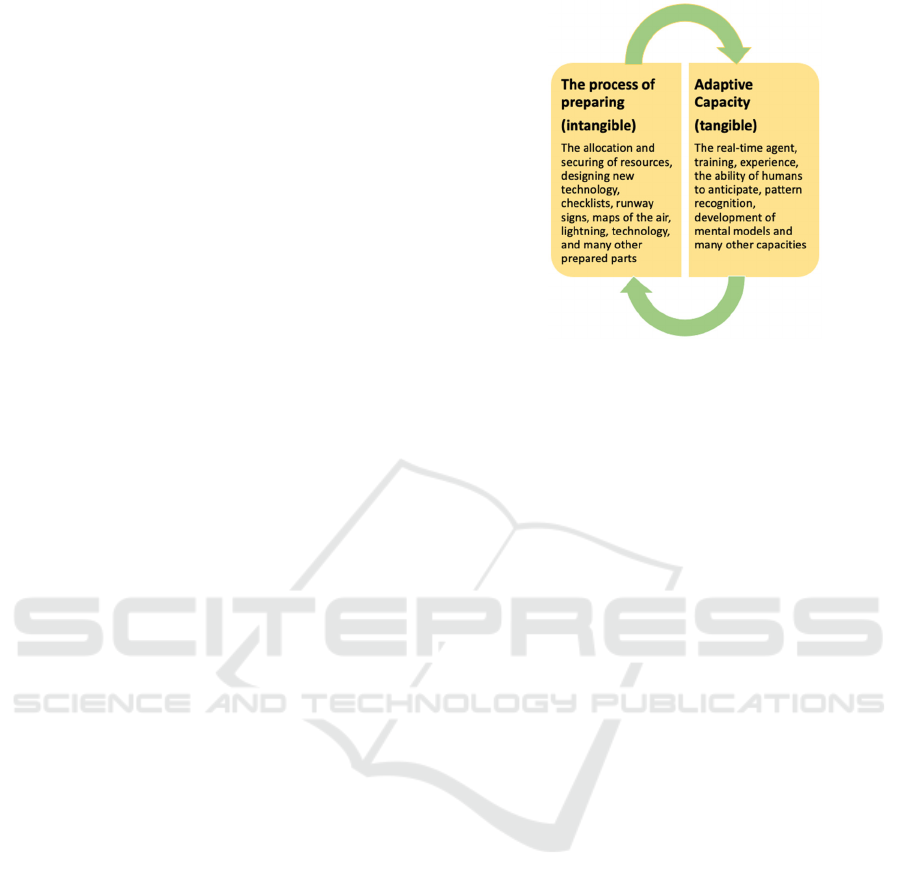

processes. One is the process of preparing the other is

the constant real-time adaptive capacity - that is the

capacity to adapt to situational and fundamental

surprises (Eisenberg et al 2019) and performance

variability whilst sustaining

production and system

goals, which practitioners deliver principally.

The process of preparing entails procedures,

checklists, runway signs, maps of the air, lighting,

technology, the allocation and securing of resources,

designing new technology and many other activities.

Organisational adaptive capacity is developed

through training, experience, the ability of humans to

anticipate, pattern recognition, mental models, the

ability to respond in real-time and many more skills needed to respond to changes, uncertainty and surprises that we know will occur.

Laursen, T., Smoker, A., Baumgartner, M., Malakis, S. and Berzina, N.
Reducing the Gap Between Designers and Users, Why Are Aviation Practitioners Here Again?
DOI: 10.5220/0011959000003622
In Proceedings of the 1st International Conference on Cognitive Aircraft Systems (ICCAS 2022), pages 65-68
ISBN: 978-989-758-657-6
Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

Designing technology to handle uncertainty and

surprises requires that the designers of technology do

so with this characteristic of ‘work’ in mind. To do this, designers need a complete understanding of the uncertainty and surprises that will emerge within the aviation system. However, this requires perfect knowledge, and perfect knowledge is never available. For example, air traffic controllers (ATCOs) quite often improvise in situ to meet the challenges of traffic imposed by novel events, unfortunate actions and shortcomings of the work system. In the ATM system, balancing efficiency and thoroughness involves making improvisations and departing from existing procedures under conditions of time pressure, uncertainty, and high workload. The rapid expansion of information technology has increased the amount of information presented to ATCOs without any assistance in making sense of the current situation or anticipating future trends. Quite frequently

ATCOs are dealing with a complex and dynamic

environment that requires them to attend to multiple

events, anticipate aircraft conflicts and comprehend

or make sense of evolving scenarios.

Experience has shown that staying in control

when exposed to surprises is the main challenge in

today’s very safe aviation system. If uncertainty and surprises could be eliminated, real-time control of the system by the human could be removed. Today’s rare accidents are characterised by complexity and surprise rather than by broken parts or components. The latest prominent example is the 737 MAX accidents (Nicas et al., 2019). Boeing management decided that the designers of the technology could foresee all possible uncertainty and decided to keep the human practitioners out of the loop. It is unrealistic to assume that uncertainty and surprise can be eliminated. This leads to a system requirement

for designs to have the human actively involved in the

control functions of the system.

3 TWO DIFFERENT MENTAL MODELS

Historically, the aim in designing complex technical systems has been to replace the human practitioner, or to limit the practitioner’s authority to act, in the real-time operational control and management of the system’s activities.

Figure 1: The basic model of operation.

Another design approach has been to partially remove

the human practitioner and create a strict task-sharing

environment in which automation deals with routine

tasks and events, while the human is exclusively

responsible for rare high complexity situations. In

essence, these system activities at the micro level are

the work i.e., the purposeful activity of the real-time

system. Thus, this perspective of work reduces the

purposeful activity as it reduces the involvement of

the human practitioner. In particular it reduces the

ability to respond to uncertainty and surprise.

This approach is driven by the idea that it is

possible to substitute the human practitioner with

technology that includes prepared responses to

uncertainty and surprises. Lisanne Bainbridge describes this approach in her 1983 paper (Bainbridge, 1983): the designer’s view of the human practitioner may be that the practitioner is unreliable and inefficient, so should be eliminated from the system.

An alternative approach is where systems are designed to support the management of, and adaptation to, uncertainty and surprises through collaboration and co-allocation between technology and the human practitioner (Bradshaw et al., 2011). This

approach has been called the joint cognitive approach

(JCA) (Hollnagel and Woods, 2005) and is based on

the notion that the human practitioner stays in control

and that we design for the human practitioner to know

what the technology is doing, a design that

emphasises common ground.

Klein extends and amplifies this perspective further in the two views in the table below (Klein, 2022), which represent designers’ and end users’ perspectives:


Table 1: Differing design requirements of system designers and system end users (Klein, 2022).

| | Capabilities | Limitations |
|---|---|---|
| The designer’s view | How the system works: by its parts, connections, causal relationships, process and control logic. | How the system fails: Common breakdowns and limitations (e.g., the limitations of the human). |
| The user’s view/JCA | How to make the system work: Detecting anomalies, performing workarounds and adaptations. | How the users get confused: Complexity and false interpretations. |

Taking the designer’s view, there are some caveats that we have to be aware of. Again, Bainbridge describes them in this way:

• The first problem is that designer assumptions can be a major source of operating problems.

• The second problem is that the designer who tries to eliminate the practitioner still leaves the practitioner to do the tasks which the designer cannot think how to automate (the left-over functions).

• An additional problem is that the most successful automated systems, with rare need for manual intervention, may need the greatest investment in human practitioner training.

The joint cognitive and the human-system integration approaches (Hollnagel and Woods, 2005; Boy, 2020) are extant philosophies for collaboration between technology and the human which retain human control in real-time operation.

4 DESIGNS FOR COLLABORATION

How do we meaningfully bring technology and social

actors – the designer and the user - together to match

a complex world with its inherent complex adaptive

solutions that are playing out in real–time?

The challenge becomes, in a complex world

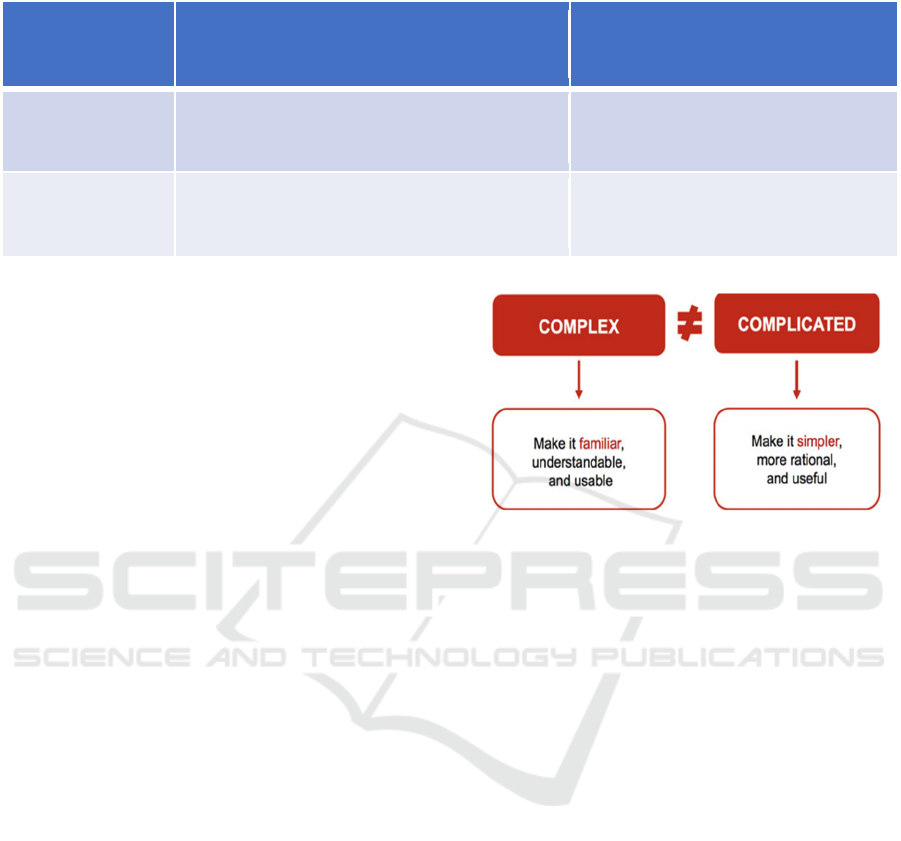

compared to a complicated word, mental how do we

reconcile the different mental models of the different

actors to create designs that enhance adaptive

capacity?

The dualism of the two different mental models

becomes more complicated when considering design

and the engineering of the design, for complex socio-

technical systems.

Figure 2: The dualism of designer and user mental models.

Design for complex socio-technical systems can be seen as an exercise in conflicting value systems (Baxter & Sommerville, 2011, citing Land 2009). For example:

• Design values with a fundamental

commitment to humanistic principles: the

designer aiming to improve the quality of

working life and job satisfaction of those

operating in and with the system.

• Managerial values: the principles of socio-

technical design are focused on achieving

the company or organisational objectives

especially economic ones.

These two sets of values conflict, and we argue

that this tension can contribute to a decrement in

system adaptive capacity as well as adding costs to

the system's effectiveness and its ability to achieve

system production, goals and objectives.

One of the driving arguments for automation is that costs of production are reduced because there are fewer human costs, be it training, the reliability of the practitioner or the inefficiency of the practitioner. Designs that seek to optimise managerial values can have the effect, intentional or otherwise, of privileging the managerial objectives and in doing so constraining the humanistic design. The consequences of this are that the practitioners’ degrees of freedom are reduced, and buffers and margins are impacted in ways that limit the ability of the system to maintain and sustain adaptability when confronted with uncertainty and surprise events, thereby making the system less effective. Additionally, increasing the distance between the practitioner and the system reduces the practitioner’s ability to intervene in case of unexpected events.

Work changes. When work changes there are consequences for the practitioner’s ability to create strategies that can exploit system characteristics of agility and flexibility, in other words adaptive capacity. Boy (2020) refers to this as a form of smart integration: designing for innovative complex systems that exploit the ability to understand increasing complexity. This means embracing complexity. What are we designing for?

A design that embraces complexity will adopt the opposite of the reductionist view, which seeks to reduce or eliminate the effects of complexity by eliminating or reducing the role of the human. Instead, such designs embrace and design for complexity by matching emerging system behaviours with creative, emergent, real-time human responses.

5 CONCLUSIONS

In this paper we argue that we need to move towards

designing a socio-cognitive system. This is proposed

as a way forward to reduce the distance between

practitioners and designers so that designs

incorporate joint activity that supports common

ground.

To make that possible, we must embrace

complexity, uncertainty and surprises rather than

trying to eliminate them. In doing so the role of the

human practitioner is recognised and sustained,

which permits more efficient and effective operation

in real-time. Furthermore, such an approach can lead

to maintaining job satisfaction, practitioner

involvement and the real-time learning and

adjustments of patterns of activity associated with

complexity, uncertainty and surprises.

One of the means to achieve a constructive

approach to the design of effective and meaningful

human-system integration is through new ways of

working together. These need to be institutionalised

and embedded by the Regulator. In the recent Boeing

episode, the manufacturer was doing the regulator’s job (Nicas et al., 2019).

A further area for consideration is that a coherent transition plan should be derived to identify the needs of management and the human practitioner in complex socio-cognitive systems. Another question is whether we are deceived by the optimistic predictions of costs saved by tools and methods of operation without the human practitioner.

REFERENCES

Bainbridge, L. (1983). Ironies of automation. Automatica 19, 775-779.

Baxter, G., & Sommerville, I. (2011). Socio-technical systems: from design methods to systems engineering. Interacting with Computers 23, 4-17.

Boy, G. (2020). Human-systems integration. CRC Press.

Bradshaw, J., Feltovich, P., & Johnson. (2011). Human-agent interaction. In G. Boy (Ed.), The handbook of human-machine interaction (pp. 283-303). Ashgate.

Cilliers, P. (2000). Complexity and postmodernism: understanding complex systems. Routledge.

Eisenberg, D., Seager, T., & Alderson, D. L. (2019). Rethinking resilience analytics. Risk Analysis. DOI: 10.1111/risa.13328

Flach, J. (2012). Complexity: learning to muddle through. Cogn Tech Work 14, 187–197. DOI: 10.1007/s10111-011-0201-8

Heylighen, F., Cilliers, P., & Gershenson. (2007). Complexity and Philosophy. In J. Bogg & R. Geyer (Eds.), Complexity, Science and Society (1st Edition). CRC Press.

Hollnagel, E., & Woods, D. (2005). Joint Cognitive Systems: Foundations of Cognitive Systems Engineering. CRC Press, Taylor and Francis.

Inagaki, T. (2014). Human-machine co-agency for collaborative control. In Yoshiyuki Sankai, Kenji Suzuki & Yasuhisa Hasegawa (Eds.), Cybernetics: fusion of human, machine and information system. Springer.

Klein, G. (2022). The war on expertise. Novellus. https://novellus.solutions/insights/podcast/war-on-expertise-how-to-prepare-and-how-to-win/

Klein, G., Feltovich, P. J., Bradshaw, J. M., & Woods, D. D. (2005). Common ground and coordination in joint activity. In W. B. Rouse & K. R. Boff (Eds.), Organisational Simulation. Wiley.

Lanir, S. (1983). Fundamental surprises. Tel Aviv: Centre for strategic studies.

McDaniel, P. R. Jr., & Driebe, D. J. (2006). Uncertainty and Surprise. Complex Systems: Questions on Working with the Unexpected. Springer Publishing.

Nicas, J., Kitroeff, N., Gelles, D., & Glanz, J. (2019). Boeing built deadly assumptions into 737 Max, blind to a late design change [Internet]. New York: The New York Times; 2019 Jun 1 [cited 2019 Oct 17]. Available from https://www.nytimes.com/2019/06/01/business/boeing-737-max-crash.html

Woods, D. D. (2010). Fundamental surprise. In D. D. Woods, S. W. A. Dekker, R. Cook, L. Johannesen & N. Sarter (Eds.), Behind human error (2nd Edition). CRC Press.
